It has taken a lot of dreaming and even more engineering, but the many promises of silicon photonics are starting to make their way into real products in the datacenter. It doesn’t hurt that high-bandwidth and low-latency interconnects are a necessity for GenAI platforms and that the world is willing to spend enormous amounts of money to fulfill the promises of superintelligence and its agentic AI killer app.

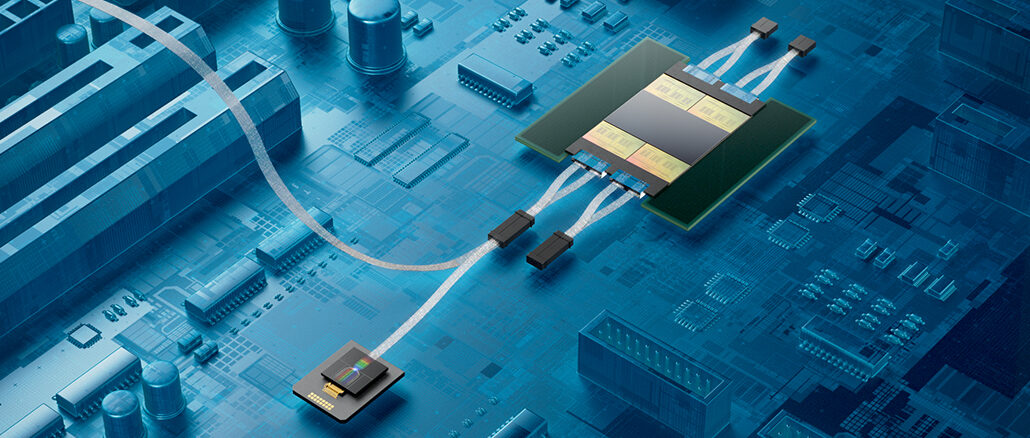

Scale-out networks have been using pluggable optical transceivers for decades because of the need for network reach that is larger than can be built using electrical wires. And now, with co-packaged optics, the optical interconnect is being brought down into the package for datacenter switches and, in the future, for compute engines and even the memory subsystems that feed into them.

It is not a question of if, but rather of when co-packaged optics, or CPO, will be manufacturable at the high volumes and relatively low costs that are demanded in AI clusters. CPO will get traction first, it seems, in scale-out networks, just like optical transceivers did, because the volumes are relatively low and can be used as a means of learning all of the tricks of volume manufacturing that will be needed before CPO can be used to stitch compute engines and memories together in rackscale systems and across rows and rows of gear. (And deeper down into the compute engine socket, optical interposers may be used to stitch together components.)

Here is the thing: Optics alone does not increase the bandwidth of links, but it does allow for a much larger number of links to be brought to bear, and that aggregate bandwidth will be absolutely necessary for interconnects between XPUs and eventually their memories. The challenge is an order of magnitude more complexity, and then very quickly another order of magnitude will have to come. This is what will be required to scale from tens of thousands of XPUs to hundreds of thousands to millions in a single AI system, which is a requirement for training models in the coming years.

How can the industry manufacture the necessary components, at scale and without breaking the bank, to scale up compute and extend memory for these systems before the end of the decade? This is the question, and we will get some answers from experts in a webinar on November 5 from 9 am to 10 am Pacific Standard Time. Our panelists include:

- Erez Shaizaf, chief technology officer at Alchip

- Adit Narasimha, vice president and general manager of emerging technology at Astera Labs

- Vladimir Stojanovic, chief technology officer and co-founder at Ayar Labs

Join moderator Timothy Prickett Morgan from The Next Platform along with these industry experts to discuss how CPO will be used to weave future AI systems together. You can register for the webinar at this link.
