SPONSORED In our first article in this short series, we explored SambaNova Systems’ thesis that future advances in AI require a new architecture to handle new and highly demanding workloads. In this article, we explore the company’s further thesis that the same architecture applies more broadly: to deployment scenarios where more traditional but still highly demanding workloads, such as those in high performance computing (HPC), are being augmented with machine learning to improve the application’s overall results.

This is SambaNova Systems’ ambition. The software-defined AI hardware startup has invented a new computing architecture called ‘Reconfigurable Dataflow Architecture’ (RDA), which is designed to accelerate machine learning and, more broadly, all types of dataflow computation problems.

Reconfigurable Dataflow derives its name from two features: the architecture is designed around the flow of data through dataflow graphs, rather than around the instruction flow of traditional computing approaches, and it can be reconfigured to execute any dataflow graph. SambaNova, which recently announced the availability of DataScale, a platform built around RDA, dubs this approach “software 2.0”.

“If you think about conventional software development, it’s focused on algorithms, and instruction flow. So if you have a problem, you find some domain expert who knows about that area, and they write a lot of C code, and that requires you to decompose the problem, come up with algorithms for each of these different pieces, and then compose those algorithms into a system,” says Kunle Olukotun, co-founder and Chief Technologist at SambaNova.

“Contrast that with software 2.0 which is data focused and dataflow focused, where the developer curates the data, comes up with training data, and that data is used to train models, and the training process is an optimization process that gives you a network. And then the network can be used to solve your problem,” he explains.

Houston, we have a dataflow problem

This process is familiar to anyone who knows how AI and machine learning operate, but SambaNova argues that the architecture it has developed for accelerating these dataflow graphs also applies to problems beyond machine learning, including many HPC applications. That is because many HPC applications can likewise be expressed as dataflow problems and, like machine learning, involve very large datasets.

However, traditional compute architectures are not optimized for these use cases. CPUs and GPUs both read data and weights from memory, perform their calculations, and write the results back to memory. That round trip is repeated for every stage in the dataflow graph, so huge amounts of memory bandwidth are needed just to keep moving data back and forth.
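To make the bandwidth argument concrete, here is a back-of-the-envelope sketch. It assumes a simple chain of stages whose activations are all the same size, with each stage's weights read once and small enough to stay on-chip; the function names and byte counts are illustrative assumptions, not SambaNova figures. It compares off-chip traffic when every stage round-trips through memory with traffic when intermediate results stream directly from one stage to the next.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    weight_bytes: int  # parameters this stage must read from memory


def kernel_by_kernel_traffic(stages, activation_bytes):
    """Off-chip bytes when each stage reads its input and weights from
    memory and writes its output back before the next stage starts."""
    total = 0
    for stage in stages:
        total += activation_bytes    # read input activations
        total += stage.weight_bytes  # read weights
        total += activation_bytes    # write output activations
    return total


def streamed_dataflow_traffic(stages, activation_bytes):
    """Off-chip bytes when intermediate results stream between on-chip
    units: only the first input, the weights and the final output touch
    memory (assumes every stage's weights fit on-chip)."""
    weights = sum(stage.weight_bytes for stage in stages)
    return activation_bytes + weights + activation_bytes


stages = [Stage(f"layer{i}", weight_bytes=500_000) for i in range(4)]
print(kernel_by_kernel_traffic(stages, 1_000_000))   # 10000000
print(streamed_dataflow_traffic(stages, 1_000_000))  # 4000000
```

With four stages, 6 MB of the 10 MB moved in the first case is intermediate data being written out and read back in; under these assumptions that overhead grows with every stage added to the graph.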

So, if you have a dataflow problem, you need a system designed around dataflow to solve it, a system built from the ground up as an integrated software and hardware platform. This is where SambaNova’s Reconfigurable Dataflow Architecture comes in.

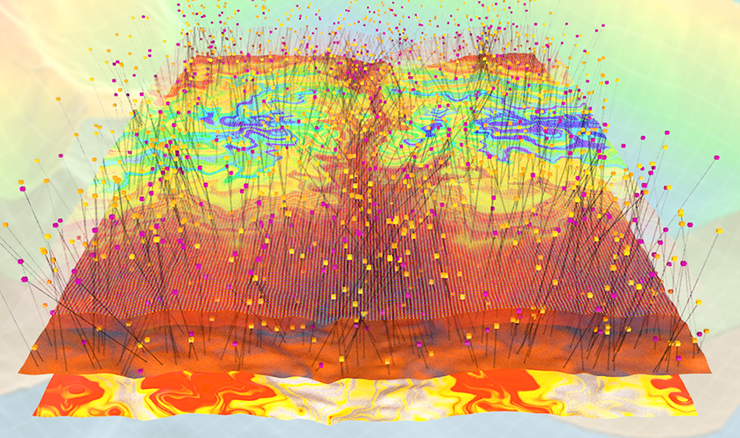

“Reconfigurable Dataflow is a ground-up re-imagining of how to do compute, focusing on what machine learning algorithms need,” Olukotun says. “It is a sea of computation and memory closely coupled together by a programmable network. And the key to that network is that you can program the dataflow between the different compute and memory elements in a way that matches the needs of the application that you’re trying to run.”

Cardinal Rules

This can be seen in the Cardinal SN10, the processor chip designed by the company, which calls it a Reconfigurable Dataflow Unit (RDU) to distinguish it from a CPU or GPU. The Cardinal SN10 consists of a grid of two kinds of configurable elements, pattern compute units (PCUs) and pattern memory units (PMUs), tiled across the chip and linked together by a flexible on-chip communication fabric.

To implement an algorithm or workload, the functional components of the algorithm are mapped onto the compute and memory units, and the dataflow between them is implemented by configuring the communication fabric. As a result, the flow of data in the algorithm or neural network is mirrored directly in the configuration of the chip elements.
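The mapping step can be pictured with a toy placer. Everything here is hypothetical: the checkerboard layout of PCUs and PMUs, the greedy first-free-tile placement, and the hop-count model of the fabric are assumptions for illustration, not how SambaNova's compiler works. The point is only that compute nodes land on compute units, buffers land on memory units, and each edge of the graph becomes a configured route.

```python
from itertools import product


def make_grid(rows, cols):
    """Hypothetical layout: PCUs and PMUs alternate in a checkerboard."""
    return {(r, c): "PCU" if (r + c) % 2 == 0 else "PMU"
            for r, c in product(range(rows), range(cols))}


def place(nodes, grid):
    """Greedily give each (node, kind) pair the first free tile of that kind."""
    free = dict(grid)
    placement = {}
    for node, kind in nodes:
        tile = next((t for t in sorted(free) if free[t] == kind), None)
        if tile is None:
            raise ValueError(f"no free {kind} left for {node}")
        placement[node] = tile
        del free[tile]
    return placement


def configure_fabric(edges, placement):
    """Turn each dataflow edge into a route, costed as Manhattan hops on a mesh."""
    routes = []
    for src, dst in edges:
        (r1, c1), (r2, c2) = placement[src], placement[dst]
        routes.append((src, dst, abs(r1 - r2) + abs(c1 - c2)))
    return routes


# One layer: two input buffers feed a matmul, then a ReLU, then an output buffer.
nodes = [("x_buf", "PMU"), ("w_buf", "PMU"), ("matmul", "PCU"),
         ("relu", "PCU"), ("out_buf", "PMU")]
edges = [("x_buf", "matmul"), ("w_buf", "matmul"),
         ("matmul", "relu"), ("relu", "out_buf")]

placement = place(nodes, make_grid(2, 3))
for route in configure_fabric(edges, placement):
    print(route)
```

A greedy placer like this ignores locality entirely; a production compiler would presumably search for placements that shorten the busiest routes.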

SambaNova’s on-chip elements should not be compared with CPU or GPU cores, said Marshall Choy, the company’s vice president of product. “You should think of this chip as a tiled architecture, with reconfigurable SIMD pipelines, but I think the more interesting piece is actually the memory units on the chip, where we’ve got an array of SRAM banks and address interleaving and partitioning, so a lot of where the magic in the chip happens is really on the memory side.”
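Choy's mention of address interleaving refers to a general technique for spreading consecutive addresses across memory banks so that they can be accessed in parallel. The sketch below shows plain low-order word interleaving; the bank count, word size and function names are assumptions, and SambaNova has not said that its PMUs use this exact scheme.

```python
def interleave(addr, num_banks, word_bytes=4):
    """Map a byte address to (bank, row) with low-order word interleaving:
    consecutive words go to consecutive banks."""
    word = addr // word_bytes
    return word % num_banks, word // num_banks


def bank_conflicts(addresses, num_banks, word_bytes=4):
    """Count accesses in one parallel batch that hit a bank already in use."""
    busy = set()
    conflicts = 0
    for addr in addresses:
        bank, _ = interleave(addr, num_banks, word_bytes)
        if bank in busy:
            conflicts += 1
        busy.add(bank)
    return conflicts


# Eight banks, 4-byte words.
print(bank_conflicts(range(0, 32, 4), 8))    # unit stride: 0 conflicts
print(bank_conflicts(range(0, 256, 32), 8))  # stride of 8 words: 7 conflicts
```

The second access pattern shows why partitioning matters too: when the stride is known in advance, a compiler can choose a data layout that spreads those accesses across banks instead of piling them onto one.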

There are several hundred of each type of element (PCUs and PMUs), and their combined memory adds up to “hundreds of megabytes” of on-chip memory, which Choy said compares favourably with the on-chip memory available on GPUs.

According to Choy, the result is a big reduction in off-chip bandwidth requirements. That keeps compute utilization very high, allowing the chip to reach teraflops of machine learning performance instead of wasting compute power shuffling data back and forth, as traditional architectures do.

The smallest DataScale system (SN10-8) takes up a quarter rack of space and comprises eight Cardinal SN10 RDUs with 3TB, 6TB, or 12TB of memory. Each RDU can handle multiple simultaneous dataflows, or the RDUs can operate seamlessly together to process large-scale models.

SambaFlow, the platform’s software, is equally important to the DataScale system. It integrates with popular machine learning frameworks such as PyTorch and TensorFlow, and it optimizes and maps each model’s dataflow graph onto the RDUs for execution.

“Taking the example of a PyTorch graph we have a graph analyser, that then pulls out the common parallel patterns out of that graph, and then through a series of intermediate representation layers, our data flow optimizer, compiler and assembler build up the runtime, and we execute that onto the chip,” Choy explains.
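The first step Choy describes, pulling parallel patterns out of a framework graph, can be illustrated with a toy analyser. In this sketch a plain list of op names stands in for a PyTorch graph, and both the op-to-pattern table and the rule of fusing consecutive elementwise maps are hypothetical; SambaFlow's actual pattern set and intermediate representations are not described in this article.

```python
# Hypothetical mapping from framework-level op names to parallel patterns.
PATTERN_OF = {
    "add": "map", "mul": "map", "relu": "map", "sigmoid": "map",
    "sum": "reduce", "max": "reduce",
    "matmul": "map_reduce",
    "embedding": "gather",
}


def extract_patterns(ops):
    """Label each op in a linear graph with its parallel pattern."""
    return [(op, PATTERN_OF[op]) for op in ops]


def fuse_maps(labeled):
    """Group runs of consecutive 'map' ops into one stage; other ops stay alone."""
    stages, run = [], []
    for op, pattern in labeled:
        if pattern == "map":
            run.append(op)
            continue
        if run:
            stages.append(("map", run))
            run = []
        stages.append((pattern, [op]))
    if run:
        stages.append(("map", run))
    return stages


ops = ["embedding", "matmul", "add", "relu", "sum"]
print(fuse_maps(extract_patterns(ops)))
```

Here `add` and `relu` collapse into a single map stage; in a dataflow setting, a fused run like this is the kind of stage that could be laid out as one on-chip pipeline so the intermediate result between the two ops never leaves the chip.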

The preparation work is handled by an x86 subsystem running Linux, but it can be bypassed using SambaNova’s RDU Direct technology, which enables a direct connection to the RDU modules.

Lawrence Livermore

RDU Direct is how the SambaNova DataScale platform has been deployed at one early customer, the Lawrence Livermore National Laboratory (LLNL). There, the Corona supercomputing cluster, which boasts in excess of 11 petaflops of peak performance, has been integrated with a SambaNova DataScale SN10-8R system.

LLNL researchers will use the platform for cognitive simulation approaches that combine high performance computing and AI. The SambaNova DataScale’s ability to run dozens of inference models at once while Corona performs scientific calculations will help in the quest to use machine learning to improve nuclear fusion research, LLNL said in a press statement. Researchers have already reported a fivefold improvement with the DataScale system over a comparable GPU running the same models. SambaNova said a single DataScale SN10-8R can train terabyte-sized models that would otherwise need eight racks’ worth of GPU-based Nvidia DGX A100 systems.

This convergence of HPC and AI supports SambaNova’s assertion that its architecture based on dataflow does not merely accelerate AI functions but represents the next generation of computing. (That said, the company does not expect this new breed of computing to replace CPU-based systems for more transaction-oriented applications.)

“It’s not just for the high-end problems of language translation and image recognition that this software 2.0 approach works, it also works for classical problems,” said Olukotun. “It turns out that many classical problems from data cleaning to networking to databases have a bunch of heuristics in them, and what you find is you are better off replacing those heuristics with models based on data because you get both higher accuracy and better performance.”

He cites the parallel patterns used to represent machine learning applications as an example, and says the same patterns can equally well represent the SQL operators used for data processing.
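Olukotun's point about SQL can be illustrated with a handful of generic parallel patterns. The functions below (`pmap`, `pfilter`, `preduce`, `pgroup_reduce`) are hypothetical names, written as plain sequential Python for clarity; they show that a machine learning kernel (a dot product) and a SQL query (a filtered GROUP BY) decompose into the same building blocks, not how SambaNova implements either.

```python
import operator
from collections import defaultdict
from functools import reduce


def pmap(f, xs):
    return [f(x) for x in xs]


def pfilter(pred, xs):
    return [x for x in xs if pred(x)]


def preduce(op, xs, init):
    return reduce(op, xs, init)


def pgroup_reduce(key, value, op, init, xs):
    """Reduce the values of each group separately (the GROUP BY pattern)."""
    groups = defaultdict(lambda: init)
    for x in xs:
        k = key(x)
        groups[k] = op(groups[k], value(x))
    return dict(groups)


# Machine learning: a dot product is a map (multiply) followed by a reduce (sum).
def dot(a, b):
    return preduce(operator.add, pmap(lambda p: p[0] * p[1], zip(a, b)), 0)


# SQL: SELECT region, SUM(amount) FROM sales WHERE amount > 10 GROUP BY region
def sales_by_region(sales):
    large = pfilter(lambda row: row["amount"] > 10, sales)
    return pgroup_reduce(lambda row: row["region"], lambda row: row["amount"],
                         operator.add, 0, large)


sales = [{"region": "east", "amount": 5}, {"region": "east", "amount": 20},
         {"region": "west", "amount": 15}, {"region": "west", "amount": 30}]
print(dot([1, 2, 3], [4, 5, 6]))  # 32
print(sales_by_region(sales))     # {'east': 20, 'west': 45}
```

The WHERE clause becomes a filter, the GROUP BY with SUM becomes a keyed reduce, and the dot product reuses the same map and reduce shapes; it is this shared vocabulary that would let one dataflow architecture serve both kinds of workload.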

Advancing AI for everyone

Meanwhile, SambaNova is keen to show that its technology is available to customers of all sizes and not just for high-end research labs or for huge companies.

For example, the company has introduced a Dataflow-as-a-Service offering that makes DataScale systems available through a monthly subscription. The service is managed by SambaNova and provides a risk-free way for organizations to jump-start their AI projects on an operating-expense (Opex) model.

AI may seem like an arcane topic to many, but machine learning is a significant and growing part of computing across a broad spectrum of everyday applications. Anything that can make AI more accessible and more efficient is vitally needed to drive future advances, and SambaNova argues its system-wide approach of a complete platform of software and hardware focused on dataflow is the answer.

In the third and final article in this series, we will discuss what state-of-the-art compute looks like today and explore the requirements for supporting the AI/ML and other applications that researchers and commercial enterprises will develop tomorrow.

Sponsored by SambaNova