The alliance between high performance computing and artificial intelligence is proving to be a fruitful one for researchers looking to accelerate scientific discovery in everything from climate prediction and genomics to particle physics and drug discovery. The impetus behind this is to use AI, and machine learning in particular, to augment the predictive capacity of numerical simulations.

The Department of Energy has been particularly active in integrating traditional HPC with AI, in no small part because it operates some of the most powerful supercomputers on the planet. Some of these machines, like the existing “Summit” system at Oak Ridge National Laboratory and the “Sierra” and “Lassen” systems at Lawrence Livermore National Laboratory, are heavily laden with GPUs, the compute engine of choice for training neural networks. And that’s encouraging researchers with access to those systems to explore the utility of AI in their particular areas of interest.

Lawrence Livermore has been pushing ahead with these technologies on a number of fronts and recently won an HPC Innovation Excellence Award from Hyperion Research for work that applied machine learning to a fusion simulation problem. Specifically, the LLNL team trained a neural network model to help understand the results of Inertial Confinement Fusion (ICF) simulations in order to predict the behavior of fusion implosions. They ran 60,000 simulations on the Trinity supercomputer at Los Alamos National Laboratory and fed the results into their training workflow.

Once trained, the model could act as a surrogate for the simulations, enabling rapid evaluation of parameters. Exercising the entire parameter space with simulations alone would have required on the order of five million simulations, or about three billion CPU hours. The machine learning surrogate covered the same space in a fraction of that time.
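
As a rough check on those numbers: three billion CPU hours over five million runs works out to about 600 CPU hours per simulation, so the 60,000 training runs would have cost on the order of 36 million CPU hours if every run cost the same (a simplifying assumption, not a figure from the team). The surrogate idea itself can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: `toy_simulation` is a hypothetical stand-in for an expensive physics code, and the surrogate is a simple k-nearest-neighbor regressor rather than the neural network LLNL actually trained.

```python
import heapq
import math
import random

# Figures quoted in the article.
FULL_SWEEP_SIMULATIONS = 5_000_000
FULL_SWEEP_CPU_HOURS = 3_000_000_000
TRAINING_SIMULATIONS = 60_000

# Implied average cost per run, assuming (our assumption) every run costs the same.
CPU_HOURS_PER_SIM = FULL_SWEEP_CPU_HOURS / FULL_SWEEP_SIMULATIONS  # 600.0
TRAINING_CPU_HOURS = TRAINING_SIMULATIONS * CPU_HOURS_PER_SIM      # 36,000,000.0


def toy_simulation(x, y):
    """Hypothetical stand-in for an expensive physics code: one smooth peak."""
    return math.exp(-((x - 0.3) ** 2 + (y - 0.7) ** 2) / 0.05)


def fit_knn_surrogate(samples, k=5):
    """Return a cheap predictor that averages the outputs of the k nearest samples."""
    def predict(x, y):
        nearest = heapq.nsmallest(
            k, samples, key=lambda s: (s[0] - x) ** 2 + (s[1] - y) ** 2)
        return sum(s[2] for s in nearest) / len(nearest)
    return predict


rng = random.Random(0)
# "Run" a modest number of expensive simulations at random parameter settings.
points = [(rng.random(), rng.random()) for _ in range(400)]
training = [(x, y, toy_simulation(x, y)) for x, y in points]
surrogate = fit_knn_surrogate(training)

# Sweep a dense 50 x 50 grid with the surrogate instead of the simulator.
grid = [(i / 49, j / 49) for i in range(50) for j in range(50)]
best = max(grid, key=lambda p: surrogate(*p))
```

Once fitted, each surrogate call is just a nearest-neighbor lookup over stored samples, so sweeping the 2,500-point grid costs far less than running 2,500 simulations; a neural network surrogate replaces that lookup with a forward pass.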

The training work was performed on LLNL’s Sierra supercomputer, taking advantage of its thousands of Nvidia V100 GPUs and their machine-learning-friendly Tensor Cores. To achieve the desired level of scalability, the team used LBANN (Livermore Big Artificial Neural Network), a toolkit that supports strong scaling for neural net training. LBANN is also able to take advantage of the tightly coupled GPUs, high performance networking, and high-bandwidth parallel file systems that are available in systems like Sierra.
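
LBANN's internals are not covered here, but the basic idea behind spreading training across many GPUs can be illustrated with plain data parallelism: one fixed global batch (the strong-scaling case) is split across workers, each computes a gradient on its shard, and an allreduce combines them. The sketch below is hypothetical and is not LBANN's API. The helper names are invented for illustration, `allreduce_mean` simply sums in-process where a real system would use MPI or NCCL over the interconnect, and the model is a two-parameter linear fit.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (input x, target y)


def local_gradient(w: float, b: float, shard: List[Sample]) -> Tuple[float, float]:
    """Summed squared-error gradient for y ~ w*x + b over one worker's shard."""
    gw = gb = 0.0
    for x, y in shard:
        err = (w * x + b) - y
        gw += 2 * err * x
        gb += 2 * err
    return gw, gb


def allreduce_mean(partials: List[Tuple[float, float]], total: int) -> Tuple[float, float]:
    """In-process stand-in for an allreduce: sum worker gradients, divide by batch size."""
    return (sum(p[0] for p in partials) / total,
            sum(p[1] for p in partials) / total)


def data_parallel_step(w, b, batch, num_workers, lr=0.01):
    """Split one fixed global batch across workers (strong scaling) and take an SGD step."""
    shards = [batch[i::num_workers] for i in range(num_workers)]
    partials = [local_gradient(w, b, s) for s in shards]  # concurrent on real GPUs
    gw, gb = allreduce_mean(partials, len(batch))
    return w - lr * gw, b - lr * gb


batch = [(x / 10, 3.0 * (x / 10) + 1.0) for x in range(64)]
one = data_parallel_step(0.0, 0.0, batch, num_workers=1)
eight = data_parallel_step(0.0, 0.0, batch, num_workers=8)

# Train with four workers until the fit recovers y = 3x + 1.
w, b = 0.0, 0.0
for _ in range(2000):
    w, b = data_parallel_step(w, b, batch, num_workers=4)
```

Because the shards' summed gradients equal the full-batch gradient, splitting the same batch across one or eight workers yields the same update up to floating-point rounding. Adding workers only shrinks the work per worker, which is why the network that carries the allreduce matters so much at scale.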

“We had Sierra handed to us just at the moment we needed it because the GPUs were there principally for precision simulation work,” explained Brian Spears, the principal investigator for the project. “But we were able to take advantage of it for the training side.”

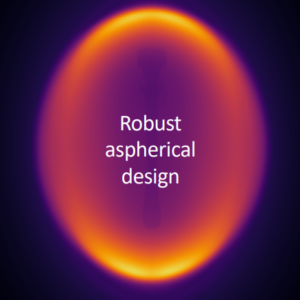

As a result, the team was able to uncover a small number of cases (0.25 percent of the simulations) that exhibited high-yield fusion implosions. According to Spears, the geometry of the implosions was completely non-intuitive. Instead of the expected spherical shape, the high-yield implosions were ovoid in structure. “As a biased designer, I would have said this was not a good place to go looking,” Spears explained to The Next Platform.
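
Once a surrogate is cheap to evaluate, one simple way to pull out a rare subset like this is to score a large batch of candidate designs and keep the top fraction. This is an illustrative sketch, not necessarily how the LLNL team screened its results: `toy_predicted_yield` is a hypothetical scoring function with no connection to ICF physics, and the 0.25 percent cut-off simply mirrors the figure quoted above.

```python
import heapq
import random


def top_fraction(candidates, predict, fraction=0.0025):
    """Return the best-scoring `fraction` of candidates, ranked by a cheap predictor."""
    n = max(1, round(len(candidates) * fraction))
    return heapq.nlargest(n, candidates, key=predict)


def toy_predicted_yield(design):
    """Hypothetical surrogate output for a three-parameter design."""
    a, b, c = design
    return (a - 0.5) * (b - 0.2) + 0.1 * c


rng = random.Random(42)
designs = [(rng.random(), rng.random(), rng.random()) for _ in range(100_000)]
shortlist = top_fraction(designs, toy_predicted_yield)  # 250 of 100,000 designs
```

The shortlisted designs are the ones worth re-running with the full physics code, which is the confirmation step described next.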

The team subsequently ran more detailed physics simulations on the ovoid target, which confirmed the predictions of the surrogate. The caveat here, said Spears, is that all these results are based on simulations, so real-world experiments could invalidate these predictions. That could happen for several reasons: the underlying theories may be incomplete, critical data may be missing, or the model may not be properly tuned to the underlying physics.

Ideally, you’d like to confirm the results with empirical observations, but that comes with its own problems, the main one being that there isn’t enough of this data around. That’s because running experiments is expensive and time-consuming, which is why people turned to numerical simulations in the first place.

To overcome some of these limitations, Spears’ team is developing ways to apply empirical data to previously trained models. They are doing this with transfer learning: they train with simulated data, as before, and then retrain with the sparser experimental data. The hope is that the latter will strip away any biases present in the numerical model.

Obviously, this is something of a balancing act, since the researchers have to decide how much weight to give the much smaller amounts of data from the experiments. At this point, Spears thinks that’s still an open question. Nevertheless, he believes mixing the two kinds of data will provide the best of both worlds. “It gets the lay of the land from the simulation, but it gets the much more focused and accurate prediction from the empirical observations,” he said.
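
One common way to express that balance, offered here as an illustration rather than the LLNL team's method, is to fine-tune the simulation-trained parameters on experimental data while penalizing how far they move: minimize Σᵢ (w·xᵢ + b − yᵢ)² + λ[(w − w_sim)² + (b − b_sim)²]. A small λ trusts the experiments; a large λ trusts the simulation. The sketch uses a hypothetical two-parameter linear model, which can be solved in closed form, and made-up data; a neural network would be fine-tuned iteratively instead.

```python
def fit_linear(data, prior=(0.0, 0.0), lam=0.0):
    """Minimize sum((w*x + b - y)**2) + lam * ((w - w0)**2 + (b - b0)**2).

    lam = 0 is ordinary least squares; a large lam keeps (w, b) near the prior.
    """
    w0, b0 = prior
    n = len(data)
    sx = sum(x for x, _ in data)
    sxx = sum(x * x for x, _ in data)
    sy = sum(y for _, y in data)
    sxy = sum(x * y for x, y in data)
    # Normal equations: [[sxx + lam, sx], [sx, n + lam]] @ [w, b] = [sxy + lam*w0, sy + lam*b0]
    a11, a12, a22 = sxx + lam, sx, n + lam
    r1, r2 = sxy + lam * w0, sy + lam * b0
    det = a11 * a22 - a12 * a12
    return (r1 * a22 - a12 * r2) / det, (a11 * r2 - a12 * r1) / det


# Hypothetical data: plentiful but biased "simulation" output ...
simulated = [(i / 20, 2.0 * (i / 20) + 0.5) for i in range(200)]
# ... and a handful of "experimental" points from a slightly different response.
experimental = [(x, 2.4 * x + 0.3) for x in (1.0, 2.0, 3.0, 4.0)]

pretrained = fit_linear(simulated)  # step 1: learn the lay of the land
fine_tuned = {lam: fit_linear(experimental, prior=pretrained, lam=lam)
              for lam in (0.0, 10.0, 1e6)}  # step 2: vary trust in the simulation
```

With λ = 0 the fine-tuned model passes through the four experimental points and ignores the simulation; with λ = 10⁶ it barely moves from the pretrained values. Picking a value in between is the open question Spears describes.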

Another area of active interest for his team is performing inference on the trained models much more frequently than they do now. In general, machine learning in HPC is skewed much more heavily toward training than inference: a lot of time and energy goes into training the model, but inference is only run when a particular answer is needed. That is the opposite of what happens in the hyperscale space.

“But we’re turning that paradigm around a bit,” Spears said, “and recognize that often what we want to do is understand how these models behave, understand their sensitivities, and especially their uncertainties, and their inability to capture the underlying simulation behavior, or how they are different from experimental data.”
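
Spears does not spell out the team's techniques, but a standard way to study a model's uncertainty and sensitivity shows why inference volume grows: instead of one prediction per question, you query an ensemble of models, often at many points. The sketch below is a hypothetical illustration using a bootstrap ensemble of linear fits on made-up data. The spread of the ensemble's predictions serves as an uncertainty estimate, and a finite difference of the ensemble mean serves as a sensitivity.

```python
import random
import statistics


def fit_line(points):
    """Ordinary least squares for y ~ w*x + b."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    sxx = sum((x - mx) ** 2 for x, _ in points)
    sxy = sum((x - mx) * (y - my) for x, y in points)
    w = sxy / sxx
    return w, my - w * mx


def train_ensemble(points, members=20, seed=0):
    """Bootstrap ensemble: each member is fit to a resample of the data."""
    rng = random.Random(seed)
    return [fit_line(rng.choices(points, k=len(points))) for _ in range(members)]


def predict_with_uncertainty(ensemble, x):
    """One inference per member; return the mean prediction and its spread."""
    preds = [w * x + b for w, b in ensemble]
    return statistics.mean(preds), statistics.stdev(preds)


def sensitivity(ensemble, x, h=1e-3):
    """Central finite difference of the ensemble-mean prediction with respect to x."""
    hi, _ = predict_with_uncertainty(ensemble, x + h)
    lo, _ = predict_with_uncertainty(ensemble, x - h)
    return (hi - lo) / (2 * h)


rng = random.Random(1)
xs = [rng.random() for _ in range(30)]  # training inputs lie in [0, 1)
data = [(x, 2.0 * x + 1.0 + rng.gauss(0, 0.1)) for x in xs]
ensemble = train_ensemble(data)

inside_mean, inside_std = predict_with_uncertainty(ensemble, 0.5)
outside_mean, outside_std = predict_with_uncertainty(ensemble, 5.0)
slope = sensitivity(ensemble, 0.5)
```

The spread is small near the training data and much larger at x = 5, the kind of signal that flags where a surrogate cannot be trusted. Each such estimate costs (ensemble members) × (query points) inferences, which is how inference comes to dominate the workload.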

To do that, he and his team have inserted inference software deep into the workflow, where it will be invoked much more frequently. And that is leading them to consider using specialized inference accelerators. Sierra’s V100 GPUs can do inferencing, but the V100 is designed more as a training accelerator and, more generally, as an HPC accelerator.

Of course, Nvidia might argue that the V100 can do inference as well as or better than anything on the market right now. But the emergence of purpose-built inference chips, such as Habana’s and Intel’s upcoming Nervana Neural Network Processor for Inference (NNP-I), not to mention Nvidia’s own “Turing” Tesla T4 GPU, suggests that inference specialization is worth pursuing for large-scale use cases. Both IBM and Intel are also working on neuromorphic processors, which might represent the ultimate in inference specialization.

Spears said they are reaching out to a number of vendors with the idea of hooking up some of these inference accelerators to the lab’s supercomputers. Ultimately, he hopes to have a machine that can interleave traditional simulations with training and inference at scale. But will that be a disaggregated system with specialized nodes devoted to each area or a more homogeneous platform composed of heterogeneous components? “It’s not super-clear to me what the answer is to that question,” said Spears. “But I don’t think it’s the machine we have right now.”
