Many of the technologists at AMD who are driving the Epyc CPU and Instinct GPU roadmaps, as well as the $35 billion acquisition of FPGA maker Xilinx, have long and deep experience in the high performance computing market, which is characterized by the old school definition of simulation and modeling workloads running on federated or clustered systems. So it is no surprise that when AMD plotted its course back into the datacenter, it had in mind the traditional HPC customers who flocked to its Opteron processors in droves in the mid-2000s.

AMD president and chief executive officer Lisa Su hails from IBM Microelectronics and notably headed up the “Cell” hybrid CPU-GPU processor that was used in Sony game consoles as well as in the $100 million “Roadrunner” petaflops-busting supercomputer, which set the stage for hybrid supercomputing when it was installed at Los Alamos National Laboratory in 2008. (Significantly, Roadrunner paired dual-core Opteron processors with the Cell accelerators, which themselves combined a single Power core with eight vector processing units that could do math or process graphics.) Mark Papermaster, now AMD’s chief technology officer, led the design of several generations of Power processors at IBM, including many that were employed in federated RISC/Unix systems that predated Roadrunner. Interestingly, Brad McCredie, who took over processor design at Big Blue after Papermaster left and who helped found the OpenPower consortium, joined AMD in June 2019 to take over development of its GPU platforms.

https://www.youtube.com/watch?v=EoS5xCiImCc&feature=youtu.be

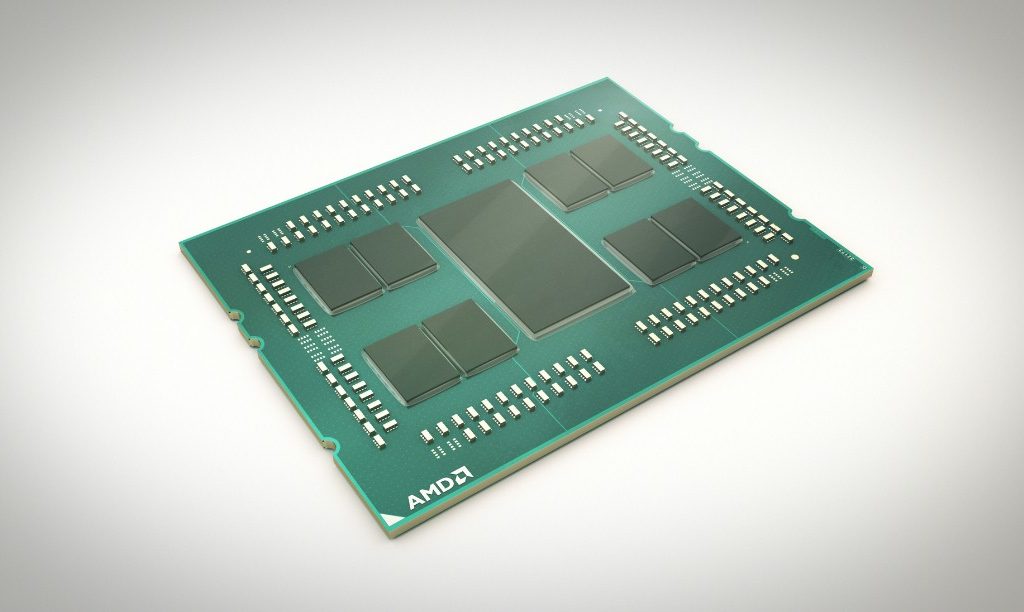

As part of our ongoing coverage of the SC20 supercomputing conference, we sat down with Mark Papermaster, chief technology officer at AMD, to talk about the hard work AMD has done to win mindshare for its CPUs and GPUs in the much broader HPC market, which now includes traditional HPC and rising machine learning workloads as well as various kinds of data analytics, both on premises and in the public cloud. AMD has much to be proud of, pulling itself to parity with or ahead of Intel in the X86 server chip space with the second generation “Rome” Epyc processors and getting a lot closer to Nvidia in GPU acceleration with the shiny new “Arcturus” server GPU and the Instinct MI100 GPU accelerator that uses it, which debuted during the SC20 virtual event.

This particular interview was recorded from my office (that’s the real one, including the cherry cradle that my Dad made for his grandchildren and that will be used for many more generations to come, my crazy ivy that is more than three decades old, and a portion of my library) and Papermaster’s office (really at AMD’s campus in Austin, but that is not his view).

We started off with the obvious question: is the return of AMD just a reprise of the Opteron from almost two decades ago, or can AMD’s compute business just keep growing along with the appetite for compute and also take some market share away from rival platforms?

“One part is really the same and one part is really different,” explains Papermaster. “What do I mean by one part is the same? AMD is built on a history of innovation, and that part of AMD’s culture has thrived and is stronger than ever. One part of the AMD culture that you see has really improved, and that is our execution. We are executing on our roadmap exactly as we called it, and we re-engineered engineering, how we go about creating each of the components across the hardware, across the software, how we put it together, and deliver what our customers are really looking for. That is really key in all markets, but particularly in HPC. HPC typically plans out their purchases years in advance, and they have to have confidence in that delivery and confidence in the performance.”

One of the keys to AMD’s success in winning the deals to supply the compute engines for the future “Frontier” system at Oak Ridge National Laboratory and the future “El Capitan” system at Lawrence Livermore National Laboratory, weighing in at an estimated 1.5 exaflops and 2.1 exaflops respectively, was a close relationship between AMD’s engineering teams and the US Department of Energy, which funded pathfinding research that got woven into the CPU and GPU roadmaps.

AMD is starting to ship the third generation “Milan” Epyc 7003 processors for revenue this quarter and plans to do a formal launch for everyone who is not one of the hyperscalers or big public cloud builders in the first quarter of 2021. Papermaster gave us some hints about what the server chips sporting the “Zen 3” cores might have in store and the advantages they might have over current and future Xeon SP processors from Intel. The Zen 3 core has improved branch prediction and prefetching and doubles the L3 cache that each core can directly access, and across a variety of integer workloads it is delivering an average 19 percent increase in instructions per clock (IPC) compared to the Zen 2 core used in the current Rome processors. This is a very significant bump in raw integer performance, considering that the typical Xeon E5 and Xeon SP have offered somewhere between a 5 percent and 10 percent increase in IPC from generation to generation, and not really much improvement at all between “Skylake” and “Cascade Lake” and “Cooper Lake” over the past several years.

The question we had was whether this IPC gravy train can keep going, or whether it will run out. AMD delivered a 50 percent IPC improvement with the jump from the “Piledriver” Opteron core to the Zen 1 core (which stands to reason given how long AMD was really out of the server market), then a double-digit gain from Zen 1 to Zen 2, and now 19 percent from Zen 2 to Zen 3. We wondered if diminishing returns are at work here, as we have seen with processor clocks once Dennard scaling ended and then with the Moore’s Law limits on shrinking transistors and making them exponentially less costly to make.

“It does have more to come,” Papermaster says, in reference to IPC improvements. “There really is no lack of innovation that the team has to really continue to drive performance. But what you are seeing is that each successive node of semiconductor is getting more expensive, so you will see the continuation of these high performance cores and you are also seeing a bifurcation of how people optimize. People will put CPUs together with other kinds of accelerators like GPUs and FPGAs to drive performance forward not based on CPUs alone, but thinking about system design. And that is what we did with our supercomputer wins with the US Department of Energy and the EuroHPC win with the Lumi system.”

We talked about the bifurcation of the AMD GPU line, with the RDNA architecture for clients and the CDNA architecture for servers. This is the first time AMD has split its GPU line this way, and it mimics to a certain extent (as Intel is also doing) the different GPU tiers that Nvidia has created over the past several years. There are engines that do compute in many forms (and a lot of it) and engines that do graphics as well as compute (and less of it). We also talked about the new ROCm 4.0 software stack, which has some interesting new capabilities, and what might be in store for FPGAs as they are woven more tightly into the AMD fold. To hear about that, you have to listen to the full interview above. Enjoy.