After three relatively short years of explosive growth thanks to the GenAI boom, AI is driving half of systems revenues worldwide already. And so it stands to reason that the custom ASIC design business and the AI-related networking businesses will eventually come to dominate the semiconductor businesses of Broadcom.

In the second quarter of fiscal 2025, which ended in early May, AI-related semiconductors accounted for more than 50 percent of Broadcom’s chip sales for the second quarter in a row, and it is now reasonable to wonder when that share might break above 75 percent given the focus on high-end networking and the increasing use of Ethernet switching for AI workloads – soon not just for the scale out network linking cluster nodes but also for the scale up network linking XPUs.

To be specific, in the May quarter, Broadcom had just a smidgen over $15 billion in sales, up 20.2 percent year on year. Operating income nearly doubled to $5.83 billion, thanks in large part to cost-cutting at its VMware software division and the uptake of its rejiggered and simplified VCF product line by more than 8,700 of its 10,000 largest VMware customers. The Broadcom Infrastructure Software group, which includes VMware, CA, and Symantec, among other product lines, is smaller than its Semiconductor Solutions chip business, but it has thrown off 76 percent operating profit margins over the past two quarters compared to 57 percent over the same period for the chip biz, which is roughly a quarter again as large.

That legacy software business, which Broadcom has built up over the past few decades, takes some of the heat off of the chip business, which is always under intense pressure. And by collaborating with the hyperscalers and cloud builders in making their own host CPUs and AI XPUs, Broadcom can benefit from some of the risk spreading and cost savings these tech titans are after as they create and install compute engines of their own design without having to take on Nvidia, AMD, and Intel directly.

It is hard to imagine someone running Broadcom better than Hock Tan, its chief executive officer, does. It may seem like a weird agglomeration of stuff technically, but financially, it is working very well. Net income, for example, rose by a factor of 2.3X to $4.97 billion, representing 33.1 percent of revenues. Net income as a share of sales is almost as high now as it was before Tan decided to eat VMware for $61 billion in May 2022 and rejigger it to throw off more profits.

VMware has been on the Broadcom books since Q1 F2024, which ended in February 2024, and we estimate that the server virtualization juggernaut has contributed $21.71 billion to the Broadcom top line and $14.59 billion to the middle line since then. That’s about half of the revenue and operating income of the Broadcom chip business, and at this rate VMware has paid back about a quarter of its $61 billion cost in operating profits already in a mere six quarters. In less than three years, VMware will have paid for its own deal inside of Broadcom.
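The payback arithmetic above can be checked with a few lines of Python. This is only a sketch that uses the estimates quoted in the text; the variable and function names are ours, and the six-quarter count assumes Q1 F2024 through Q2 F2025 inclusive.

```python
# Figures quoted in the text, in billions of US dollars (our model's estimates).
VMWARE_PRICE = 61.0         # acquisition cost
VMWARE_REVENUE = 21.71      # estimated cumulative revenue contribution
VMWARE_OP_INCOME = 14.59    # estimated cumulative operating income contribution
QUARTERS_ON_BOOKS = 6       # assumption: Q1 F2024 through Q2 F2025 inclusive


def payback_share(op_income: float, price: float) -> float:
    """Fraction of the purchase price covered by cumulative operating income."""
    return op_income / price


share = payback_share(VMWARE_OP_INCOME, VMWARE_PRICE)
margin = VMWARE_OP_INCOME / VMWARE_REVENUE
avg_per_quarter = VMWARE_OP_INCOME / QUARTERS_ON_BOOKS

print(f"Share of deal price paid back: {share:.1%}")
print(f"Cumulative operating margin:   {margin:.1%}")
print(f"Average operating income per quarter: ${avg_per_quarter:.2f} billion")
```

The first figure comes out just under a quarter of the deal price, which is the basis for the payback claim.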

In the May quarter, Broadcom’s Infrastructure Software group had $6.6 billion in sales, up 24.8 percent but down 1.6 percent sequentially. Operating income rose by 58.7 percent year on year to $5.03 billion, but was down 1.4 percent sequentially.

For The Next Platform, the Semiconductor Solutions group at Broadcom is more important, given the networking and storage chips Broadcom makes and sells to third parties. This chip business had $8.41 billion in sales, up 16.7 percent year on year and even up a tiny bit sequentially from Q1 F2025.
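As a quick sanity check, the two segment figures quoted for the May quarter can be added up and compared. The sketch below uses only the numbers given in the text, and the variable names are ours.

```python
# May quarter figures quoted in the text, in billions of US dollars.
software_sales = 6.60
software_op_income = 5.03
chip_sales = 8.41

total_sales = software_sales + chip_sales
software_margin = software_op_income / software_sales
chip_to_software = chip_sales / software_sales

print(f"Total sales: ${total_sales:.2f} billion")
print(f"Infrastructure Software operating margin: {software_margin:.1%}")
print(f"Chip sales relative to software sales: {chip_to_software:.2f}x")
```

The segment total lands a hair over $15 billion, matching the company-wide figure, and the software margin works out to roughly 76 percent.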

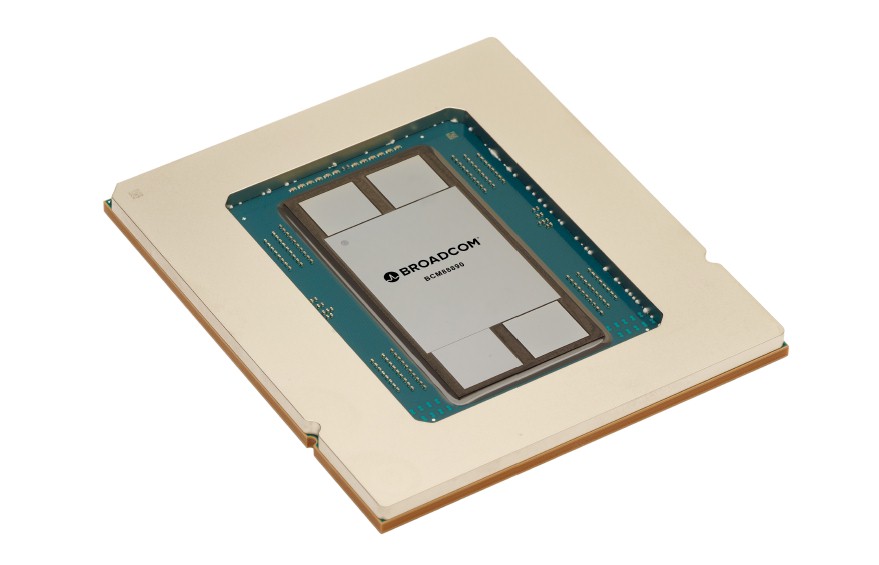

Within the chips business, our model shows that AI chip revenues at Broadcom – which include switch ASICs, XPU ASIC design, packaging, and shepherding for hyperscalers and cloud builders, as well as other chippery – rose by 46.7 percent to $4.42 billion, while other chip sales slipped by 4.8 percent to $3.99 billion. Broadcom has three custom compute engine customers (Google was the first, but now Meta Platforms is working on chips with the company and so is OpenAI) and four other prospects, which we think include Apple, ByteDance, and two others, who are looking to use Broadcom as an XPU shepherd.

Within AI sales, based on the comments made by Tan & Co on the call with Wall Street analysts going over the numbers, we believe that Broadcom booked $2.65 billion in AI compute sales, up 34.5 percent year on year and up 7.2 percent sequentially.

AI networking, which is just starting to take off with the Jericho-3AI and now Tomahawk 6 switch ASICs, rose by 67.7 percent to $1.77 billion in sales, up 7.1 percent sequentially.
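The AI and non-AI figures from our model can be cross-checked against the overall chip numbers. The following is a rough sketch using only values quoted in this article; the year-ago revenues are back-calculated from the stated growth rates, so they are implied estimates rather than reported figures.

```python
# May quarter figures from the text, in billions of US dollars.
ai_compute = 2.65
ai_networking = 1.77
non_ai_chips = 3.99
chip_sales = 8.41

# Year-on-year growth rates quoted in the text.
ai_growth = 0.467
non_ai_growth = -0.048

ai_total = ai_compute + ai_networking
ai_share = ai_total / chip_sales

# Back out implied year-ago revenue from the growth rates (an estimate).
ai_year_ago = ai_total / (1 + ai_growth)
non_ai_year_ago = non_ai_chips / (1 + non_ai_growth)
implied_chip_growth = chip_sales / (ai_year_ago + non_ai_year_ago) - 1

print(f"AI total: ${ai_total:.2f} billion")
print(f"AI share of chip sales: {ai_share:.1%}")
print(f"Implied overall chip growth: {implied_chip_growth:.1%}")
```

The pieces hang together: AI compute plus AI networking sums to the $4.42 billion AI total, AI is a bit more than half of chip sales, and the implied overall growth matches the 16.7 percent quoted for the chip business.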

As we previously reported, in fiscal 2023, Broadcom had $3.8 billion in AI chip sales, and this rose by 3.2X to $12.2 billion in fiscal 2024.

Broadcom is being a lot less specific about sales across its various groups, but here is what we think happened:

Networking sales rose by 33.7 percent to $5.12 billion in our model for Broadcom, while Server Storage Connectivity rose by 22.6 percent to $1.01 billion.

Looking ahead to the third fiscal quarter, which will end in early August, Broadcom expects sales of $15.8 billion, up around 21 percent. Chip sales will be around $9.1 billion, up 25 percent. If you read the tea leaves on the AI and non-AI forecasts in the semiconductor part of Broadcom, then AI compute should grow by around 42 percent to around $3.1 billion and AI networking should almost double to around $2.1 billion. That puts total AI revenue at $5.16 billion for fiscal Q3 and at maybe $19 billion, possibly a little higher, for the full fiscal 2025 year. And Tan says that Broadcom can grow this business by another 60 percent or so in fiscal 2026 to maybe $30 billion.
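The forecast arithmetic can be sketched the same way. All inputs below are the rounded figures from this article (the fiscal 2024 AI total of $12.2 billion is the one cited earlier), and the variable names are ours; because the component forecasts are rounded, their sum lands slightly above the $5.16 billion total.

```python
# Forecast figures from the text, in billions of US dollars.
ai_compute_q3 = 3.1
ai_networking_q3 = 2.1
ai_fy2024 = 12.2          # fiscal 2024 AI chip sales, as cited earlier
ai_fy2025_est = 19.0      # "maybe $19 billion" for fiscal 2025
fy2026_growth = 0.60      # "another 60 percent or so"

q3_component_sum = ai_compute_q3 + ai_networking_q3
fy2025_growth = ai_fy2025_est / ai_fy2024 - 1
ai_fy2026_est = ai_fy2025_est * (1 + fy2026_growth)

print(f"Sum of rounded Q3 AI components: ${q3_component_sum:.1f} billion")
print(f"Implied fiscal 2025 AI growth: {fy2025_growth:.1%}")
print(f"Implied fiscal 2026 AI revenue: ${ai_fy2026_est:.1f} billion")
```

On these rounded inputs, a 60 percent increase on $19 billion comes to a little over $30 billion, consistent with the figure attributed to Tan.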

And interestingly, the tech titans are seeking out their own AI training ASICs as well as their own AI inference ASICs – it is not all about training, and it is increasingly about inference. Tan explained it this way on the call with Wall Street analysts:

“I think there’s no differentiation between training and inference in using merchant accelerators versus custom accelerators. I think the whole premise behind going towards custom accelerators continues, which is it’s not a matter of cost alone. It is that as custom accelerators get used and get developed on a roadmap with any particular hyperscaler, there’s a learning curve on how they could optimize the way the algorithms on their large language models get written and tied to silicon.”

“And that ability to do so is a huge value add in creating algorithms that can drive their LLMs to higher and higher performance, much more than basically a segregation approach between hardware and the software. It’s that you literally combine end-to-end hardware and software as they take that journey. And it’s a journey. They don’t learn that in one year. Do it a few cycles, get better and better at it. And there lies the value — the fundamental value in creating your own hardware versus using a third-party merchant silicon that you are able to optimize your software to the hardware and eventually achieve way high performance than you otherwise could. And we see that happening.”

The question is this: When will this same phenomenon of custom trumping merchant chips happen to the switch ASICs that Broadcom so loves to sell?