The first thing to note about the rumored “Stargate” system that Microsoft is planning to build to support the computational needs of its large language model partner, OpenAI, is that the people doing the talking – reportedly OpenAI chief executive officer Sam Altman – are talking about a datacenter, not a supercomputer.

And that is because the datacenter – and perhaps multiple datacenters within a region with perhaps as many as 1 million XPU computational devices – will be the supercomputer.

The report on the Stargate effort, which was published by The Information as the Easter holiday was starting last Friday, is consistent with the scalability goal of 1 million interconnected endpoints that the Ultra Ethernet Consortium – of which Microsoft is a founding member – has set for future Ethernet networks.

The Stargate system – and we will continue to call it a system even if it is at datacenter or region scale – is, at this point, something on the back of an envelope and almost certainly something that Altman leaked to get tongues wagging. Altman seems unable to decide if OpenAI should be wholly dependent on Microsoft, and who can blame him? This is why there are also rumors about OpenAI designing its own chips for AI training and inference, and outrageous comments about Altman trying to spearhead a $7 trillion investment in chip manufacturing that he subsequently backed away from.

You can’t blame Altman for throwing around the big numbers he is staring down. Training AI models is enormously expensive, and running inference – which will mostly be the generation of tokens and is properly labeled as such at this point, as Nvidia co-founder and chief executive officer Jensen Huang recently pointed out in his keynote at the GTC 2024 conference – is unsustainably expensive. And that is why Microsoft, Amazon Web Services, Google, and Meta Platforms have created or are creating their own CPUs and XPUs. And as the parameter counts go up and data shifts from text to other formats, LLMs are only going to get larger and larger – 100X to 1,000X over the next few years if present trends persist and the iron can scale.

And so, we are hearing chatter about Stargate, which demonstrates that the upper echelon of AI training is without question a rich person’s game.

Depending on what you read in the interpretations of the reports following the initial Stargate rumor, Stargate is the fifth phase of a project that will cost somewhere between $100 billion and $115 billion, with Stargate being delivered in 2028 and operating through 2030 and beyond. Microsoft is apparently in the third phase of the buildout right now. Presumably, those funding numbers cover all five phases of the machine, but it is not clear if this number covers the datacenter, the machinery inside of it, and the cost of power. Microsoft and OpenAI will probably not do much to clear any of this up.

And there is no talk of what technology the Stargate system will be based upon, but we would bet Satya Nadella’s last dollar that it will not be based on Nvidia GPUs and interconnects. And we would bet our own money that it will be based on future generations of Cobalt Arm server processors and Maia XPUs, with Ethernet scaling from hundreds of thousands to 1 million XPUs in a single machine. We also think that Microsoft bought the carcass of DPU maker Fungible to create a scalable Ethernet network and is possibly having Pradeep Sindhu, who founded Juniper Networks and Fungible, create a matching Ethernet switch ASIC so Microsoft can control its entire hardware stack. But that is just a hunch and a bunch of chatter at this point.

No matter what kind of Ethernet network Microsoft uses, we are fairly certain that at some point 1 million endpoints is the goal and we are also fairly certain InfiniBand is not.

We also think it would be dubious to assume that this XPU will be something as powerful as the future Nvidia X100/X200 GPU or its successor, which we do not know the name of. It is far more likely that Microsoft and OpenAI will try to massively scale networks of less expensive devices and radically lower the overall cost of AI training and inference.

Their business models depend upon this happening.

And it is also reasonable to assume that at some point Nvidia will have to create an XPU that is just jam packed with matrix math units and lose the vector and shader units that gave the company its start in datacenter compute. If Microsoft builds a better mousetrap for OpenAI, Nvidia will have to follow suit.

Stargate represents a step function in AI spending for sure, and perhaps two step functions depending on how you want to interpret the data.

In terms of datacenter budgets, all that Microsoft has said to date publicly is that it will be spending more than $10 billion in 2024 and 2025 on datacenters, and we presume that most of this spending is to cover the cost of AI servers. Those $100 billion or $115 billion figures are far too vague to mean anything concrete, so this is just a lot of big talk at this point. We will remind you that for the past decade, Microsoft has kept at least $100 billion in cash and equivalents around, and peaked at just under $144 billion in the September 2023 quarter. As it ended calendar 2023 (Microsoft’s second quarter of fiscal 2024), it was down to $81 billion.

So Microsoft doesn’t have the dough to do the Stargate effort right now all at once, but its software and cloud businesses together generated $82.5 billion in net income against about $227.6 billion in sales for the trailing twelve months. Over the next six years, if the software and cloud businesses just stayed where they are, Microsoft would bring in $1.37 trillion in revenues and have around $500 billion in net income. It can afford the Stargate effort. And Microsoft can afford to just buy OpenAI and be done with it, too.
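The back-of-envelope arithmetic behind those six-year totals can be sketched as follows. This is only an illustration of the flat-line assumption stated above (no growth, no decline); the function name `flat_projection` and the variable names are ours, and the only inputs are the trailing twelve month figures and the upper Stargate estimate quoted in this story.

```python
# Figures quoted in the article, in billions of US dollars.
TTM_REVENUE_B = 227.6      # trailing twelve month sales
TTM_NET_INCOME_B = 82.5    # trailing twelve month net income
STARGATE_HIGH_B = 115.0    # upper end of the rumored project cost
YEARS = 6                  # projection window used in the article


def flat_projection(annual_b: float, years: int) -> float:
    """Project a total assuming the annual figure stays exactly flat."""
    return annual_b * years


revenue_b = flat_projection(TTM_REVENUE_B, YEARS)
net_income_b = flat_projection(TTM_NET_INCOME_B, YEARS)

# Share of projected net income the high-end Stargate estimate would consume.
stargate_share = STARGATE_HIGH_B / net_income_b

print(f"Six-year revenue:    ${revenue_b / 1000:.2f} trillion")
print(f"Six-year net income: ${net_income_b:.0f} billion")
print(f"Stargate (high end) as share of net income: {stargate_share:.0%}")
```

Under that flat-line assumption, the revenue total rounds to $1.37 trillion and net income comes to $495 billion, which is where the "around $500 billion" figure comes from.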

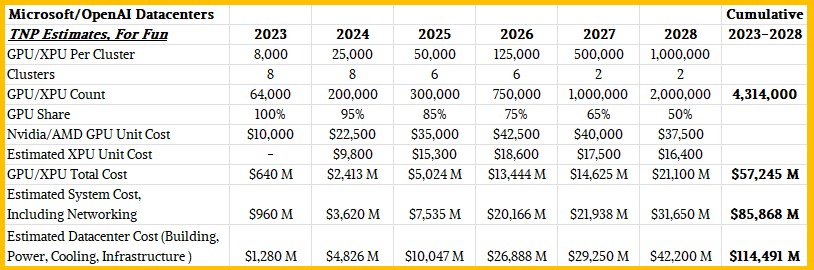

Anyway, just for fun, we cooked up a budget for clusters that Microsoft might have already built and could build in the future for OpenAI, showing how their composition and scale might change over time. Take a gander:

We think that over time the number of AI clusters allocated to OpenAI will go down and the size of those clusters will go up.

We also think that the share of GPUs in the OpenAI clusters will go down and the share of XPUs – very likely in the Maia family, but possibly also using an OpenAI design – will go up. Over time the number of homegrown XPUs will match the number of GPUs, and we further estimate that the cost of those XPUs will be less than half the cost of a datacenter GPU. Moreover, we think shifting from InfiniBand to Ethernet will also drive down costs, especially if Microsoft uses homegrown Ethernet ASICs and homegrown NICs with AI functions and collective operations built into them. (Like Nvidia has with the SHARP features of InfiniBand.)
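To see what that mix does to accelerator spending, here is a minimal sketch in normalized units. It assumes a GPU costs 1.0 and treats the "less than half" estimate as an upper bound of 0.5 for an XPU. The function `relative_cluster_cost` is hypothetical, and it deliberately ignores networking, power, and facilities, so it says nothing about total system cost.

```python
def relative_cluster_cost(gpu_share: float, xpu_cost_ratio: float) -> float:
    """Accelerator cost of a mixed cluster relative to an all-GPU cluster
    with the same number of endpoints (GPU cost normalized to 1.0)."""
    if not 0.0 <= gpu_share <= 1.0:
        raise ValueError("gpu_share must be between 0 and 1")
    if xpu_cost_ratio < 0.0:
        raise ValueError("xpu_cost_ratio must be non-negative")
    xpu_share = 1.0 - gpu_share
    return gpu_share * 1.0 + xpu_share * xpu_cost_ratio


# Assumption: XPUs at exactly half the GPU price (the article says "less than half").
XPU_COST_RATIO = 0.5

half_and_half = relative_cluster_cost(gpu_share=0.5, xpu_cost_ratio=XPU_COST_RATIO)
all_xpu = relative_cluster_cost(gpu_share=0.0, xpu_cost_ratio=XPU_COST_RATIO)

print(f"50/50 GPU and XPU cluster: {half_and_half:.2f}x the all-GPU accelerator cost")
print(f"All-XPU cluster:           {all_xpu:.2f}x the all-GPU accelerator cost")
```

With equal numbers of GPUs and XPUs, the accelerator bill is at most 0.75X that of an all-GPU machine of the same endpoint count, and any XPU priced below half a GPU pushes that figure lower.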

And we also forced the spending model such that there are two clusters with 1 million endpoints in 2028 – one made of GPUs and one made of homegrown XPUs, or half each across two clusters. We would like to estimate future cluster performance, but that is exceedingly hard to do. There may be a lot more XPUs with modest performance gains each year but at much better price/performance.

The thing to remember is that Microsoft can keep the current generation of GPU or XPU for OpenAI’s internal use – and therefore its own – and sell the N-1 and N-2 generations to users for many years to come, very likely getting a lot of its investment bait back to fish with OpenAI again. So those investments are not sunk costs, per se. It is more like a car dealer driving around a whole bunch of different cars with dealer plates on them but not running the mileage up too high before selling them.

The question is this: Will Microsoft keep investing vast sums in OpenAI so it can turn around and rent this capacity, or will it just stop screwing around and spend $100 billion on OpenAI (the company had a valuation of $80 billion two months ago) and another $110 billion or so more on infrastructure to have complete control of its AI stack?

Even for Microsoft, those are some pretty big numbers. But, as we said, if you look at it between 2024 and 2028, Microsoft has probably around $500 billion in net income to play with. Very few other companies do.

To think Microsoft started with a BASIC compiler and a piece of crap DOS operating system kluged from a third party and gussied up for a desperate Big Blue that didn’t understand it was giving away the candy store.

Maybe that is Altman’s nightmare, too. But it may be too late for that, given the enormous sums of money needed to push AI to supposedly new heights.

By 2028, can Microsoft develop an ASIC + board + FW + SW + SYSTEM for: NIC + XPU + Ethernet switch?

Yes. I assume this has been in the works for years already. The Marvell Triton ThunderX team and the Fungible team have been hard at it for a long time.

Will a full range of commercially viable products, from networking gear and XPUs to a dev-ready software ecosystem, that could benefit customers outside of a locked-in cloud subscription, come out of it?

That’s the real $100b question.

Hmmmm … seems to me, on the next April Fool’s day, it’ll be OpenAI buying both Microsoft and Nvidia … in cash! (unless MS buys Anthropic first, to cozy-up to Claude, the deliciously sensible French-born Roman emperor, who ruled between Caligula and Nero) 8^b

To be stuck between Caligula and Nero…

…, like Pac-Man, in a Shannon-style minotaur-maze of telephone relay switches! q^8

(fun ref.: https://en.wikipedia.org/wiki/I,_Claudius_(TV_series) )

(TNP ref.: https://www.nextplatform.com/2024/03/05/anthropic-fires-off-performance-and-price-salvos-in-ai-war/ )

If someone is interested,

Stargates .ai is for sale.

Just a reminder.

Is Microsoft & OpenAI’s 5GW Stargate supercomputer feasible?

The project is expected to rely on nuclear reactors to generate the required power for the site’s computers.

https://www.datacenterdynamics.com/en/opinions/is-microsoft-openais-5gw-stargate-supercomputer-feasible/

As long as Microsoft has lots of money to spend, yes. Whether or not it results in making Microsoft some multiple of its investment remains to be seen.