The Energy Sciences Network (ESnet) is the U.S. Department of Energy Office of Science’s purpose-built high-performance network user facility, operating as the “circulatory system” that connects every Department of Energy lab and its user facilities and experiment sites. It allows users to work with instruments remotely and to share, access, and transfer scientific data between labs. The effort has been revolutionary in two ways: it lets scientific work proceed without researchers being located near instruments or supercomputers, and it enables large-scale collaborations on complex research projects.

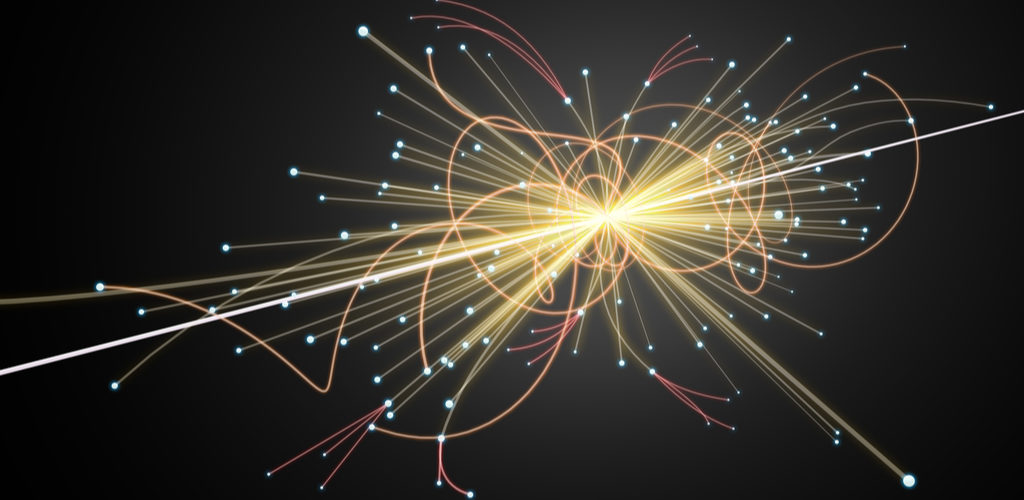

ESnet, in conjunction with several national labs, has projected the future network requirements for several of its sites, focusing in particular on nuclear physics and related areas. Among the questions the team wanted to consider were where new data might be used and analyzed, how science processes might change in the next five to ten years, and how changes in hardware and software capabilities might influence the potential for new discoveries. Insight via case studies (in full form here) from several scientific sites, including ALICE (A Large Ion Collider Experiment), the Solenoidal Tracker at RHIC (STAR) at Brookhaven National Laboratory, and the Gamma Ray Energy Tracking Array (GRETA), provided the multi-site basis for the projected network requirements.

Based on the experiences and needs of the many labs, supercomputing sites, and instrument locations, the team came up with some key findings about future network requirements. One of the most important shifts is that many new facilities supporting nuclear physics projects (detectors, arrays, etc.) will be coming online in the next three to seven years, which will create additional demand on network infrastructure as well as changes in how that data is created, stored, and worked on collaboratively. In short, the time is ripe to prepare for those new sites and instruments and work through some future challenges, including the following (per the full report):

- Network path diversity and capacity are critical issues for major NP facilities as the experimental data volumes increase and the need for external computation in the workflow changes.

- Today, many of the facilities utilize local cluster computing to analyze data. As facilities upgrade and produce larger sets of data, such a model will not be able to manage the computing needs. To this end, scientists are challenged to identify resources within DOE ASCR and computing resources of the Open Science Grid (OSG). To fully adopt these resources, workflows will require significant changes to software and network architecture.

- For workflows to become more mobile, there will need to be a series of steps taken to reduce areas of friction. These include upgrading software to facilitate streaming, increasing and improving network connectivity options, and altering computational models to allow for both local and remote data access.

- A common access methodology (perhaps an application programming interface [API]) that spans ASCR computational facilities will greatly assist in the development of workflows that can adopt and regularly use these resources.

- Data portals, typically used to share and disseminate experimental results, are either not widely available or are aging for a number of collaborations. Adoption of high-performance techniques in this software and hardware space is needed by several collaborating groups.

- Network-based workflows that span facilities rely heavily on performance monitoring software, such as perfSONAR, to set expectations and debug critical transmission problems. This software is now widely available at all profiled facilities.

As the case studies from each scientific site demonstrate, the requirements and challenges vary based on instruments, computational resources, and other factors, but ESnet has set forth lab-specific action items related to the use, sharing, and storing of data for each. The team notes that it will estimate the predicted growth in nuclear physics data over the next two to ten years to ensure the network is ready to take on the new, more challenging loads as new instruments and facilities are added.

There were some interesting findings from the case studies in particular. While they’re nuclear physics-centric, they share some common elements with other areas in HPC. For instance, several sites reported experimenting with public clouds but found that the cost of converting (development, re-tuning workflows) was only worthwhile when bursting. Teams also noted that HPC centers have “a set of strategic architectural issues to solve with regards to streaming workflows (the ability for worker nodes to have external network access or fetch data from a cache that will stage data for them).” On the network-specific front, some labs, including Oak Ridge, stated a need for multiple 400G connections to ESnet as soon as the end of this year.
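To give a rough sense of what multiple 400G connections mean in practice, here is a minimal back-of-the-envelope sketch. The one-petabyte dataset size and the `efficiency` parameter are illustrative assumptions, not figures from the report, and the function name is hypothetical.

```python
def transfer_time_seconds(dataset_bytes: float, link_gbps: float,
                          efficiency: float = 1.0) -> float:
    """Idealized time to move a dataset over a link.

    dataset_bytes: dataset size in bytes (assumed value, not from the report)
    link_gbps:     aggregate link capacity in gigabits per second
    efficiency:    fraction of capacity actually achieved (assumption; 1.0 = ideal)
    """
    if link_gbps <= 0:
        raise ValueError("link_gbps must be positive")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    bits = dataset_bytes * 8
    usable_bits_per_second = link_gbps * 1e9 * efficiency
    return bits / usable_bits_per_second


PETABYTE = 1e15  # decimal petabyte in bytes; hypothetical dataset size

# Compare one, two, and four aggregated 400G connections.
for links in (1, 2, 4):
    seconds = transfer_time_seconds(PETABYTE, 400 * links)
    print(f"{links} x 400G: {seconds / 3600:.2f} hours per petabyte")
```

Under these idealized assumptions, a single 400G link moves a petabyte in about five and a half hours; real transfers would likely take longer once protocol overhead, storage speed, and competing traffic are accounted for.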

Each site’s case studies and conclusions from the full report can be found here.