Every new paradigm of computing has its own framework, and it is the adoption of that framework that usually makes it consumable for the regular enterprises that don’t have fleets of PhDs on hand to create their own frameworks before a technology is mature.

Serverless computing – something that strikes fear in the hearts of many whose living is dependent on the vast inefficiencies that still lurk in the datacenter – and event-driven computing are two different and often associated technologies where the frameworks are still evolving.

The serverless movement, which we have discussed before in analyzing the Lambda efforts of Amazon Web Services and the clone of that Lambda service, called Fission, that is being spearheaded by OpenStack and Kubernetes platform upstart Platform9, is not really about trying to do compute without servers, which would be a neat trick indeed, but about creating such a high level of abstraction for compute, at such a fine granularity, that customers don’t have to care what the underlying server is. It just is not their problem anymore.

With event-driven computing, people are being taken out of the workflow because, to put it bluntly, they are too slow. For the longest time, transaction processing meant humans talking to humans, who keyed in the transactions that ran the business using homegrown or third party applications coded in high level languages. Eventually some of the people at the backend – those who were the literal transaction processors – were replaced by Web front ends, and customers talked directly to systems. Various message queueing middleware was used to link disparate and often incompatible systems to each other so they could trade data and do more sophisticated data sharing and transaction processing. In datacenter operations itself, system administrators combed through alerts and other data to keep things humming along. All of this still takes too much coding and too much human intervention, and a new generation of applications that react to vast amounts of telemetry, often with in-memory processing techniques shaped by statistical analysis and machine learning algorithms, is emerging. And serverless computing is meeting event-driven computing on what amounts to a single platform, where algorithms are the only thing coders write and the data store and compute are handled by the backend supplier, such as Amazon Web Services, Microsoft Azure, or Google Cloud Platform.

Microsoft has to keep pace with AWS if it hopes not only to convert the tens of millions of Windows Server customers to its Azure public cloud or its Azure Stack private cloud clone, but also to capture this new breed of event-driven applications running on a serverless platform. This is what the Azure Event Grid is all about.

“We are seeing a pretty dramatic shift in how customers engage with the Azure platform and develop new applications,” Corey Sanders, director of Azure Compute, tells The Next Platform. “It really is an evolution in development and platforms from on-premises to infrastructure as a service to platform as a service to serverless platforms. And there are three things that we think define a serverless platform. The first is certainly the abstraction of servers, and not having to manage the underlying server instance, but we also think it has to include microbilling, which is pay for use down to the exact second of usage and the exact amount of CPU and memory capacity used. Many of these applications will run for very short periods of time. And the third thing is that a serverless platform is event driven, when it is an IoT device or a user click on a mobile app or initiating a business process from some customer request.”

The benefits of serverless and event-driven computing are manifold, says Sanders. For one, there is a big reduction in the amount of DevOps that IT shops will have to pay for. Moving to the cloud cuts down on people costs because on Azure, Microsoft – or more accurately, tools like Autopilot – does the managing, and even if some of this cost is added to the raw, underlying infrastructure services cost, it is a cost shared by what is now millions of customers across Microsoft’s many cloud services. There is much less to manage, and it is much simpler to develop code. That means programmers don’t have to be infrastructure experts, and they can focus on the business logic and algorithms that drive and shape the business itself. (This was the hallmark of the Application System/400 minicomputer from IBM, which we know a thing or two about, which made relational database processing transparent to coders who focused on business logic. In a sense, AWS, Azure, and Cloud Platform are modern AS/400s, suitable for tens of millions of customers doing modern stuff, just like the AS/400 was modern when it was introduced in 1988.) The integrated nature of platforms, whether legacy and yet modernized ones like the AS/400, which is still in use today to its great credit, or shiny new ones like Azure, means that companies can not only focus on business logic, but can turn things around quicker and possibly get a market advantage. That’s how – and why – the hyperscalers all do this for their own internal code.

And you can bet that they all have better stuff we can’t yet see.

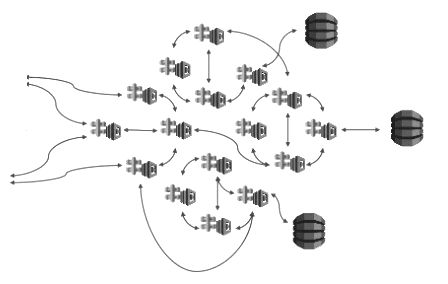

In the serverless space, Microsoft has two separate but related platforms: Azure Functions and Azure Logic Apps. Azure Event Grid is the framework that is bringing them together and also hooking them into other Azure services, as well as over a hundred other services, such as Office365, Dynamics365, and Salesforce.com, that can interface with this event-driven framework and thereby create a true serverless platform. The idea, says Sanders, is that Event Grid provides a single framework from which any event, from any source, can kick off an application response, which may in turn drive other events and cause things to happen. (Causing things to happen is what applications do, after all.)

At the moment, Event Grid supports the following Azure services as initiators for events: Azure Blob Storage, Azure Resource Manager, Application Topics, Event Hubs, Azure Functions, Azure Automation, Logic Apps, and WebHook Endpoints. Other integrations as senders and receivers of events are in the works, including: Azure Active Directory, API Management, IoT Hub, Service Bus, Azure Data Lake Store, Azure Cosmos DB, Azure Data Factory, and Storage Queues.

Obviously, the sheer number and diversity of events coming into an application just inside of Azure with its native services would be overwhelming to keep track of and to integrate, and that is the problem the Event Grid framework is meant to solve. Customers with homegrown applications, whether running on Azure or in Windows Server or Azure Stack in their own datacenters, will no doubt expose some parts of their applications as event drivers and other parts as event receivers, and this integration will need to be tracked and monitored, too. This may be easy to do at first, but it will fast become a hairball without some centralized control.
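To make the idea of a central event router concrete, here is a minimal in-memory sketch in Python. It is not the Event Grid API: the `Event` and `EventRouter` classes, the event type strings, and the handlers are all hypothetical names invented for illustration. It only shows the pattern described above, in which any source publishes an event, subscribed handlers respond, and a response can itself emit further events.

```python
from collections import defaultdict
from typing import NamedTuple


class Event(NamedTuple):
    source: str      # illustrative label only, not an Azure identifier
    event_type: str  # hypothetical event type string
    data: dict


class EventRouter:
    """Toy stand-in for a central event-routing service (assumption, not Event Grid)."""

    def __init__(self):
        self._handlers = defaultdict(list)
        self.log = []  # central record of every event that passed through

    def subscribe(self, event_type, handler):
        self._handlers[event_type].append(handler)

    def publish(self, event):
        self.log.append(event)
        for handler in self._handlers[event.event_type]:
            # A handler may return follow-up events, which are routed in turn.
            for follow_up in handler(event) or []:
                self.publish(follow_up)


def make_thumbnail(event):
    # Responds to an upload and emits a new event of its own.
    name = event.data["name"]
    return [Event("function", "thumbnail.created", {"name": f"thumb-{name}"})]


notified = []


def notify(event):
    # Terminal handler: records the notification and emits nothing further.
    notified.append(event.data["name"])


router = EventRouter()
router.subscribe("blob.created", make_thumbnail)
router.subscribe("thumbnail.created", notify)
router.publish(Event("blob-storage", "blob.created", {"name": "cat.png"}))
print(notified)
print([e.event_type for e in router.log])
```

Because every event flows through one `publish` method, the router's log gives a single place to track and monitor the integrations, which is the kind of centralized control the hairball problem calls for.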

Azure Functions, which is analogous to Lambda at AWS, became generally available a year and a half ago and is now driving over 1 billion serverless executions per day, according to Sanders. This product has billing that measures how many seconds of CPU execution and how many GBs of memory are used for how many seconds to come up with an aggregate price; these units are minuscule and so are the prices, as you can see here, but they will add up when an application is a seething mass of events triggering stuff. Azure Logic Apps became generally available in July 2016 and was in preview for many months before that, and Sanders did not have any stats available on how much stuff it was processing on Azure, but the pricing for it is similarly minuscule per action taken as events come into and out of the cloud. The Azure Event Grid is in public preview now, and neither the general availability date nor the pricing is available for it yet.
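The way tiny per-unit prices add up can be sketched with a little arithmetic. The Python below assumes billing is simply memory in GB multiplied by execution time in seconds, summed over all executions and multiplied by a rate. The rate and the workload numbers are placeholders chosen for illustration, not Microsoft's actual prices, and real bills may include other components.

```python
def execution_cost(executions, avg_seconds, memory_gb, price_per_gb_second):
    """Estimate cost as total GB-seconds consumed times a per-GB-second rate."""
    gb_seconds = executions * avg_seconds * memory_gb
    return gb_seconds * price_per_gb_second


# All figures below are hypothetical, for illustration only.
HYPOTHETICAL_RATE = 0.00002      # currency units per GB-second (placeholder)
daily_executions = 10_000_000    # events handled per day
avg_seconds = 0.5                # average run time per execution
memory_gb = 0.25                 # memory allocated per execution

daily_cost = execution_cost(daily_executions, avg_seconds, memory_gb, HYPOTHETICAL_RATE)
print(f"{daily_cost:.2f} per day")
```

Under these assumed numbers a single execution costs a few millionths of a currency unit, yet ten million of them a day come to a figure worth watching.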

Microsoft has been pretty clear that customers want a private version of Azure to run in their datacenters, and Azure Stack, which currently tops out at a very modest dozen nodes in a single instance, is what Microsoft has come up with to bring the Azure metaphor for compute, storage, and networking on premises for the corporations that don’t want to plunk some or all of their apps in the public cloud. We have gone into detail about why Microsoft is bothering with Azure Stack, and frankly we would have expected this to ramp faster and scale further than it has. Taking hyperscale infrastructure down to enterprise scale is not easy, and making it easy for enterprises to use is not, either. In any event, if Azure Stack is to be the platform for serverless computing, too, then Azure Functions, Azure Logic Apps, and Azure Event Grid will have to come to it, too. So far, Microsoft has committed to bringing Azure Functions to Azure Stack later this year, it has no plans for bringing Azure Logic Apps over, and Azure Event Grid support will depend on customer demand.

We would think that, as a matter of policy, whatever can be moved from the Azure public cloud to Azure Stack would be moved, because having the two be equivalent and compatible would seem to be the whole point.

By the way, no one is suggesting that serverless applications will replace everything out there in the tens of millions of corporations, governments, and other organizations in the world, and certainly Sanders does not believe this. And Microsoft doesn’t want it to happen all at once even if it could because it has a very lucrative Windows Server platform from which it wants to extract the maximum amount of profit as it builds out Azure and Azure Stack to render it obsolete sometime in the distant future. Sometime between five years and forever from now.

“We think serverless will be a part of an overall application strategy,” says Sanders. “I don’t believe that serverless will replace every part of an application, but I think that almost every application – modern or otherwise – is going to see integration points where serverless components dramatically simplify the application and improve the customer experience. Certainly IoT is an obvious place where serverless makes a lot of sense, but even simple things like bots built into helpdesk websites to engage with customers are a great place to start with serverless. I think serverless will integrate into almost all applications in some form or another.”

Serverless has another interesting effect, and it is both good for the public cloud providers and their private cloud emulators as well as for the customers. Here’s the thought experiment. With traditional bare metal servers, CPU utilization was probably on average somewhere between 5 and 10 percent across all datacenters in the world, which is pitiful but it is good business if you can sell on the resource spikes, as so many server makers and their parts suppliers did for so long. Server virtualization came along and average utilization was driven up to maybe 15 percent to 20 percent – a big improvement that saved lots of money once customers spent big bucks on shiny new virtualization-assisted servers and hypervisors. These days, maybe the best of breed is doing 20 percent to 30 percent utilization, and maybe the big public clouds are doing on the order of 40 percent because they don’t just interleave workloads across a company, but across many companies. With microservices, provided there are enough of them and provided their utilization doesn’t come as one big spike, there is a chance to get the server virtualization hypervisor overhead out of the equation on the underlying platform, perhaps by moving to Docker or some other container, and really bin packing the event-driven code. Let’s say average utilization can be driven to 65 to 75 percent.

Think about how many datacenters the cloud builders like Amazon, Microsoft, and Google would not have to buy to deliver capacity. This could be a very big number, and that’s true even if serverless is a meaningful part of but not a majority of the zillions of concurrent micro-workloads.
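The thought experiment can be put into rough numbers. The Python sketch below assumes that the servers needed scale as useful work divided by average utilization, so the fraction of capacity saved when utilization rises is one minus the ratio of the old utilization to the new. The utilization figures are the guesses from the text above, with 70 percent taken as the midpoint of the 65 to 75 percent range. Treat the result as an upper bound, since it assumes the entire workload moves to the denser platform.

```python
def servers_needed(useful_work, utilization):
    """Servers required to deliver a given amount of useful work (in server-equivalents)."""
    return useful_work / utilization


def capacity_saved(util_before, util_after):
    """Fraction of servers no longer needed for the same useful work."""
    return 1 - util_before / util_after


# Utilization guesses taken from the text; 0.70 is the midpoint of 65 to 75 percent.
cloud_today = 0.40
serverless_target = 0.70

before = servers_needed(1000, cloud_today)        # servers for 1,000 units of work today
after = servers_needed(1000, serverless_target)   # servers for the same work when bin packed
saved = capacity_saved(cloud_today, serverless_target)
print(f"{before:.0f} servers -> {after:.0f} servers ({saved:.0%} fewer)")
```

Even if only part of the workload ends up bin packed this tightly, a reduction of this order across a hyperscale fleet is a lot of datacenter that never has to be built.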