Balancing Performance, Capacity, And Budget For AI Training

If the world were not a complex place, and if all machine learning training looked more or less the same, then there would only be one accelerator to goose training workloads. …

AMD has been on such a run with its future server CPUs and server GPUs in the supercomputer market, taking down big deals for big machines coming later this year and out into 2023, that we might sometimes forget that there are many more deals to be done and that neither Intel nor Nvidia is inactive when it comes to trying to get its compute engines into upper echelon machines. …

We have a saying around here at The Next Platform, and it is this: Money is not the point of the game. …

When we said thirteen weeks ago that we thought that Nvidia’s datacenter business would be its largest operating division before too long, we didn’t think it would only take a quarter to do that. …

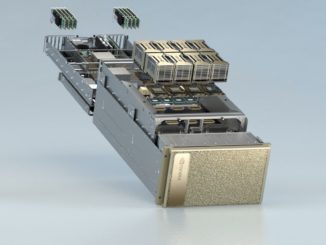

When you have 54.2 billion transistors to play with, you can pack a lot of different functionality into a computing device, and this is precisely what Nvidia has done with vigor and enthusiasm with the new “Ampere” GA100 GPU aimed at acceleration in the datacenter. …

A new CPU or GPU compute engine is always an exciting time for the datacenter because we get to see the results of years of work and clever thinking by hardware and software engineers who are trying to break through barriers with both their Dennard scaling and their Moore’s Law arms tied behind their backs. …

All Content Copyright The Next Platform