Nuclear Weapons Drove Supercomputing, And May Now Drive It Into The Clouds

If the HPC community didn’t write the Comprehensive Nuclear Test Ban Treaty of 1996, it would have been necessary to invent it. …

Decades before hyperscalers and cloud builders started creating their own variants of compute, storage, and networking for their massive distributed systems, the major HPC centers of the world fostered innovative technologies that might otherwise have died on the vine and never propagated into the market at large. …

After a long wait, now we know. All three of the initial exascale-class supercomputer systems being funded by the US Department of Energy through its CORAL-2 procurement are going to be built by Cray, with that venerable maker of supercomputers being the prime contractor on two of them. …

In the following interview, Dr. Matt Leininger, Deputy for Advanced Technology Projects at Lawrence Livermore National Laboratory (LLNL), one of the National Nuclear Security Administration’s (NNSA) Tri Labs, describes how scientists at the Tri Labs, namely LLNL, Los Alamos National Laboratory (LANL), and Sandia National Laboratories (SNL), carry out the work of certifying America’s nuclear stockpile through computational science and focused above-ground experiments. …

As the theme goes this year, what’s old is new again. …

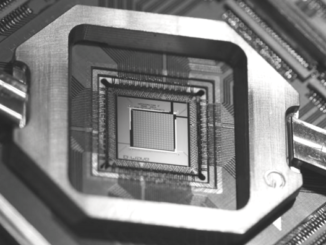

With the end of physical nuclear testing came an explosion in computing technology at massive scale to simulate in rich detail and high resolution the many scenarios and factors that influence a hefty nuclear weapons stockpile. …

All Content Copyright The Next Platform