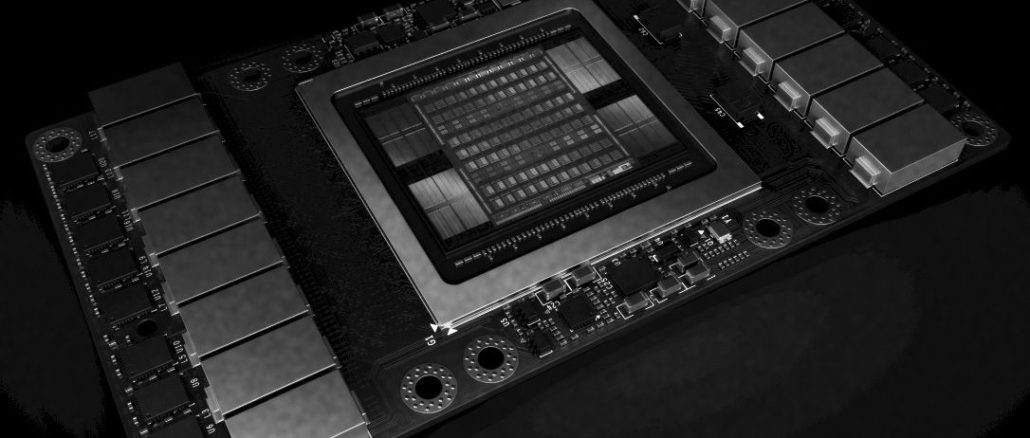

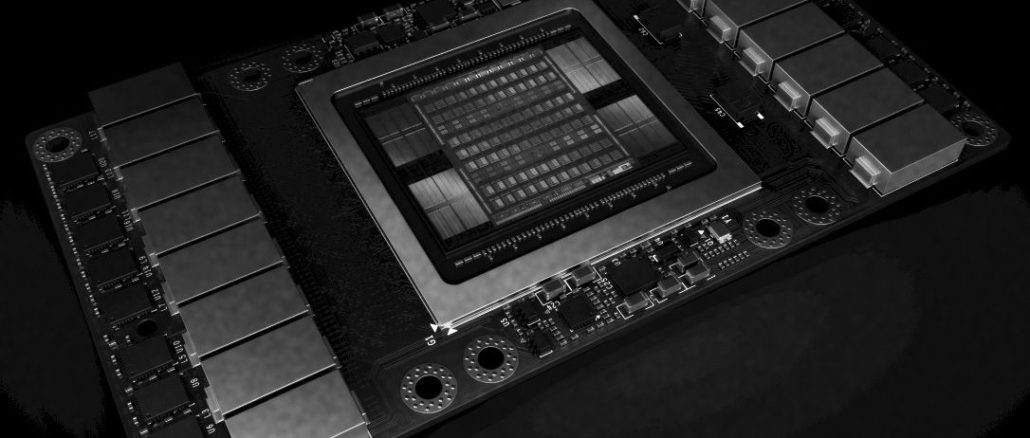

Nvidia’s Tesla Volta GPU Is The Beast Of The Datacenter

Graphics chip maker Nvidia has spent more than a year carefully and methodically transforming its GPUs into the compute engines for modern HPC, machine learning, and database workloads. …

While it is always best to have the right tool for the job, it is better still if a tool can serve multiple jobs and therefore be more highly utilized than it otherwise would be. …

There is an arms race in the nascent market for GPU-accelerated databases, and the winner will be the one that can scale to the largest datasets while also providing the most compatibility with industry-standard SQL. …

Like other major hyperscale web companies, China’s Tencent, which operates a massive network of ad, social, business, and media platforms, is increasingly reliant on two trends to keep pace. …

The hyperscalers of the world are increasingly dependent on machine learning algorithms for providing a significant part of the user experience and operations of their massive applications, so it is not much of a surprise that they are also pushing the envelope on machine learning frameworks and systems that are used to deploy those frameworks. …

Nvidia has staked its growth in the datacenter on machine learning. …

There is something to be said for being at the right place at the right time. …

If Nvidia’s Datacenter business unit were a startup separate from the company, we would all be talking about its long investment in GPU-based computing and how it has moved off the blade of the hockey stick, rounded the bend, and is moving rapidly up the handle, with triple-digit revenue growth and an initial public offering on the horizon. …

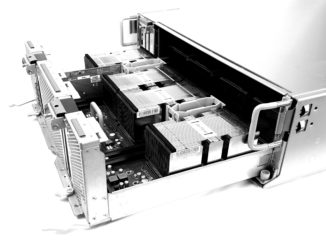

We have written much about large-scale deep learning implementations over the last couple of years, but one question that is being posed with increasing frequency is how these workloads (training in particular) will scale to many nodes. …

In the ideal hyperscaler and cloud world, there would be one processor type with one server configuration and it would run any workload that could be thrown at it. …

All Content Copyright The Next Platform