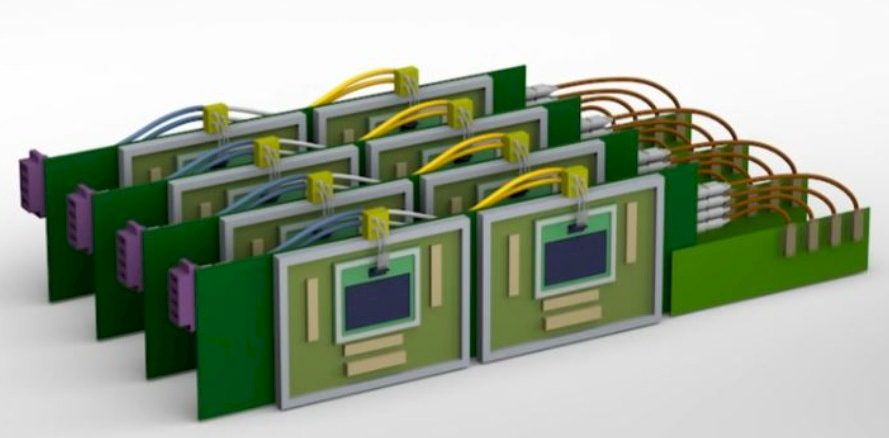

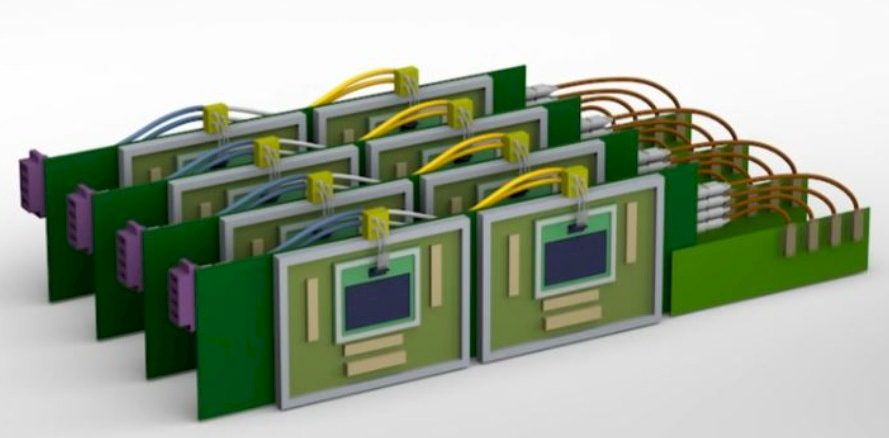

Nvidia Shows What Optically Linked GPU Systems Might Look Like

We have been talking about silicon photonics for so long that we are, probably like many of you, frustrated that it is not already ubiquitous. …

System architects are often impatient about the future, especially when they can see something good coming down the pike. …

Atos, for a long time a key IT services provider in Europe, stepped onto the HPC stage in a big way in 2014 when it bought systems maker Bull’s hardware business for $840 million, instantly making it a major supercomputer vendor on the continent. …

In March 2020, when Lawrence Livermore National Laboratory announced the exascale “El Capitan” supercomputer contract had been awarded to system builder Hewlett Packard Enterprise, which was also kicking in its “Rosetta” Slingshot 11 interconnect and which was tapping future CPU and GPU compute engines from AMD, the HPC center was very clear that it would be using off-the-shelf, commodity parts from AMD, not custom compute engines. …

UPDATED: The EuroHPC Joint Undertaking, which is steering the development and funding for pre-exascale and exascale supercomputing in the European Union, has had a busy week. …

One of the most pressing challenges in deploying deep learning at scale, especially for social media giant Meta, is making full use of hardware for inference as well as training. …

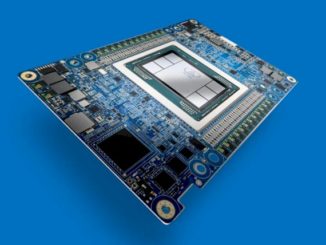

For the last few years, Graphcore has primarily been focused on slinging its IPU chips for training and inference systems of varying sizes, but that is changing now as the six-year-old British chip designer is joining the conversation about the convergence of AI and high-performance computing. …

Significant business and architectural changes can happen with 10X improvements, but the real milestones upon which we measure progress in computer science, whether it is for compute, storage, or networking, come at the 1,000X transitions. …

Nvidia is not the only company that has created specialized compute units that are good at the matrix math and tensor processing that underpins AI training and that can be repurposed to run AI inference. …

There is increasing competition coming at Nvidia in the AI training and inference market, and at the same time, researchers at Google, Cerebras, and SambaNova are showing off the benefits of porting sections of traditional HPC simulation and modeling code to their matrix math engines, and Intel is probably not far behind with its Habana Gaudi chips. …

All Content Copyright The Next Platform