The Datacenter GPU Gravy Train That No One Will Derail

We have five decades of very fine-grained analysis of CPU compute engines in the datacenter, and changes come at a steady but glacial pace when it comes to CPU serving. …

Since the advent of distributed computing, there has been a tension between the tight coherency of memory and its compute within a node – the base level of a unit of compute – and the looser coherency over the network across those nodes. …

It has been two and a half decades since we have seen a rapidly expanding universe of a new kind of compute that rivals the current generative AI boom. …

Timing is a funny thing. The summer of 2006 when AMD bought GPU maker ATI Technologies for $5.6 billion and took on both Intel in CPUs and Nvidia in GPUs was the same summer when researchers first started figuring out how to offload single-precision floating point math operations from CPUs to Nvidia GPUs to try to accelerate HPC simulation and modeling workloads. …

Heaven forbid that we take a few days of downtime. When we were not looking – and forcing ourselves to not look at any IT news because we have other things going on – that is the moment when Nvidia decides to put out a financial presentation that embeds a new product roadmap within it. …

Back in 2009, I was the editor of a mini-side publication from supercomputing magazine, HPCwire, called HPC in the Cloud. …

The history of computing teaches us that software always and necessarily lags hardware, and unfortunately that lag can stretch for many years when it comes to wringing the best performance out of iron by tweaking algorithms. …

UPDATED: It is funny what courses were the most fun and most useful when we look back at college. …

If Nvidia and AMD are licking their lips thinking about all of the GPUs they can sell to the hyperscalers and cloud builders to support their huge aspirations in generative AI – particularly when it comes to the OpenAI GPT large language model that is the centerpiece of all of the company’s future software and services – they had better think again. …

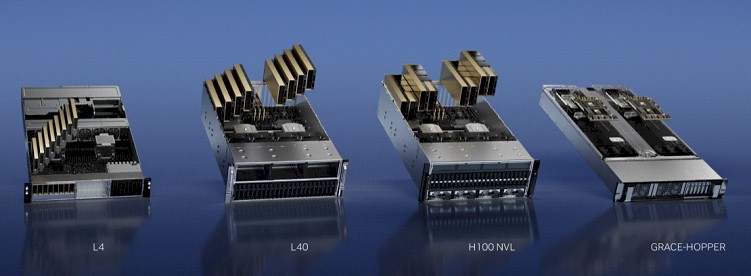

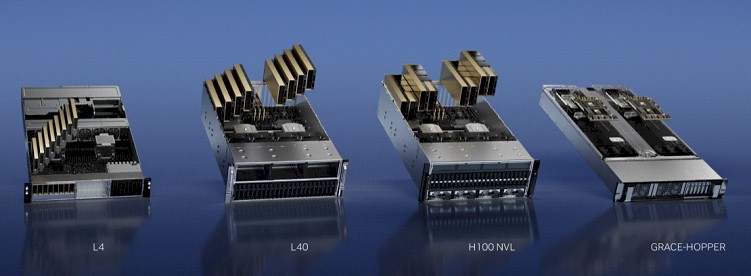

Updated With More MGX Specs: Whenever a compute engine maker also does motherboards as well as system designs, those companies that make motherboards (there are dozens who do) and create system designs (the original design manufacturers and the original equipment manufacturers) get a little bit nervous as well as a bit relieved. …

All Content Copyright The Next Platform