When Dell rolled out its Apex initiative three years ago, the massive IT supplier joined a growing list of tech companies, such as HPE, Lenovo, and Cisco, that were adapting to a rapidly expanding multicloud world by making their product portfolios available in a cloud-like, as-a-service model. Organizations wanted more of that pay-as-you-go vibe they were feeling in the public clouds for their on-premises environments, and the OEMs were eager to oblige.

Those efforts – GreenLake for Hewlett Packard Enterprise and TruScale for Lenovo, as examples – also enabled the vendors to make more of their technologies available in the public clouds, giving them a footprint there while keeping firmly on premises, an important capability as the initial enterprise sprint into the public clouds eventually resulted in some workloads making their way back into datacenters.

In this as-a-service rush – with smaller vendors like storage companies Pure Storage and NetApp pushing their own strategies – Dell made some decisions that the company said are paying off today. One was that while it would offer most products via flexible consumption models – subscriptions, pay-as-you-go, and the like – it would keep other payment avenues open, giving enterprises a range of options.

It also would emphasize data in its as-a-service strategy.

“When you look at how we see the multicloud world vs. how cloud vendors see the cloud world – and even how a lot of our friends in the virtualization world see it – they see the multicloud world as dominated by the applications,” John Roese, global CTO for Dell, told The Next Platform during this week’s Dell Technologies World 2023 event. “We don’t. We see applications as important, but applications are ephemeral and they change. The data doesn’t. The data foundation that you build – the ability to get your data organized for the multicloud environment – is far more important long-term than the ability to have a rapid path to developing an application.”

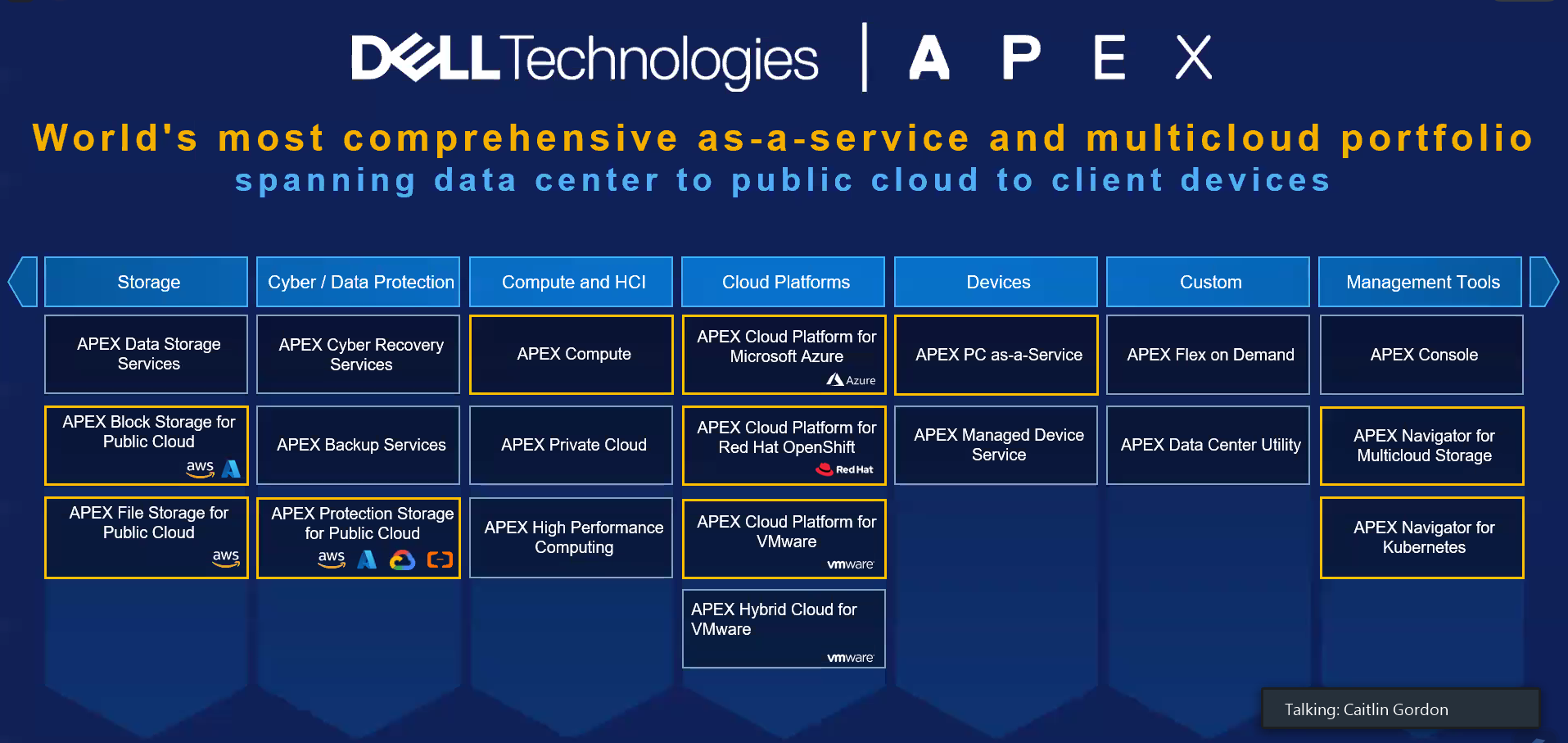

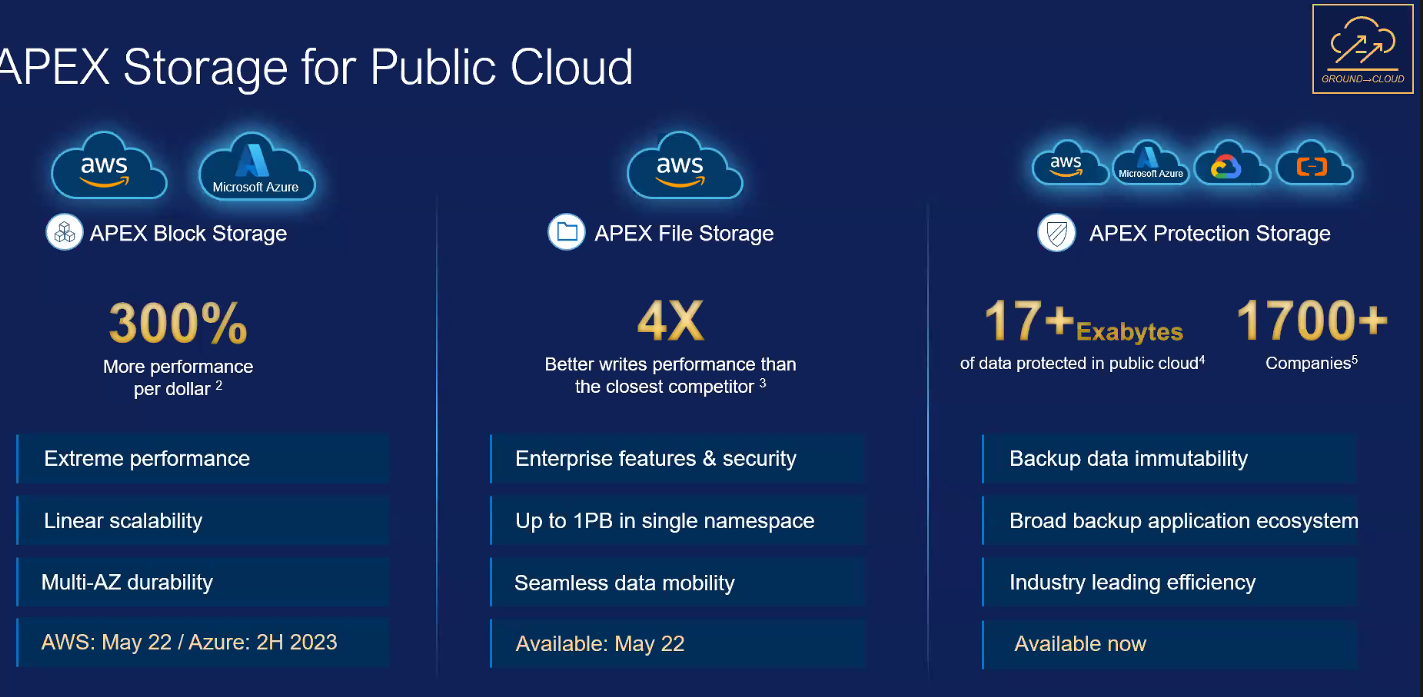

In line with that, Dell has been rapidly expanding its storage portfolio – including block, file, and object storage – in the Apex fold, building on the promises made last year with the introduction of its Project Alpine. The addition of the storage offerings also significantly broadened the entire portfolio inside Apex.

Dell introduced block storage in Apex for Amazon Web Services – it’s coming to Microsoft Azure later this year – and its file storage technologies into AWS. The storage offerings – including object storage – should expand to other clouds, such as Google Cloud Platform.

At the same time, the company introduced two additions to the Apex Console management tool: Navigator for Multicloud Storage, for managing block and file storage in Apex, and Navigator for Kubernetes, for container storage modules.

The storage expansion is a crucial move for Dell’s vision of how the multicloud should function. Jeffrey Clarke, Dell’s vice chairman and co-COO, said Apex Storage for Public Cloud serves as the hub through which workloads move between (and among) the public clouds and on-premises datacenters, which now include offerings in the newly announced Apex Cloud Platforms, Dell infrastructure designs that enable enterprises to run public cloud environments on-premises.

The initial offerings include platforms for Azure, Red Hat’s OpenShift, and VMware, for deploying and running vSphere.

It illustrates Roese’s belief that “the thing that is the immutable center is your data. Once you land that, you end up with data gravity.”

That gravity is important, he said. If an organization with a multicloud strategy puts its systems of record – where the corporate knowledge resides, such as databases and HR information – into a single public cloud, it ramps up cost and complexity and creates a preference for developers to use that cloud to build their applications.

“If you want to build a different customer care application in GCP, but your systems of record – all your core systems – are in Amazon, that is a fairly nightmarish process of being able to reach across that boundary and get access to that database because the data is sitting in a storage system that is bound to that cloud,” Roese said. “It’s not available to the other ones easily except through very clunky external interfaces.”

By contrast, a common storage substrate “could live in any one of those clouds. It also could live in a co-location, which is one hop away from all of those clouds. You have a lot of choice where you put it. So now, that same system of record, that data, the same historical database that contains your most important data, if you have it in that same common storage layer and you have the ability to move it around, to go to a co-location, it becomes Switzerland for data. Then what you’ve created is a model where you’re not tightly binding that system of record to any particular cloud but you’re close enough to all of them so that an application developed in any one of those clouds should be able to talk to that database.”

Enterprises can also put that system of record into a virtual environment that can be housed in a public cloud. Because it remains in the enterprise’s control, it can be replicated to another cloud like Azure or moved to a co-location facility to be accessed from all the public clouds.

“We’re not saying all data needs to be with Dell, but we are saying that in a multicloud environment, some of your most important data needs to be accessible to all of your clouds, including your on-prem and edge, and there are no easy vehicles to do that because typically, the world obsesses over where the application is with your data,” he said. “But what if twenty applications in five different clouds need to access the same data? That’s an incredibly hard problem to deal with, so what people end up doing is they do these really weird gymnastics with databases to create replicas and synchronize between them, which is unnecessary if you just change your storage.”

Dell has been doing storage for a long time, and the point of storage arrays was to create a shared, centralized storage environment that multiple applications and services could access. Doing this “in a cloud world where the consumers of that data are multiple clouds is very hard to do without someone like us stepping in the middle of it to create essentially a Switzerland,” Roese said.

Dell certainly isn’t the first to offer storage-as-a-service (STaaS). Over the past couple of years, HPE has aggressively pushed its storage offerings into GreenLake, including its fast-growing Alletra line and block storage. Meanwhile, Pure Storage and NetApp have both aggressively expanded their storage-as-a-service offerings.

That said, Dell’s advantage is not only the depth of its storage portfolio but the sheer size of the company’s entire product portfolio, Roese said. That muscle was flexed this week when Dell added PCs and bare-metal compute to the Apex lineup. He pointed to HP’s 2015 split, which separated the PC business (now HP Inc.) from the enterprise IT infrastructure business (now HPE).

“It’s impossible for HPE to deliver PC-as-a-service all the way to storage-as-a-service all the way to bare metal-as-a-service,” Roese said. “They’re not capable of doing that, yet if you look at the enterprise, there are zero enterprises in the world that don’t have all those opportunities. … As we apply it to multicloud storage, other companies are attempting to do that. NetApp certainly has activity there, Pure gets it. We’re just at a significantly different scale.”

Despite its relative newness, the as-a-service push by vendors appears to be gaining momentum. Dell co-COO Chuck Whitten said during a quarterly earnings call in November 2022 that the Apex business “sort of” reached a $1 billion revenue stream, and HPE earlier this year said that in Q4 of fiscal 2022, as-a-service orders rose 68 percent and annualized recurring revenue jumped 25 percent.

The announcements at the Dell event marked the largest expansion in Apex’s three-year history. Roese and other executives noted the challenges of the first two years, with a relatively small number of offerings in Apex and an ongoing effort by Dell to get its internal back-end operations in sync with the new as-a-service way of consuming and paying for products and services.

“Our back office was still evolving,” he said. “It was pretty clunky. We were having to replumb the whole company to do it. Today we’re far, far along on it. We’re not done, but you won’t notice anymore. Last year, you could kind of notice the rough edges. This year, there aren’t rough edges on it. It just works.”

Along with the back-office improvements, “the portfolio inside of [Apex] now is starting to reflect our vision. As opposed to it being one or two things [in Apex] and 30 other things that you can only consume as a product, today across almost our entire portfolio, there is at least an element that could be consumed in Apex.”

The portfolio – and the partnerships tied to Apex – will grow in the coming year, Roese said, and likely will include Project Helix, a collaboration with Nvidia announced at Dell Technologies World aimed at using Dell’s AI-optimized systems – such as the recently announced PowerEdge XE9680 server – and Nvidia’s GPUs and AI Enterprise software and frameworks to create on-premises platforms for enterprises that want to use proprietary corporate data to customize pretrained foundation models for their own AI workloads.
