The tech world is awash with generative AI, which, for a company like Nvidia, is a good thing. The company that a decade ago put its money down on AI as its growth engine has in the intervening years been pulling together the bits and pieces of hardware and software – and partnerships – to be ready when that future arrived.

The recent GTC 2023 show was all about what Nvidia is doing in AI, from new offerings to strategies and roadmaps, and the message that Nvidia has many of the pieces in place to help enterprises adopt AI into their businesses continues to be spread far and wide. That includes earlier this week, when Manuvir Das, Nvidia’s vice president of enterprise computing, delivered the keynote at the MIT Future Compute event in Cambridge, Massachusetts.

Das came to the event with a few messages. One was that enterprises absolutely will need to hop on the generative AI train, whether through broadly used technologies from the likes of startup OpenAI, a partner of Nvidia's whose GPT-4, ChatGPT, Dall-E, and other products are rapidly being integrated throughout Microsoft's expansive portfolio and embraced by other vendors, or through efforts within the enterprises themselves, using their own data to train large language models (LLMs).

Another message was about the evolving compute landscape and AI. More of the world's work is being done via computing, which means more energy is being consumed. Datacenters account for somewhere around 1 percent to 2 percent of the world's energy consumption, and compute-hungry use cases like generative AI will only increase that share, according to Das. That rate of growth is unsustainable, and computing needs to become even more efficient. Unsurprisingly, Nvidia sees a key step as moving as many workloads as possible – not only those based on AI and machine learning – onto accelerated systems based on GPUs, which he argued can handle the compute demands while keeping power consumption low, essentially taking over the role of driving computing innovation that the now-faltering Moore's Law once played.

“The message I was conveying to this audience, because the topic was the future of computing, is that, ‘Yes, the new use cases like generative AI have been built on accelerated computing, so those are good,’” Das tells The Next Platform. “But in order to find space for these new workloads and to prevent the world from having to go through this massive expansion of compute, we need to take all the stuff we are already doing in datacenters, domain by domain, and basically move that all to this accelerated computing, because that’s how you get 10 times the output from the same datacenter that you have today. That shift has to happen.”

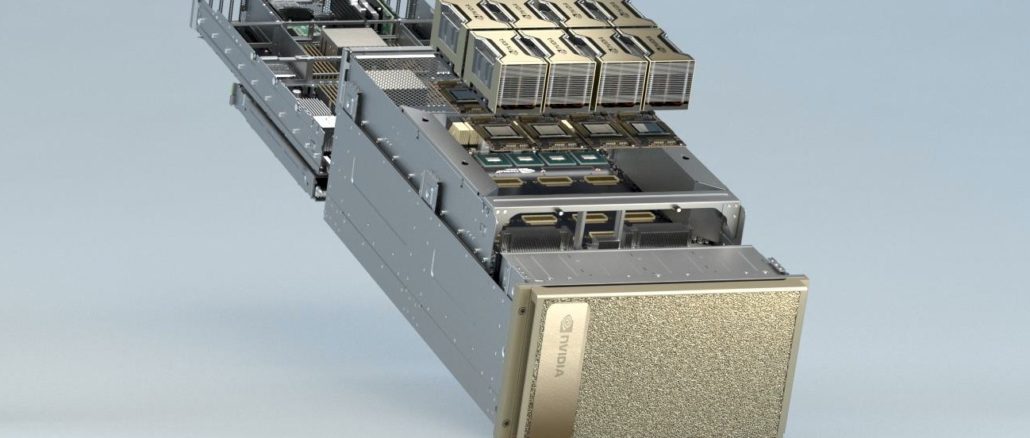

Nvidia has been busy in recent months pushing products aimed at the burgeoning AI space into the market, building out what Das calls a full-stack approach. That includes the H100 “Hopper” GPU accelerator, which is finding a home in such places as Microsoft Azure, Oracle Cloud Infrastructure, AWS, and Meta’s “Grand Teton” AI nodes, which Meta is using internally for its own AI work.

In addition, the DGX H100 AI supercomputers – complete with Nvidia’s Enterprise AI software suite – are in production, and the H100 NVL essentially pairs two connected H100 GPUs in a single form factor that can be put into any server for AI training or inferencing. OEMs like Dell and HPE are developing systems that will support the H100 NVL’s double-wide PCI-Express H100s, Das says.

“The same system is optimized to be the most efficient for training, [but] you need a slightly different characteristic of system to be most efficient for the use of the model,” he says. “There are a lot of companies in the world that are not going to train a model. They’re going to start with the model that’s already there, but they’re going to use it. They want a form factor that’s just optimized for using it.”

At GTC, the company also unveiled such technologies as NeMo Guardrails to ensure AI chatbots don’t veer off course by making things up – creating “hallucinations” – or just getting information wrong.

Addressing such trust problems is key if businesses are going to go all-in on LLMs, from OpenAI’s offerings to LLaMA from Meta Platforms. There are questions about data security, privacy, and compliance that have to be addressed in what is still a somewhat amorphous environment, despite the rush to bring generative AI tools to market. Some countries have questioned LLMs such as ChatGPT – Italy recently banned it, though this week said it was reversing that decision – and Samsung said it was banning employees from using ChatGPT and similar products due to risks raised by generative AI. Some enterprise executives worry that applications developed using generative AI may inadvertently leak sensitive data or corporate secrets.

The US federal government and governments in other countries have begun making noises about the need for regulation and oversight of the fast-evolving AI market, which, as we discussed this week, could continue to morph as open source players start bringing their LLM code to market. Vice President Kamala Harris met Thursday with the chief executive officers of Microsoft, OpenAI, Google, and Anthropic to talk AI.

Despite all this, enterprises are coming to the understanding that generative AI is something they have to adopt if they are to stay competitive, Das says. He notes that some companies, such as Meta, Google, and Microsoft, were early adopters of AI. However, the “bread-and-butter enterprise customer” has “done the R&D stuff – they’ve built models, they’ve trained models – but not as many of them have put stuff into production because of all the reasons that enterprise companies worry about. Probably what they were all waiting for was a compelling event. What’s happened now with generative AI is they found a compelling event, so now they know they have to do it because if they don’t do it, they’re going to get left behind.”

Companies that thought they had five or so years to deliberate about it are seeing their timeframe rapidly shrink. They have to get into it now, so all those concerns have to be addressed and assurances given for them to do so.

“All these things are coming to the fore,” Das says. “But the technology is at hand. That’s the thing. These techniques – like fine tuning with your own data, retrieving information from the right databases, teaching the skills that matter – this technology does not have to be invented. We’ve got the technology. It’s more about delivering it into the hands of people.”

Despite how ubiquitous generative AI seems to be, there are still myriad issues that need to be dealt with. Certainly one is the aforementioned power consumption that will come with it. Another is the amount of data being created, collected, stored, processed, and analyzed, which feeds back into the power issue, he says. If computing is to be sustainable in the future, organizations, which now seem hesitant to throw any data away, need to be smarter about what they keep and what they let go.

“The data is the fuel that fuels all of this,” Das says. “We’ve improved the state of the art a lot in storage over the years, but the rate at which data is being generated from devices, sensors, everything, and all these use cases for the data now popping up, nobody wants to throw that data away. If we try to keep all of this data, the number of datacenters we’re going to need [and] the number of storage systems we’re going to need to hold on to all this data is going to be crazy. The fact of the matter is, there’s a lot of data.”

This is an issue for both vendors and enterprises. For example, companies developing self-driving cars collect huge amounts of data by driving vehicles armed with cameras and sensors around and recording everything, building up a dataset to train their AI models. Can some of that incremental information be eliminated? Nvidia, with its own autonomous vehicle unit, simulates traffic situations, which gives researchers the information they need without having to collect as much data.

On the enterprise side, companies can do a better job of data deduplication to make storage more efficient. CIOs and other executives also need to inventory the data their systems are holding and decide what’s valuable and what can be thrown out.

“Then, where am I keeping this data in the most efficient manner?” Das says. “That’s all part of the job description that is very important going forward. That part’s on them. Then obviously, the vendor should provide the very best technology to make it all efficient.”
