SPONSORED Every year, a fairly large portion of the several tens of millions of servers running in the world needs to be replaced because the cost of running the old machinery can be higher than the cost of buying new machinery – and this can be true even if the old kit is entirely paid for and completely depreciated.

We reckon that at least 13 million servers will be sold in 2023. And despite the wonkiness of the global economy, we also anticipate a pretty aggressive upgrade cycle even if the growth rate for revenues in the server market will be smaller than what we saw in 2022. This is a sentiment that is backed up by the market researchers at IDC.

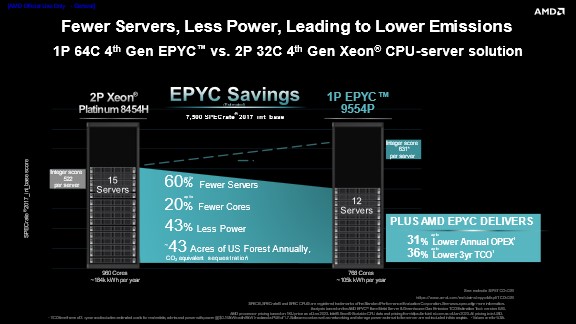

It’s hard to predict precisely what will happen of course, but one thing is fairly certain. AMD, together with its server OEM and ODM partners, has the potential to carve out a pretty big piece of the server upgrade cycle and the volume of incremental new machinery sold. The reason for that rests with the substantial technical and economic benefits that the EPYC™ 9004 processors, also known by their “Genoa” former code name, offer compared to alternative compute engines in the datacenter.

AMD launched the 4th Gen EPYC server chips in November 2022 – we did a thorough analysis of the Zen 4 core and the resulting 4th Gen lineup and how the 18 different compute engines in the 4th Gen EPYC SKU stack compare to the three prior generations of EPYC processors.

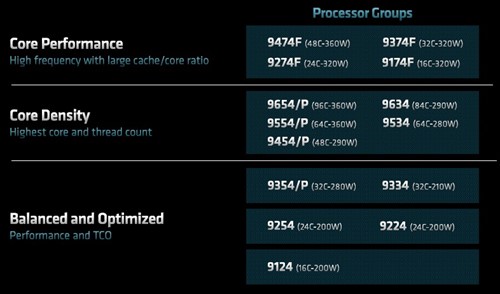

There are four EPYC 9004 series CPUs aimed at single-socket servers, which are designated with a “P” suffix in the product name. There are another four that are frequency optimized, designated with the suffix “F”, which means they have high frequencies and a large cache-to-core ratio to balance out that performance. The F models represent one performance band in the EPYC 9004 lineup, and then there are “core density” SKUs that have the highest possible core and thread counts from the middle to the high bin.

The low-bin 4th Gen EPYC parts offer a balance between reasonable performance and the optimal total cost of ownership (TCO). As we have pointed out before, and to give a sense of how much progress is embodied in the EPYC 9004 lineup, the low-bin 4th Gen EPYC parts look like the middle bin of the EPYC 7003s (formerly codenamed “Milan”) from 2021, the low bin of the EPYC 7002s (formerly codenamed “Rome”) from 2019, and the high bin of the EPYC 7001s (old codename “Naples”) from 2017.

If history is any guide, four or five “Genoa-X” SKUs with 3D stacked L3 cache are likely to be added to the 4th Gen EPYC lineup later this year.

Stacking Up EPYC 9004 CPUs Against Prior Gen EPYC CPUs And Other Competition

The EPYC 9004 CPUs offer a significant performance boost compared to their EPYC 7003 predecessors, which in turn pretty consistently offered more performance than the “Ice Lake” Xeon SP server processors from Intel. And while the “Sapphire Rapids” update to the Xeon SPs also provides a significant generational jump in performance per core and more cores, Intel wasn’t really able to close the gap with the 4th Gen EPYC processors. And in many workloads, the “Milan” EPYC 7763 processors can meet or beat the Sapphire Rapids Xeon SPs in terms of raw performance.

Five charts looking at the top of stack performance of 4th Gen versus 3rd Gen EPYC and Ice Lake and Sapphire Rapids server chips provide a good overview of the competitive X86 server CPU landscape.

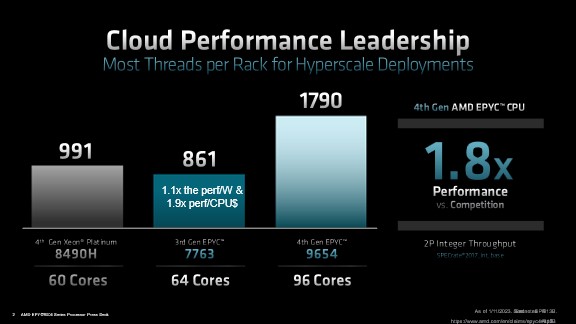

The first is for generic integer performance, which is gauged by the SPECrate®_2017_int_base benchmark test running on two-socket servers:

In the comparison above, a pair of the EPYC 9654s with 96 cores each is compared to a pair of EPYC 7763s with 64 cores each and a pair of Xeon SP 8490Hs from Intel with 60 cores each, all top of stack for their respective generation. For integer work, EPYC 9654s offer 1.6X more cores compared to Intel, yielding just a tad under 2X the overall performance on the SPEC integer test. When you look at the numbers compared to the EPYC 7763s, the Xeon 8490Hs provide about 15 percent better performance, but the EPYC 7763 perf per watt and perf per dollar make this an extremely good TCO value for those that aren’t needing to upgrade to DDR5 or PCIe® 5.
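The relationship between the core count advantage and the throughput advantage can be worked through in a few lines. This is a rough sketch only: the core counts come from the comparison above, while treating “just a tad under 2X” as an upper bound of exactly 2.0 is our assumption, since the exact benchmark ratio is not given here.

```python
# Core counts per socket, as quoted in the comparison above.
epyc_9654_cores = 96
xeon_8490h_cores = 60

# How many times more cores the EPYC part has.
core_ratio = epyc_9654_cores / xeon_8490h_cores

# ASSUMPTION: "just a tad under 2X" is treated as an upper bound of 2.0.
# The exact SPEC integer ratio is not stated in the text.
throughput_ratio_upper_bound = 2.0

# If throughput scaled only with cores, the ratio would equal core_ratio.
# Whatever is left over is the implied per-core advantage.
per_core_ratio_upper_bound = throughput_ratio_upper_bound / core_ratio

print(f"core ratio: {core_ratio:.2f}x")
print(f"implied per-core throughput ratio: up to {per_core_ratio_upper_bound:.2f}x")
```

Under that assumption, the extra cores account for most of the gap, with the remainder (at most about a quarter more throughput per core) coming from the cores themselves and the platform around them.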

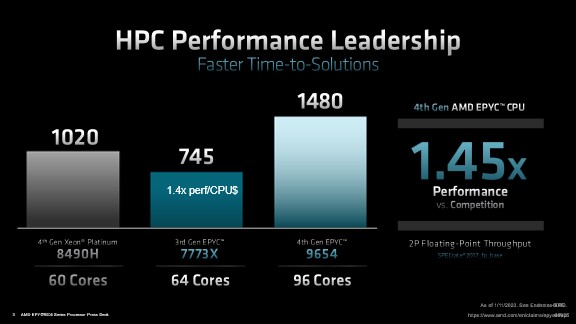

The performance gap is not quite as wide on floating point work commonly done with HPC and AI workloads, but it is still quite large as gauged by the SPECrate_2017_fp_base benchmark test:

This floating point performance provides a good illustration of why AMD is seeing a resurgence in its HPC business. And that rebound isn’t just down to the AMD Instinct MI250X GPU accelerators (former codename “Aldebaran”) installed on several upper echelon HPC supercomputers, the most notable being the 1.1 exaflops “Frontier” system at Oak Ridge National Laboratory.

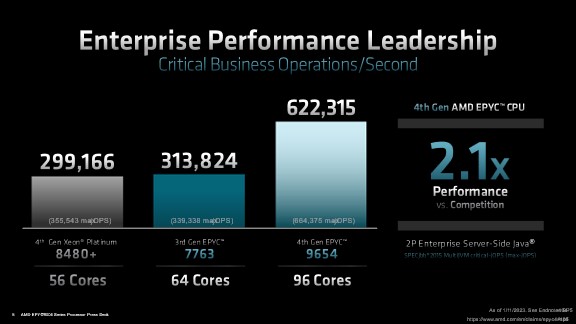

For a lot of enterprises, the performance of Java and other interpreted languages, and of the relational databases that underpin applications, is more important than raw integer and floating point performance. And in these cases, systems based on the top of stack EPYC 9654 and EPYC 7763 hold a lead over the Xeon 8480+ that is about the same as the raw integer performance would imply when running Java applications:

In this case, Java performance is gauged by the SPECjbb®2015-MultiJVM benchmark running on multiple JVMs and measuring critical Java operations per second throughput. The best published score for Sapphire Rapids Xeon SP with 60 cores doesn’t beat the EPYC 7763 and trails the EPYC 9654 by a factor of 2.1x. Though to be frank, it’s hard to envision how Intel’s Xeon SP line will catch up to the EPYC line any time soon on raw integer and floating point performance as well as higher level application performance.

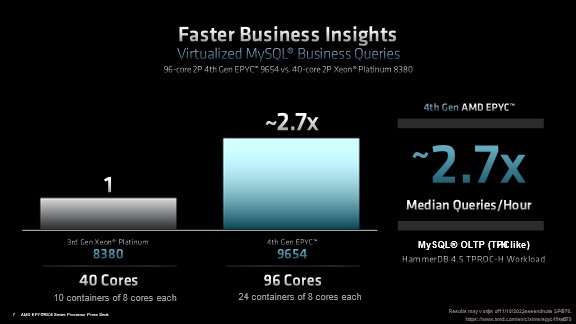

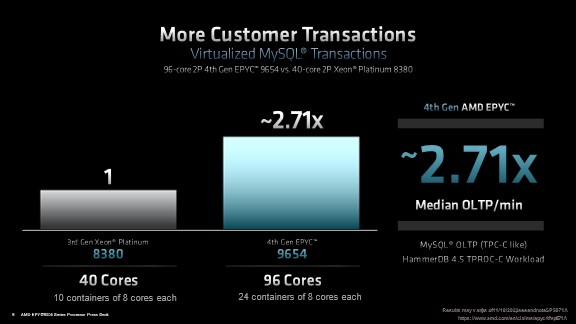

It’s equally difficult to imagine how Intel will be able to catch up with AMD on performance for running online transaction processing and decision support queries against relational databases. Take a look:

The EPYC 9654 provides approximately 2.7x more decision support queries and approximately 2.7x more online business transactions than the Intel Xeon 8380. (Note: these benchmarks haven’t been updated with the latest Sapphire Rapids CPUs.)

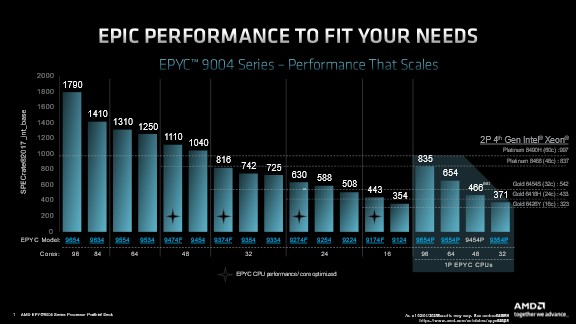

The following chart shows how the performance of the different core counts in the EPYC 9004 line compares to the 24-core Xeon 6418H, 36-core Xeon 8452Y, 48-core Xeon 8468 and 60-core Xeon 8490H parts, which helps to visualize this performance gap between the Intel and AMD lines more broadly:

What this showcases is that at every major core count boundary, the new EPYC 9004 parts beat the 4th Gen Xeon parts, while AMD still offers higher core count parts with more processing capability than Intel can match. Even more impressive is the right side of this chart. The popular 1P parts from AMD match, or beat, similar core counts from Intel, but in a 2P setting.

The Energy Crunch

This performance gap between the Intel Xeon SP and AMD EPYC lines of processors is particularly important as energy costs have skyrocketed around the globe. The situation means datacenter operators are trying not only to improve application performance or increase application capacity, but also to do so in an energy conscious way. Companies upgrading from older iron can do a lot more work in the same footprint – it is estimated that it will not be uncommon to see a 4:1 or even 5:1 compression when taking out older Intel X86 iron and replacing it with new AMD X86 iron.

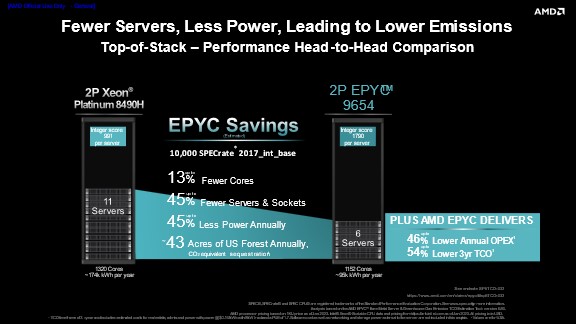

Here is one example of how the math works out for server clusters with a total of 10,000 units of integer performance as gauged by the SPEC integer benchmark:

The number of servers that can be replaced will likely climb the further back you go through the Intel server generations, when performance per socket was lower (though admittedly, power draw and heat dissipation per socket were somewhat lower, too). It takes only six machines using a pair of the 96-core EPYC 9654 processors to yield that 10,000 SPEC integer performance capacity, compared to 11 servers using a pair of 60-core Sapphire Rapids Xeon 8490H processors. More importantly, that adds up to 46% lower annual OPEX and 54% lower three year TCO, which in this economic climate is critical for IT organizations that are looking to be good corporate stewards of performance and finance.
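The server counts in this example come from a simple ceiling division: divide the target capacity by what one two-socket server delivers and round up. A minimal sketch of that arithmetic follows. The two per-server scores are hypothetical placeholders picked only to illustrate the calculation, not published benchmark results, and `servers_needed` is our own helper name.

```python
import math


def servers_needed(target_capacity: float, per_server_score: float) -> int:
    """Smallest whole number of servers whose combined score meets the target."""
    if per_server_score <= 0:
        raise ValueError("per_server_score must be positive")
    return math.ceil(target_capacity / per_server_score)


TARGET = 10_000  # units of SPEC integer performance, from the text

# HYPOTHETICAL per-server scores, chosen only to illustrate the arithmetic.
# They are NOT published benchmark results.
HYPOTHETICAL_EPYC_2P_SCORE = 1_700
HYPOTHETICAL_XEON_2P_SCORE = 950

epyc_servers = servers_needed(TARGET, HYPOTHETICAL_EPYC_2P_SCORE)
xeon_servers = servers_needed(TARGET, HYPOTHETICAL_XEON_2P_SCORE)

# Fraction of machines eliminated by choosing the smaller cluster.
server_reduction = 1 - epyc_servers / xeon_servers

print(f"{epyc_servers} vs {xeon_servers} servers, "
      f"{server_reduction:.0%} fewer machines")
```

Because the result is rounded up to whole machines, small changes in the per-server score only matter when they cross a boundary. That is why a six versus 11 split can hold across a range of plausible scores.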

And the economics look even more striking further back in the installed base.

The Intel Skylake Xeon SP generation from 2017 makes up a large portion of the 45 million-strong X86 server installed base and illustrates just how significant these compression factors could prove.

If you take an enterprise workload, like virtualization, and create a scenario based upon running 380 VMs, then compare five servers using a pair of Skylake 28-core Xeon SP-8180s to a single server using a pair of 96-core EPYC 9654 processors, you see some incredible consolidation and energy efficiency impacts. The Xeon based servers will burn 31,360 kilowatt-hours per year, while the EPYC server will only burn 11,466.84 kilowatt-hours per year. In this scenario, you get an 80 percent reduction in servers, using 65 percent less power, which opens up more flexibility for growth in the future.
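The consolidation math in this scenario is easy to reproduce from the quoted figures. The sketch below uses only the numbers given above; the variable names are ours. Note that the kilowatt-hour figures as quoted work out to a reduction of roughly 63 percent, a little under the rounded 65 percent, which may reflect rounding or inputs not shown here.

```python
# Figures quoted in the virtualization scenario above.
vms = 380
skylake_servers = 5
epyc_servers = 1
skylake_kwh_per_year = 31_360.0   # five Xeon SP-8180 servers combined
epyc_kwh_per_year = 11_466.84     # one EPYC 9654 server

# Fraction of physical servers removed by consolidating.
server_reduction = 1 - epyc_servers / skylake_servers

# Fraction of annual energy saved, computed from the quoted kWh values.
energy_reduction = 1 - epyc_kwh_per_year / skylake_kwh_per_year

# VM density before consolidation, and absolute energy saved per year.
vms_per_skylake_server = vms / skylake_servers
kwh_saved_per_year = skylake_kwh_per_year - epyc_kwh_per_year

print(f"server reduction: {server_reduction:.0%}")
print(f"energy reduction: {energy_reduction:.1%}")
print(f"VMs per old server: {vms_per_skylake_server:.0f}")
print(f"kWh saved per year: {kwh_saved_per_year:,.2f}")
```

Multiplying the saved kilowatt-hours by a local electricity rate gives a first-order estimate of the annual energy bill difference, before cooling and facility overheads.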

And companies with large fleets of “Broadwell” Xeon E5 or “Skylake” or “Cascade Lake” Xeon SP servers could radically reduce their fleets to get the same performance, radically expand their capacity, or find a happy medium somewhere in the middle and could still save lots of energy and the related carbon emissions.

Sponsored by AMD.
