A decade ago, waferscale architectures were dismissed as impractical. Five years ago, they were touted as a fringe possibility for AI/ML. But the next decade might demonstrate waferscale as one of only a few bridges across the post-Moore’s Law divide, at least for some applications. Luckily for the only maker of such a system, Cerebras, those applications are among the highest-value workloads in large industry.

The range of use cases for computational fluid dynamics (CFD) applications spans across a wide swath of industries, from automotive, aerospace, and energy to broader manufacturing and product design. Most companies that require complex high-fidelity CFD simulations must dedicate significant internal supercomputing resources to these simulations, which can be prohibitively time-consuming in the face of ambitious time-to-solution requirements.

“It can take months to run a single CFD simulation at high resolutions. The impractical nature of how long it takes to do a single simulation, especially when doing pre-production activities like design optimization and uncertainty quantification mean it’s so slow it’s basically never used in these contexts,” says Dirk Van Essendelft, an ML and data science engineer at the National Energy Technology Laboratory (NETL).

For these simulations, a design of experiments is created that can include 100 discrete simulations to run through a sweep of different velocities and pressures, for instance, or even using more uncertain parameters like viscosity to capture the impact on the design.
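As a rough illustration of what such a design of experiments looks like, the sketch below builds a 10 x 10 sweep over velocity and pressure, giving 100 design points. The parameter names, ranges, and units are hypothetical placeholders, not values from NETL's work.

```python
from itertools import product


def linspace(start, stop, count):
    """Return `count` evenly spaced values from start to stop, inclusive."""
    step = (stop - start) / (count - 1)
    return [start + i * step for i in range(count)]


# Hypothetical sweep ranges; a real study would take these from the design spec.
velocities_m_s = linspace(1.0, 10.0, 10)
pressures_kpa = linspace(100.0, 190.0, 10)

# Full-factorial design: every velocity paired with every pressure.
design_points = [
    {"velocity_m_s": v, "pressure_kpa": p}
    for v, p in product(velocities_m_s, pressures_kpa)
]

print(len(design_points))  # 100
```

Each entry in `design_points` stands for one full CFD run, which is why the cost of a single simulation dominates the feasibility of the whole sweep.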

“Running those 100 simulations and consuming a majority of a supercomputer for one month at a time just to run one of those design points means you can’t use that system well for these types of applications,” Van Essendelft adds.

This isn’t a problem one can just throw accelerators and cores at to solve, either. While GPU acceleration can benefit CFD runs by allowing larger problems to be solved in a single simulation, this does not necessarily mean that simulations run faster. Having high capacity and good energy efficiency for CFD is one thing, but for companies trying to get products to market faster, time to result is the name of the game.

What enterprise CFD shops need is the ability to take advantage of fine-grained parallelism and lower the work done by each processor so each loop is far faster.

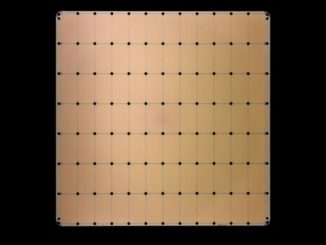

Van Essendelft tells The Next Platform about work done at NETL on the Cerebras CS-2 waferscale system that resulted in a 470X speed gain on structured grid problems in CFD. The gain comes from a math library he developed called the Waferscale Engine Field Equation Application Programming Interface (WFA), which skips the AI story for the system and puts it in the role of an HPC machine.

While it’s limited to structured grid problems for now, his team is working with Cerebras to tackle unstructured problems, which is where the real enterprise appeal will be for large companies that need to handle complex geometries (making cells in CFD fit around complex shapes).

The 470X speedup is measured against an OpenFOAM run on the GPU-accelerated Joule supercomputer, using a 200 million cell simulation of a convection fluid flow. “This speed gain takes these problems from intractable to possible because at that speed gain simulations take a couple of hours versus months,” Van Essendelft explains.
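The "couple of hours versus months" claim can be checked with simple arithmetic. The sketch below assumes a 30-day month and a baseline run of one or two months; those baseline durations are illustrative assumptions, and only the 470X factor comes from the reported result.

```python
def accelerated_hours(baseline_days, speedup=470.0):
    """Convert a baseline runtime in days to hours after applying a speedup."""
    return baseline_days * 24.0 / speedup


# Assumed baselines: one and two 30-day months per simulation.
one_month = accelerated_hours(30)   # about 1.5 hours
two_months = accelerated_hours(60)  # about 3.1 hours

# A 100-point sweep run back to back, assuming one month per point originally.
sweep_days = 100 * one_month / 24.0  # about 6.4 days

print(round(one_month, 2), round(two_months, 2), round(sweep_days, 1))
```

Under these assumptions, a sweep that would nominally occupy a machine for 100 months shrinks to under a week of sequential runs.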

“This is important in automotive and aerospace and even iterating designs on refrigerators and stoves and other commodity goods—all of this involves fluid and heat transfer and all products go through this kind of design and analysis. So the consumer is going to be touched by this kind of technology, whether they realize it or not. And they’re going to see a price drop because it will cost less and they’ll get better performing products at lower cost,” he adds.

What it really comes down to is bandwidth at every level, Van Essendelft says. “The things that make things slow, that control the ability to strong scale, are bandwidth and latency. If I introduce any kind of bottleneck into the system that prevents me from being able to get to do at least two reads and one write for every processor cycle to my own memory and get that support to a neighbor that means you completely eliminate the bottleneck that happens in other systems.”
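To make the neighbor-communication pattern concrete, here is a minimal sketch of an explicit update on a one-dimensional structured grid. It is a generic diffusion stencil written in plain Python, not the WFA API or NETL's solver, and the function name and coefficient are illustrative. The point is that each cell's new value depends only on its own value and its immediate neighbors, which is the access pattern that rewards fast local memory and cheap neighbor exchange.

```python
def diffuse_step(u, alpha=0.25):
    """One explicit time step of 1D diffusion with fixed boundary values."""
    new_u = list(u)
    for i in range(1, len(u) - 1):
        # Each interior cell reads its own value and its two neighbors.
        new_u[i] = u[i] + alpha * (u[i - 1] - 2.0 * u[i] + u[i + 1])
    return new_u


field = [0.0, 0.0, 1.0, 0.0, 0.0]
field = diffuse_step(field)
print(field)  # [0.0, 0.25, 0.5, 0.25, 0.0]
```

On a machine with one grid cell (or a small block of cells) per processing element, every iteration of this loop could run at the same time, so the time per step is set by how quickly each element can reach its own memory and its neighbors.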

To see why waferscale makes a difference, consider that most processors achieve this at the L1 cache level, with the smallest pool of cache right next to the processor. But there are only two systems that are designed with this kind of architecture at the macro scale: one is the Cerebras CS-2 and the other is the chiplet-based Tesla Dojo supercomputer architecture.

“Once you achieve that kind of bandwidth and latency, the differentiators are in how much energy it takes to move data between neighbor processors. This is where I think an architecture like the waferscale engine has an advantage over Tesla’s Dojo—it’s all about physical proximity.” The chiplet approach still involves data movement penalties versus doing compute that’s contiguous on a single wafer.

This architecture also solves some of the programmability challenges, which are generally the result of complex memory tiers. Developing the WFA overall took 18 months, starting at assembly level kernels, Van Essendelft tells us. That reduction in data movement is also a boon for power consumption, he adds.

The work on both structured and unstructured grids is generalizable across CFD applications, Van Essendelft says. “If you can put your solution on a regular grid, you can use the WFA to solve it like you do with other HPC applications. We chose this problem first because it was quick and easy to get off the ground.”

Commercial advantages aside, as NETL and the Department of Energy, Van Essendelft says “we care about fast-running digital twins that are very accurate and for the first time in history we have CFD-type applications at that level of fidelity that are at or very close to—or even faster—than real time, which means we can now increase security. We can predict equipment breakdowns. We can respond to upsets.” He points to the ice storms in Texas and the challenge of making fixed asset power plants handle grid variability and integrate other power sources as a prime example of how a system like the CS-2 might be used as a digital twin to make fast, critical decisions.

While Cerebras got its start during the rush of AI accelerators, it’s finally time for the mainstream to take a closer look at waferscale architecture. AI/ML is but one workload—and an emerging one at that. It will be interesting to see where traditional HPC applications start getting a leg up and what other domains might benefit.

Interesting article…I guess IF the problem at hand can fit into the relatively modest on-wafer SRAM (40-GB for the CS-2 if I recall correctly, “If I introduce any kind of bottleneck into the system that prevents me from being able to get to do at least two reads and one write for every processor cycle to my own memory”) THEN this architecture may be able to solve for other HPC type workloads.

(in jest) As a former Sys Admin looking after the mental and physical needs of a 100K+ Xeon/EPYC core HPC cluster, I can’t imagine the amount of thermal paste required when testing various CPU chip versions on a new version of said system.

The tens of GB of on-wafer SRAM is certainly amazing, but would not need to be the only RAM. There’s no reason a wafer-scale architecture could not also make use of HBM stacks or include DDR controllers. Obviously those would not perform any better than they do for traditional dies. Possibly as a huge swap/SSD.

Can you provide a ballpark analysis of cost reductions with wafer-scale training for AI/ML. Specifically around some of the recent info on LLMs and OpenAI: https://www.semianalysis.com/p/peeling-the-onions-layers-large-language

It is great to see this advanced (and alternative) computational architecture applied to such problems as these, that most benefit humanity (as compared to language models). Rayleigh-Bénard convection couples Fourier and Navier partial differential equations (PDEs) to describe those cellular tourbillons 1st studied experimentally by Bénard (French like Fourier and Navier), circa 1900 or 1901. Incidentally, Bénard passed away in 1939, at the age of 64, before he could have benefited from retirement if he had lived under the currently proposed retirement reform, here in France, which is protested in the streets on a weekly basis (4 or 5 times thus far). There are caveats that the CS-2 waferscale systems are FP32 (rather than FP64), and the 470x speedup is for explicit time-discretization (implicit discretization still gives an impressive 100x speedup). Also, the WFA-on-WSE authors do note that “hand-optimized assembly code” could provide better perf. than OpenFOAM, on conventional hardware. Still, to me, this is better use of state-of-the-art hardware than the silly cats/hot-dogs/essay-homework-cheating applications that seem so common. Good stuff!