Sponsored Post: Analyst firm Gartner believes AI will remain one of the top workloads driving infrastructure decisions through to 2023 as more organizations push pilot projects into the production stage. But the company also notes that those initiatives require specific infrastructure resources that can grow and evolve alongside AI technology to ensure they are successful.

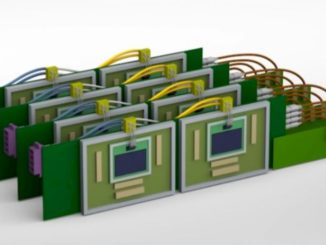

The simple truth is that AI is very compute heavy. Workloads engage in intensive number crunching that demands substantial processing power, memory, and data storage resources, as well as fast, reliable, low-latency network connections to interlink those components. And while modern high-performance computing (HPC) systems have stepped up to meet that demand to a certain extent, it’s equally true that many existing data centers haven’t yet come to the party.

That leaves the traditional architectures still in place struggling, for the most part, to cope with the demands that AI is putting on them. And while organizations recognize the need for an upgrade, building out an IT environment customized to match the requirements of specific AI applications and workloads isn’t as easy as it sounds.

All sorts of factors need to be carefully considered: the balance of CPU and GPU resources to optimize utilization, for example, as well as the precise composition of the racks, cabinets, servers, motherboards, memory modules, and disk drives (spinning and SSD). And for all of those components to work efficiently in tandem, they also need a lot of bandwidth at both the system and network levels to smooth the transmission of the large volumes of data that AI models tend to create.

You can learn more about these challenges by listening in to this webinar, “Meeting the Bandwidth Demands of Next-Gen HPC and AI System Architectures,” at 9 am PST on November 9, 2022.

Moderated by The Register’s Nicole Hemsoth, the panel features a stellar cast of knowledgeable speakers. They include Vladimir Stojanovic, co-founder and chief architect at Ayar Labs; Simon Hammond, program manager for the National Nuclear Security Administration; Christopher Long, chief architect at Liqid; and Alex Ishii, a distinguished architect at Nvidia.

Together, they’ll explore the innovative system designs and solutions they are building to address the AI/HPC infrastructure problem and help unlock the huge potential that AI presents to research, science, and business initiatives.

You can register to watch the “Meeting the Bandwidth Demands of Next-Gen HPC & AI System Architectures” webinar by clicking here.

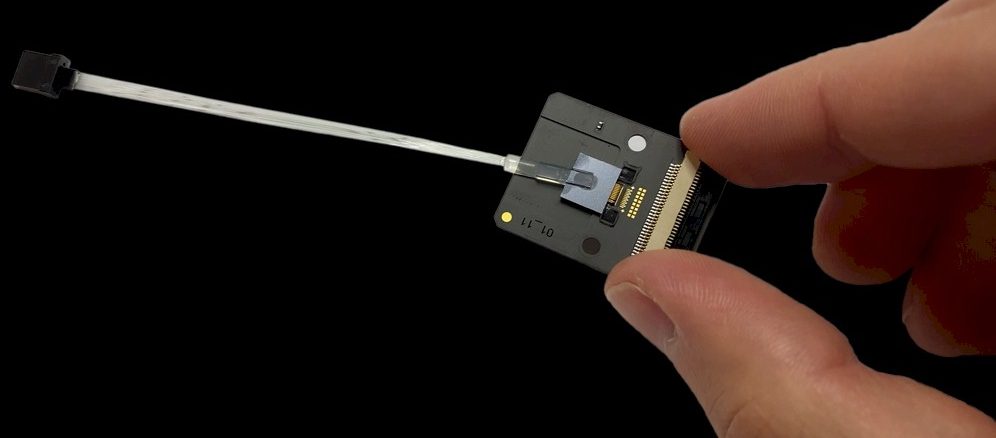

Sponsored by Ayar Labs.
