The annual Computex computer expo in Taiwan is one of the places that IT vendors like to trot out some of their new wares, and most of the time they have to do with consumer electronics or maybe PCs and smartphones, but every now and then, we get some insight into future datacenter chippery or gear.

And so it is with Nvidia, which will be unveiling new reference designs for its future “Grace” Arm CPU and “Hopper” H100 GPU accelerator as well as liquid cooling options that it will be making available on current “Ampere” family GPUs and with future Grace and Hopper chips when they become available in 2023.

The introduction of reference platforms for Grace and Hopper compute engines as well as the liquid cooling options well ahead of when these components are shipping is necessary for Nvidia for a few reasons. For one thing, it wants to keep people talking about its engineering efforts, and for another thing, it takes more time to get inventory of just about anything these days, so pre-selling its server reference designs now helps it to build a better pipeline – and fulfill it either directly or through partners – down the line when the compute engines and their companion DPUs and NICs are available to go into systems.

We got a heads up on the reference designs using the Grace CPU during a prebriefing with Nvidia and then had a chat with Paresh Kharya, senior director of accelerated computing at Nvidia, to get a little more insight after the call. (We will publish that interview separately.)

Targeting Increasingly Fragmented Markets

The end of Dennard scaling of CPU performance and Moore’s Law lowering of the cost of transistors while boosting their density has wreaked all kinds of havoc on system architecture. The net effect of all of the necessary “facings of facts” is that it is increasingly important to co-design hardware and specific applications together to wring the most efficiency, in terms of cost and performance, out of a system. And so the two-socket X86 server that ruled the datacenter from the late 1990s through the early 2010s has been displaced by all kinds of different system designs with varying types and sizes of compute engines and now adjuncts like SmartNICs and DPUs to take unnecessary work off those expensive CPUs.

The markets these diverse systems serve are necessarily unique, and thankfully for both Nvidia and its competitors, are also quite large.

Here is how Kharya cased the total addressable markets that Nvidia is chasing, and we presume these are the TAMs for a five year forecast starting in the prior year as is common when people talk TAMs, because the numbers are too large to be for the current market as far as we can see. Traditional HPC simulation and modeling plus AI training and discrete AI inference represent a $150 billion opportunity, while the creation of digital twins for the “AI factories for the world” represents another $150 billion opportunity. (We presume these numbers are for hardware, software, and services, and it is getting harder to break these things apart anyway.) The systems behind massive gaming platforms comprise another $100 billion opportunity.

Considering that the IT market, according to Gartner, is going to be kissing $4.67 trillion worldwide by 2023, and if you extrapolate a growth of around 5 percent (let’s be optimistic for a second) then by 2026 global IT spending would be on the order of $5.4 trillion. This means the target markets that Nvidia is chasing (with its partners and resellers running as part of its hunting pack) represents roughly 8 percent, give or take, of all IT spending at the end of that forecast period. And what Nvidia is chasing is probably the most profitable part of the IT sector. Well, excepting traditional HPC, which think is vital for science and national security and our future but which we also think has never been particularly profitable for those creating supercomputers. This is not something we are happy about, but there it is.

Naturally, with these big and fragmented markets, you would expect for Nvidia to have an increasingly diversifying set of server designs that it provides as reference platforms and that it often sells itself to specific customers – starting first and foremost with its own scientists and engineers, who use its own supercomputers to design chips and to create HPC and AI software stacks.

Here is the lineup for systems using the Grace Arm server CPU coming next year:

The CGX platform has a pair of Grace CPUs (with a total of 144 cores across the dual-chip package), a BlueField DPU with integrated ConnectX-7 networking (which can drive either the Ethernet or InfiniBand protocol), and a pair of Ampere A16 accelerator cards. The A16, which debuted last November, puts four GA107 GPUs – the same one used in the A2 inference engine, but running slower – onto a single PCI-Express GPU card. It looks like the CGX platform has two of these and a lot of airspace.

The OVX platform, which was unveiled at this year’s GPU Technical Conference, will have a pair of Grace CPUs and unspecified graphics GPUs with AI inference capabilities for running Nvidia’s Omniverse digital twin platform, as well as BlueField-3 DPUs with integrated ConnectX-7 network interfaces. The current OVX testbed. (Don’t assume those GPUs will be Hopper GPUs just yet. . . . ) The current OVX servers have an unspecified CPU complex, eight A40 GPUs, three ConnectX-6 Dx NICs with a 200 Gb/sec network port, 1 TB of main memory, and 16 TB of flash storage. Eight OVX servers make a pod, and four pods linked with 200 Gb/sec Spectrum-3 Ethernet switches make a SuperPOD. Inspur, Lenovo, and Supermicro will be shipping OVX iron by the end of the year, and presumably Nvidia will sell some directly as well.

The two Nvidia server platforms on the right are variants of the HGX platforms that Nvidia has been selling as nearly finished preassemblies of CPUs, GPUs, and interconnects for the past several generations of its GPU accelerators for the datacenter.

These new HGX variants using the Grace chip come in two flavors, and there is not a special sub-designation for each but might we suggest HGX-C and HGX-CG because one is for CPU-only systems aimed at traditional HPC simulation and modeling workloads that have not been ported to CUDA and GPU acceleration and the other is for hybrid CPU-GPU systems that have a combined Grace-Hopper package. Both of these come with a BlueField-3 DPU and assume that the OEM will supply an interconnect adapter that reaches out into the network.

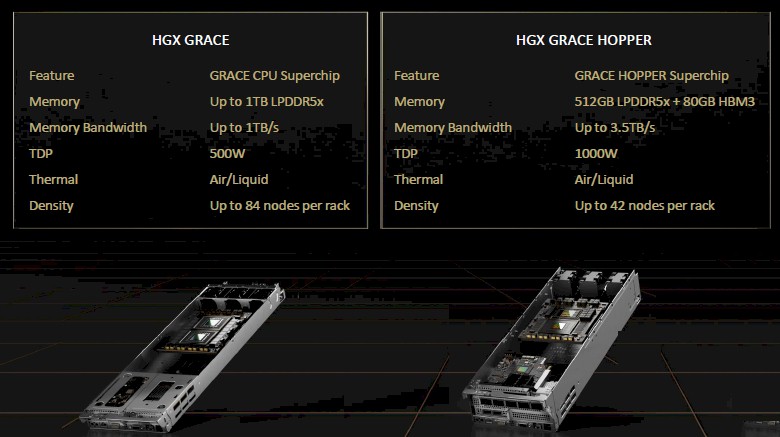

Here are the general feeds and speeds of these new HGX reference systems:

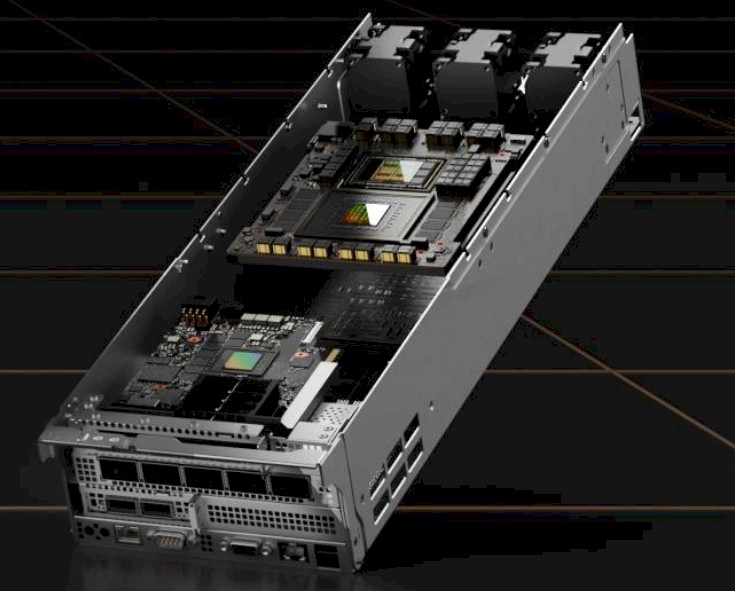

These server nodes are 2U high, and each sled has room for a pair of Grace CPU superchips. Here is a zoom in on the HGX Grace sled:

And here is what the server with two sleds looks like:

There are four nodes, each with a Grace superchip, in the chassis, although it is hard to see that. The compute and memory is burning 2,000 watts.

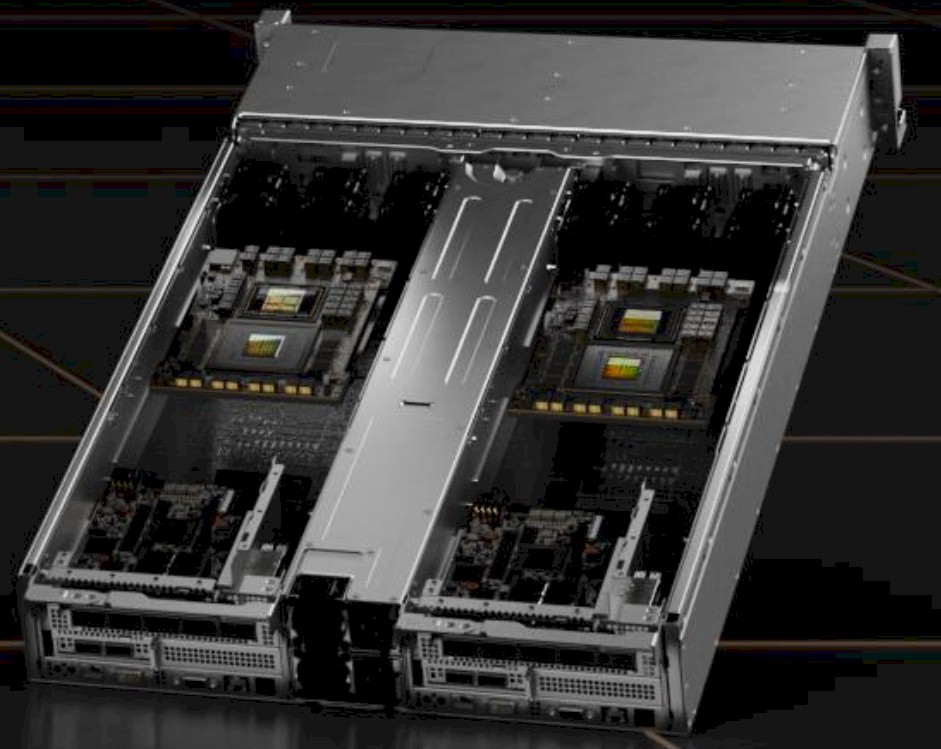

The Grace-Hopper version of the new HGX platform has room for only two Grace-Hopper units in a 2U chassis, and that is because of the limits of power draw and heat. The Grace-Hopper superchip will draw 1,000 watts each, so there is only room in the thermal and power budget for two – and they are twice as high as well.

Here is the HGX Grace-Hopper sled:

And here is what the server looks like loaded with two sleds:

The interesting bit is that the HGX Grace-Hopper system will allow for the NVSwitch fabric to be added to the system to create 256-GPU clusters with shared memory across those GPUs and still have CPUs for compute and data management and DPUs to handle virtualization and security.

Companies that want to sell these CGX, OVX, and HGX platforms can partner with Nvidia to get access to the reference design specifications, which shows companies Nvidia’s own Grace-Grace and Grace-Hopper motherboard designs and gives them the details they need to make modifications if they are so inclined. So far, there are six server makers that have signed up to make these HGX machines, including ASUS, Foxconn, Gigabyte, Quanta, Supermicro, and WiWynn. (The usual suspects.) They are expected to start shipping the new HGX machines in the first half of 2023. There will be reference architectures with X86 servers, too. This is not just about Grace, even though that is what the presentation is showing.

Going With The Flow

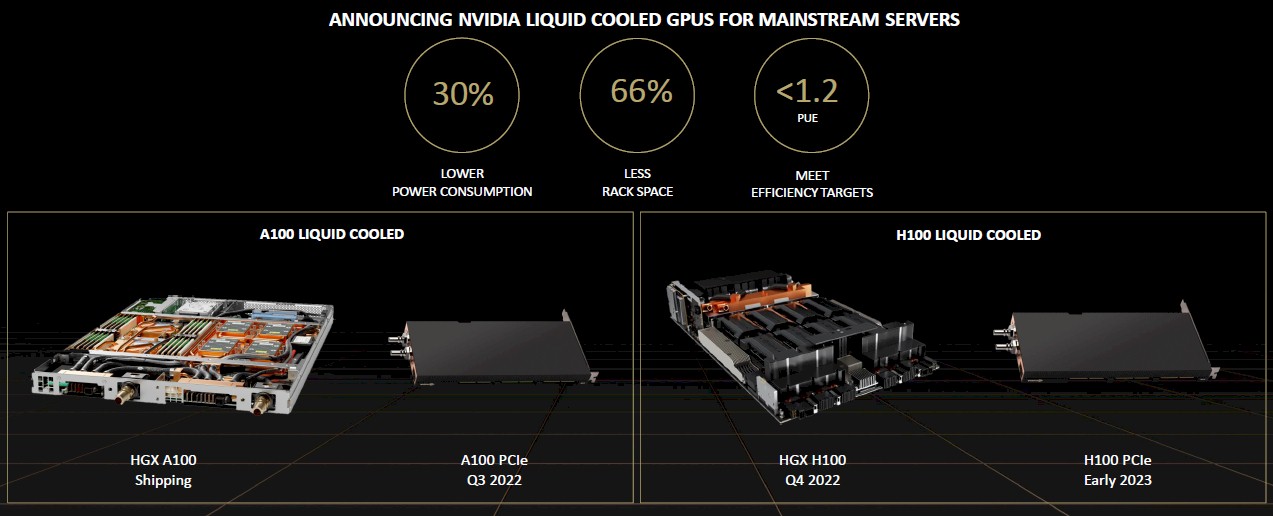

With the wattages of compute engines rising fast as system architects hit the Moore’s Law wall, it is no surprise that liquid cooling is making a comeback in the datacenter. Nvidia is adding liquid cooling on its HGX designs and on PCI-Express versions of its A100 and H100 GPU accelerators to get the heat off the devices in a more efficient manner and drive energy efficiency in the datacenter.

Kharya says that about 40 percent of the total electricity in the datacenter is used to cool the gear, and this liquid cooling on hot components in the system – CPUs, GPUs, memory, and DPUs – is meant to remove heat more efficiently than is possible using air cooling. Air, of course, is a terrible medium to move heat or cooling around, but it is a universal medium and hence replaced water cooling on high-end systems decades ago.

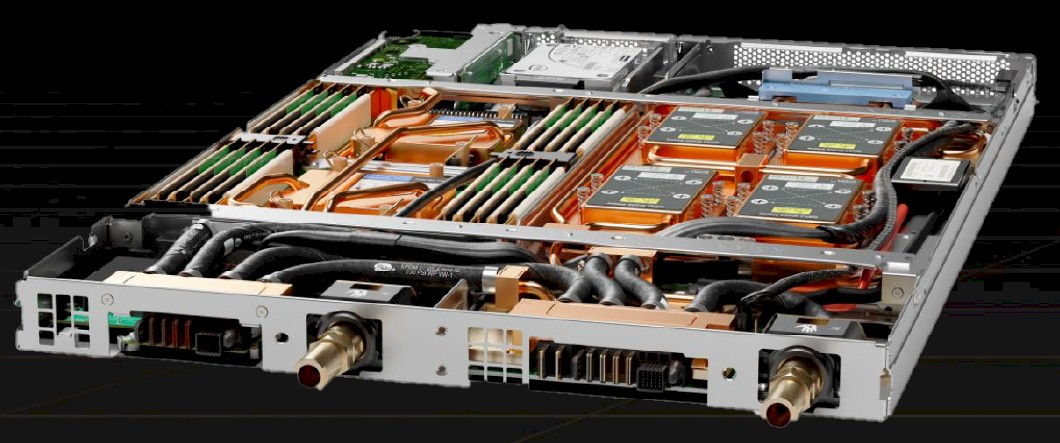

Here is a zoom in on the liquid cooled HGX A100 node that Nvidia is already shipping, and it is our guess that it was designed in conjunction with one or more of the hyperscalers and cloud builders:

As you can see in the image above, there are two CPUs on the left and they are linked, very likely with PCI-Express switching but possibly just through PCI-Express ports, to four A100 GPUs on the right.

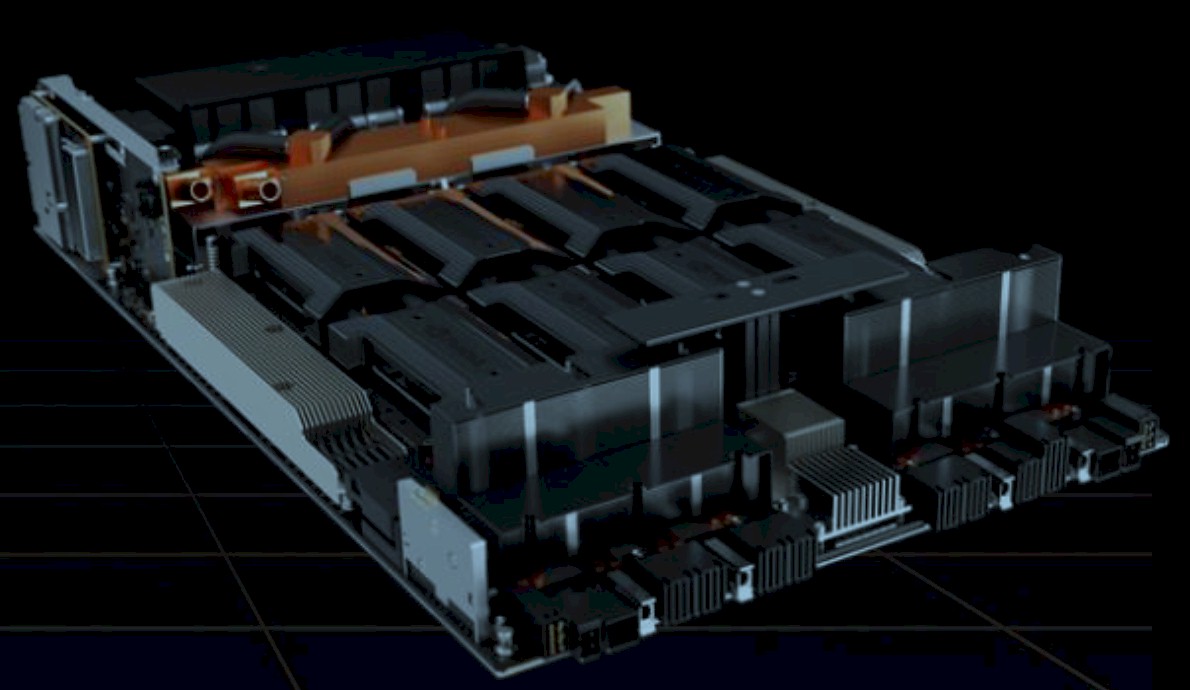

Here is a mock-up of the future water-cooled HGX system:

This looks like an eight GPU system board that would be mated with a server and interconnect system board, as is done with the existing HGX and DGX systems based on the A100 accelerators.

The liquid cooling used in these machines is not designed to allow for overclocking, but rather to allow it to sustain the GPUBoost speed built into the GPUs and presumably something that will be called CPUBoost for the Grace processor for a longer period of time. This is not about additional overclocking but making the energy use in the datacenter drop.

The liquid cooling is also about increasing the compute density of a server. The liquid cooled A100 and H100 accelerators in the PCI-Express form factor will only require one slot because they do not have a giant heat sink on them. The regular A100 and H100 PCI-Express cards require the space of two physical slots each. So in theory, you could cram more GPUs into a box with liquid cooling or make a smaller box with the same number of GPUs.

Be the first to comment