Wouldn’t it be funny if Google ends up being the stalwart supporter of the X86 architecture among the hyperscalers and cloud builders?

Amazon Web Services has been pushing its Graviton line for the past several years, and has had Graviton2 in production since March last year and is still previewing its Graviton3 chip, which it unveiled last November but which is not yet in full production – meaning with feeds, speeds, and prices – across AWS regions and datacenters.

Now Microsoft is getting into the game with new instances on the Azure cloud, also in preview, that are based on the “Quicksilver” Altra Arm server chips made by Ampere Computing, which debuted almost two years ago in June 2020 with up to 80 cores running at a top speed of 3 GHz. While much noise has been made this week about the new Azure D-series and E-series instances that use these Ampere Computing Altra processors, the wonder is why Microsoft didn’t deliver these a year ago – or even earlier – and why it is also not deploying the 128-core “Mystique” Altra Max follow-ons, which were supposed to be sampling in Q4 2020 and available starting last fall. The good news for Microsoft is that the Mystique processors are socket compatible with the Quicksilver chips it is now deploying, so as soon as it can get its hands on them, it can roll the Altra Max chips into its supply chain, into its servers, and into its datacenters.

We do not expect that the future “Siryn” chips will be socket compatible with Altra. Siryn may not use the Altra brand, and it is based on a homegrown core we have dubbed “A1” because Ampere Computing doesn’t understand that people need code names and final product names so they can speak efficiently about technology as it is developing and as it is delivered. (A synonym or two is a beautiful thing for both readers and writers.) In our Ampere Computing roadmap story from last May, we pointed out that hyperscalers and cloud builders buy roadmaps, not point products, and companies like Oracle, Tencent, Alibaba, and now Microsoft are buying Altra chips for their public clouds because they believe Ampere Computing will get the next generation chip, which we are calling “Polaris” in the absence of a name and which has a new A2 core to boot, out the door in 2023 as expected.

Just because Ampere Computing gets it out the door does not mean it can ship it in huge volumes immediately or that hyperscalers and cloud builders can just instantly drop 100,000 machines in their clouds. Volume production, qualification, and construction all take time, and these phase changes in the datacenter will take five to ten years before they become material. But we see no reason that Arm server chips cannot reach the 20 percent to 25 percent goal that Arm Holdings set so long ago – provided everything is working well.

Microsoft has made no secret of its desire to see Arm server chips represent a lot of its capacity on the Azure cloud. One presentation from the Open Compute Summit in 2017 said the goal was for Arm servers to be 50 percent of server compute capacity. But Microsoft did not commit to making Arm servers available on the infrastructure cloud portion of Azure (as opposed to the platform and software cloud portions, where users are buying access to Microsoft software, not raw capacity), and it similarly did not promise to make the Windows Server stack available on Arm, although it was using Windows-on-Arm internally for homegrown apps hosted on Azure. And when Microsoft unveiled its “Project Olympus” designs at that same OCP Summit in 2017, both Cavium ThunderX server chips and Qualcomm Centriq server chips were previewed on those servers. But Qualcomm pulled the plug on Centriq, and Marvell bought Cavium and after a while pulled the plug on ThunderX3. That left Microsoft with a choice to either design its own Arm server chip or help Ampere Computing establish itself. (Many of us think Microsoft will eventually do both.)

It would be interesting to know how much ThunderX2 iron was deployed inside of Azure (our guess is not very much or Marvell would have kept it going), and how much Ampere Computing Altra gear is in Azure now (our guess is probably a lot more). The fact that Microsoft is making Arm capacity available on the infrastructure cloud – running Linux operating systems inside of VMs, but not Windows Server as far as we know, though that could change – is significant. We need to view this as a stake in the ground, and a statement from Microsoft that it is not going to let AWS define the future of Arm servers in the datacenter all by its lonesome.

The Feeds And Speeds Of Microsoft’s Arm Azure Instances

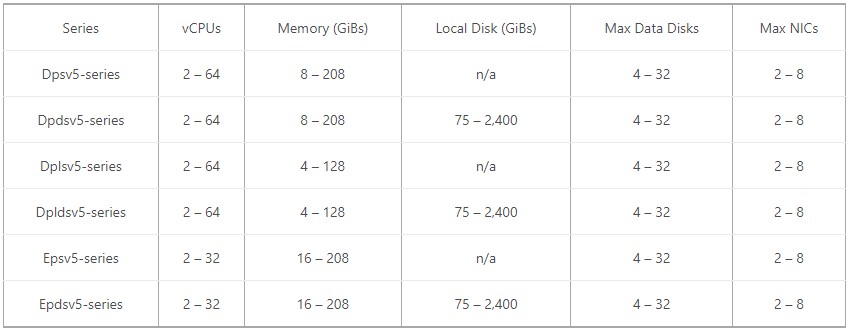

Because these Quicksilver Arm instances are in tech preview, we know a lot less about them than we would like, but the minute they come off preview and the minute the AWS Graviton3 instances come off preview, you can bet the last two dollars of Bill Gates and Jeff Bezos that we will be putting together a price/performance analysis comparing these two styles of Arm server instances to each other as well as to the X86 iron these big clouds – the two biggest clouds in the world – sell. In fact, we built the spreadsheets to do the comparisons assuming the feeds, speeds, and prices would be available now. Microsoft, to its credit, has revealed the six Arm server instance types it has launched and some basic salient characteristics:

First of all, with eight DDR4 memory controllers per chip, the Altra CPUs can have a lot more memory than Microsoft is configuring them with. And the Altra CPUs also support NUMA clustering for creating a bigger memory address space and compute engine. It is not clear if these Azure instances are based on single-socket or dual-socket designs.

The first thing we wanted to know, and which Microsoft has not answered, is why these instances top out at 32 cores and 64 cores when the Altra chips go up to 80 cores. It could be that they are single-socket servers in systems without a DPU to offload network and storage virtualization, so some of the cores are being burned running the hypervisor stack. It could be that the Microsoft servers have a DPU in them and that the 32-core and 64-core parts are the sweet spots in terms of volumes and pricing from Ampere Computing. Whatever the cause, there is a reason for not running the instances all the way up, and we suspect it is one of these two scenarios. (Or, if the Hyper-V hypervisor running on Arm is really inefficient, it could be a little of both.)

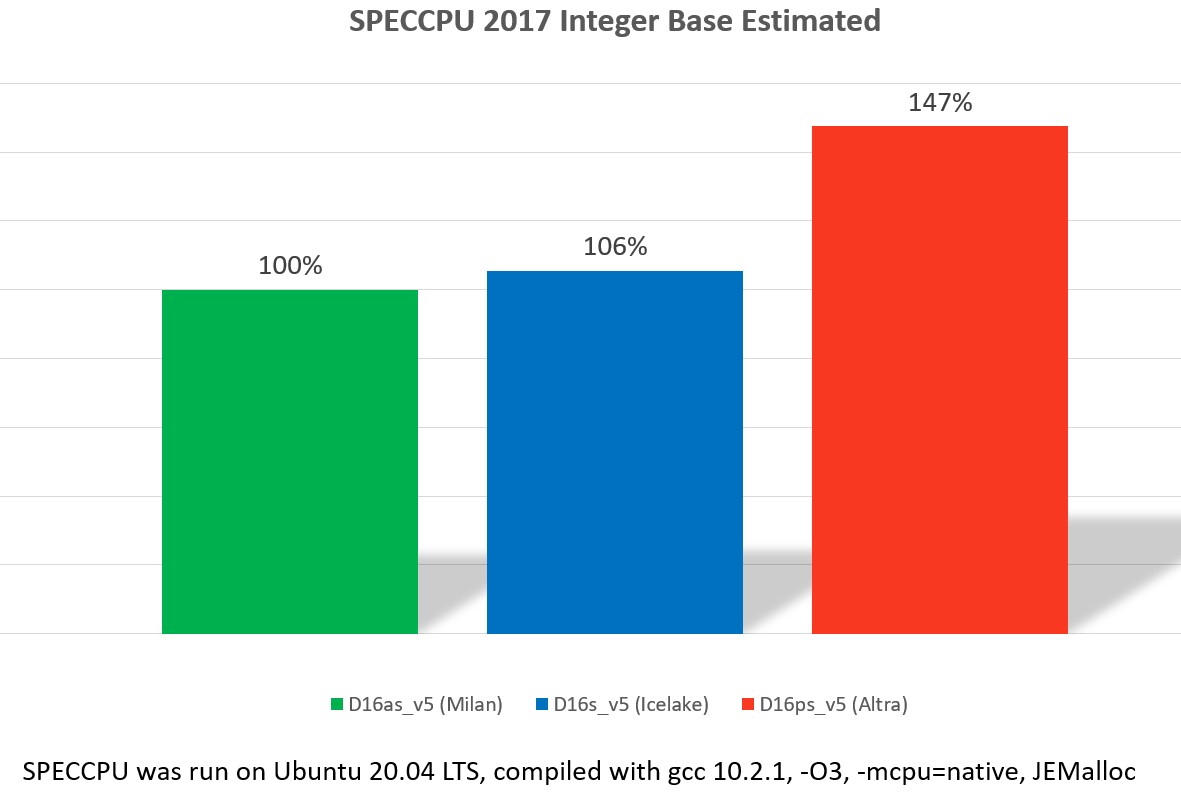

To help get a feel for how the different Azure Arm server instance SKUs stack up, we put together this table, which includes the cost per hour for on-demand instances. (Spot pricing is also available, but we find this less interesting as a common metric across clouds.) Just for fun, and to give you a sense of what it costs to run these instances for as long as a physical server tends to stick around in a hyperscale and cloud datacenter, we calculated the cost of each instance over three years. We will eventually use this as the basis for cross-cloud and intracloud price/performance comparisons. (Four years is probably more accurate these days when it comes to server longevity in the clouds.)
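The three-year figure is simple arithmetic: the on-demand hourly rate times the number of hours in three years of around-the-clock operation. Here is a minimal sketch of that calculation in Python; the hourly rate is a hypothetical placeholder, not an actual Azure price, and the sketch ignores leap days and any discounts.

```python
# Hours in one year, assuming 365 days (leap days ignored).
HOURS_PER_YEAR = 365 * 24


def lifetime_cost(hourly_rate: float, years: int = 3) -> float:
    """Undiscounted on-demand cost of running one instance around the clock."""
    return hourly_rate * HOURS_PER_YEAR * years


if __name__ == "__main__":
    rate = 0.10  # hypothetical dollars per hour, not an actual Azure price
    print(f"3-year cost: ${lifetime_cost(rate):,.2f}")
    print(f"4-year cost: ${lifetime_cost(rate, years=4):,.2f}")
```

At that hypothetical $0.10 per hour, the instance costs $2,628 over three years and $3,504 over four, which is why the assumed server lifetime matters so much to these comparisons.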

The prices on Azure scale linearly with the number of virtual CPUs, or vCPUs, at least in this product line. The cost of the underlying chips may not – and the absolute performance from each instance on any given workload may not scale linearly, either. And thus, don’t assume that price/performance is constant across the instances. It is not, and it certainly was not with the Graviton2 and the X86 instances that AWS compared them to, as we showed this time last year.
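To see why linear pricing does not imply constant price/performance, consider a toy model. This sketch assumes a flat per-vCPU hourly rate and a made-up performance curve in which throughput grows as the vCPU count raised to a power; both the rate and the exponent are hypothetical, not measured Azure or SPEC figures.

```python
# Hypothetical inputs: neither number is a published Azure or SPEC figure.
PRICE_PER_VCPU_HOUR = 0.04  # assumed flat per-vCPU hourly rate (linear pricing)
SCALING_EXPONENT = 0.9      # assumed: performance grows as vcpus ** 0.9


def hourly_price(vcpus: int) -> float:
    """Linear pricing: the hourly price is proportional to the vCPU count."""
    return PRICE_PER_VCPU_HOUR * vcpus


def relative_performance(vcpus: int, exponent: float = SCALING_EXPONENT) -> float:
    """Toy throughput model; an exponent of 1.0 means perfectly linear scaling."""
    return vcpus ** exponent


def cost_per_unit_perf(vcpus: int, exponent: float = SCALING_EXPONENT) -> float:
    """Hourly price divided by relative performance."""
    return hourly_price(vcpus) / relative_performance(vcpus, exponent)


if __name__ == "__main__":
    for n in (2, 4, 8, 16, 32, 64):
        linear = cost_per_unit_perf(n, exponent=1.0)
        sublinear = cost_per_unit_perf(n)
        print(f"{n:>2} vCPUs  linear: {linear:.4f}  sublinear: {sublinear:.4f}")
```

With an exponent of 1.0, the cost per unit of performance is the same at every size; with any exponent below 1.0, it creeps up as the instance gets bigger, even though the price per vCPU never changes.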

The Dpsv5 series instances on Azure, according to Microsoft, are designed to run Web servers, application servers, Java and .NET applications, open source databases, and gaming and media servers. The Dpsv5 instances have 4 GB per core and no local disk, while the Dpdsv5 instances have 4 GB per core and local storage that scales with the cores. The Dplsv5 instances have 2 GB per core, and the Dpldsv5 instance is the same but with local storage. All four of these instance types scale from 2 to 64 cores. The Epsv5 instances have 8 GB per core but only scale to 32 cores, and the Epdsv5 instances add local storage. (If you can keep those names straight, good for you.)
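For those who cannot keep the names straight, the rules in the paragraph above can be captured in a small decoder. This is a sketch that encodes only what is described here (memory per core, local storage, and the maximum size of each family); the class and function names are our own, and it checks only the 2-to-64 or 2-to-32 core range, not the specific sizes Azure actually sells.

```python
import re
from dataclasses import dataclass

# Rules from the descriptions above: the D-series gets 4 GB per core (2 GB with
# the "l" for low memory), the E-series gets 8 GB per core, and a "d" means
# local storage. The D-series scales to 64 cores and the E-series to 32.
GB_PER_CORE = {("D", False): 4, ("D", True): 2, ("E", False): 8}
MAX_CORES = {"D": 64, "E": 32}

# Accepts forms such as "D16psv5", "D16ps v5", and "D16ps_v5".
NAME_RE = re.compile(r"^([DE])(\d+)p(l?)(d?)s[_ ]?v5$")


@dataclass(frozen=True)
class ArmInstance:
    family: str
    cores: int
    memory_gb: int
    local_storage: bool


def parse_instance(name: str) -> ArmInstance:
    """Decode an Azure Arm v5 instance name; raises ValueError if it is not one."""
    m = NAME_RE.match(name)
    if m is None:
        raise ValueError(f"not a recognized Azure Arm v5 instance name: {name!r}")
    family, cores_text, low_mem, disk = m.groups()
    cores = int(cores_text)
    low = low_mem == "l"
    if (family, low) not in GB_PER_CORE:
        raise ValueError(f"no low-memory variant in the {family} family: {name!r}")
    # Only the range is checked, not the discrete sizes actually on offer.
    if not 2 <= cores <= MAX_CORES[family]:
        raise ValueError(f"{family}-series sizes run from 2 to {MAX_CORES[family]} cores")
    return ArmInstance(family, cores, cores * GB_PER_CORE[(family, low)], disk == "d")


def is_valid_name(name: str) -> bool:
    """True if parse_instance accepts the name."""
    try:
        parse_instance(name)
    except ValueError:
        return False
    return True
```

So `parse_instance("D16psv5")` decodes to 16 cores, 64 GB, and no local disk, while `is_valid_name("D16pdv5")` returns `False`, because that name is missing the "s" that every one of these six instance types carries.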

The instances without local storage cost less, and all of the different external flash storage options that Microsoft offers on Azure are available to these Arm instances. These instances have from 2 to 8 network interfaces. Microsoft says that it offers “up to 40 Gb/sec” of networking, and that must be per virtual NIC in some way. The number of virtual NICs probably scales inversely with the bandwidth per virtual NIC, and our guess is the pipe is only 100 Gb/sec into each physical server. But with two Ethernet ports, it could be 200 Gb/sec. We shall see.

Here is an important thing, and we have discussed this many times with Ampere Computing and have heard the same thing from AWS with regard to the Graviton line of chips: A set of real cores with static clock speeds is better than a set of virtual cores with variable clock speeds when it comes to deterministic performance.

With the Ampere Computing Altra chips, there is no simultaneous multithreading, or SMT for short, so a vCPU is an actual CPU core. And when it comes to Altra clock speeds, they are locked at one speed, and there is none of this variable clock speed depending on the number of cores in use at any given time. The premise of the cloud is that servers are sliced up in a zillion ways with a million customers and every core is kept busy, so there is not much of a chance of turbo boosting a core or set of cores for very long because a new workload will come around any second now. The design point that Ampere Computing has – and this is after listening to hyperscalers and cloud builders – is to make the CPU more deterministic. That means keeping the clock speed constant and not mucking around with virtual threads on cores, which can cause contention.

Not always, mind you, and this is also important. With HPC workloads, SMT is often turned off because the overhead of managing virtual threads actually can slow down overall performance. With workloads that like lots of threads – transaction processing monitors and relational databases, for instance – and where there is not a lot of contention across the caches, the cores can go like crazy. For HPC-style workloads, Intel’s HyperThreading, or HT, implementation of SMT can cause performance degradation of 10 percent to 20 percent, according to anecdotal evidence we have seen over the years. On the other end of the spectrum, take IBM’s “Cumulus” Power10 processor in the Power E1080 server. A full-bore, 240-core system has a relative performance according to IBM’s rPerf benchmark test (an I/O unbound variant of the TPC-C online transaction processing test) of 2,250.8 with one thread activated per core. As virtual threads are turned on – 2 per core, then 4 per core, then 8 per core – the performance scales by 2X, 2.8X, and 3.6X. (Each step delivers less: the SMT2 jump is 100 percent, the SMT4 jump is 40 percent, and the SMT8 jump is about 29 percent.)
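The Power10 numbers make the diminishing returns easy to see. This sketch uses only the rPerf figures quoted above and, for each SMT level, computes the absolute rPerf, the percentage gain over the previous step, and the throughput per hardware thread relative to SMT1.

```python
# Power E1080 rPerf figures quoted above: 2,250.8 at SMT1, then scaling
# factors of 2X, 2.8X, and 3.6X at SMT2, SMT4, and SMT8.
SMT1_RPERF = 2250.8
SCALING = {1: 1.0, 2: 2.0, 4: 2.8, 8: 3.6}


def smt_table(base: float = SMT1_RPERF, scaling: dict[int, float] = SCALING):
    """Yield (threads per core, rPerf, gain over previous step, per-thread throughput)."""
    previous = None
    for threads in sorted(scaling):
        factor = scaling[threads]
        step_gain = None if previous is None else factor / previous - 1.0
        yield threads, base * factor, step_gain, factor / threads
        previous = factor


if __name__ == "__main__":
    for threads, rperf, gain, per_thread in smt_table():
        gain_text = "   n/a" if gain is None else f"{gain:+6.1%}"
        print(f"SMT{threads}: rPerf {rperf:8.1f}  step gain {gain_text}  per-thread {per_thread:.2f}")
```

At SMT8, each hardware thread delivers only 0.45 of what a lone thread on a core does, which is exactly why the same vCPU count can mean very different amounts of work depending on whether SMT is on.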

The point is, the effects of SMT are complicated, and every cloud using X86 iron turns it on for a lot of instances – we are not sure that it is universal – to boost the granularity of their vCPUs. It gives them smaller slices and more SKUs, and they can make servers using middle bin X86 CPUs look like they are bigger than perhaps they really are. It would be nice if they told customers when SMT was running, and nicer still if they gave them a bare metal option if they didn’t want it. (Many do for just the reasons outlined above.)

Now, let’s talk about performance. In a blog post announcing the Arm-based Azure instances, Paul Nash, head of product for the Azure Compute Platform, said this: “The new VM series include general-purpose Dpsv5 and memory-optimized Epsv5 VMs, which can deliver up to 50 percent better price/performance than comparable X86-based VMs.”

This statement was rather vague, and the term is “price/performance” because it is a division, not a product. So we fixed the Microsoft quote above to be accurate.

Anyway, the Microsoft statement does not tell us anything specific about performance or price/performance.

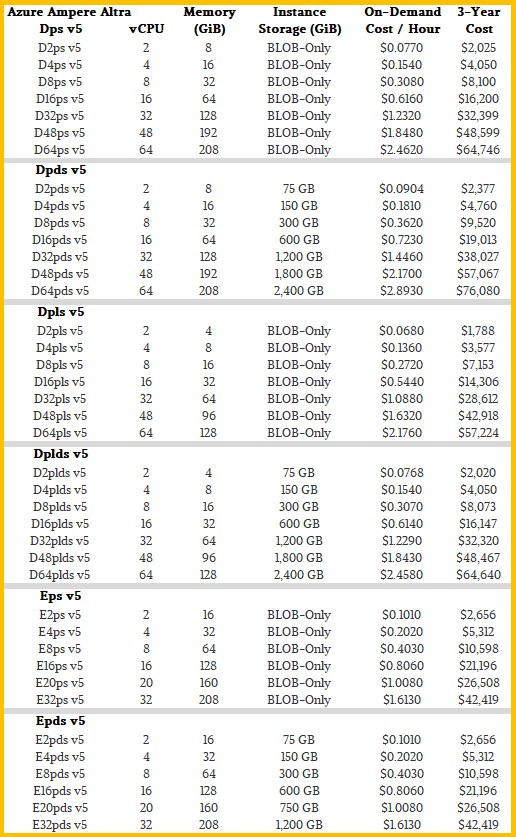

This statement from Jeff Wittich, chief product officer at Ampere Computing, moved the ball a little bit forward: “Ampere Altra VMs outperform equivalently sized Intel and AMD instances from the same generation by 39 percent and 47 percent, respectively.* In addition to being the high-performance choice, Ampere Altra processors are extremely power efficient, directly reducing users’ overall carbon footprint.”

The footnote where that asterisk above led says this: “Based on est. SPEC CPU 2017 Integer Base for D16ps v5, D16s v5, D16as v5 Azure VMs running on Ubuntu 20.04 LTS and compiled with gcc 10.2.1, -O3, -mcpu=native, JEMalloc”

Closer, but still unsatisfactory, because the footnote does not say which of the two SPEC CPU 2017 integer suites was run. SPECrate2017 Integer is the throughput test that CPUs are commonly gauged against. (There is a base result, which means all SPEC modules are compiled using the same compiler flags, and a peak result, which says use whatever compiler options make it scream.) With SPECrate, testers run multiple copies of the SPEC tests at once and see how much throughput they can get through a chip. With the SPECspeed2017 test, one copy of each benchmark in the suite is run in a series, testers get to choose how many OpenMP threads to use, and the result is a measure of time: how quickly the complete set of single tests can be run.
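For readers who have not stared at SPEC reports, here is a minimal sketch of how the two kinds of SPEC CPU 2017 ratio are formed, assuming the standard definitions: a speed ratio is the reference machine's time divided by the measured time, a rate ratio multiplies that by the number of copies run at once, and the suite score is the geometric mean of the per-benchmark ratios. The timings in the example are made up, and the official tools also apply run-selection and reporting rules that this sketch ignores.

```python
from math import prod


def speed_ratio(reference_seconds: float, elapsed_seconds: float) -> float:
    """SPECspeed-style ratio: one copy; higher means it finished faster."""
    return reference_seconds / elapsed_seconds


def rate_ratio(copies: int, reference_seconds: float, elapsed_seconds: float) -> float:
    """SPECrate-style ratio: many copies run at once, so throughput counts."""
    return copies * reference_seconds / elapsed_seconds


def overall_score(ratios: list[float]) -> float:
    """Suite score as the geometric mean of the per-benchmark ratios."""
    return prod(ratios) ** (1.0 / len(ratios))


if __name__ == "__main__":
    # Made-up timings for three benchmarks, 16 copies each (illustrative only).
    reference = [1000.0, 1500.0, 2000.0]
    elapsed = [400.0, 500.0, 800.0]
    ratios = [rate_ratio(16, r, e) for r, e in zip(reference, elapsed)]
    print(f"per-benchmark rate ratios: {ratios}")
    print(f"overall: {overall_score(ratios):.1f}")
```

The geometric mean keeps one unusually fast benchmark from dominating the suite score, which is one reason a single SPEC number hides so much about any particular workload.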

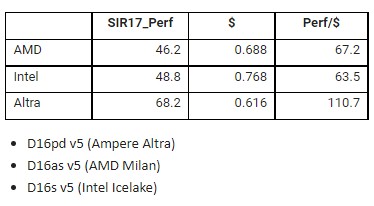

We reached out to Wittich expressing frustration at such vagaries, and he gave us the actual data used in one of the comparison references in the short blog Ampere Computing put out about the Azure instances:

And here is the table that brings together the raw SPECrate2017 Integer performance of these instances, which are based on the latest CPUs from AMD, Intel, and Ampere Computing, and the cost per hour of these instances. Because of the relative size of the two sets of numbers, Wittich divided the performance by the cost, which tells you how much SPEC Integer throughput you get for a buck across the three.

The table above has a typo, which just goes to show how difficult these Microsoft instance names are. It was the Altra D16psv5 instance, not the Altra D16pdv5, that was tested. (The latter has 600 GB of flash and it costs more.)

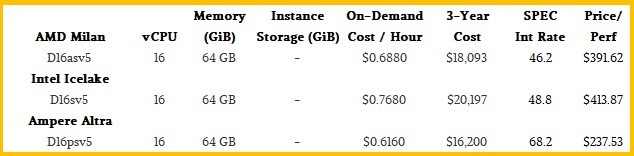

We like to think in terms of cost per unit of performance, not performance per unit of money, so we flipped the data around and calculated a three-year cost (without discounts) for the three different instances tested by Ampere Computing using SPEC’s Integer rate test:

This, we think, gives you a better sense of what an instance on the cloud costs if you intend to use it for a long time. You get the same percentages as Wittich calculated. Now, what happens if you could turn off SMT on the AMD and Intel chips? Well, if vCPU count is what you are thinking about, you would have to buy AMD and Intel instances that were twice as large. They would have 16 cores, and those cores would be a lot more powerful and would do a lot more work. But that is not the measure, really.
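Flipping performance per dollar into cost per unit of performance is just a reciprocal, but it changes how a percentage gap reads. In this sketch, the function names are our own and the score and hourly rate in the example are hypothetical; the point is that an edge of X in throughput per dollar becomes a saving of 1 - 1/(1 + X) in cost per unit of throughput.

```python
# Three years of around-the-clock hours, leap days ignored.
HOURS_3_YEARS = 3 * 365 * 24


def perf_per_dollar(score: float, hourly_rate: float) -> float:
    """Performance per unit of money: SPEC score per dollar of hourly price."""
    return score / hourly_rate


def three_year_cost_per_unit(score: float, hourly_rate: float) -> float:
    """Money per unit of performance: undiscounted three-year cost per SPEC point."""
    return hourly_rate * HOURS_3_YEARS / score


def advantage_to_savings(advantage: float) -> float:
    """Turn 'X more throughput per dollar' (0.5 = 50 percent) into 'fraction less cost per unit'."""
    return 1.0 - 1.0 / (1.0 + advantage)


if __name__ == "__main__":
    score, rate = 60.0, 0.50  # hypothetical SPEC score and hourly rate
    print(f"{perf_per_dollar(score, rate):.1f} points per dollar-hour")
    print(f"${three_year_cost_per_unit(score, rate):,.2f} per point over three years")
    for edge in (0.20, 0.50):
        print(f"{edge:.0%} more per dollar -> {advantage_to_savings(edge):.1%} less per unit")
```

So a 50 percent edge in throughput per dollar is a one-third lower cost per unit of throughput, and a 20 percent edge is roughly a 16.7 percent lower cost per unit.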

What we think is more reasonable is to step back to eight real cores on the AMD and Intel chips and boost the performance by between 10 percent and 20 percent and see what happens to the bang for the buck. If you do that, then the SPECrate Integer performance of the AMD “Milan” Epyc 7003 instance would be between 50.8 and 55.4, and that of the Intel “Ice Lake” Xeon SP instance would be between 53.7 and 58.6. The Altra instance still has a lower price – 10.5 percent lower than the Milan and 19.8 percent lower than the Ice Lake – and it still has higher performance – between 23 percent and 34.2 percent more than the Milan and between 16.5 percent and 27 percent more than the Ice Lake. And thus, the price/performance advantage to the Altra chip is still significant – about a third less expensive per unit of performance, generally speaking.
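The adjustment above can be reproduced in a few lines. The SMT-on scores for the Milan and Ice Lake instances and the Altra score are not quoted in the text, so the values below (46.2, 48.8, and 68.2) are approximations back-computed from the ranges and percentages in this paragraph, not the measured figures; the 10 percent to 20 percent uplift for turning off SMT is the same rough assumption used above.

```python
# Approximate inputs, back-computed from the ranges and percentages in the text
# (not the measured SPECrate2017 Integer figures, which are in the table).
MILAN_SMT_ON = 46.2
ICE_LAKE_SMT_ON = 48.8
ALTRA = 68.2

# Altra's hourly price as a fraction of each rival's: 10.5 and 19.8 percent lower.
ALTRA_PRICE_RATIO = {"Milan": 1 - 0.105, "Ice Lake": 1 - 0.198}


def smt_off_range(smt_on_score: float, low: float = 0.10, high: float = 0.20) -> tuple[float, float]:
    """Assumed 10 to 20 percent uplift from eight real cores with SMT turned off."""
    return smt_on_score * (1 + low), smt_on_score * (1 + high)


def altra_savings(rival_score: float, price_ratio: float, altra_score: float = ALTRA) -> float:
    """Fraction by which Altra's cost per unit of performance undercuts the rival's."""
    perf_ratio = altra_score / rival_score
    return 1 - price_ratio / perf_ratio


if __name__ == "__main__":
    for name, smt_on in (("Milan", MILAN_SMT_ON), ("Ice Lake", ICE_LAKE_SMT_ON)):
        low, high = smt_off_range(smt_on)
        ratio = ALTRA_PRICE_RATIO[name]
        print(
            f"{name}: SMT off {low:.1f} to {high:.1f}; Altra cost per unit lower by "
            f"{altra_savings(high, ratio):.0%} to {altra_savings(low, ratio):.0%}"
        )
```

With these approximate inputs, the Altra cost per unit of performance comes out roughly 27 percent to 33 percent lower than the Milan instance and roughly 31 percent to 37 percent lower than the Ice Lake instance, which is where "about a third" comes from.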

As Google told us seven years ago, it would be willing to change CPU architectures to get a sustainable 20 percent price/performance advantage.

One last thing. Microsoft is supporting Canonical Ubuntu Linux, CentOS, and Windows 11 Professional and Enterprise Editions on the Arm instances, with support for Red Hat Enterprise Linux, SUSE Linux Enterprise Server, Debian, AlmaLinux, and Flatcar coming next. No mention of Windows Server.

We look forward to seeing performance numbers for AWS Graviton and Azure Altra instances, and seeing how this all plays out. This is what was supposed to happen more than a decade ago. Real competition, on more than one front.
