It may not seem like it, but Oracle is still in the high-end server business, at least when it comes to big machines running its eponymous relational database. In fact, the company has launched a new generation of Exadata database servers, and the architecture of these machines shows what is – and what is not – important for a clustered database to run better. At least one based on Oracle’s software stack.

The Exadata X1 database appliances debuted formally in September 2008, but Oracle had been shipping them to selected customers for a year, which was just as the Great Recession was getting going and large enterprises were spending a fortune on big NUMA servers and storage area networks (SANs) with lots of Fibre Channel switches to link the compute to the storage. They were looking for a way to spend less money, and Oracle worked with Hewlett Packard’s ProLiant server division to craft a cluster using commodity X86 servers, flash-accelerated storage engines, and an InfiniBand interconnect that made use of low-latency Remote Direct Memory Access (RDMA) to tightly couple nodes for both running the database and the storage. Networking to customers was provided by Ethernet network interfaces. In a sense, Oracle was using InfiniBand as a backplane, and this is why it took a stake in Mellanox Technologies way back then.

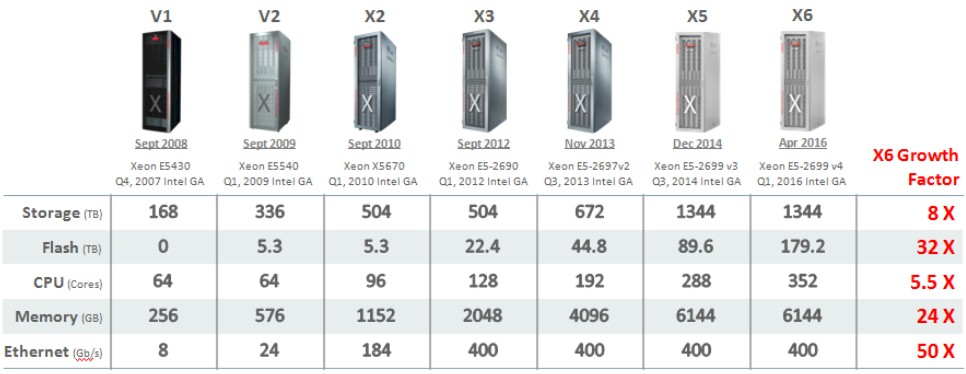

After that experience and after learning that IBM was pondering a $6.85 billion acquisition of Unix system powerhouse Sun Microsystems, Oracle co-founder and chief executive officer Larry Ellison caught “hardware religion” and in April 2009 made a $7.4 billion bid to acquire Sun, and in January 2010 the deal got done. By September 2009, Ellison was so sure the deal would be approved by regulators that the HP iron soon got unplugged from the Exadata line and replaced with Sun X86 machinery running Oracle’s Linux variant – not Sun Sparc gear running Solaris Unix. These were the second-generation Exadata V2 machines, which were followed by the Exadata X2 and so forth. By the time The Next Platform had been published for its first year in 2016, Oracle was already up to the seventh-generation Exadata X6, with the cranks turned up on compute, storage, and networking.

As you can see from the chart above, the disk and flash storage capacity, the CPU core counts, database node memory capacity, and Ethernet bandwidth into the Exadata clusters grew steadily in the first decade of products. The Exadata X7-2 and X7-8 systems were unveiled in October 2017, and Oracle had thousands of customers in all kinds of industries that had yanked out their big NUMA machines running the Oracle database (the dominant driver of Unix machines three decades ago, two decades ago, a decade ago, and today) and replaced them with Exadata iron.

In any Exadata generation, the models with the “2” designation have relatively skinny main memory and no local flash on the database servers, and the models with the “8” designation have eight times the main memory (terabytes instead of hundreds of gigabytes) per node and eight Xeon processor sockets instead of two. And starting with the Exadata X8-2 and X8-8 generation in June 2019, Oracle switched from InfiniBand to 100 Gb/sec Ethernet with RoCE extensions for RDMA to link the nodes in the cluster together, with four 10 Gb/sec or two 25 Gb/sec Ethernet ports per database node to talk to the outside world.

With the X8 generation, Exadata storage servers started to come in two flavors: a High Capacity (HC) variant mixing flash cards and disk drives and an Extreme Flash (EF) variant that had twice as many PCI-Express flash cards but no disk drives (which offered maximum throughput but much smaller capacity). Oracle also started using machine learning to automagically tune the clustered database – just the kind of thing that AI is good at and people are less good at.

That little bit of history brings us to the tenth Exadata generation from Oracle: the X9M-2 and X9M-8 systems announced last week, which are offering unprecedented scale for running clustered relational databases.

The X9M-2 database server has a pair of 32-core “Ice Lake” Xeon SP processors (so 64 cores) running at 2.6 GHz and comes with a base 512 GB of main memory upgradeable in 512 GB increments to 2 TB. The X9M-2 database server has a pair of 3.84 TB NVM-Express flash drives and another pair can be added. Again, the two-socket database node can have four 10 Gb/sec ports or two 25 Gb/sec plain vanilla Ethernet ports for linking out to applications and users, and it has a pair of 100 Gb/sec RoCE ports for linking into the database and storage server fabric.

The X9M-8 database node is for heftier database workloads that need more cores and more main memory to burn through more transactions or burn through transactions faster. It has a pair of four-socket motherboards interconnected with UltraPath Interconnect NUMA fabrics to create an eight-socket shared memory system. (This is all based on Intel chipsets and has nothing at all to do with Sun technology.) The X9M-8 database server has eight 24-core “Cascade Lake” Xeon SP 8268 processors running at 2.9 GHz, which is 192 cores and which is about 3X the throughput of the 64-core X9M-2 database node. (It is interesting that Oracle did not choose the “Cooper Lake” Xeon SP for this machine; it is good that it did not plan on “Sapphire Rapids” being ready now, but maybe all of these machines were supposed to be based on that still-not-here-yet Intel processor.) The main memory in the fat Exadata X9M-8 database node starts at 3 TB and scales to 6 TB. This database server has a pair of 6.4 TB NVM-Express cards that plug into PCI-Express 4.0 slots so they have lots of bandwidth, and the same networking options as the skinny Exadata X9M-2 database server.

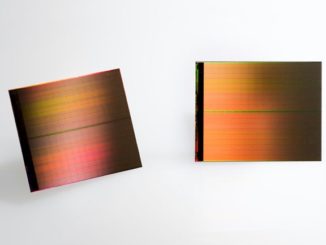

The HC hybrid disk/flash and EF all-flash storage servers are based on a two-socket server node employing a pair of Ice Lake Xeon SP 8352Y processors, which have 16 cores each running at 2.2 GHz. The HC node has 256 GB of DDR4 DRAM that is extended with 1.5 TB of Optane 200 Series persistent memory, which is configured to act as a read and write cache for main memory. The HC chassis has room for a dozen 18 TB, 7.2K RPM disk drives and four of the 6.4 TB NVM-Express flash drives. The EF chassis has the same DDR4 and PMEM memory configuration, but has no disks at all and eight of the 6.4 TB NVM-Express flash cards. Both storage server types have a pair of 100 Gb/sec ports to link into the fabric with each other and to the database servers.

The first thing to note is that even though 200 Gb/sec and 400 Gb/sec Ethernet (with RoCE support even) are available in the market and certainly affordable (well, compared to Oracle software pricing for sure), Oracle is sticking with 100 Gb/sec switching for the Exadata backplane. We would not be surprised if the company was using cable splitters to take a tier out of the 200 Gb/sec switch fabric, and if we were building a large scale Exadata cluster ourselves, we would consider using a higher radix switch and buying a whole lot fewer switches to cross connect the database and storage servers. A jump to 400 Gb/sec switchery would provide even more radix, fewer hops between devices, and fewer devices in the fabric.
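To make the radix argument a little more concrete, here is a rough sketch of how many switch tiers a non-blocking folded Clos (leaf/spine) fabric needs for a given number of server ports. The 32-port switch is purely an assumption for illustration, not a figure Oracle has disclosed, and real Exadata fabrics need not be non-blocking; the port count uses the rack figures quoted later in this article.

```python
def max_endpoints(radix: int, tiers: int) -> int:
    """Server-facing ports in a non-blocking folded Clos fabric.

    One tier is a single switch (radix ports); each extra tier multiplies
    the reach by radix / 2 because half of every switch's ports face upward.
    """
    return 2 * (radix // 2) ** tiers


def tiers_needed(endpoints: int, radix: int) -> int:
    """Smallest number of switch tiers that can attach `endpoints` ports."""
    tiers = 1
    while max_endpoints(radix, tiers) < endpoints:
        tiers += 1
    return tiers


# From the article: up to 12 racks, and a rack can hold 14 storage servers plus
# 8 two-socket database servers, each with a pair of 100 Gb/sec fabric ports.
fabric_ports = 12 * (14 + 8) * 2

# Assumption: a hypothetical 32-port switch, run natively or with every
# port split in two by breakout cables (64 links at half the speed).
for radix in (32, 64):
    print(radix, "ports per switch ->", tiers_needed(fabric_ports, radix), "tiers")
```

Under these assumptions, doubling the effective radix with splitter cables is exactly what drops the fabric from three tiers to two, which is the kind of saving the paragraph above is getting at.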

Let’s talk about scale for a second. Oracle RAC is based on technology that Compaq licensed to Oracle but that was developed for Digital VAX hardware and its VMS operating system. This VAXcluster and TruCluster clustering software was very good at clustering databases and HPC applications, and the Rdb database from Digital had good, working database clustering long before Oracle did – it is debatable whether you can call Oracle Parallel Server, which preceded RAC, a good implementation of a clustered database. It worked under some circumstances, but was a pain in the neck to manage.

The Exadata machines provide both vertical scale – coping with progressively larger databases – and horizontal scale – coping with more and more users or transactions. The eight-socket server provides the vertical scale and RAC provides the horizontal scale. As far as we know, RAC ran out of gas at eight nodes when it was trying to implement a shared database, but the modern versions of RAC, including RAC 19c launched in January 2020, use a shared nothing approach across database nodes and shared storage to parallelize processing across data sets. (There is a very good whitepaper on RAC you can read here.) The point is, Oracle has worked very hard to combine function shipping (sending SQL statements to remote storage servers) to boost analytics with a mix of data shipping and distributed data caching to boost transaction processing and batch jobs (which rule the enterprise) – all in the same database management system.
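The difference between function shipping and data shipping is easier to see in a toy model. The sketch below is not Oracle’s Smart Scan or any Exadata API; `StorageServer`, `read_all`, `scan`, and the sample table are hypothetical names invented for illustration. It only counts how many rows would have to cross the fabric in each case.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Row = Dict[str, int]


@dataclass
class StorageServer:
    """Toy stand-in for a storage server holding one slice of a table."""
    rows: List[Row]

    def read_all(self) -> List[Row]:
        # Data shipping: every row crosses the fabric to the database node.
        return list(self.rows)

    def scan(self, predicate: Callable[[Row], bool], columns: List[str]) -> List[Row]:
        # Function shipping: filter and project next to the data,
        # then return only the requested columns of matching rows.
        return [{c: row[c] for c in columns} for row in self.rows if predicate(row)]


def make_servers(n_servers: int = 3, rows_per_server: int = 1000) -> List[StorageServer]:
    """Build a made-up table spread evenly across the storage servers."""
    servers = []
    for s in range(n_servers):
        start = s * rows_per_server
        rows = [{"id": i, "amount": i % 100} for i in range(start, start + rows_per_server)]
        servers.append(StorageServer(rows))
    return servers


def big_order(row: Row) -> bool:
    return row["amount"] >= 95


servers = make_servers()

# Data shipping: pull everything, then filter on the database node.
shipped = [row for srv in servers for row in srv.read_all()]
data_result = sorted(row["id"] for row in shipped if big_order(row))

# Function shipping: each storage server filters its own slice.
returned = [row for srv in servers for row in srv.scan(big_order, ["id"])]
func_result = sorted(row["id"] for row in returned)

print("rows moved, data shipping:    ", len(shipped))
print("rows moved, function shipping:", len(returned))
print("same answer:", data_result == func_result)
```

Both paths return the same rows, but the filtered scan moves a small fraction of the data, which is why pushing the predicate down to storage helps scan-heavy analytics more than it helps small, cache-friendly transactions.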

An Exadata rack has 14 of the storage servers, with a usable capacity of 3 PB for disk, 358 TB of flash plus 21 TB of Optane PMEM for the HC storage and 717 TB of flash and 21 TB of Optane for the EF storage. The rack can have two of the eight-socket database servers (384 cores) or eight of the two-socket servers (512 cores) for the database compute. If you take some of the storage out, you can add more compute to any Exadata rack, of course. Up to a dozen racks in total can be hooked into the RoCE Ethernet fabric with the existing switches that Oracle provides, and with additional switching tiers even larger configurations can be built.
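The rack-level figures can be reproduced by multiplying out the per-server specs quoted earlier, which is a handy sanity check. The short sketch below does only that arithmetic; it uses raw device sizes, so the fact that the totals match suggests (this is our inference, not an Oracle statement) that the rack numbers are raw capacity before any redundancy overhead.

```python
STORAGE_SERVERS_PER_RACK = 14

# Per-server figures quoted in the article.
DISKS_PER_HC, DISK_TB = 12, 18          # HC: a dozen 18 TB disk drives
FLASH_PER_HC, FLASH_PER_EF = 4, 8       # 6.4 TB flash devices per server
FLASH_TB = 6.4
PMEM_TB = 1.5                           # Optane persistent memory per server

hc_disk_tb = STORAGE_SERVERS_PER_RACK * DISKS_PER_HC * DISK_TB
hc_flash_tb = round(STORAGE_SERVERS_PER_RACK * FLASH_PER_HC * FLASH_TB, 1)
ef_flash_tb = round(STORAGE_SERVERS_PER_RACK * FLASH_PER_EF * FLASH_TB, 1)
pmem_tb = STORAGE_SERVERS_PER_RACK * PMEM_TB

# Database compute: two eight-socket nodes or eight two-socket nodes.
x9m8_cores = 2 * 8 * 24
x9m2_cores = 8 * 2 * 32

print(f"HC disk:  {hc_disk_tb} TB (~{hc_disk_tb / 1000:.1f} PB)")
print(f"HC flash: {hc_flash_tb} TB, EF flash: {ef_flash_tb} TB, PMEM: {pmem_tb} TB")
print(f"Cores per rack: X9M-8 {x9m8_cores}, X9M-2 {x9m2_cores}")
```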

As for performance, a single rack with the Exadata X9M-8 database nodes and the hybrid disk/flash HC storage can do 15 million random 8K reads and 6.75 million random flash write I/O operations per second (IOPS). Switching to the EF storage, which is good for data analytics work, a single rack can scan 75 GB/sec per server, for a total of 1 TB/sec on a single rack, which had three of the eight-socket database servers and eleven of the EF storage servers.
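The aggregate scan figure is just the per-server rate multiplied by the number of storage servers, so it is worth multiplying out. The sketch below does so for both the 11-server configuration mentioned here and the 14-server rack described above; the text does not say which one the 1 TB/sec headline number is based on, so treat this as a back-of-the-envelope check and nothing more.

```python
SCAN_GB_PER_SEC_PER_SERVER = 75  # per EF storage server, as quoted above


def rack_scan_gb_per_sec(storage_servers: int) -> int:
    """Aggregate scan rate, assuming it scales linearly with server count."""
    return storage_servers * SCAN_GB_PER_SEC_PER_SERVER


for servers in (11, 14):
    total = rack_scan_gb_per_sec(servers)
    print(f"{servers} EF storage servers: {total} GB/sec ({total / 1000:.3f} TB/sec)")
```

With linear scaling, 14 storage servers land just over 1 TB/sec, while 11 servers come in somewhat below it.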

Finally, Oracle is still the only high-end server maker that publishes a price list for its systems, and it has done so for each and every generation of Exadata machines. You can see it here. A half rack of the Exadata X9M-2 (with four of the two-socket database servers and seven storage servers) using the HC hybrid disk/flash storage costs $935,000, and the half rack with the EF all-flash storage costs the same. So $1.87 million per rack. That cost is just for the hardware, not the Oracle database or RAC clustering software or any of the other goodies companies need to make this a Database Machine, as its other name is. And that software is gonna cost ya, but no more than it does on big Unix and big Linux and big Windows iron that Exadata is meant to replace. Our guess, at least for the initial system, is that it costs quite a bit less when you move from some other system to Exadata.
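For what it is worth, the hardware price can be spread across the database cores to get a rough per-core figure. The sketch below assumes, as the paragraph above does, that a full rack is simply two half racks; the per-core number lumps in the storage servers and excludes all software, so it is only a crude yardstick.

```python
HALF_RACK_USD = 935_000          # list price quoted above, HC or EF
DB_SERVERS_PER_HALF_RACK = 4     # two-socket X9M-2 database servers
CORES_PER_X9M2_SERVER = 64       # two 32-core processors

full_rack_usd = 2 * HALF_RACK_USD
full_rack_cores = 2 * DB_SERVERS_PER_HALF_RACK * CORES_PER_X9M2_SERVER
usd_per_core = full_rack_usd / full_rack_cores

print(f"Full rack hardware: ${full_rack_usd:,}")
print(f"Database cores:     {full_rack_cores}")
print(f"Hardware per core:  ${usd_per_core:,.0f}")
```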

Ugh, you failed to mention the storage server license cost that one would not be subject to on traditional servers. Also, you didn’t mention at all that Smart Scan and HCC are virtually useless for OLTP workloads.

Ugh. That’s why we have smart readers like you.