Having to install a new kind of systems software stack and create applications is hard enough. Having to master a new kind of hardware, and the management of it, makes the situation worse. For mainstream enterprises, that combination makes embracing AI in its many forms difficult.

Enterprises looking to embrace AI have been stymied by the cost and complexity of pulling together often specialized IT tools and trying to bridge a yawning skills gap. Hybrid cloud environments – particularly those built atop hardware and software commonly found in on-premises datacenters – offer a way for organizations to address those challenges.

About five months ago, virtualization and cloud software provider VMware and GPU maker Nvidia announced a deep, multi-tiered partnership aimed at combining the strengths of both companies to help enterprises more easily embrace AI in the hybrid cloud. At VMware’s virtual VMworld 2020 event in late September, Nvidia co-founder and CEO Jensen Huang and then-VMware CEO Pat Gelsinger (who in February took over the top job at Intel, a company where he spent three decades before moving to EMC in 2009 and then to VMware as CEO in 2012) outlined what the partnership to develop an AI-ready enterprise platform would look like and how it would help enterprises reap the benefits of AI despite the hurdles.

Gelsinger said at the time that VMware, historically a “CPU-centric company,” was now “making the GPU a first-class compute citizen and through our network fabric. Through the VMware virtualization layer, it is now coming as an equal citizen in how we treat that compute fabric through that VMware virtualization management, automation layer. This is critical to making it enterprise-available.”

The alliance between Nvidia and VMware made sense. For much of the past decade, Nvidia has built a wide-ranging AI and machine learning strategy around its GPUs, associated software (such as its NGC suite of software optimized for AI and high-performance computing, including containers, models and SDKs) and integrated AI-focused appliances such as the DGX-2. More recently, in January, the GPU maker unveiled its Nvidia Certified Systems program, through which it works with OEM partners like Dell, HPE, Inspur and Supermicro to develop servers that are accelerated with Nvidia GPUs, use networking from Mellanox (which Nvidia bought for $6.9 billion last year) and are optimized for AI and other advanced workloads.

Nvidia is also pursuing a $40 billion bid to buy chip designer Arm, a move that would add to its compute capabilities but one that is being strongly challenged by the likes of Qualcomm, which uses the Arm architecture for its own systems-on-a-chip (SoCs) and is worried that Nvidia could tweak the designs to favor itself.

For its part, VMware, after changing on-premises datacenter dynamics over the past two decades with its server virtualization technology, has systematically expanded its reach into other parts of the infrastructure, such as the network with NSX. As the rise of cloud computing threatened its gains in the datacenter, VMware quickly embraced the hybrid cloud model through innovations, acquisitions and partnerships with top cloud providers like Amazon Web Services, Microsoft Azure and Google Cloud. It has expanded vSphere to become a cloud computing virtualization platform and subsequently rolled out Tanzu, its portfolio of products and services for containers and Kubernetes, adding to what it already offered via virtual machines.

At the same event where Huang and Gelsinger announced the companies’ partnership, VMware also unveiled Tanzu integration with vSphere.

The two companies are now moving to pair the GPUs and other datacenter technologies Nvidia can offer with VMware’s broad hybrid cloud reach, aiming to address the myriad challenges enterprises face in a rapidly changing IT world. Those include the proliferation of Internet of Things (IoT) and mobile devices, the ramp-up of 5G networks, and the need for tools to analyze the massive amounts of data being generated across datacenters, the cloud and rapidly expanding edge environments. (IDC analysts predict that 175 zettabytes of data will be created in 2025.)

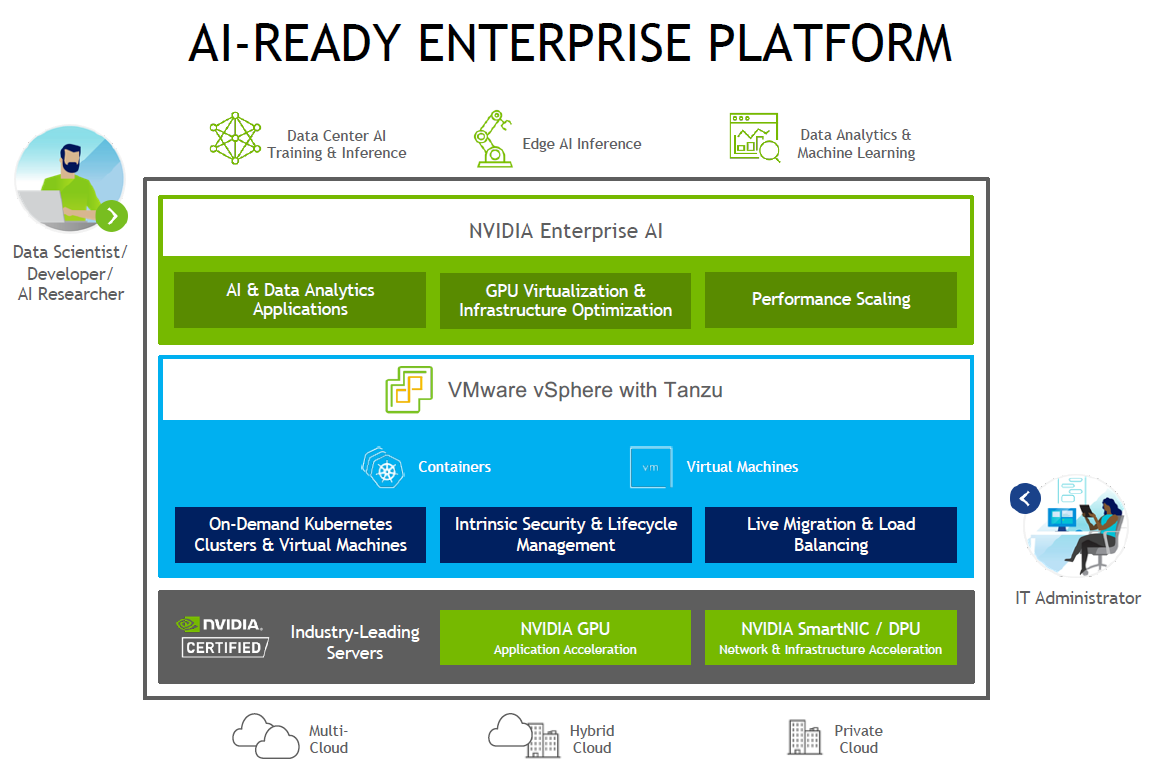

“AI is really kind of a full-stack computing problem that’s going to change every single industry,” Justin Boitano, vice president and general manager of enterprise and edge computing at Nvidia, said at a recent virtual press conference. “We always say artificial intelligence is really the most transformational force of our lifetime. It’s going to help businesses improve operational efficiency and help their bottom line. What we saw up to this point has been kind of bespoke. It’s been an island of infrastructure where people had to try to do a DIY approach to setting up and managing it. The partnership with VMware lets us really build the infrastructure that people are used to using with all the great vSphere tools that exist that really optimize it. You don’t have to go create a siloed project to figure this out. We really want to make it turnkey for the admins and make it easier for them to support the line-of-business teams using AI.”

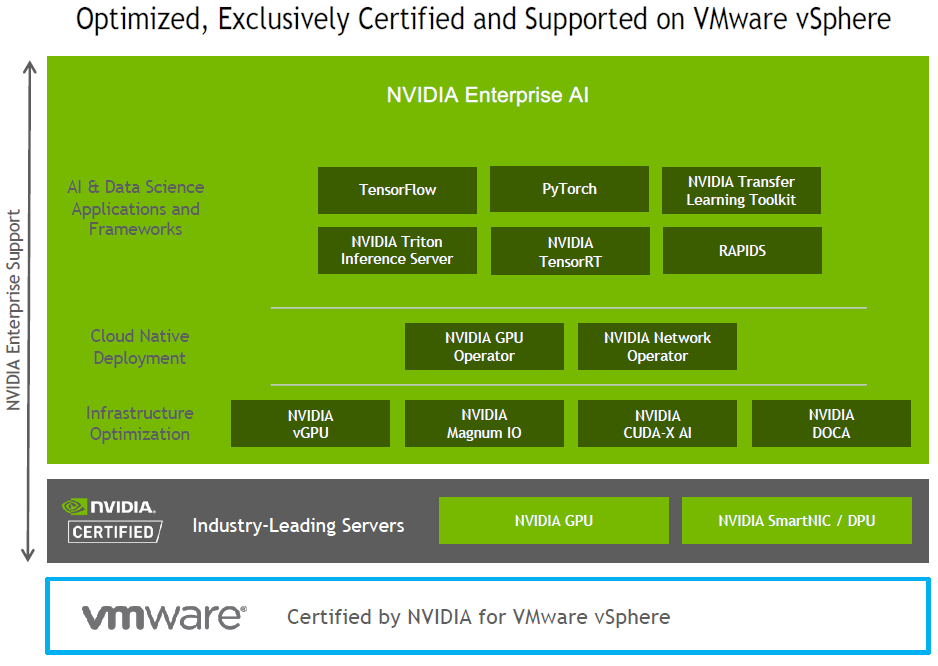

The two companies took another step forward with the partnership this week. Nvidia unveiled Nvidia AI Enterprise, a suite of AI tools and frameworks that is supported and available exclusively on VMware’s vSphere 7 Update 2, which was released at the same time. The Nvidia AI Enterprise stack spans the vendor’s GPUs and SmartNICs; infrastructure optimization offerings such as vGPU and Magnum IO (for quickly crunching through massive amounts of data); and applications and frameworks ranging from TensorFlow and PyTorch to Nvidia’s own Triton Inference Server and TensorRT, an SDK for high-performance deep learning inference that includes an optimizer and a runtime delivering low latency and high throughput for such workloads.

Boitano said the tools in Nvidia AI Enterprise “make it easier for enterprises to get started building models and operating them at scale in their enterprise datacenters.” For example, companies that are just beginning to move toward AI can spend more than 80 weeks curating the data needed for a vision AI model and then training, developing and deploying it to a manufacturing floor. Using the Transfer Learning Toolkit in Nvidia AI Enterprise, that time can be pared to eight weeks.

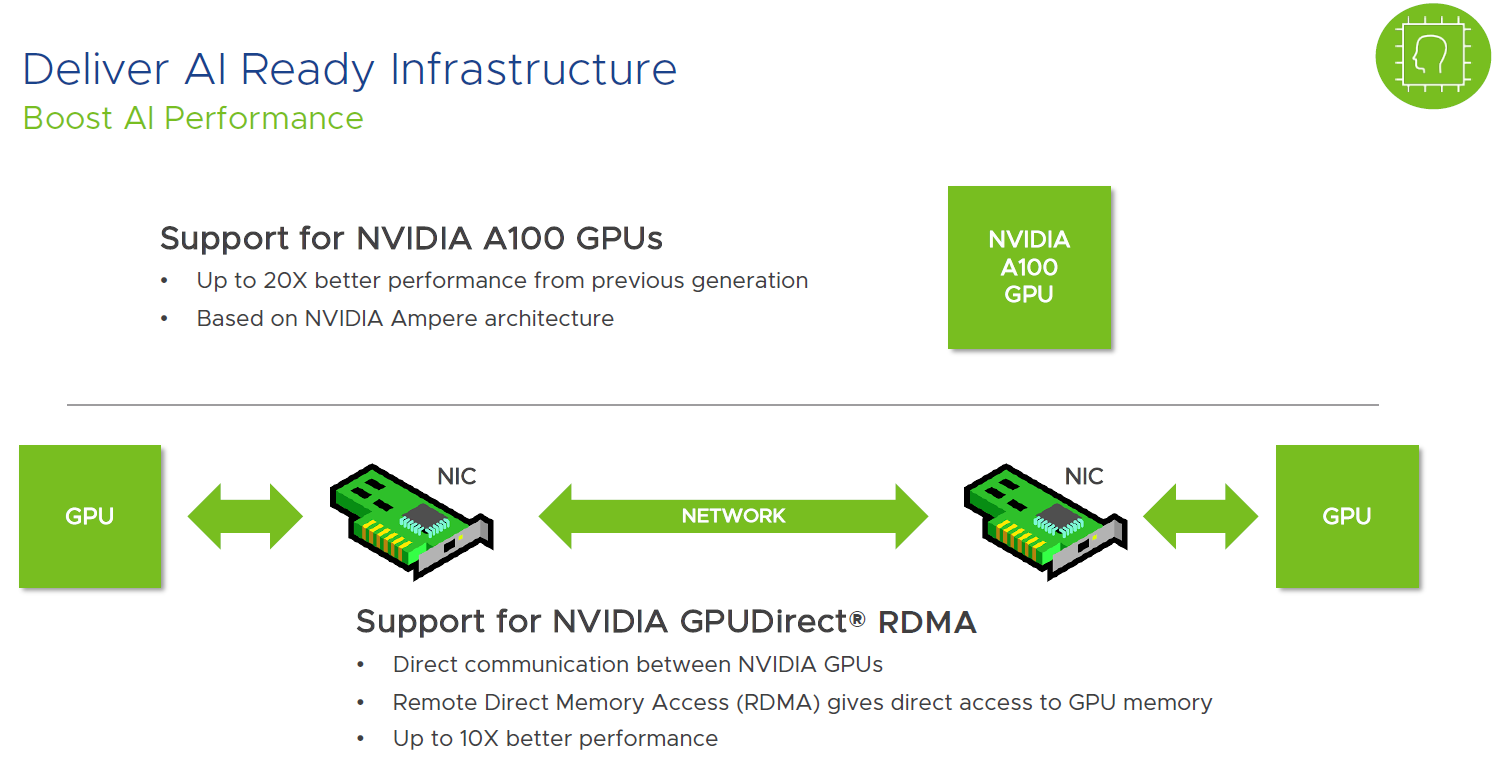

VMware also rolled out new releases of its vSphere cloud computing virtualization platform and vSAN storage virtualization software, with capabilities designed to support organizations’ AI efforts. Among the notable upgrades in vSphere 7 Update 2 is support for Nvidia’s A100 Tensor Core GPUs in Nvidia Certified Systems. Combined with Nvidia AI Enterprise, that support should make it easier for enterprises to run AI workloads on the familiar vSphere platform rather than separately on more specialized architectures.

Enterprises will also be able to use the latest Nvidia GPUs in their virtual environments, taking advantage of technologies such as Multi-Instance GPU (MIG), which partitions a single A100 into as many as seven isolated GPU instances so that multiple users can share its cycles, as well as live migration via VMware’s vMotion.

“You can see where hard work built on the power of the enterprise footprint of vSphere and with our Tanzu portfolio, where we’re taking container-based applications and container-based tools from the Nvidia catalog and making sure those can run seamlessly on vSphere,” Lee Caswell, vice president of VMware’s Cloud Platform business unit, said during the same recent press briefing. “You’ve got support for both containers and for VMs with a common operating model. In the early days of vSphere, this is a way to just speed the deployment of new applications and then make sure, too, that they were easily managed. They could be updated, they could be maintained. We’re bringing all of that value that VMware is known for and bringing that down to the AI user.”

Organizations can run all this on Nvidia Certified Systems and take advantage of vSphere’s support for the A100 GPUs, which offer up to 20 times the performance of the prior-generation chips. Caswell also noted the support for Nvidia’s GPUDirect RDMA, which he said delivers 10 times better performance.

“The heart of this is if you look at artificial intelligence, the model complexity and the amount of compute needed to build models is growing exponentially, precisely at the time that Moore’s Law is leveling off,” Nvidia’s Boitano said. “What we’re doing is using a bunch of the enhancements of the new [Ampere] architecture on GPU side and effectively making sure that GPUs can talk to other GPUs across the network in a way that isn’t bottlenecked by any other choke points in the system. In terms of the 20X performance with the A100s, our newest generation GPUs have a new type of Tensor Core so that we get a 20X performance increase on training and inference. That’s really where that performance is coming from.”
