Although there are now well-engineered systems that tightly package compute, acceleration, and data movement for deep learning training, some users working on time-critical AI training (and constant retraining) find that their backend applications and frameworks require a different way of thinking.

The surest way to kickstart new thinking about AI training systems, however, is to have a workload that does not fit the mold and that demands serious engineering around time to result, accuracy, performance, cost, programmability and integration, and scalability. These considerations (among others) are just part of what content discovery giant Taboola needed to weigh as it built and scaled a GPU-accelerated training path. As the company scaled its capabilities to meet demand, while neural network frameworks, algorithms, and data management technologies kept evolving, it had to blaze some of its own trails in both training and inference. That has been a rewarding journey, according to the company’s VP of IT, Ariel Pisetzky.

As he explains, “There were many known configurations for convolutional neural networks and recurrent neural networks, but for list recommendation, our needs are different and require some fine-tuning and optimization of existing systems.” Many of the use cases for large-scale production training focus on image or speech recognition and variations on those problems with large training sets. Accordingly, hardware and software tricks and tools are often built to serve these more pervasive workloads, which means companies like Taboola need to rely on their wits (and plenty of engineering expertise) to figure out which configurations are right.

All of the deep learning training happens in Taboola’s Israel-based backend datacenters, and unlike some other large-scale AI use cases we’ve tracked over the years, Taboola handles a nearly constant load of retraining. Its content recommendations are displayed on news sites that must shift swiftly with the tide of topics and current events. With that in mind, time is of the essence for Taboola when it comes to training. While accuracy matters, responding to the moment and drawing readers in as soon as they start following a given topic or concept is part of where Taboola’s value lies.

“We continuously train on an ongoing cycle where actual time is more important than accuracy. In training we measure our time in hours, not milliseconds. Instead of working on models and improving them over the course of three days, we need to do this in hours; the faster the better.”

This is just part of what makes Taboola’s AI training demands unique. Outside of high-performance content recommendation, some workloads require high accuracy, while others simply need maximum power efficiency (often at the expense of accuracy). Still others need high performance with just enough accuracy to deliver a continuously updated product that can be pushed back out to inference systems for sub-second delivery. That is where Taboola fits, and reaching that point has taken engineering and optimization, especially given aggressive power budgets and the need to keep pace with changing content recommendation demands.

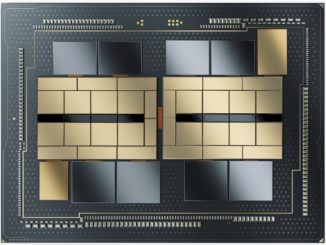

When Pisetzky’s team began its deep learning training journey, the AI era was just beginning, and the team did not yet have a clear sense of how its AI systems would interface with the rest of the analytics backbone that makes up the company’s multifaceted recommendation engine. He says they started with Dell EMC PowerEdge R730 servers, each with two NVIDIA P100 GPUs, quickly saw the value of running their training operations on GPUs, and moved to Dell EMC PowerEdge R740 servers, each with three NVIDIA V100 GPUs. He adds that while other technologies might have added some performance, adopting them would have meant reinvesting in systems that were already well optimized and balanced for their mounting content recommendation targets.

Whether it is a GPU, a CPU, or an I/O system, hardware comes with a certain amount of built-in performance, but careful, thoughtful optimization for a given workload is where the real power of compute, storage, or even software shines through. From the company’s CPU-based inference systems, to the training clusters on the backend, to every point in between where billions of data points arrive, the best balance of performance, energy efficiency, and accuracy or quality of results comes from intensive, ongoing optimization, Pisetzky adds.

“We didn’t want specialized hardware just for training when we started out, although to date, we only use this hardware for training. We didn’t know how much local storage we would need, but now we do. There has been a lot of learning along the way. We didn’t have a use case we could model ourselves after, so much of what we’ve learned has been the result of careful thought and optimization, from compute and acceleration down to how those affect the larger storage and network infrastructure.”

He notes that it takes partnerships to build production-ready AI systems that can meet the demands of billions of hits against the company’s many AI and analytics systems. For instance, it’s one thing to work with vendors like Dell Technologies and NVIDIA to put together the most balanced training servers; it’s another to think about the impact on file systems, storage, and networks. Through the Dell relationship, Pisetzky says, his team could see the “big picture” at the systems level, even across the company’s many datacenters (local and global), while drilling down to the node level to understand what would provide the right balance of cost, efficiency, and performance.

He adds that NVIDIA GPUs will continue to be central to all of the company’s training operations. “Making the jump from the NVIDIA P100 to the V100 brought immediate improvements,” he says. While his teams at Taboola did evaluate some of the AI chip startups, much of that focus was on inference, which, from a hardware perspective, is a completely different side of the company’s deep learning story. “There was never any doubt that we could handle the training and retraining demands we’ve had over the years. It has been a matter of growing our understanding as well as adopting newer NVIDIA GPUs over time, which has given us definite performance advantages.”

Check out the full Taboola case study, which provides more detail about the GPU, storage, and system architecture.
