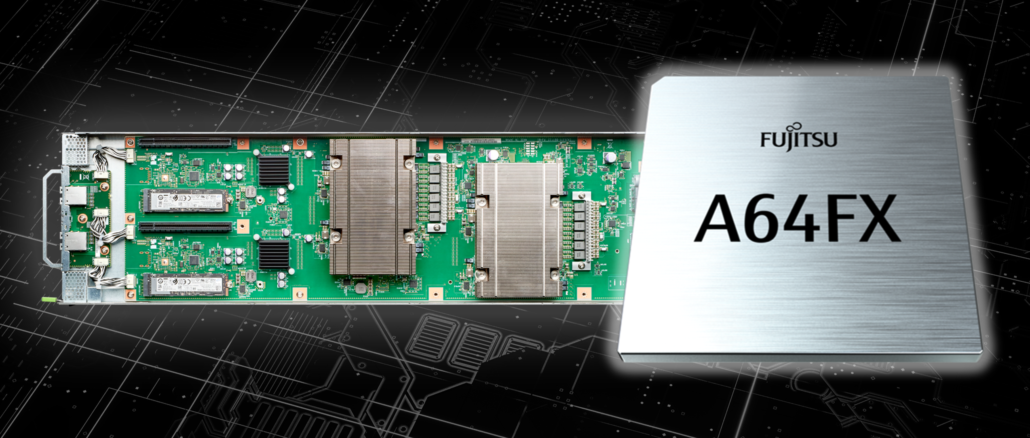

We’ve been closely following the momentum behind Fujitsu’s Arm-based A64FX processor, from its inception to its placement inside the world’s most powerful supercomputer. What has been missing from the conversation is a boots-on-the-ground view of how seamless the environment is from a compiler and early-usability perspective. To that end, we caught up with Ross Miller, a systems programmer at Oak Ridge National Lab who is in charge of the lab’s experimental “Wombat” system.

The small evaluation cluster has a mix of nodes: four HPE Apollo 70 nodes with ThunderX2 processors, another four that pair those Arm processors with Nvidia V100 GPUs, and the newest and most interesting addition, sixteen Apollo 80 nodes sporting the A64FX processor. Of course, it’s not just about taking the hardware for a test drive. Whether the compilers can fully exploit the chip’s high performance and energy efficiency (see Fugaku’s standing on the Top 500 and Green 500) is still an open question, but so far, so good, Miller says.

“The Fujitsu A64FX is the first processor available to us that actually implements these new SVE instructions. With SVE, you can take a code and run it on a processor that has 128-bit vectors or 512-bit vectors, and it will still run and use all the available hardware.”
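To make the “128-bit or 512-bit, it still runs” point concrete, here is a minimal Python emulation (not real SVE code) of the vector-length-agnostic loop shape that SVE compilers aim for. The function name `vla_triad` and its `vl_bits` parameter are hypothetical; the list of booleans stands in for an SVE predicate register of the kind set by the `WHILELT` instruction, and the multiple-of-128 check assumes the original SVE length rule.

```python
def vla_triad(a, b, c, scalar, vl_bits):
    """Emulate a vector-length-agnostic loop computing a[i] = b[i] + scalar * c[i].

    The loop never hard-codes a vector width: each trip handles `lanes`
    elements, and a per-lane predicate (a list of booleans standing in for
    an SVE predicate register set by WHILELT) switches off lanes that would
    run past the end of the arrays, so no scalar tail loop is needed.
    Returns the updated list `a` and the number of loop trips taken.
    """
    if vl_bits % 128 or not 128 <= vl_bits <= 2048:
        raise ValueError("SVE vector lengths are multiples of 128 bits, 128..2048")
    lanes = vl_bits // 64            # 64-bit doubles per vector register
    n = len(a)
    i = 0
    trips = 0
    while i < n:
        pred = [i + k < n for k in range(lanes)]   # like WHILELT i, n
        for k, active in enumerate(pred):
            if active:                              # inactive lanes do nothing
                a[i + k] = b[i + k] + scalar * c[i + k]
        i += lanes                                  # step by the hardware width
        trips += 1
    return a, trips


n = 13
b = [float(i) for i in range(n)]
c = [1.0] * n
narrow, trips_128 = vla_triad([0.0] * n, b, c, 3.0, vl_bits=128)
wide, trips_512 = vla_triad([0.0] * n, b, c, 3.0, vl_bits=512)
print(narrow == wide, trips_128, trips_512)  # True 7 2
```

The same loop body produces identical results at both widths; the only difference is that the 512-bit version needs fewer trips, which is where the wider A64FX hardware pays off.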

Many HPC sites are closely watching the software and usability side of the Fujitsu devices, specifically how they support the Scalable Vector Extension (SVE) in hardware and how the compilers will work out. That matters all the more because, in the U.S. at least, the formal Fujitsu compiler isn’t available, we are guessing for licensing, support, and other reasons that have nothing to do with technology readiness. In other words, you want the compiler? Buy the entire system. Still, things are looking up, even without Fujitsu’s own software tooling.

Early tests at ORNL made it clear that codes compiled without a hitch; the real question is what performance benefit comes out of the SVE instructions. Miller explains that, to start, you can put a flag on the compiler command line to make sure SVE instructions are enabled and let the compiler do the heavy lifting. This is similar to what we’ve heard from folks at Arm lately: just sit back and let the compiler do the job. In practice, he says, the compilers have some catching up to do, but they aren’t that far off. In fact, the team’s STREAM Triad runs (a benchmark that measures memory bandwidth more than compute) were originally built with GCC 10.2, the latest release available, and yielded 620GB/s. Just by recompiling with the pre-release GCC 11.0 from GitHub, they got closer to 800GB/s.
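The exact flag Miller used isn’t stated; with GCC, SVE code generation is commonly enabled with something like `-march=armv8.2-a+sve`. The sketch below only shows the arithmetic behind the quoted numbers: how STREAM turns a Triad timing into GB/s, and what the jump from 620GB/s to roughly 800GB/s amounts to. The helper names are hypothetical, and the array size and timing in the usage lines are made-up placeholders, not ORNL measurements.

```python
def triad_bandwidth_gbs(n_elements, seconds, bytes_per_elem=8):
    """Bandwidth in GB/s the way STREAM reports Triad (a[i] = b[i] + q*c[i]).

    STREAM counts three arrays of traffic per element: read b, read c,
    write a. Extra write-allocate traffic is not counted.
    """
    bytes_moved = 3 * bytes_per_elem * n_elements
    return bytes_moved / seconds / 1e9


def relative_gain(old, new):
    """Fractional improvement of `new` over `old`."""
    return (new - old) / old


# Hypothetical inputs: 80 million doubles per array, 2.4 ms per Triad pass.
print(round(triad_bandwidth_gbs(80_000_000, 0.0024), 1))  # about 800 GB/s
# The GCC 10.2 -> GCC 11.0 figures reported in the article.
print(f"{relative_gain(620, 800):.0%}")  # 29%
```

In other words, a compiler upgrade alone was worth close to a 30% bandwidth gain on unchanged source code.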

Wombat’s new A64FX processors are the first to implement the Arm Scalable Vector Extension (SVE), an instruction set that offers flexibility for processing vectors, that is, strings of numbers treated as a single unit. SVE hardware can use vectors as short as 128 bits or as long as 2,048 bits, and the same code runs on any of them without users having to recompile their scientific codes for each vector length.
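As a quick illustration of that range, the Python sketch below enumerates the vector lengths allowed under the original SVE rule (multiples of 128 bits, from 128 to 2,048) and counts how many elements fit in one register. The constant and function names are hypothetical; the 512-bit figure is the vector length the A64FX implements.

```python
SVE_MIN_BITS = 128
SVE_MAX_BITS = 2048
SVE_GRANULE_BITS = 128  # assumed rule: lengths are multiples of 128 bits


def legal_sve_lengths():
    """Vector lengths allowed under the multiple-of-128 rule, in bits."""
    return list(range(SVE_MIN_BITS, SVE_MAX_BITS + 1, SVE_GRANULE_BITS))


def lanes(vl_bits, elem_bits):
    """How many elements of `elem_bits` width fit in one vector register."""
    return vl_bits // elem_bits


A64FX_VL_BITS = 512  # the A64FX implements 512-bit SVE vectors
for name, bits in (("double", 64), ("float", 32), ("half", 16)):
    print(name, lanes(A64FX_VL_BITS, bits))
```

A vector-length-agnostic binary reads the actual width at run time, so the same code would process 8 doubles per instruction on the A64FX and 2 on a 128-bit implementation.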

“My main goal is to understand the performance portability of different applications,” said Oscar Hernandez, senior staff member on the Wombat team at Oak Ridge. “We really want to understand how the compilers are efficiently generating code for SVE, and we are in the very early stages of doing performance analyses.” According to Hernandez, other codes are in the process of being ported to the new processors as well.

“We are interested in whether we can write a single code to get performance from the Fujitsu processors as well as an architecture that includes the NVIDIA Quadro GV100 GPUs using portable parallel programming models like OpenMP, OpenACC, Kokkos, and using scientific libraries,” Hernandez said. “We still need to try different applications, compilers, and libraries that specifically target these new processors.”

For those following SVE and Arm in HPC more broadly, look no further than this presentation recorded for the Arm HPC User Group by John Stone (of NAMD fame, among other things). He walks through it in the slides, but in essence, he took kernels from NAMD that had been hand-optimized for Intel AVX and other architectures and tuned them for SVE to see what kind of performance he might get. What stands out is how deliberately Arm architected the SVE instruction set to make automatic vectorization easy for compilers. He illustrates this with an AVX-optimized kernel whose code doesn’t fit on a slide, even in a reduced font. The SVE version is a nice, neat block, showcasing how cleanly the SVE instructions are architected overall, again in the context of a classic HPC code.

But of course, the Fujitsu story goes beyond code and usability. The real power of the Fujitsu A64FX is how it snaps into a system, and at the core of that is the interconnect. With HPE-Cray the only company with rights to sell the chip in systems in the U.S. right now, we have to assume the Cray “Slingshot” interconnect is going to be part of that package no matter what. Still, the performance, particularly the memory bandwidth, is already proving itself in the early tests Miller and his team have run since the systems came up six weeks ago.

Getting Red Hat to boot and run was no issue, Miller says, but there are still several open questions experimental users want to explore, including where the chip’s limitations are. With HBM2, he says, the memory bandwidth is great, but with only 32GB overall, it boils down to less than 1GB per core, which is low by current standards. “We’ve been trying to decide if that’s really a limitation and if so, how bad it is. For just benchmarking, it doesn’t matter since you set your problem based on what’s available. But we’ll look forward to seeing what happens with big applications. But the memory bandwidth. Just wow,” he tells us.
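To put “less than 1GB per core” in numbers, here is a small Python sketch. It treats the article’s 32GB as 32 GiB and assumes the A64FX’s 48 compute cores (the chip’s separate assistant cores are left out); the helper names and the 75% memory budget used to size a STREAM-style run are hypothetical choices for illustration.

```python
GIB = 2**30


def memory_per_core_gib(total_gib, cores):
    """Evenly divided memory per core, in GiB."""
    return total_gib / cores


def max_triad_elements(total_bytes, budget_fraction, bytes_per_elem=8):
    """Largest per-array length for STREAM Triad's three arrays (a, b, c)
    when they may use `budget_fraction` of node memory."""
    return int(budget_fraction * total_bytes) // (3 * bytes_per_elem)


HBM2_GIB = 32        # A64FX node memory
COMPUTE_CORES = 48   # A64FX compute cores, assistant cores excluded

print(f"{memory_per_core_gib(HBM2_GIB, COMPUTE_CORES):.2f} GiB per core")  # 0.67 GiB per core
print(max_triad_elements(HBM2_GIB * GIB, 0.75))  # hypothetical 75% budget
```

This is Miller’s point about benchmarking: a STREAM run simply shrinks its arrays to fit, whereas a large application has to fit its working set into roughly two-thirds of a GiB per core.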

Getting a handle on usability matters early on. This is especially the case in Europe and Asia, where we expect the A64FX to take off and even challenge GPUs for some share, since similar performance can be achieved for some apps with just the Fujitsu CPU and no offload (and with no American company at the center other than as an integrator, assuming you don’t want that all-important Fujitsu “Tofu” interconnect). All of that is a bit of a side point, but it is worth mentioning because usability, portability, and related matters will begin to shape where these devices go in the next couple of years.
