For more than a decade, as they have watched the amount of data they are generating stack up and technologies like artificial intelligence and analytics come to the forefront, enterprises have turned an eye toward high performance computing equipment and tools to help them get a handle on all of it. And vendors, seeing their capabilities dovetailing with that demand and the chance to expand the reach of their HPC offerings into broad markets beyond hyperscalers and academic and research institutions, have been looking for ways to make this technology more accessible to mainstream organizations.

Hewlett Packard Enterprise, IBM, Lenovo and others all have pushed to get HPC, AI, and other tools into the hands of their enterprise customers to help them better collect, store, manage and analyze the massive amounts of data they’re creating, run their modern workloads more efficiently and control costs as they continue to digitize their businesses.

“The exascale systems have spawned a whole new set of technologies, both hardware and software technologies, that are in the process of being developed for these exascale systems that will be leveragable by everyone,” Peter Ungaro, the former CEO of Cray and now senior vice president and general manager of HPE’s HPC and AI business after it bought Cray for $1.3 billion in 2019, told The Next Platform earlier this year. “A lot of times people feel that these very large systems at the top of the supercomputing pyramid are kind of irrelevant for them, but I really believe in the future that pretty much every company … will have a system in their datacenter that will look and feel very much like a supercomputer and have the same kinds of technologies that a supercomputer today has in it, just because of the tremendous expansion of the amount of data that people are dealing with, which is creating bigger computing problems.”

Dell EMC has been among the vendors working to make these high-end computing capabilities available to mainstream businesses. That was on display earlier this year, when Dell announced a 5 petaflops system housed at the San Diego Supercomputer Center, powered by PowerEdge servers running AMD Epyc chips and Nvidia V100 Tensor Core GPU accelerators and connected via Mellanox HDR InfiniBand networking. The system, which will be able to serve 50,000 users, is aimed not only at organizations in academia and government but also at those in various industries.

Dell, which over the years has seen its efforts in HPC ebb and flow, began getting serious about the market again a couple of years ago. The company created an HPC business, put Thierry Pellegrino in charge of it as vice president and general manager and, among other steps, turned its attention to enterprises and midsize companies. That has included offering Ready Solutions – pre-integrated and pre-configured solutions that leverage the company’s PowerEdge servers and PowerSwitch networking as well as storage products and third-party software – for HPC workloads in such areas as research, manufacturing, life sciences, AI and storage.

More recently, the company has been exploring as-a-service models as another way to make HPC and AI more accessible to enterprises.

“More and more companies are recognizing that if they want to perform either simulations or data analytics – including AI – beyond the level of a single server, they’re going to have to go to HPC systems to do it, integrated systems that have many, many servers and scalable storage in them,” said Jay Boisseau, AI and HPC strategist at Dell Technologies. “Many of those are doing it on-prem. That’s the largest part of the market, but a growing part of the market is this HPC- and AI-as-a-service, where expert services companies with access to leading-edge datacenters are willing to operate those systems effectively and efficiently for you.”

Boisseau and Pellegrino recently spoke to journalists in a conference call about the latest moves Dell is making to expand the reach of its HPC and AI offerings, including adding to its portfolio of Ready Solutions for HPC, leveraging public clouds and embracing the as-a-service model. Dell’s moves also are a recognition of the growing relationship between AI and HPC, according to Onur Celebioglu, senior director of engineering for HPC and emerging workloads at Dell.

“We’ve been doing a lot of experimentation in our labs to understand how can we combine these HPC and AI workloads under the same umbrella, under the same infrastructure,” Celebioglu said. “When you look at it from an infrastructure layer, HPC and AI workloads look similar in the sense that they both require high-performance computing. The usage of accelerators like GPUs or purpose-built accelerators is very prevalent. You need high-speed network interconnects. You need a lot of storage and to be able to see that storage in a highly parallel fashion to the systems. At the hardware level, the infrastructures for each look relatively similar. But AI is driving more of these purpose-built accelerators and that’s a trend you see growing in the commercial and enterprise sector, in addition to the traditional HPC customers that have … used HPC or simulation.”

On the software side, he said, “there are some differences [like] the AI-specific frameworks and languages, and then Kubernetes becoming the de facto orchestration layer for these containers is going to drive further growth in those kinds of software technologies. You can see some of the implications of that: VMware supporting containers [with] Tanzu, a lot of companies coming up that are Kubernetes-based, the software stacks that support Red Hat’s OpenShift container orchestration. You can see a lot of examples of those container-based orchestration frameworks finding their way into enterprise deployments being able to run these AI workloads.”

Boisseau added that “HPC has been around since the early days of supercomputing in the late 1960s and 1970s, long before virtualization and containers. That community has used compiled programming languages and not used containers or virtualization. But AI has really erupted … in the last six or seven years, especially deep learning approaches. That’s all been in the modern world of DevOps practices for software development. It evolved and emerged during this time of virtualization being prevalent and containers now being a new way to encapsulate software. That’s been a good thing for HPC as well, because so many of these AI workloads now need underlying HPC systems. Those AI developers are bringing these modern DevOps approaches into HPC environments.”

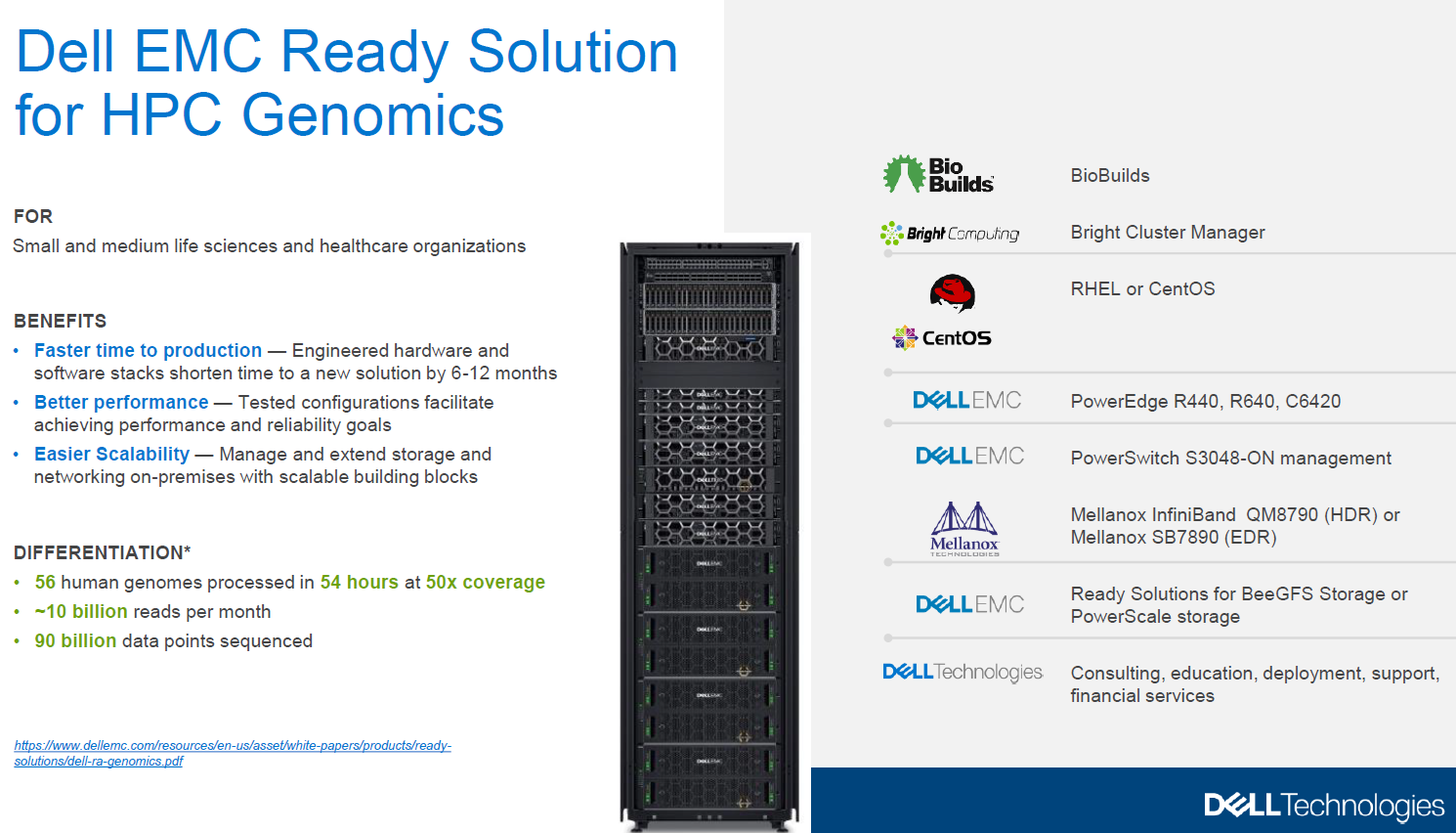

In conjunction with the virtual SC 2020 show this week, Dell EMC is introducing Ready Solution for HPC Genomics, an offering that can include PowerEdge R440, R640 and C6420 servers, Mellanox InfiniBand QM8790 or Mellanox SB7890 networking, Dell’s PowerSwitch S34328-ON management and Ready Solutions for BeeGFS or PowerScale storage. It runs Red Hat Enterprise Linux or CentOS Linux distributions, BioBuilds – a collection of open-source bioinformatics tools for genomic workloads – and Bright Computing’s Bright Cluster Manager software. TGen, which uses genomic sequencing of diseases for precision medicine and is working on developing a vaccine for COVID-19, is leveraging Ready Solutions for Genomics and has been able to cut the time for its genomic sequencing from two weeks to seven or eight hours, according to Pellegrino.

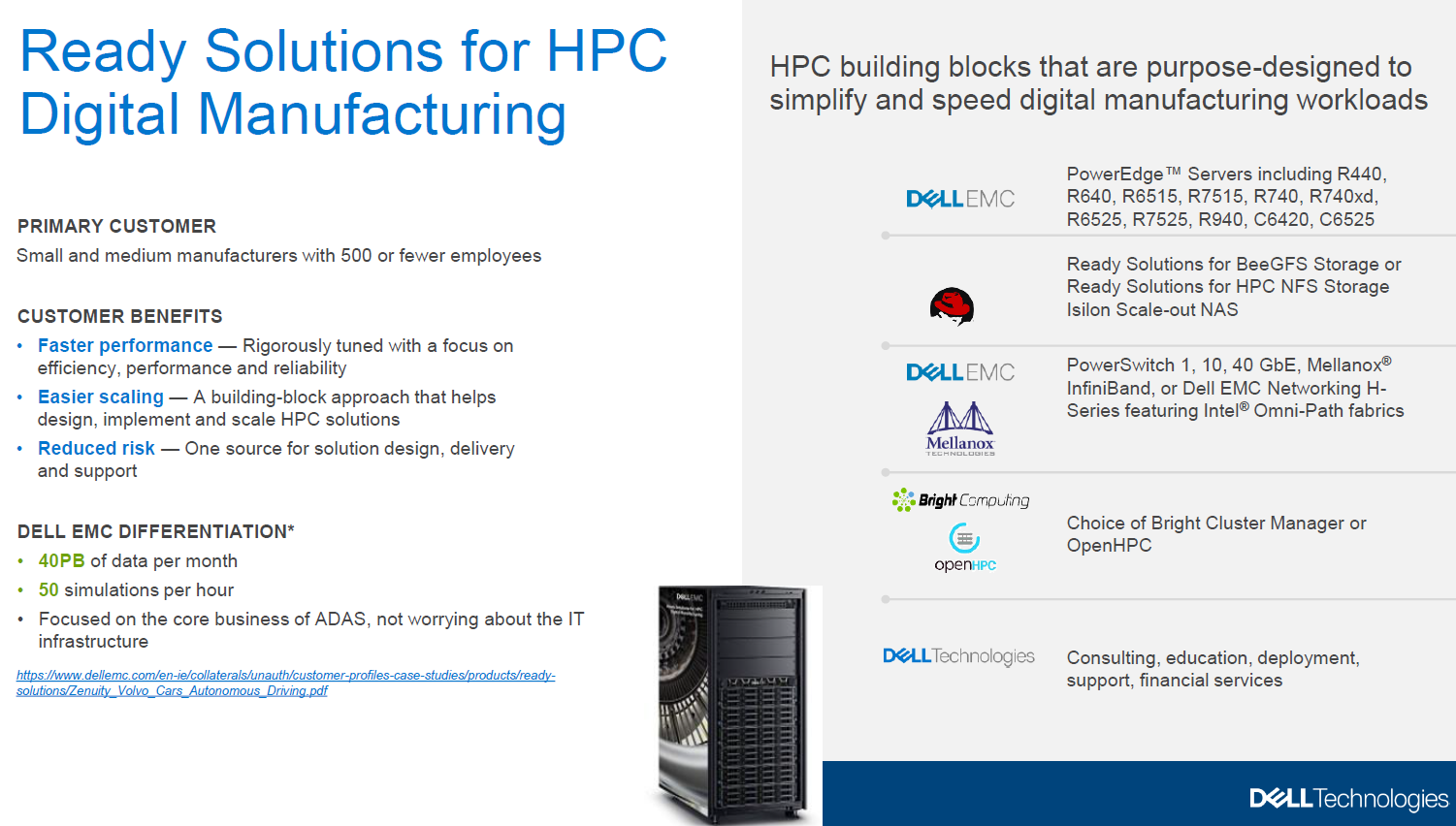

Dell EMC is also expanding its Ready Solutions for HPC Digital Manufacturing with a new addition that includes a range of PowerEdge servers, Dell or Mellanox networking, BeeGFS or Dell Isilon storage, and cluster management from Bright Computing or the OpenHPC community. It also runs Altair HyperWorks simulation software and HPC middleware.

The vendor is targeting small and midsize companies with 500 or fewer employees and offering the solution as a managed service. It is part of Dell’s larger Project Apex initiative, announced last month, which is aimed at enabling the company to eventually offer its product portfolio – from PCs and servers to hyperconverged infrastructure, storage, networking and other solutions – as a service managed by Dell. The Ready Solution is designed to manage 40 petabytes of data a month and run up to 50 simulations per hour.

Dell is continuing its as-a-service push by partnering with VMware and Bright Computing to automate the building and managing of high-performance clusters as part of the Ready Solutions portfolio. Bright Cluster Manager 9.1 brings integration with VMware for virtualization, containers and multicloud management, enabling organizations to use their VMware clusters to run more AI workloads by pooling provisioning, monitoring and change management into a single tool. It also offers cloud provisioning for building clusters in public clouds or extending physical clusters into them.

“A couple decades ago … when you asked about virtualization for HPC, most customers would standardize on bare metal systems and not consider virtualization technology because of its performance overhead,” Celebioglu said. “But in recent years, we’ve seen a significant drop in that performance overhead that virtualization provides. You’ve also seen a lot of commercial companies that previously did not utilize HPC but standardized on VMware for their IT infrastructure, needing high-performance computing systems and AI systems. Since they are standardized on VMware technology for their IT infrastructure, they are also interested in leveraging the same way of managing systems to manage their new high-end HPC systems. Combined with those facts, we are seeing an increasing interest in utilizing virtualization technology-as-a-service type models for HPC systems and AI systems. That’s one of the reasons why we’re partnering very closely with VMware and Bright Computing and have been working on this in our labs in collaboration with them for the past couple of months to provide this capability where customers can easily manage their systems using virtualization infrastructure as well as dynamically being able to stand up and spin down a cluster of clusters using VMware technology with the integration.”

Dell EMC also is working with several cloud and colocation providers to make its HPC and AI technologies available as a service. The vendor is partnering with DXC Technology (in the Asia Pacific and Japan regions), R Systems (North America) and Verne Global (EMEA) to host, run and manage Dell’s technologies for organizations.

The hosted offerings will enable companies to access the technologies without having to bear the expense or management responsibilities that come with running them in their own datacenters. Most HPC and AI workloads still run on premises, but enterprises are increasingly embracing the cloud-based as-a-service model, according to Boisseau.
