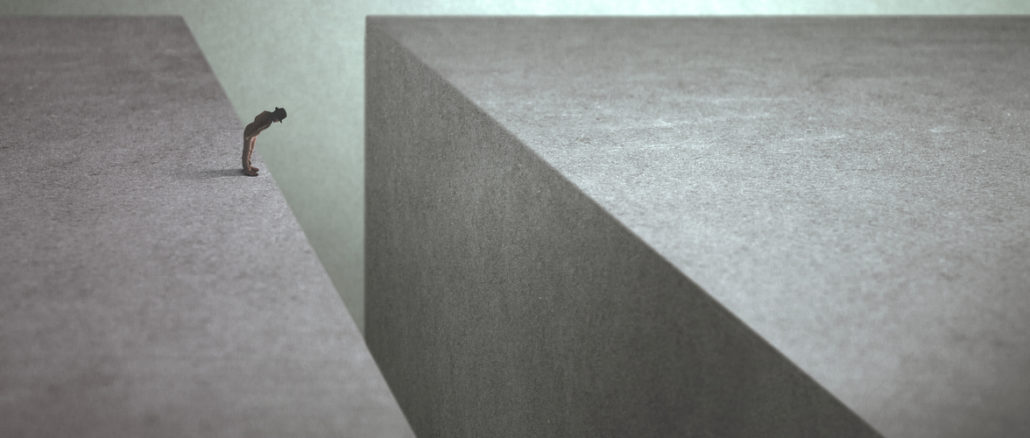

The more things change, the more they stay the same in HPC storage. But in the broader enterprise world, the more things stay the same, the quicker companies are to seek out change. As long as storage is easy to manage and reliable, cost is not at the top of the list. HPC is exactly the opposite, and that cost pressure is limiting what supercomputing can do now and, certainly, in the fast-approaching exascale age.

Every few years a new crop of storage vendors rises to the top with bold claims that they can serve both the world's largest supercomputers and the most demanding enterprises at once. Despite best efforts, however, doing both well has proven impossible. This is becoming more pronounced as enterprises diversify their infrastructure dramatically: disaggregating, cloudifying, containerizing, and so on. The number of storage startups that have sprung up to meet this demand has been staggering. A few tentatively approach HPC, but nothing sticks, nor is anything likely to in the coming five years.

Striking a balance between the requirements of both worlds, one emphasizing performance/cost and the other usability/reliability, is not possible for the largest systems, even if five years ago it might have seemed that some vendors were on track to deliver something for everyone. In fact, the divergence between these two disparate areas is peaking, says Uli Plechschmidt, HPE's head of HPC storage product.

When it comes to perspectives on the evolving HPC storage landscape, few have better context than Plechschmidt. He was one of the first users of IBM's GPFS (now Spectrum Scale) file system and, over the years, has landed at companies that have pushed forward various file system and storage innovations, from IBM to Seagate, then Cray, and now HPE, mostly via acquisitions. In his role at HPE, he has watched various storage startups try to tackle both markets, only to find success once they poured all their efforts into particular niches.

“At the moment there’s not a technology that can serve both HPC and enterprise well and I don’t see anything in the next five years that can make up that gap,” he says. So, if this is the case, what's missing, and where and how is the divide between the two worlds widening? As it turns out, the file system provides some of the best context.

“If you want to have long-term success, look at the file system level. In the HPC market you need to have an open source, community-driven model that’s developed by big proponents in the national labs because it’s hard to make a good file system with all the development needed without feeding those developers, keeping them on the payroll. That’s the biggest issue,” Plechschmidt explains. “It is simply hard to do a 400 PB file system or 700 [PB] usable capacity or an exabyte with commercial software if you don’t want to lose too much of your fixed budget and have to trade off CPU or GPU nodes.”

Five years from now, when exabyte storage will be the norm at the largest systems (700 PB usable, at the least), the divide will only get deeper.

“If you look at the forecasts for flash and disk, all predict that in four years there will be a price difference per TB of more than 5X. It wasn’t even three years ago the flash price per terabyte came down and crossed the HDD line and now they say HDDs are dead? They also said everything would have been flash by now, even long-term archives,” Plechschmidt says. “For a while everything was object storage in enterprise and HPC, and while object gained some traction in HPC storage architectures, it’s maybe an archive outside the parallel file system, tiered with Lustre and Ceph, for instance. But it just doesn’t deliver the performance.” The point is, projecting where storage will go in five years is neither simple nor clean. There is still quite a bit that's unknown, not in terms of demand or requirements, but in terms of which path provides the kind of price/performance HPC is looking for.

He points to other directions HPC is heading that have no counterpart in enterprise, including the move to near-node or “tier 0” storage: an internal layer inside the supercomputer cabinets, mainly persistent memory running ephemeral file systems, in addition to the external file system. This is what the forthcoming Aurora supercomputer at Argonne will have, and it is something Plechschmidt is helping build based on Cray Lustre. “We’ve already shipped 200 PB of Cray Lustre as external but we’ll integrate with near-node Intel DAOS storage that will also be part of the machine. That’s happening on several large systems, this near-node inside the machine that interfaces with the parallel file system.”
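To see why an in-cabinet layer helps, here is a back-of-envelope sketch. It assumes the near-node tier absorbs a checkpoint faster than the external file system can, so applications resume sooner while the data drains to the parallel file system in the background. The bandwidths, checkpoint size, and function names are hypothetical placeholders, not specifications for Aurora, DAOS, or Cray Lustre.

```python
"""Back-of-envelope model of a near-node ("tier 0") storage layer.

All sizes and bandwidths are hypothetical placeholders, not figures
for Aurora, DAOS, or Cray Lustre.
"""


def checkpoint_stall_seconds(size_tb: float, bandwidth_tb_s: float) -> float:
    """Seconds the application is blocked while a checkpoint is written."""
    return size_tb / bandwidth_tb_s


def compare(size_tb: float, near_node_tb_s: float, external_tb_s: float) -> dict:
    # Direct path: compute nodes write straight to the external parallel file system.
    direct = checkpoint_stall_seconds(size_tb, external_tb_s)
    # Tiered path: compute nodes write to the fast in-cabinet layer and resume...
    staged = checkpoint_stall_seconds(size_tb, near_node_tb_s)
    # ...while the same data drains to the external file system in the background.
    drain = checkpoint_stall_seconds(size_tb, external_tb_s)
    return {
        "direct_stall_s": direct,
        "staged_stall_s": staged,
        "background_drain_s": drain,
    }


if __name__ == "__main__":
    # Hypothetical: 1,000 TB checkpoint, 25 TB/s near-node tier, 10 TB/s external tier.
    print(compare(1000, 25, 10))
```

With these made-up numbers the application stalls for 40 seconds instead of 100, but the drain still takes 100 seconds, so either the next checkpoint waits for it to finish or the near-node layer needs room for more than one checkpoint.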

Plechschmidt predicts that if HPC continues on its current course, storage between now and the delivery of the first true exascale machines will look quite a bit like what we see with Intel and DAOS. “There will be a layer of 100 PB or so, most likely persistent memory, then another 200 PB or so of flash for a fast tier, then within the same file system a pool of disk to make up the other 700 PB. Even then it will still be economically prohibitive to build everything on flash unless a ton of the budget goes that direction, which isn’t a good use of funds.”
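The economics of that layout can be sketched in a few lines. The capacities come from Plechschmidt's 100/200/700 PB split; the prices are hypothetical relative units per TB, with flash set at 5X disk as a floor for the forecast gap and persistent memory given an arbitrary 20X placeholder.

```python
"""Relative cost of a 100/200/700 PB tiered layout versus a flash-heavy one.

Prices are hypothetical relative units per TB (disk = 1.0). The flash
multiple uses the ">5X" forecast as a floor; the persistent-memory
multiple is an arbitrary placeholder.
"""

TB_PER_PB = 1000

# Hypothetical relative prices per TB.
PRICE_PER_TB = {"pmem": 20.0, "flash": 5.0, "disk": 1.0}


def layout_cost(layout_pb: dict) -> float:
    """Sum of capacity (PB converted to TB) times price per TB over every tier."""
    return sum(pb * TB_PER_PB * PRICE_PER_TB[tier] for tier, pb in layout_pb.items())


tiered = {"pmem": 100, "flash": 200, "disk": 700}  # 1,000 PB total
no_disk = {"pmem": 100, "flash": 900}  # same 1,000 PB, disk pool replaced by flash

if __name__ == "__main__":
    t, f = layout_cost(tiered), layout_cost(no_disk)
    print(f"tiered: {t:,.0f}  flash instead of disk: {f:,.0f}  ratio: {f / t:.2f}")
```

With these placeholder prices, replacing the 700 PB disk pool with flash makes the system roughly 1.76 times as expensive. The exact ratio moves with the assumed prices, but as long as flash stays well above disk per TB, the flash-heavy layout costs more.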

Hardware aside, it’s the file system that will be the limiting factor for both HPC and enterprise shops as they figure out their next five to ten years of data management.

Unlike the rest of the enterprise world, which often has fewer performance-driven constraints and a bigger focus on usability at any cost, the world of HPC file systems will continue to be limited to Lustre and IBM Spectrum Scale. Around two-thirds of all major supercomputing sites use Lustre and another third use IBM's file system, and there is very little room for startups to make any headway, at least in Plechschmidt's opinion. We talked about BeeGFS as one example; he says it is a solid offering but has limited traction. Considering how much work it will take to pass muster on exascale systems, that Lustre/IBM equation isn't going to change, and even if Pure Storage, Vast Data, and other companies can find a way into HPC, it will be for very particular use cases.

He points to Weka.io and its Matrix file system as one example of an enterprise-geared storage company that couldn't find its footing with the HPC set until it narrowed its scope significantly. “Weka’s Matrix is an interesting file system. As HPE we’ve used Weka on the ProLiant architecture for some nice use cases where the customers were willing to pay top dollar for extremely high IOPS in financial services trading and some areas of genomics and it’s a great fit. But it’s a lot more expensive than an HPC file system. With HPC’s insane performance for ridiculous [low] budget, the question is always how can we deploy the fewest number of drives and not be charged for a file system license per terabyte. But with Weka, in the beginning when they’d been encouraged to tackle the HPC market with HPE we were disappointed, until they focused their go-to-market on finserv trading and genomics, those very specific, top dollar use cases. It was clear going after the whole HPC market didn’t make sense because of the price/performance level required.”
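Why a per-terabyte license matters so much at HPC scale can be shown with a small sketch. It assumes a fixed storage budget and a flat all-in price per TB; the hardware and license prices and the function names are hypothetical placeholders, not quotes from Weka, HPE, or anyone else.

```python
"""Effect of a per-terabyte file system license at HPC capacity.

All prices are hypothetical placeholders in arbitrary currency units.
"""

TB_PER_PB = 1000


def storage_cost(capacity_pb: float, hw_per_tb: float, license_per_tb: float) -> float:
    """Hardware plus software cost; a community-developed file system has license_per_tb = 0."""
    return capacity_pb * TB_PER_PB * (hw_per_tb + license_per_tb)


def capacity_for_budget_pb(budget: float, hw_per_tb: float, license_per_tb: float) -> float:
    """PB of capacity a fixed storage budget buys at a given all-in price per TB."""
    return budget / (hw_per_tb + license_per_tb) / TB_PER_PB


if __name__ == "__main__":
    HW, LICENSE = 30.0, 20.0  # hypothetical prices per TB
    budget = storage_cost(700, HW, 0.0)  # what 700 PB costs with no license fee
    print(f"budget: {budget:,.0f}")
    print(f"no license fee buys {capacity_for_budget_pb(budget, HW, 0.0):.0f} PB")
    print(f"per-TB license buys {capacity_for_budget_pb(budget, HW, LICENSE):.0f} PB")
```

Under these assumptions, a budget that buys 700 PB with a community-developed file system buys only 420 PB once a per-TB license is added, so the same money either buys less capacity or has to come out of the compute budget.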

This was in no way a slam against Weka.io, of course. The point is not about a specific vendor; it's about how vendors who build file systems for enterprise cannot expect them to translate to HPC simply by virtue of scalability or speed. If it's not Lustre or GPFS based, it is very likely not going to fly. This comment comes after a series of briefings from storage companies claiming that their performance and benefits translate just as easily from a hyperscaler to a supercomputing center. The technology might carry over, but the decision-making equation is night and day between the two.

So, at what point will the current, limited approach to HPC storage give way to something entirely new? Perhaps not in the next decade. Lustre and Spectrum Scale both continue to see extensive work to add robustness, and perhaps that's enough. But for the ambitious startups we've talked to about HPC market readiness, the advice is to come in through the side door: pick an application or area where intense acceleration can justify the cost, particularly from a file systems point of view.

casfs from codewilling has, I feel, solved the HPC file storage issues. For example, it can handle multiple Anaconda setups and millions of files in a directory while being distributed, at petabyte scale in AWS and also on prem, without the usual cost: where you typically need multiple servers, it can handle it all on one.

Commercial bullshit. Lustre + ZFS can serve hundreds of petabytes.

Now 1 EB of storage is being built.