The external storage market – like most sectors of the IT industry – took a beating in the first quarter, thanks in large part to the novel coronavirus pandemic.

According to IDC numbers released earlier this month, global revenue for enterprise external OEM storage systems fell 8.2 percent year-over-year, dropping to $6.5 billion, even though total storage capacity shipped increased 3 percent, to 17.3 EB (that’s exabytes). As with the server space, hyperscalers like Google, Microsoft, Amazon, and Facebook – many benefiting from the accelerated push into the cloud by enterprises needing to quickly adapt to most of their employees suddenly working from home due to the global health crisis – saw demand rise and moved to bulk up their datacenters accordingly. That helped prop up original design manufacturers (ODMs), which saw revenues grow 6.9 percent, to $4.9 billion.

However, as business becomes increasingly data-centric and multicloud-focused, the need for flexible, scalable, automated and cost-efficient storage continues to grow, and over the past several weeks vendors have pushed to expand their offerings. That has included Hewlett Packard Enterprise unveiling a host of new innovations for its Primera and Nimble Storage offerings that bring more automation and intelligence, support for NVMe and storage-class memory, improved replication capabilities, and availability through its GreenLake as-a-service platform. Dell EMC last month rolled out its PowerStore all-flash systems for mid-range companies and enterprises, and this week it launched its PowerScale hardware and software, aimed at easing the management of unstructured data.

All-flash vendor Pure Storage in June came out with Purity 6.0 for FlashArray, the latest version of its operating system, which enables the company’s FlashArray systems to support both file and block storage natively on the same system. The announcement, made at the company’s virtual Accelerate event, also included ActiveDR, which offers continuous active-passive replication, the ability to run two physical instances of Purity anywhere in the world – removing distance requirements – and a near-zero recovery point objective (RPO). All of this is available to companies via Pure’s Evergreen subscription-based pricing model, which, similar to HPE’s GreenLake, gives customers a pay-as-you-go option.

The company aims to have Purity 6.0 for FlashArray help fuel the growth it has seen since launching its first products in 2011. Pure is now a company with $1.64 billion in revenue (as of the end of its last fiscal year) and more than 7,500 customers. It also is one of the few storage vendors that came out of the first quarter in fairly decent shape. According to IDC, the top four external storage OEMs – Dell Technologies, NetApp, HPE/New H3C Group (HPE holds a stake in New H3C alongside Tsinghua) and Hitachi – all saw revenues fall, by anywhere from 8.2 percent to 20 percent. By contrast, Pure saw revenue jump 7.7 percent year-over-year. The company was tied for fifth place on the list with IBM and Huawei, whose revenues also grew, by 3.8 percent and 17.7 percent, respectively.

Matt Kixmoeller, vice president of strategy at Pure, spoke with The Next Platform during the Accelerate event about the status of Pure’s Modern Data Experience, an initiative kicked off last year; the company’s recent innovations; the future of flash and NVMe; and how Pure differentiates itself from its competitors.

Jeffrey Burt: It’s been a year since Pure announced the Modern Data Experience. What is this initiative and a year later, where does it stand?

Matt Kixmoeller: I think the main driver was actually seeing how some of our more sophisticated customers were operating and wanting to really help the broad-based customer base make the jump. It was really about helping customers finally jump into running storage in the cloud model, for lack of a simpler way to say it. Storage historically had been very app-by-app, with independent solutions that were very manually driven. The largest customers had really graduated to a cloud delivery model, where they had defined standard storage services, largely consolidated them and delivered them in an automated way so users could self-provision, do chargeback and all that. They truly made that leap. The whole idea of the Modern Data Experience was to show customers how they could graduate into this model, and which of our products help get them there. The thing that’s happened between then and now – it’s an interesting accelerant – unfortunately, is COVID. I think it’s showing folks that they really need to get beyond some of the human-reliant processes of the past. They just have to graduate to a world where they can automate, so they can have peace of mind that the automation works and isn’t so dependent not just on humans, but on one specific human, the only person who knows how to run that mainframe storage solution. That’s just a recipe for disaster.

JB: Did COVID-19 accelerate the direction people were already going, or was it a real sea change?

Matt Kixmoeller: It was definitely an accelerant, and it’s getting people to really think about automation not as a luxury, but in a lot of ways as a requirement for business continuity. That’s the second thing: I would say it’s an apropos time to be launching some enhanced replication and resiliency features. People are really focused on making sure they have a reliable foundation within IT so that they’re prepared for disasters like this. This is more of an ongoing disaster than the kind of one-time disasters of the past. We were used to things that came fast, and then we recovered from them. This is more about learning how to operate in this kind of new normal.

JB: The pandemic eventually is going to pass and things will settle down a bit, but a lot of what’s happening now is going to be permanent going forward.

Matt Kixmoeller: It also caused a number of our customers to have to rapidly scale their digital innovation plans. All of a sudden, they were going from digital as a luxury or a side experiment to, ‘This is the main way we have to run the business for a while.’ That required a lot of scaling very fast for a number of them. It’s actually a time in the company’s history that we, as Puritans, took a lot of pride in: we were able to rise up, help deliver storage and help customers through this journey. We won a number of customer relationships where competitors simply couldn’t shift gears, and we had a very stable supply chain and a ‘let’s make stuff happen’ attitude that was good for everyone. In some ways it’s brought the company together more cohesively around customer success during this time.

JB: Looking back over the past year, what have been the key steps Pure’s taken to push forward the Modern Data Experience idea?

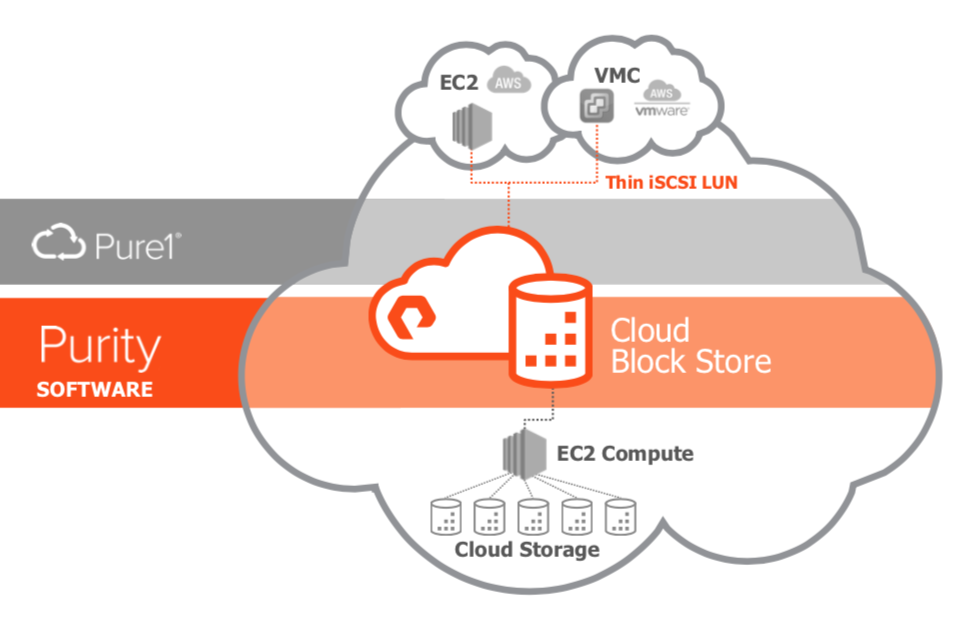

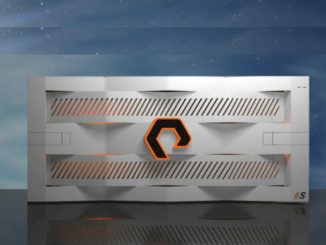

Matt Kixmoeller: The first is that we’ve worked on all of our APIs and automation technology. Whether you have bare metal or VMs or containers, we’ve worked holistically on making all of that more performant, more robust, etc., so that customers who are really serious about treating infrastructure as code and automation can do so with our product lines. The second, I would say, is that we’ve really matured our Cloud Block Store offering [below]. Having options for running on our infrastructure, or software capabilities that can run in the cloud, is critical as well.

The third thing that we’ve focused a lot on is that we’re seeing our more advanced customers start to build environments for running cloud-native apps on-prem. When you step back and ask what that means, there are two things I see customers doing more seriously now than they were a year ago. The first is getting serious about containers. It’s a very different architecture. VMs changed a lot of the infrastructure, but containers change both the infrastructure and the application stack, so there are many implications for storage, particularly around the level of automation required and the ephemeral nature of containers. We’re seeing our customers graduate from point container deployments in isolated applications or isolated departments to IT really building a container foundation within the company, for a private container cloud or whatnot. The second thing is getting serious about on-prem object storage. You look at cloud-native apps and generally they persist state in object storage, and that object storage is presumed to be big, global, fast and always available. That never really existed on-prem. If anything, customers who have on-prem object storage bought into the last generation of object storage, which was really designed for archiving and cold content.

That was for massive content archiving and low-performance use cases, so when we launched FlashBlade four years ago, we talked about it being a platform for fast file and object. A lot of people scratched their heads at the object part and said, ‘Who needs a fast object store on-prem?’ That conversation has done a total 180 in the last year: we’re seeing all the large customers understand that this is not just a dumping ground for content, but really the foundation for their modern web-scale applications. That’s becoming a thing in a big way, and FlashBlade bridges the gap between file and object. Many of those applications still need file, so [we have] a single platform that can do both as people evolve to object. It’s very strategic.

JB: What role have containers played in what Pure is doing and where it’s going with its strategy?

Matt Kixmoeller: The biggest thing that containers have meant for storage companies in general is a much higher change rate, everything being API-driven, and many, many small objects as opposed to very, very big volumes. I’ll give you an example: If you think about the kind of physical systems that we built 10-plus years ago, they were designed to be around for years. You would connect storage to the system, and that Fibre Channel pipe was bulletproof and meant to be there forever. VMs introduced a little more fungibility, where maybe people would add VMs daily and whatnot, but they’re still long-lived.

The assumption was that the app was going to be there for a long time, so VMs didn’t present that much of a challenge to storage. If you look at our call-home logs from traditional environments, we observe just a handful of administrative actions per server per day – under 10 – such as creating a volume or deleting a volume. I’m aware of one of our large SaaS customers who runs about 30 of our systems, and they create and destroy over 10,000 iSCSI connections daily, because containers are stood up, do their work for a couple of minutes and go away. If your storage system wasn’t designed to deal with that high change rate, you can’t adapt it to this container world, so that’s challenging a number of vendors. It challenged us as well. We worked quite a lot on our APIs. We also introduced a software capability called Pure Service Orchestrator, which helps automate our storage environment. It’s fairly obvious, but a human admin can probably make 10 volumes a day; they’re never going to be able to do 10,000, so automation is really the only option for keeping up with the fluid nature of the container world.
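The gap between human-driven and API-driven storage management is easiest to see by counting calls. The sketch below is hypothetical: `MockArray` and its methods are invented stand-ins, not Pure Service Orchestrator or any real Pure API. It assumes each short-lived container gets one volume that is created, connected, disconnected and destroyed, and it treats the interview’s “about 10 a day” as 10 manual administrative actions per day.

```python
from dataclasses import dataclass, field


@dataclass
class MockArray:
    """Hypothetical in-memory stand-in for a storage array's management API.

    The method names are invented for illustration; this is not Pure's API.
    """
    volumes: dict = field(default_factory=dict)  # volume name -> connected host, or None
    admin_actions: int = 0                       # each API call counts as one administrative action

    def create_volume(self, name: str) -> None:
        self.volumes[name] = None
        self.admin_actions += 1

    def connect(self, name: str, host: str) -> None:
        self.volumes[name] = host
        self.admin_actions += 1

    def disconnect(self, name: str) -> None:
        self.volumes[name] = None
        self.admin_actions += 1

    def destroy_volume(self, name: str) -> None:
        del self.volumes[name]
        self.admin_actions += 1


def run_ephemeral_containers(array: MockArray, count: int, host: str = "node-1") -> None:
    """Give each short-lived container its own volume, then tear it down."""
    for i in range(count):
        vol = f"container-vol-{i}"
        array.create_volume(vol)
        array.connect(vol, host)
        # ... the container runs for a couple of minutes here ...
        array.disconnect(vol)
        array.destroy_volume(vol)


HUMAN_ACTIONS_PER_DAY = 10  # assumed manual rate, from the interview's rough figure

array = MockArray()
run_ephemeral_containers(array, 10_000)
human_days = array.admin_actions / HUMAN_ACTIONS_PER_DAY

print(array.admin_actions)  # 40000 API calls in one simulated day
print(len(array.volumes))   # 0, so no volumes were leaked
print(human_days)           # 4000.0 days of manual work
```

Under these assumptions, one day of container churn would occupy a human admin for more than a decade, which is the arithmetic behind treating automation as the only option.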

JB: How significant a step forward is Purity 6.0 for customers?

Matt Kixmoeller: It’s a proof point of the constant, ongoing innovation in our Evergreen model: when you subscribe to Pure, whether by buying into our Evergreen program or subscribing to Pure-as-a-Service, you’re not only buying the storage of today, you’re subscribing to an ongoing stream of new innovation. Purity 6.0 is one of the largest feature releases we’ve ever done.

The two big features are ActiveDR and FlashArray File Services. On the ActiveDR side, we’re really taking what we’ve done with our top-of-the-line ActiveCluster solution, which allows you to run active-active between two datacenters with zero data loss, and allowing that to be used anywhere. When you’re using ActiveCluster, you basically have to keep your two datacenters relatively close, because you can’t get around the speed of light. It’s good within metro distances. But there are a lot of customers who want that same level of protection, yet fundamentally their DR objective has to be, ‘I want to go between New York and Florida, or between Europe and the US,’ or whatever. ActiveDR gets as close to that as possible over long distances: the data is still sent as quickly as it can be, but we just don’t wait for the acknowledgment that it made it to the other side before acknowledging the I/O. It really brings that highest class of DR protection to longer distances, and it completes our suite of protection.
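The trade-off comes down to when the host gets its acknowledgment. The sketch below is a back-of-the-envelope model under stated assumptions, not a description of how ActiveCluster or ActiveDR are implemented: it uses the common rule of thumb of about 5 microseconds per kilometer for one-way propagation in optical fiber, an assumed 200-microsecond local write, and a hypothetical 2,000 km fiber path standing in for New York to Florida. It ignores switching and protocol overhead.

```python
FIBER_US_PER_KM = 5.0  # rule of thumb: light travels roughly 200,000 km/s in fiber


def round_trip_us(distance_km: float) -> float:
    """Propagation delay for data to reach the remote site and the ack to come back."""
    return 2 * distance_km * FIBER_US_PER_KM


def sync_write_us(local_write_us: float, distance_km: float) -> float:
    """Synchronous replication: the host's write is acknowledged only after the
    remote copy confirms, so every write pays the full round trip."""
    return local_write_us + round_trip_us(distance_km)


def async_write_us(local_write_us: float) -> float:
    """Asynchronous replication: the write is acknowledged once it is safe locally.
    Data is still shipped to the remote site right away; the host just doesn't wait."""
    return local_write_us


LOCAL_WRITE_US = 200.0  # assumed local write latency

for label, km in [("metro", 50), ("New York to Florida (assumed)", 2000)]:
    print(label, sync_write_us(LOCAL_WRITE_US, km), async_write_us(LOCAL_WRITE_US))
# metro 700.0 200.0
# New York to Florida (assumed) 20200.0 200.0
```

At metro distances the synchronous penalty is a fraction of a millisecond, so active-active is practical; at roughly 2,000 km every write would wait about 20 ms longer. Not waiting for the remote acknowledgment removes that penalty, at the cost of a small window of in-flight data, which is why the RPO is near-zero rather than zero.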

The exciting thing about FlashArray File Services is that it really enables Pure to meet the complete market need for file. When we came out with FlashBlade four years ago, we really had in mind building a product that could go after tomorrow’s use cases. It was built for fast file and object, to go after machine-generated data, log data and analytics data, things generated by applications or machines at that kind of scale and pace, rather than human content. Many customers were always asking us, ‘OK, but what about my user files? I’ve had this old filer in the datacenter forever. I want to bring it into the Pure environment.’ They were always asking us, ‘Hey, can I get a lower-end version of FlashBlade, or more of the enterprise features that I expect from a file product for more enterprise use cases?’ Bringing out FlashArray File Services really allows us to solve that other half of the file problem and help customers who want a single storage device that does both block and file, including user-centric file content.

Because it’s the result of our acquisition last year of Compuverde, this first generation is quite a mature offering in terms of capabilities and protocol support. We didn’t develop this from scratch. We built on the ten-year foundation that Compuverde had started. That said, we also were very careful to take our time with this integration and make sure it was a full-fledged integration. If I look at the space of unified storage devices over the years, you had a lot of block storage devices that added some sort of file gateway on top. It was this kind of manageability and performance work that sat on top of an otherwise functional storage array. We didn’t want to do it like that. We wanted the file services to be a true peer of the block services, so we integrated them very deeply in the OS. They’re first-class citizens. They share dedupe globally across the entire array, and they share resiliency, RAID and flash management globally across the entire array, so you don’t have this kind of two-headed monster of ‘I’ve got this thing and this thing,’ where they exist in the same sheet metal but are basically two different systems. This is a fully integrated product.

JB: Other vendors, such as HPE and Dell EMC, are going in the same direction that Pure is headed, such as subscription models and storage-as-a-service. How do you guys differentiate? How are you different from what they’re doing?

Matt Kixmoeller: Number one, I think the results speak for themselves. We actually had the IDC data come out … and in Q1, the overall storage market was significantly down, over 8 percent. We were up 7.7 percent. … I think there is a gap in execution that’s becoming more and more evident. And this isn’t just a Q1 COVID thing. They’ve been shrinking for three to four quarters, depending on the competitor now. We’re opening up a nice gap in terms of sustained execution compared to our large competitors.

In terms of high-level differentiation, I think about it in a couple of different areas. Number one, the discontinuities in the market over the past decade have been flash and cloud. Each of our competitors basically built their products before both of those things and has been trying to add them on. We’ve been fortunate to be able to build for flash and to build for the agility and flexibility that cloud demands, with APIs, fast change rates, etc. You can paper over some of that stuff, but fundamentally, if your product wasn’t designed for these changes, it’s very difficult to adapt it. The second thing I would say is simplicity. Simplicity is just a religion at Pure. It goes into everything we do. It’s easy to say, but the details really matter.

The third thing is as-a-service. This is another area where I think we’re really seeing acceleration in the post-COVID era: customers want more and more flexibility as they realize they don’t know what the future brings. Pure-as-a-Service was designed to offer flexibility on two dimensions. The first dimension is simply the flexibility to bring your consumption up and down. We’ve worked to create a very low entry commitment: 50 terabytes for one year. As long as you sign up for that entry commitment, any consumption above it is completely flexible. You can consume up and consume back down. It’s all pay-per-use. This is quite different from most of the deals our competitors are doing. They’re basically the same three- to five-year lease with a long-term commitment, just financed in a different way, via opex. We’ve worked hard to make Pure-as-a-Service truly a program that’s transactional and easy for everyone to sell, as opposed to a highly customized, per-customer leasing deal. The second dimension of flexibility is the ability to move between on-prem and cloud. This is unique to Pure-as-a-Service: it’s a unified subscription. Say you buy 100 terabytes of Pure-as-a-Service. On day one, we’ll land an array in your datacenter, and you can use all 100 terabytes right there. Two months later, you decide you want to move a certain application and 30 terabytes of data to the cloud. You’re already licensed for our Cloud Block Store, you can use our replication to move the data to the cloud, and you’re off and running without having to pay us any more. You can just move back and forth between those two deployment models. That in particular is something people are struggling with right now: they need to beef up their infrastructure and they’re making investments, but they don’t know how much of it will be on-prem versus cloud long term.
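The two kinds of flexibility can be captured in a few lines of arithmetic. The sketch below is a simplified, hypothetical model, not Pure’s actual pricing: it assumes the committed capacity is always billed at a reserved rate, usage above the commitment is billed at an on-demand rate, and on-prem and cloud capacity count against the same subscription. The rates are made-up round numbers.

```python
def monthly_charge(committed_tb: int, on_prem_tb: int, cloud_tb: int,
                   reserved_rate: int, on_demand_rate: int) -> int:
    """Hypothetical bill for one month of a unified subscription.

    The commitment is always paid; anything consumed above it is pay-per-use.
    On-prem and cloud usage draw down the same subscription.
    """
    used_tb = on_prem_tb + cloud_tb
    overage_tb = max(0, used_tb - committed_tb)
    return committed_tb * reserved_rate + overage_tb * on_demand_rate


RESERVED, ON_DEMAND = 20, 25  # made-up prices per TB per month

# Entry commitment of 50 TB: consumption can go up and back down.
print(monthly_charge(50, 80, 0, RESERVED, ON_DEMAND))    # 1750
print(monthly_charge(50, 40, 0, RESERVED, ON_DEMAND))    # 1000

# A 100 TB subscription before and after moving 30 TB to the cloud.
print(monthly_charge(100, 100, 0, RESERVED, ON_DEMAND))  # 2000
print(monthly_charge(100, 70, 30, RESERVED, ON_DEMAND))  # 2000
```

In this model, moving capacity between on-prem and cloud changes only where the data lives, not the bill, while consumption above the commitment rises and falls with actual use.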

JB: Can you give me an idea of where you see the flash market going? How do you see it evolving over the next year or two?

Matt Kixmoeller: When we started the company, at our very first Accelerate six years ago … the title of my [talk] was the all-flash datacenter. We were talking about how our long-term goal was to get to the all-flash datacenter. Back then, people said, ‘Oh, OK, that’s it. You’re the flash company. You guys like flash.’ That feels so much more tangible now, as people see that flash is about performance, efficiency, reliability and everything else. As costs have come down, we can now very easily make the case that for any performance-centric workload, you’ll save money by being on flash. But now, with FlashArray//C [below] and our QLC-enabled products, we’re really going after the second tier of applications. Finally, we’re seeing a ton of uses of flash for recovery – rapid recovery, we call it. That’s probably the part I wouldn’t have predicted, but it turns out that rapid recovery is absolutely critical.

The risks around ransomware in particular have brought that into the limelight. We have a customer who recently moved to a very modern backup setup. They did everything right: they invested in the most modern technology and had disk-based backup everywhere, and they were unfortunately hit with ransomware. As they went to recover, they found they could recover about 10 VMs a day in an environment of thousands. In the past, the backup and recovery discussion has been around backup speeds and how fast I get everything backed up, but truly having to do a bulk recovery is infrequent. Ransomware is one of the first things that’s actually making everyone realize, ‘Man, I might actually have to recover everything one day. And how long is that going to take?’ Using flash to avoid that disaster is just as important. It’s also a different mindset. A hurricane sweeps through everyone at some level, which kind of gives people the benefit of saying, ‘OK, that was pretty bad.’ But ransomware is a present threat that everybody is now expected to protect themselves against, so you don’t particularly get a pass on that as a team leader.
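The customer’s predicament is simple division. The sketch below assumes a hypothetical environment of 3,000 VMs (the interview says only “thousands”) and the roughly 10 VMs a day the customer could restore; `days_to_recover` and `required_rate` are illustrative helpers, not part of any backup product.

```python
import math


def days_to_recover(vm_count: int, vms_per_day: float) -> int:
    """Whole days needed to restore every VM at a steady restore rate."""
    return math.ceil(vm_count / vms_per_day)


def required_rate(vm_count: int, target_days: int) -> int:
    """VMs per day that must be restored to finish within target_days."""
    return math.ceil(vm_count / target_days)


print(days_to_recover(3_000, 10))  # 300 days at the observed rate
print(required_rate(3_000, 7))     # 429 VMs per day to recover within a week
```

Framed this way, the question shifts from how fast backups run to what restore rate the business can live with, which is the gap rapid recovery on flash is meant to close.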

JB: They shouldn’t be surprised if it happens.

Matt Kixmoeller: You have to architect for expecting it will happen.

JB: When you look at the storage market, disk is still a larger part. How do you see flash and disk rolling out in the coming years?

Matt Kixmoeller: Flash never got less expensive than tier-one disk. It just got close enough that the overall benefits far outweighed the price. I believe the same thing is going to happen at all tiers over time. The thing to understand is that disk keeps getting bigger, but not faster, so the performance per terabyte of disk actually goes down every year. That means the number of applications that can tolerate the speed of disk is just going to shrink, over and over, as time goes by. … Unfortunately, the options for continuing the trajectory of cost reduction and density improvement on disk are just not very attractive for customers. There’ll be large-scale content farms, in situations where performance doesn’t matter at all, that perhaps will remain on disk for quite some time. But anything that’s an application, anything that users actually interact with on some basis, anything that has even the slightest dimension of needing response time and performance, I think that goes all-flash.
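The “bigger but not faster” point can be made concrete as performance per terabyte. The sketch assumes a hard drive delivers a roughly fixed number of random IOPS regardless of capacity (100 here, an illustrative round figure for a 7,200 rpm drive) and treats a workload as disk-friendly only if it needs no more IOPS per terabyte than the drive can supply. The workload figure is hypothetical.

```python
DRIVE_RANDOM_IOPS = 100.0  # assumed: roughly constant across drive capacities


def iops_per_tb(capacity_tb: float, drive_iops: float = DRIVE_RANDOM_IOPS) -> float:
    """Random IOPS available per terabyte stored on one drive."""
    return drive_iops / capacity_tb


def fits_on_disk(required_iops_per_tb: float, capacity_tb: float) -> bool:
    """True if a drive of this size can still serve the workload's I/O density."""
    return iops_per_tb(capacity_tb) >= required_iops_per_tb


for cap in (4, 8, 16):
    print(f"{cap} TB drive: {iops_per_tb(cap)} IOPS/TB")
# 4 TB drive: 25.0 IOPS/TB
# 8 TB drive: 12.5 IOPS/TB
# 16 TB drive: 6.25 IOPS/TB

# A hypothetical workload needing 10 IOPS per TB fits on 8 TB drives but not 16 TB ones.
print(fits_on_disk(10, 8), fits_on_disk(10, 16))  # True False
```

As capacity per drive grows, fewer workloads pass this test, which is the shrinking set of disk-tolerant applications the interview describes.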

JB: What’s the status of NVMe and how will that evolve in the next year or two?

Matt Kixmoeller: We came out with our DirectFlash Modules, which are our own-designed, software-enabled flash modules, several years ago now. But in the early generations of FlashArray//X [below], those were in our higher-end systems, the //X70 and //X90. In our lower-end systems we still used off-the-shelf SSDs, which are smaller. We’ve now taken that architecture across the entire product line, and we don’t ship any SSDs anymore. In addition to great cost dynamics for us and the customers, since we can save money that way, after years of having them in the field we’ve seen a materially lower failure rate as well. So faster, more reliable, less expensive. It just kind of wins on every dimension. That’s why we decided to go across the whole product line.

On the NVMe-over-Fabrics side, it’s still early days in that transition, but it was exciting to see VMware come out with NVMe-over-Fabrics support in its latest release. That will really open up mainstream deployments. Until now, it has largely been cloud-style customers and SaaS [software-as-a-service] customers who have deployed our NVMe solutions. But with VMware now supporting it, I believe it really opens the floodgates for traditional enterprise adoption. The commitment most customers at this point have toward Ethernet is very strong, and NVMe-over-Fabrics just gives Ethernet the performance high ground in terms of storage connectivity.
