One of the common themes – and one could say even the main theme – of The Next Platform is that some of the technologies developed by the high performance supercomputing centers (usually in conjunction with governments and academia), the hyperscalers, the big cloud builders, and a handful of big and innovative enterprises eventually get hardened, commercialized, and pushed out into the larger mainstream of information technology. It is our pleasure to chronicle these migrations and transformations.

We have been watching Vast Data since it uncloaked its high performance Universal Storage in February 2019, which is a new clustered storage that takes an object store running atop low-cost QLC flash and runs its compute and storage elements (which can scale independently unlike in many storage arrays) and lashes them together using an NVM-Express switched fabric that is at the heart of the system. We saw this high-end storage, which was aimed at HPC and AI workloads initially, ramp up, but we knew, as Vast Data obviously knew, that it would have to adopt a wider variety of storage protocols so it could be more widely deployed.

Vast Data needed to do this to live up to the Universal Storage name, and we think that supporting more than the S3 object protocol and the NFS v3 file protocol was necessary. Specifically, we thought that Vast Data had to add support for Server Message Block (SMB) file access, which is commonly used in Windows and Mac environments for both clients and servers. And now, with Version 3 of its Universal Storage stack, Vast Data has done as promised and has created its own SMB layer rather than just dumping the kludgey open source Samba implementation on its object storage software foundation. That expands its total addressable market: Vast Data can move from selling to HPC centers that remember the simplicity of NFS fondly and that don’t want to use parallel file systems unless they have to (and Vast Data will argue that they do not have to) to selling to enterprises that are never going to get rid of SMB file access in their applications.

SMB is in a lot of places in the enterprise. Every hedge fund has MATLAB running against large datasets somewhere in its stack (Square Point Capital, a hedge fund in New York, is a big and referenceable customer), and MATLAB runs on Windows Server and requires SMB file access. Media and entertainment companies also have petabyte-scale storage needs where people do their jobs from workstations and their applications expect to reach data over SMB. And the same holds true for myriad life science applications. Just to name a few. There are plenty of Windows and Linux applications that also access data using some form of SMB, whether it is CIFS or Samba, and there are usually complaints about how some of these implementations of SMB work.

“We didn’t go and get some third party software or rip off Samba to make our SMB implementation,” Jeff Denworth, vice president of products at Vast Data, tells The Next Platform. “We made ours from scratch from day one, and that is not typically what storage companies customarily do. And the reason we didn’t take the easy way out is that if you do that, you don’t get the robust failover experience that enterprise customers want. It is hard work to make sure the SMB customer experience is really solid and not second rate to NFS or S3.”

Universal Storage Version 3, which has been in beta testing for the past three months, also adds encryption for all data at rest, and to keep the underlying hardware as similarly universal as possible, the AES-256 encryption that is being deployed in the Universal Storage controllers runs on generic X86 processor cores, not on any specialized encryption engines either adjacent to the cores on the CPU package or in PCI-Express controllers. Denworth says that “cores are relatively abundant” and “we think of them as kind of free these days,” and moreover that because the compute scales independently from the flash storage capacity in the Universal Storage cluster, customers can add more compute to handle encryption when they want to turn it on and not have to touch the storage servers in the cluster at all. Moreover, all of the storage software that comprises Universal Storage runs in Docker containers and is orchestrated by Kubernetes, and you just want to keep it as generic as possible. We strongly suspect that if customers need to use AES-256 accelerators for some reason, Vast Data could accommodate this, but as long as the encryption is at line rate performance and doesn’t eat too many cores, this should not be a problem.

The third thing that Universal Storage Version 3 offers is asynchronous replication to object storage services running on the big public clouds, such as S3 on Amazon Web Services or Azure Blob Storage on the Microsoft Azure cloud, or to secondary Vast Data clusters running either locally or remotely.

Customers who are on support contracts – and at this point all of them are – will get these new features when they upgrade to Version 3. Vast Data does not want to “nickel and dime” customers as many storage vendors do when they add new features.

Looking ahead, Denworth says that the 20 new features added in Version 3 account for a lot of the core development that will be done, and for the rest of the year the improvements will give the product more fit and finish to make it even more suitable for the enterprise customers who require it. But in 2021, Denworth hints, there is plenty of innovation that will be brought to bear on the product in a future version.

“We think there is a ton of opportunity with this architecture as it pertains to getting a lot more intelligence out of the underlying storage,” explains Denworth, quickly tweaking that thought. “I should say infusing that storage with intelligence so that you get a lot more insight out of it.”

Counting The Customers And The Money

With the update out and the company taking down its third round of venture funding for $100 million a few weeks ago, now seemed a pretty good time to get an update on how the Vast Data business is doing. With that Series C funding round, Vast Data has raised $180 million so far and still has $140 million of it in the bank. The company now has 145 people, up by a factor of 2.3X since last year, and with that promised SMB support, it can chase a $10 billion total addressable market in HPC, AI, and object storage workloads.

That Series C funding, by the way, was not in the works for a long time, but was rather triggered by the financial results that Vast Data turned in for 2019 and investors being eager to get in on the ground floor. When you are a startup and people are throwing money at you during a global pandemic, you take it. The first part of the Series A funding for $15 million, which came in January 2016, had Norwest Venture Partners and 83North kicking in money, and the second piece of Series A for $25 million, which was taken down in March 2018, added in Goldman Sachs and Dell Technologies Capital as well as additional funds from the two prior investors. The Series B round in February 2019 for $40 million was done by Norwest, 83North, and Dell, and the Series C just announced for $100 million had the original four investors participating as well as Next47, Mellanox Capital, Greenfield Partners, and Common Fund. With that funding and the projected growth of the business sustained by that funding, Vast Data now has a valuation of $1.2 billion, which is pretty fast to unicorn status compared to other storage startups. Here is how Denworth charts out the speed, in months, to unicorn status for some familiar storage upstarts:

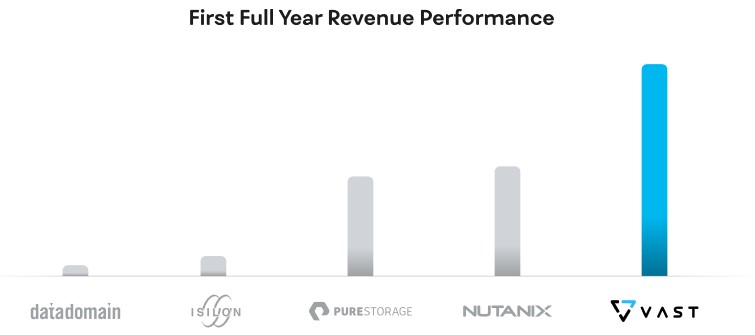

And here is another one showing the relative revenue sizes of storage upstarts in their first full year of business:

That’s a pretty good first year, especially considering that so far Vast Data has only a few dozen customers and sold just over 100 PB of flash capacity last year. This is the very tippy top of the tip of a very large iceberg, and Vast Data thinks it is at a tipping point that is only going to be accelerated now thanks to the addition of SMB to its support for NFS and S3 on its Universal Storage software stack.

Last year, according to Denworth, the average deal size was around $1 million, and the largest deals done were in the range of $5 million. The biggest customers bought in the range of 15 PB to 20 PB of capacity, so there were a bunch of smaller customers doing a few PB of capacity, obviously. But, and here is the important thing, Denworth says it has deals in the exabyte – that’s EB or 1,000 PB – range in negotiation now, and given all of the prospects out there and its very conservative research, development, sales, marketing, and general expense cost control, he adds that the company is trying to get to breakeven and then profitability as fast as it can.

This is something that Pure Storage and Nutanix are still struggling to do, many years after going public.

“We have charted a path to breakeven next year, and we don’t intend to spend all of the money we have raised to get there,” says Denworth. “And the only way that you can do that is if you don’t go down the pyramid and start selling $50,000 and $100,000 systems. You have to sell significant infrastructure to pay for the cost of sale right now. If you look at companies that have come before us, a lot of times they were chasing after a specific segment of the market, but because we can go after all of it, our deal sizes are also larger as a consequence. So, for example, take a media customer that is buying us for media workflows, VMware server virtualization, and Commvault all in one system. And when we go into AI environments, we are not only selling for high performance applications, but we’re also combining that with the capacity tier into one big system so we can [get] a larger deal size than other point solutions.”

Denworth has studied the first three years of Pure Storage, Nutanix, Zoom, and Datadog – the last two being SaaS services for conferencing and systems management, not even storage – and says the unit economics of Vast Data and the capital efficiency of its go-to-market strategies are just a lot higher. Hence its optimism about getting to breakeven quicker than we might expect from a storage unicorn. Or, what Denworth says is actually a storage camel, because that animal is all about being efficient and tough, not pretty and imaginary.
