Intel’s struggles to get its 10 nanometer processors out the door has forced the company to do some serious soul-searching. And while the brain trust there has not given up on Moore’s Law, Intel is not going to rely on it in quite the same way as it has in the past.

The tasking of educating Wall Street analysts about the company’s new approach fell to Intel chief engineering officer Murthy Renduchintala, who talked about the revamped chip manufacturing strategy at the company’s recent Investor Meeting. Renduchintal, who is also the president of the Technology, Systems Architecture & Client Group, let it be known that Intel has learned a few lessons from the 10 nanometer fiasco.

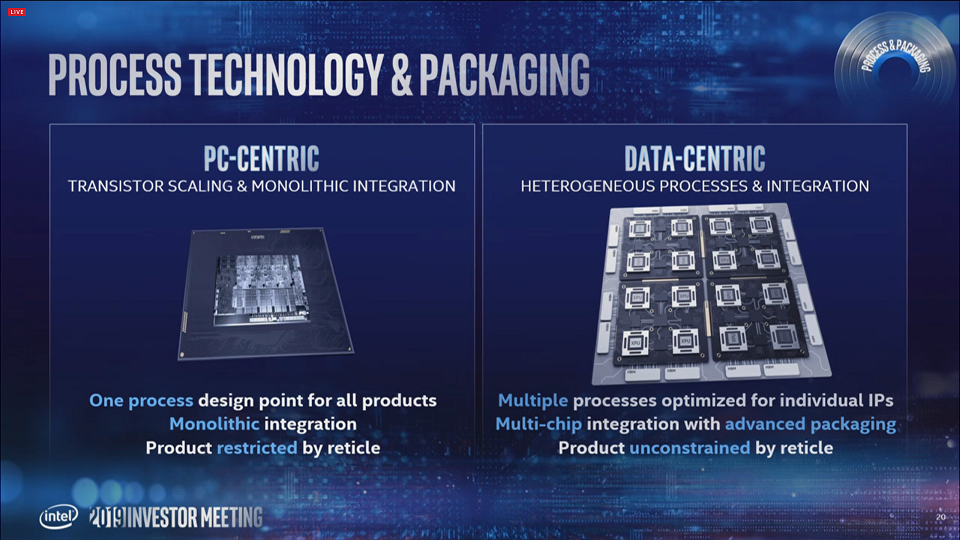

Key to Intel’s new strategy is its package-level integration, which will use the company’s Foveros 3D stacking and Embedded Multi-die Interconnect Bridge (EMIB) technologies to glue together different kinds of chips (chiplets), logic blocks, and controllers in the same package. Renduchintal told the investor crowd that this represents a much more advanced approach than just vanilla multi-chip modules (MCMs) “Our Foveros technology offers a 10X increase in interconnect bandwidth, along with a 6X reduction in interconnect power compared to multichip packaging,” he said.

He outlined a number of advantages of using to this kind of packet-level integration compared to chip-level integration, starting with the fact that the company will be able to take advantage of semiconductor technology advancements significantly faster with this approach. The implication here is that you get better manufacturing yields with smaller chiplets, compared to large monolithic chips, on cutting-edge process nodes.

A related advantage is that not all the package components need to be manufactured on the latest node, which can also lower costs for the platform. In fact, more generic components can even be outsourced to other semiconductor manufacturers, enabling Intel to focus on the IP that differentiates them from their competition.

Intel is not the only one doing this. AMD famously used their own chiplet packaging and interconnect technology with its EPYC processors. That approach likely enabled AMD to jump to Taiwan Semiconductor Manufacturing Corp’s 7nanometer process node a lot quicker than it would have had Intel gone the monolithic die route. In fact, Renduchintal estimates their approach will enable Intel to accelerate the use of a given process node by up to two years as a result of the kind of flexibility afforded by packet-level integration.

Package integration also enable you to build larger platforms compared to the monolithic approach, enabling all sorts of interesting combinations. For Intel, this is especially relevant, given that fact that its silicon portfolio spans CPUs, GPUs, FPGAs, and customized AI ASICs. Renduchintal also maintains that the inherent configurability of this approach means the cadence of product introductions can be accelerated.

Apparently, none of that has curbed Intel’s appetite for aggressive transistor scaling, with Renduchintal noting that its process technology will continue to be a foundational element of the company’s chipmaking strategy. However, he didn’t mince words when it came to admitting that mistakes were made in the 14 nanometer-to-10 nanometer transition.

“It’s no secret that Intel has struggled with 10 nanometers,” he conceded. “And what I have found in discussions with many of you is the perception that Intel’s process innovation has slowed down during this time.”

In Renduchintal’s telling, Intel’s primary transgression with the 10nm effort was overreach. The 2013 plan to bring the new process node to market in 2016, was to sync up a number of technologies all at once, including the company’s Foveros and EMIB technologies. The interplay between those elements, combined with the goal to deliver a 2.7X improvement in transistor density, as well as a number of other semiconductor enhancements, created too many competing goals for the 10 nanometer engineers. “In hindsight, that team took on way too much risk in one step,” said Renduchintal.

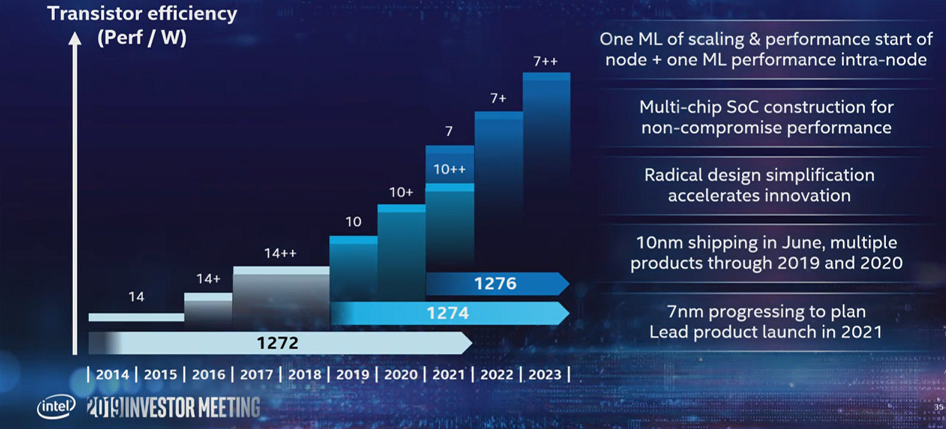

The delay in the 10 nanometer rollout forced Intel to come up with step refinements in the 14 nanometer process node – first 14nm+, followed by 14nm++, as they are abbreviated. What Intel found, according to Renduchintal, was that there was “a ton of untapped performance” that could be harvested from the technology – a 20 percent performance improvement, alone, for the 14nm+ upgrade. The company also found it could use those process refinements as the basis for new products, in the case of the Xeon line, “Cascade Lake” and “Cooper Lake.”

From now on though, Intel plans to do step refinements for each process shrink on purpose, rather than as a mitigation tactic. “We will deliver sustained process advancements between nodes and within a node,” said Renduchintal. “Put another way, we will deliver one Moore’s Law of performance and scaling at the beginning of a node, plus another Moore’s Law of performance within the node.”

If you look at their plans for the 10 nanometer node, that works out to two cycles of Moore’s Law in three years, which is actually back to its original concept of doubling transistor density every 18 months. Whether than actually comes to pass remains to be seen.

With Intel’s current schedule, the 7 nanometer node will debut in 2021, with a 7nm+ kicker in 2022, and a 7nm++ in 2023. That leaves just two years between the first 10 nanometer chips (which will ship next month in the form of the “Ice Lake” client processor) and the first 7 nanometer chips. The 7 nanometer products will also sync up with the Foveros and EMIB technologies and will be the first Intel manufacturing process to employ of extreme ultraviolet (EUV) lithography, which is set to drive transistor etching for future nodes as well.

The first 7 nanometer chip out of the chute will be Intel’s upcoming Xe GPU, which is being aimed at the AI and HPC markets and which we first detailed here. For the investor crowd that doesn’t follow the HPC market closely, Renduchintal reminded them that Xe will power Aurora, the first exascale supercomputer to be installed in the United States in 2021. The new GPU will also use the Foveros technology, although it’s not clear what that actually entails. (It wouldn’t surprise us if a bunch of Xeon cores ended up in the GPU package.)

That fact that Intel is leading its 7 nanometer charge with its first discrete GPU is indicative of the competitive landscape of the HPC/AI accelerator space. Not only has Nvidia captured much of that market already, but with AMD chalking up a big GPU (and CPU) win with the Department of Energy’s Frontier exascale system, Intel has to account for a battle on two fronts.

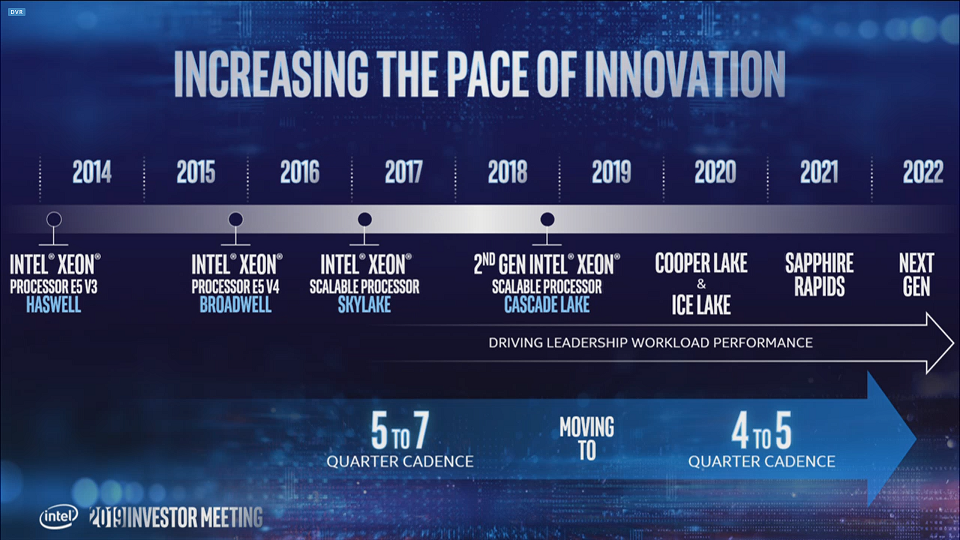

Of course, we’re getting a little ahead of ourselves, since Intel’s 10 nanometer story has yet to play out. Fortunately, Navin Shenoy, general manager of the Data Center Group, was on hand to offer some guidance in this regard. According to Shenoy, Intel’s first 10 nanometer processor for the datacenter, “Ice Lake SP,” is already sampling to some customers and is slated to start shipping in the first half of 2020. That will be followed by the next-generation Xeon, codenamed “Sapphire Rapids,” in 2021. Give that timeframe, its looks like this will be CPU that will end up in Aurora, alongside the aforementioned Xe GPUs.

If all of this sounds fast-paced, Shenoy also let it be known that starting now, the cadence of processor rollouts will be increased from the current five to seven quarters to four to five quarters. Which means Intel will be cranking out new chips in a given product line essentially every year, whether there’s a new process node to land on or not. The rationale for all this rapid-fire chipmaking is that the total addressable datacenter market is growing so fast – Intel pegs it at $223 billion by 2023 – there will be opportunities aplenty for new silicon.

“In a world where there is near insatiable appetite for computing, and in a world where computers are in every increasing demand, we are going to pick up the pace,” said Shenoy.

So, Intel will be able to make just cpu cores on 10nM, and squeeze them in betwEen the defects?

First 8 weeks of Xeon Cascade Lake channel supply data here:

https://seekingalpha.com/instablog/5030701-mike-bruzzone/5303478-amd-and-intel-market-share-today

Top three XCL SKUs by volume; 5218 16C, 6248 20C, 6240 18C, and 23 others ranked by channel availability. Plus XSL full run highlight and commercial market x86 CPU share for the week.

Mike Bruzzone, Camp Marketing

I’d like one of those chip IP analysis firms with access to their own Electron Microscope, Top End Optical Microscopes also, to look at the relative numbers of 2, 3, or 4 Fin transistors that the CPU/GPU/Other Processor makers make use of in their actual respective processor’s Tapeout.

So that’s maybe some Trained AI running an Electron Microscope/Optical Microscope based automated Fin/transistor counter that can get a rough estimate of the numbers 2 fins, 3 fins, 4/higher fins transistors that are made use of on any CPU’s/GPU’s/Other Processor’s design/tapeout. One can drive 4 fin transistors to higher overall switching speeds using more fins per transistor for better drive current and other electrical metrics compared to 3 fin and 2 fin transistors.

So Intel on their 14nm+++(Whatever) process node refresh could have been favoring more 3/4 fin transistors at the cost of some density/larger die area needed to get the clock speeds higher. Of course that also includes deeper instruction pipelines etc. as well that take more transistors to implement.

For more Mobile oriented processors it’s easier to go with denser 7.5T libraries at say 10 fins per cell rather than 9.5T libraries and 12 fins per cell and get more 2/3 fin transistors on 7.5T libraries and mobile chips are clocked lower anyways. And Globalfoundries’ 12nm node made use of this less than half node design library option for their customers over their 14nm/Licensed from Samsung node. Remember that AMD at 28nm(GF’s Node) on its final Excavator core Designs for its Bristol Ridge APU platform that made use of GPU style automated design libraries on the Excavator CPU core’s layout and netted around a 30% CPU core area savings on that rather long in the tooth at that time, 28nm GF process node. Of course that reworking of the CPU core’s layout/verilog HDL required a new round of verification/certification for the Bristol Ridge/Excavator based SKUs but they did get some performance improvements also in addition to more CPU cores/Integrated graphics in less die area, mostly from the CPU core layout reworking.

From what I have read concerning Intel’s 10nm problems it was partly because Intel tried for too much density scaling at first and had to back off somewhat on that and fix some other issues also. But maybe for 7nm Intel will be able to get that done on time without any more delay because even at Intel’s 7nm timeline Intel will still be chasing Samsung’s 5nm and TSMC’s 5nm/5nm+ offerings.

And there are rumors of Intel Partnering with some Third party chip fab for fabrication of Intel’s discrete GPU designs that are supposed to begin arriving sometime in the 2020/later time frame. That probably will not include any on Intel’s CPU/Integrated Graphics offerings but the third party fabs(Samsung, TSMC, GF for 14/12nm only GF’s is out of the 7nm/below race) have plenty of experience with large GPU die production.

Moore’s law is more about the economics of doubling transistor counts every 18-24 months and not really the laws of physics running out on the ability to go smaller on process node shrinks. So that main economic part of Moore’s law that’s running out means that some fabs like GF could not afford to finance their 7nm process node development costs and had to drop out of the leading edge fab process node contest. So only the deepest pockets survive at 7nm and below, and EUV coming into its own, and only the third party chip fabs companies with the largest customer base will have the economy of scale on their side for 7nm smaller process node market competition.

Intel owns its own fabs and every little delay really costs them some serious money as chip fabs that are idle still run up the revenue draining upkeep costs and any potential lost sales or lost market share that comes from parts shortages in the time of great demand. Intel’s main competition sure is benefiting from some parts of that failed Intel 10nm timeline in the x86 ISA processor market that’s currently the largest for Desktop PCs/Laptops and Servers/HPC also.

AMD’s financial necessity drove that die/chiplet( Zen/Zeppelin Die for Epyc/Naples and Ryzen 1000-desktop-CPUs and 7nm CPU Chiplets/14nm I/0 Die for Zen-2 for Epyc/Rome and Ryzen 3000-Desktop CPUs) innovative direction as a matter of survival for AMD. And that paid off many times over in Die/Wafer yields at 14nm and now at 7nm/below where future sales/revenue growth is assured for AMD now and in the future.

Intel’s 10nm woes are still occurring and 7nm will not put Intel instantly ahead of the third party fabs. Intel has been more creative with it’s packaging IP, EMIB/Other IP, and if only Intel had focused more on its new process node development and also keeping online more Wafer Starts capacity at 14nm. Intel should have focused more on its core business and less on those mobile market/IOT(Edge devices and Phones/5G) and more on the servers that back all that Mobile/IOT up.

Intel’s consumer PC, and even more so for laptop, market sales are really being more affected on the CPU supply chain shortage end than than Intel’s Server/HPC CPU supply chain that Intel has been prioritizing during the Wafer Starts at 14nm, waiting in 10nm to get fixed, fab capacity shortage that will not be fully over with until the 2020 time frame.

As it stands for Intel and Moore’s law the competition has made some very strategic market inroads into the HPC/Exascale markets(Both AMD and OpenPower/Nvidia) and also the x86 PC/Laptop market. And Intel’s lead in fab process node is behind even Moore’s economics with TSMC/Samsung now ahead for the next few years, and very crucial years at that. Cloud services and AI growth will continue to accelerate relative to other processor markets that are maturing or already mature and Intel is still going to be coming from behind with its current process node timelines still not enough.