Containerized high performance computing is fast becoming one of the more popular ways of running HPC workloads. And perhaps nowhere is this more apparent than in the realm of GPU computing, where Nvidia and its partners have devoted considerable attention to making this method as seamless as possible.

Seamlessness is really the whole idea behind containers. By surrounding an application in its own software cocoon, containers are able to make workloads portable across a wide range of systems, both on-premises and in cloud computing environments. This is especially advantageous in the HPC realm, given the complexity of the software environment and the relatively low supply of infrastructure for these codes relative to user demand.

The complexity of HPC applications stems from the fact that they’re dependent on a lot of different supporting libraries – think MPI, OpenMP, and Fast Fourier Transform packages – that tend to be updated on a fairly regular basis. By bundling the application with the specific library versions it needs, containers isolate it from whatever happens to be installed on the host, which makes it much easier for users to run their codes on the local machine (or even farm them out to other systems or cloud platforms). No more waiting for a system administrator to install the needed application packages and supporting libraries. Essentially, the containers automate away the manual sysadmin function.

This is all the more appreciated in HPC set-ups, where GPUs add another layer of software complexity, which, in turn, has provided an extra bit of motivation for Nvidia to embrace the container model. The company’s efforts in this area have centered on the Nvidia GPU Cloud (NGC), which encompasses its own registry of GPU-based containers. The only significant drawback is that compatibility is limited to Nvidia GPUs of fairly recent vintage, specifically the Pascal-generation and Volta-generation processors.

Since NGC was launched in 2017, the registry catalog has grown to 41 containers, about half of which are aimed at users of deep learning and machine learning – primarily frameworks, support libraries, and development platforms. NGC supports the most popular frameworks, including Caffe, TensorFlow, Torch, Chainer, MXNet, Microsoft Cognitive Toolkit, and Theano, as well as Nvidia’s own TensorRT. The field has become so dynamic that these frameworks and their associated containers are often updated on a monthly basis.

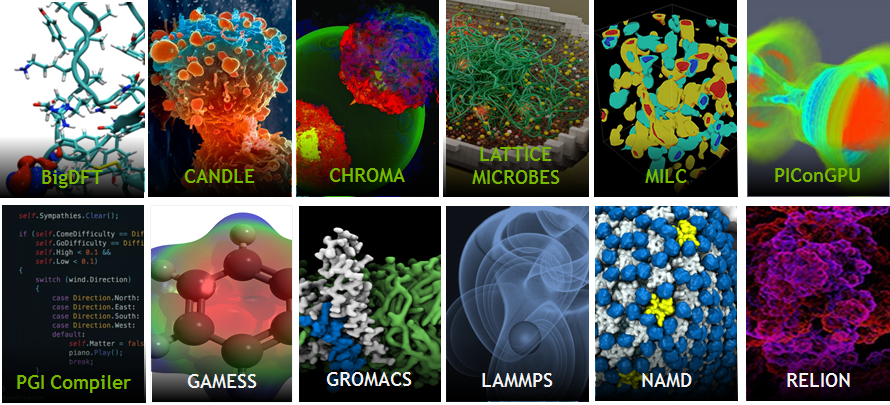

For the more traditional high performance computing crowd, the registry offers containers for scientific HPC codes. As of this writing, there are 14 such applications, not counting the half dozen devoted specifically to HPC visualization.

Some of the most popular application suites in this category include GROMACS, NAMD, LAMMPS, CHROMA, and GAMESS. Keep in mind that there are more than 400 scientific computing applications currently supported by Nvidia for GPU acceleration, so it’s safe to assume that the HPC containers in NGC are just getting started.

Nvidia also offers a handy container for PGI compilers and related tools, which can be used by developers for building HPC applications. It includes support for OpenACC, OpenMP and CUDA Fortran.

The catalyst for all this has been Singularity, a container technology that has been embraced by high performance computing users and is the one used by NGC. As Dell EMC engineer Nishanth Dandapanthula wrote in an article published here in 2018, application performance under Singularity tends to be on par with that of execution on bare metal. In fact, Dandapanthula reported less than a two percent performance penalty on the finite element analysis code LS-DYNA running under Singularity versus a bare metal cluster, noting that this was within the run-to-run variation. The deep learning Resnet50 code running on Nvidia V100 GPUs showed the same sub-two-percent performance delta.
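To make the "within the run-to-run variation" comparison concrete, here is a minimal Python sketch of how such a check might be computed. The timings are invented purely for illustration and are not Dandapanthula's measurements; the function names and the choice of coefficient of variation as the noise measure are assumptions as well.

```python
from statistics import mean, stdev


def relative_overhead(bare_metal_times, container_times):
    """Percent slowdown of the mean containerized runtime versus bare metal."""
    bare = mean(bare_metal_times)
    cont = mean(container_times)
    return (cont - bare) / bare * 100.0


def run_to_run_variation(times):
    """Coefficient of variation (sample stdev / mean) as a percentage."""
    return stdev(times) / mean(times) * 100.0


# Hypothetical wall-clock times in seconds, invented for illustration only.
bare_metal = [100.0, 102.0, 98.0]
container = [101.0, 103.0, 99.0]

overhead = relative_overhead(bare_metal, container)
noise = run_to_run_variation(bare_metal)

print(f"container overhead: {overhead:.2f}%")
print(f"bare metal run-to-run variation: {noise:.2f}%")
print("within noise" if overhead <= noise else "exceeds noise")
```

If the measured overhead is no larger than the spread between repeated bare metal runs, the container penalty cannot be distinguished from ordinary noise, which is the sense in which the reported delta is described as negligible.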

A real-world use case exists at Clemson University, which is using NGC containers in conjunction with its own Palmetto HPC cluster. Palmetto has more than 2,000 Nvidia GPUs, some of which are the container-compatible V100s. Like any good academic HPC cluster, the system is used for scientific research in areas such as molecular dynamics, computational fluid dynamics, quantum chemistry, and deep learning. And like most systems of this ilk, it is in high demand from researchers.

Unfortunately, for these types of environments, system administration is a full-time job. Researchers typically want the latest and greatest version of applications and libraries installed in order to take advantage of fixed bugs, new features, and performance tweaks. Some users, though, for reasons of compatibility or toolset limitations, are tied to older versions of software. This makes administration a challenge, and in cases where different versions of the same libraries must co-exist, nearly impossible.

By adopting NGC containers at Clemson, researchers were able to avoid these kinds of conflicts. More importantly, they were able to bypass the fuss of system administration and bring up applications on their own using the relevant NGC container. According to Ashwin Srinath, a research facilitator in Clemson’s IT department, by spending less time on installing software, his group was able to devote more time to help researchers improve their workflows and parallelize their codes.

He noted that a half-day installation of GROMACS, a widely used molecular dynamics code, was reduced to minutes by using the containerized version. Srinath said that ratio of time savings is fairly typical when you compare bare metal installations with NGC containers. For trickier installations, though, even more time can be recovered. Srinath recalled it took two weeks to install Caffe on the cluster the first time he tried, versus a few minutes when it was brought in via a container.
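For a sense of why the containerized route takes minutes, the workflow typically comes down to two commands: pull the image, then run the application inside it. The Python sketch below only assembles those command lines and does not execute them. It assumes Singularity 3.x command syntax; the `<tag>` placeholder, the output file name, and the `gmx --version` invocation are illustrative assumptions rather than details from Clemson's setup, and registry authentication is left out.

```python
import shlex


def singularity_commands(image_ref, sif_name, app_args):
    """Build (pull, run) command lists for a registry-hosted container image.

    image_ref: registry path such as "nvcr.io/hpc/gromacs:<tag>" (tag assumed)
    sif_name:  local file name for the pulled image (hypothetical)
    app_args:  command to run inside the container
    """
    # Fetch the image from a Docker-style registry into a local image file.
    pull = ["singularity", "pull", sif_name, f"docker://{image_ref}"]
    # --nv exposes the host's Nvidia driver and GPUs inside the container.
    run = ["singularity", "exec", "--nv", sif_name, *app_args]
    return pull, run


# "<tag>" is a placeholder; the real tag depends on the registry listing.
pull_cmd, run_cmd = singularity_commands(
    "nvcr.io/hpc/gromacs:<tag>", "gromacs.sif", ["gmx", "--version"]
)

# Print shell-quoted versions a user could paste into a terminal.
print(shlex.join(pull_cmd))
print(shlex.join(run_cmd))
```

The point of the sketch is that no compilers, MPI builds, or CUDA toolkits are installed on the host along the way, which is where the hours of a bare metal installation usually go.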

Besides in-house clusters like Palmetto, the NGC containers can also be run on GPU-accelerated servers in most of the big public clouds, including Amazon Web Services, Microsoft Azure, the Google Cloud Platform and the Oracle Cloud. Nvidia has also qualified a number of HPC systems that it deems “NGC-Ready.” At present, they include the Cray CS Storm NX, HPE Apollo 6500, Dell EMC PowerEdge C4140, Atos BullSequana X1125, Cisco UCS C480ML, and Supermicro SYS-4029GP-TVRT. The containers are also qualified for Nvidia’s own DGX platform.

While it might seem like a lot of trouble to continually integrate updated software for the NGC containers and maintain compatibility with the growing number of hardware environments, this approach is very much in Nvidia’s DNA. More than anything else, GPU computing at Nvidia has been a software-centric endeavor, the idea being to make it as simple and painless as possible for users to take advantage of its coprocessors. Thus far, it’s proven to be a winning strategy.
