What if a hyperscale rack designer decided not to locally optimize legacy form factors for thermal management, but instead to start over and design a rack around thermal efficiency? That’s not really new; many products and experiments already point in this direction. But what if the designer decided to optimize air cooling as the heat transfer mechanism, instead of using water or more exotic liquids? Would you question their sanity?

We met David Binger, the chief technology officer at Forced Physics, and Terri Frits, the company’s vice president of sales and marketing, at the recent Colo+Cloud conference in Dallas. They brought a functional demonstration of JouleForce Conductor, the company’s fully passive air cooling solution, plus a static display unit. By “fully passive” we mean that the Conductor has no moving parts (no integrated fan) and no integrated closed-loop liquid circulation to help spread heat to its fins. Using large, efficient, reliable industrial fans, a purpose-built rack of Conductor-based server modules can cool a significant compute cluster using ambient air anywhere on Earth without using air conditioning or evaporative cooling.

The fundamental challenge for air cooling servers is perspective. If you assume you can’t radically redesign a rack, you are stuck with trying to optimize the airflow you have been given. Forced Physics started by designing a modular, highly optimized air-based heat transfer system, then they designed a rack full of servers around it. It is a change of perspective on optimizing datacenter thermal design using air cooling.

This is exactly what we see happening with rack designs based on oil and engineered fluids, because those fluid designers must think outside of legacy rack architecture. Water-based liquid cooling systems mostly try to use the legacy air volume more efficiently. But water-based systems add a lot of complexity to a rack, plus a lot of sensors to detect when the complexity breaks down. What makes Forced Physics worth a look is that they radically redesigned a server rack using simple building blocks and freely available air.

Heavy Metal Air Cooling

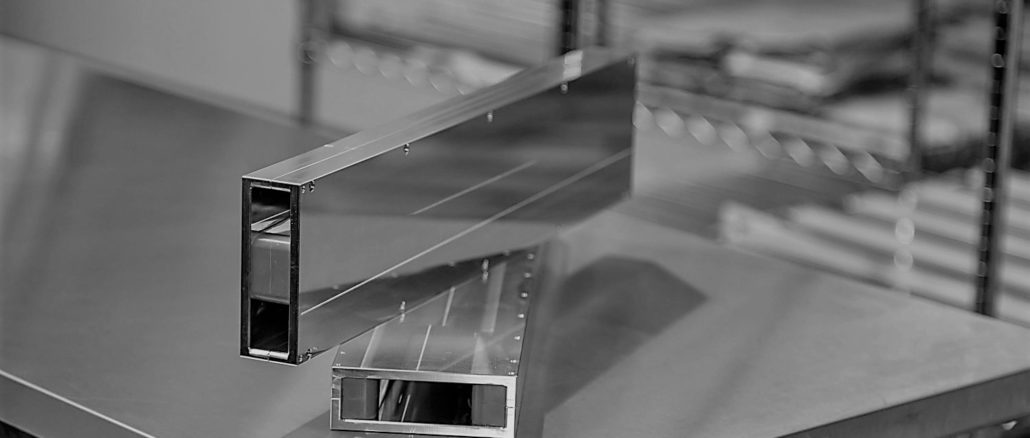

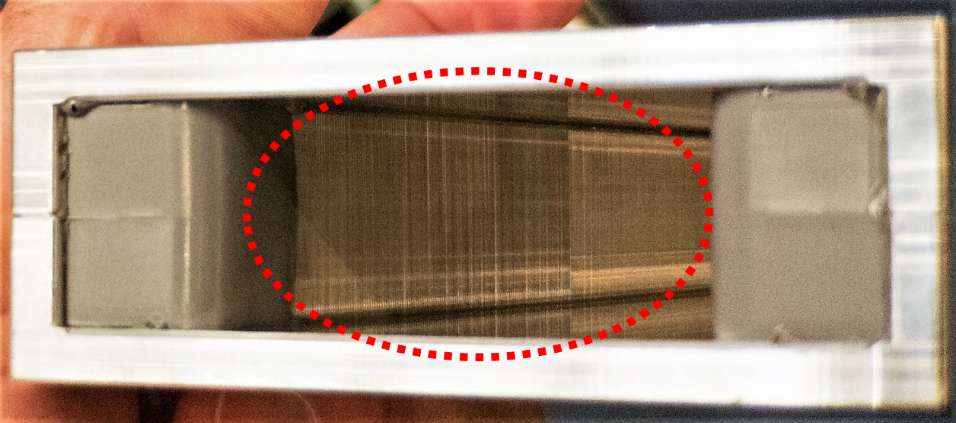

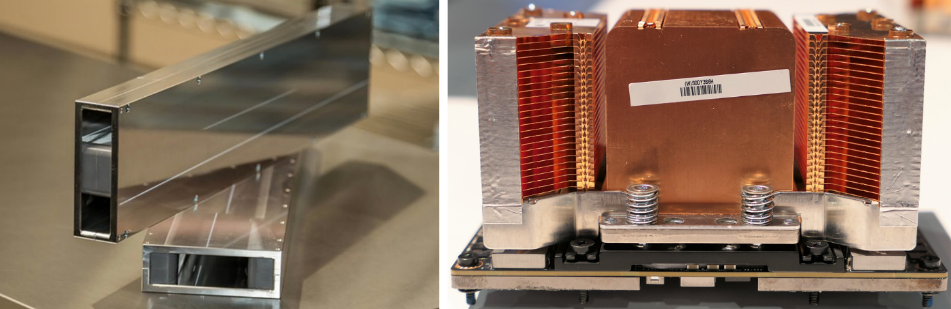

Forced Physics’ JouleForce Conductor is a 20 inch deep/long (508 mm), 6 pound (2.7 kg) tube of milled aluminum containing about 3,000 small radiator blades arranged perpendicular to the end-to-end airflow in the tube. The Conductor tube is 4.25 inches (108 mm) wide and 1.5 inches (38 mm) high.

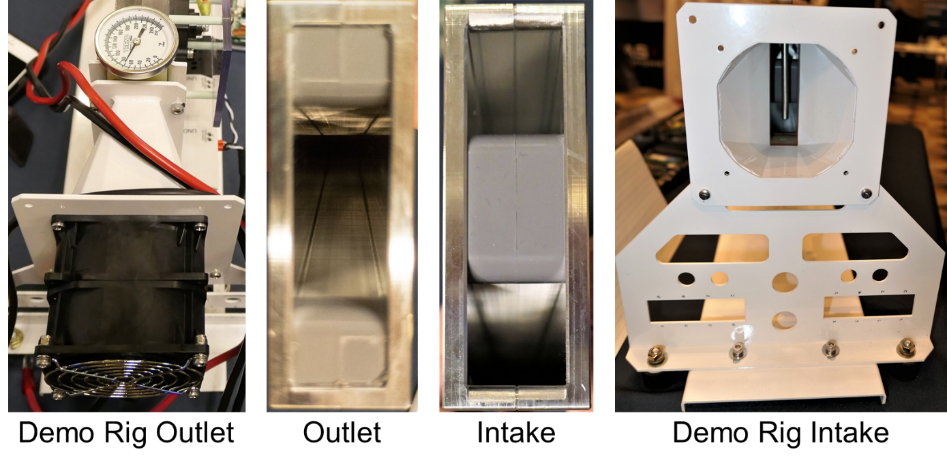

The new science in the JouleForce Conductor lies in the arrangement and spacing of the blades and in the micro-channel gaps between them. The radiators are configured in a V-shaped array, with half (about 1,500) mounted on each side of the ‘V’. The Conductor’s intake splits the airflow into two streams flowing along the outside of the V-shaped blade array, which forces the air through the micro-channels between the blades and out through the wide mouth of the ‘V’.

JouleForce Conductor depends solely on negative pressure. It pulls air through the tube, in stark contrast to traditional servers that both push and pull air through a server chassis. Pulling air through the tube creates less turbulent airflow than pushing air through the tube and its blades.

Forced Physics claims its passive JouleForce Conductor tube can dissipate 1 kW of thermal energy, using ambient air temperatures found anywhere on Earth. That would be more than enough to cool a modern dual-socket Xeon server loaded with memory. It should be enough to cool a power-efficient single-socket AMD Epyc or Marvell Arm server with a bunch of memory plus two 350 watt GPU or FPGA compute accelerators.

The Conductor is rated to dissipate 1 kW at up to 120°F (49°C), high enough to cover ambient air temperatures in Forced Physics’ hometown of Phoenix, Arizona. At 120°F, the required fan energy is about 10 percent of the thermal load, or about 100 watts. With more fan energy, the maximum ambient temperature can be higher – Forced Physics used 140°F (60°C) as an example. And with cooler ambient air or a chilled air source, the Conductor can dissipate much more heat.
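The fan-energy figure above can be restated as a cooling overhead. The following is a minimal sketch, assuming the quoted 10 percent applies to a single 1 kW Conductor and that fans are the only cooling cost; it ignores power conversion losses and anything else a real facility would count. The function names are hypothetical.

```python
def fan_power_w(thermal_load_w: float, fan_fraction: float = 0.10) -> float:
    """Fan power for a given heat load, using the article's ~10% figure at 120°F."""
    return thermal_load_w * fan_fraction


def cooling_overhead_ratio(thermal_load_w: float, fan_fraction: float = 0.10) -> float:
    """(load + fan power) / load: a PUE-like ratio that counts fans only."""
    total_w = thermal_load_w + fan_power_w(thermal_load_w, fan_fraction)
    return total_w / thermal_load_w


if __name__ == "__main__":
    load_w = 1000.0  # one Conductor at its rated 1 kW
    print(f"fan power: {fan_power_w(load_w):.0f} W")
    print(f"overhead ratio (fans only): {cooling_overhead_ratio(load_w):.2f}")
```

At cooler intake temperatures the fan fraction should be lower, so this ratio is best read as a worst case for the rated 120°F condition.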

Forced Physics has been granted three US patents that bear on this design (8,414,847, 8,986,627, and 10,113,774). It has waited until now to unveil the design so that international patents had time to be granted. Earlier Forced Physics patents cover air-based turbine innovations. It looks like the patented JouleForce technology grew out of applying turbine blade design to a different problem, one where the blades stay put in a moving air flow.

Demonstration System

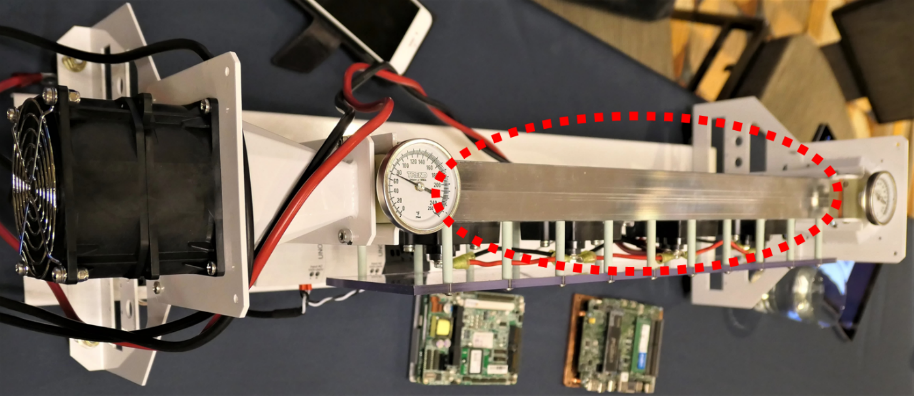

The demonstration unit uses heaters placed directly on the Conductor to simulate an electronics thermal load. Other than the thermometer gauges, the fans are the only moving parts in the demo system.

At full load of 1 kW, with an intake ambient air temperature of 110°F (43°C), the outlet air temperature from the Conductor is 150°F (66°C). This 40°F (22°C) temperature rise dissipates 1 kW of power without using any liquid circulation or integrated fans.

For this demo, Forced Physics slows the fans for lower air intake temperatures, saving power while keeping the outlet temperature constant. For example, with 70°F (21°C) intake temperature, fan speed is set to give an 80°F (44°C) temperature rise, yielding a consistent outlet temperature of 150°F (66°C).
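The demo figures above imply how much air has to move through one Conductor. The following is a rough sketch using the sensible-heat balance P = ṁ · c_p · ΔT. The specific heat and density of air are assumed textbook values, not Forced Physics data, and the function names are hypothetical. Note that a temperature difference in °F converts to kelvin with the 5/9 factor alone, with no 32-degree offset.

```python
# Sensible-heat balance for air: P = m_dot * cp * dT
CP_AIR = 1005.0       # J/(kg*K), approximate specific heat of dry air (assumption)
RHO_AIR = 1.1         # kg/m^3, assumed density of warm air near sea level
M3S_TO_CFM = 2118.88  # cubic metres per second -> cubic feet per minute


def delta_f_to_k(delta_f: float) -> float:
    """Convert a temperature *difference* from °F to kelvin (no 32° offset)."""
    return delta_f * 5.0 / 9.0


def required_airflow(power_w: float, rise_f: float) -> tuple[float, float]:
    """Return (mass flow in kg/s, volume flow in CFM) needed to carry power_w
    away with an inlet-to-outlet air temperature rise of rise_f (°F)."""
    rise_k = delta_f_to_k(rise_f)
    mass_flow = power_w / (CP_AIR * rise_k)
    volume_flow_cfm = mass_flow / RHO_AIR * M3S_TO_CFM
    return mass_flow, volume_flow_cfm


if __name__ == "__main__":
    # 110°F intake -> 40°F rise; 70°F intake -> 80°F rise; both exit at 150°F.
    for rise_f in (40.0, 80.0):
        mass_flow, cfm = required_airflow(1000.0, rise_f)
        print(f"rise {rise_f:.0f}°F: {mass_flow:.4f} kg/s, about {cfm:.0f} CFM")
```

Under these assumptions, doubling the allowed temperature rise halves the required airflow, which is why the fans can slow down when intake air is cooler.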

This gives rack designers several degrees of freedom. They can manage inlet temperature, fan power consumption and outlet temperature separately, and dynamically, if they wish. Binger mentioned that providing chilled intake air can double the thermal dissipation load from 1 kW to 2 kW.

Remember that the fans on the demonstration system pull air through the Conductor by creating negative pressure; they do not push air through it.

Rethinking Air-Cooled Racks

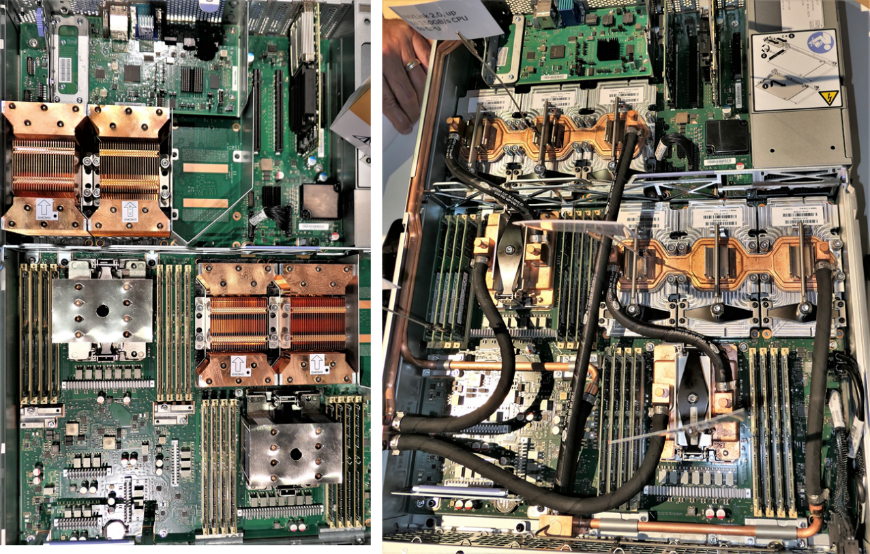

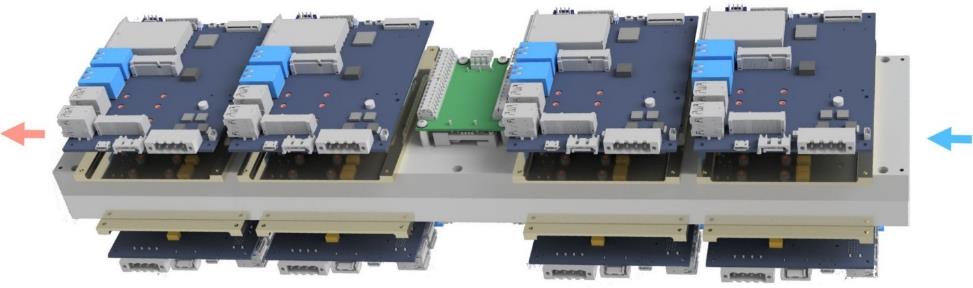

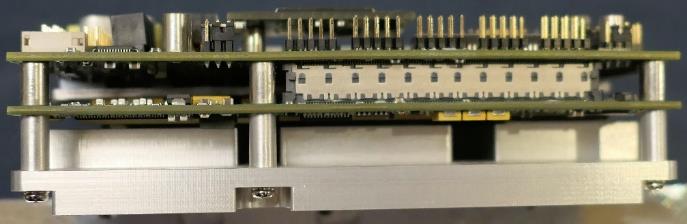

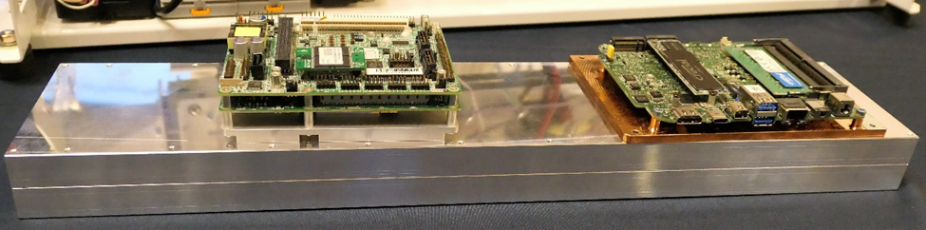

Forced Physics starts with a microserver-based design built on Diamond Systems COM Express Type 7 boards. Each microserver module mounts eight of these 55 W small form factor boards on a JouleForce Conductor, four boards to each side, for a total of about 500 W power consumption. Each Diamond Systems board hosts a 12-core Xeon-D 1500 series processor.

The JouleForce Conductor assembly is packaged in a 6 inch (152 mm) by 7 inch (178 mm) cross section module, about 26 inches (660 mm) long. Six of these modules can fit side-by-side into a modular sled. Power is delivered via a modular backplane at the back of the modular sled. The power module is slotted to fit around the JouleForce Conductor outlet port without interfering with airflow.

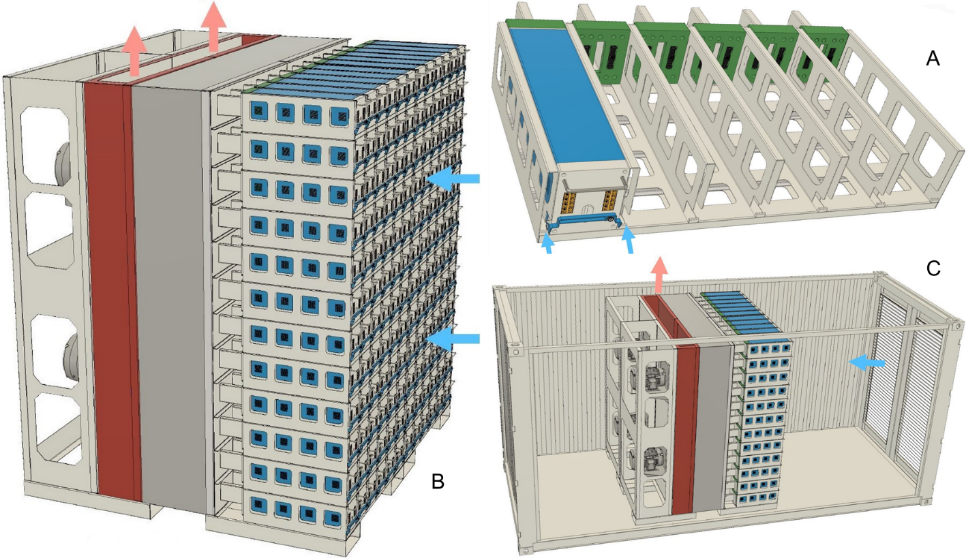

Twelve of these sleds can be stacked into a rack configuration. Each JouleForce rack is 3.74 feet (1.14 m) wide and 8 feet (2.44 m) tall, holding 12 rows of 6 modules, for 72 modules dissipating up to 72 kW of power. Including power delivery, fans, and air ducting, a rack is about 8 feet deep.

Two racks side-by-side form roughly an 8-foot cube and fill a standard 20-foot long “High” (taller than the base spec) ISO shipping container side-to-side and top-to-bottom. A 20-foot High ISO shipping container is 8 feet (2.44 m) wide, 9.5 feet (2.9 m) high, and 20 feet (6.06 m) long. The two racks can dissipate up to 144 kW of power.

The only moving parts in the two server racks are four industrial sized fans, and the fan motors are located outside of the hot duct. The only consumable resources are air filters.

144 kW is a lot of compute capability in a standard shipping container that only needs ambient airflow, power delivery, and network connectivity to be fully operational. There is room in a 40-foot High ISO container to put another two racks back-to-back with the first pair and double the power dissipation to 288 kW, with some thought given to keeping the fan motors cool.
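The rack and container figures above follow from simple multiplication. The following is a small sketch of that arithmetic, assuming every module runs at the Conductor’s rated 1 kW. The `RackLayout` class and `container_power_kw` function are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RackLayout:
    """Module counts quoted in the article; 1 kW per module is the rated maximum."""
    sleds: int = 12
    modules_per_sled: int = 6
    watts_per_module: float = 1000.0

    @property
    def modules(self) -> int:
        return self.sleds * self.modules_per_sled

    @property
    def power_kw(self) -> float:
        return self.modules * self.watts_per_module / 1000.0


def container_power_kw(rack: RackLayout, racks: int) -> float:
    """Total heat load for a container holding the given number of racks."""
    return rack.power_kw * racks


if __name__ == "__main__":
    rack = RackLayout()
    print(f"modules per rack: {rack.modules}")
    print(f"one rack: {rack.power_kw:.0f} kW")
    print(f"20-foot container, 2 racks: {container_power_kw(rack, 2):.0f} kW")
    print(f"40-foot container, 4 racks: {container_power_kw(rack, 4):.0f} kW")
```

With the microserver modules described earlier, which draw about 500 W each, the same layout would run at roughly half these totals.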

If you want to compute at the edge of the network, Forced Physics enables dropping a bunch of compute power into seriously harsh and rugged environments. Other companies build 72 to 144 kW generators into similarly sized ISO containers, so transportable power is a viable option.

What If I Like My Legacy Infrastructure?

Redesigning a telco rack using JouleForce technology in the same datacenter volume as the CG-OpenRack-19 rack would enable clever rack designers to put four JouleForce Conductor based server modules side-by-side. They could then stack these four-module chassis 6 deep, for a total of 24 modules. Standard power supply to the rack is 27 kW, so 24 kW of power dissipation leaves both power budget and vertical rack space for network and power delivery gear. There is enough depth in the CG-OpenRack-19 specification to supply front air filters (if needed in a filtered air server room) and rear negative pressure generation. Hot-aisle heat ducting would not be needed in a temperature-controlled server room.
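The power budget for this hypothetical telco rack can be checked in a few lines. This is a sketch only, assuming 1 kW per module and the 27 kW rack supply quoted above; the function names are made up for illustration.

```python
def rack_load_kw(modules_wide: int, chassis_high: int, kw_per_module: float = 1.0) -> float:
    """Heat load for a rack of modules_wide x chassis_high Conductor modules."""
    return modules_wide * chassis_high * kw_per_module


def headroom_kw(supply_kw: float, load_kw: float) -> float:
    """Power left over for network and power delivery gear."""
    return supply_kw - load_kw


if __name__ == "__main__":
    load = rack_load_kw(modules_wide=4, chassis_high=6)  # 24 modules
    spare = headroom_kw(supply_kw=27.0, load_kw=load)
    print(f"module load: {load:.0f} kW, headroom: {spare:.0f} kW")
```

Fan power has to come out of that headroom as well. At the roughly 10 percent fan overhead quoted for 120°F intake air, the fans alone would take about 2.4 kW, although a temperature-controlled server room should need considerably less.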

We think this design challenge would not be hard to solve. Module designs might mount only one 500 W server node to each side of a Conductor or one 1 kW server node to only one side of a Conductor.

However, to create a sustainable datacenter market, Forced Physics will have to create a partner program with server motherboard manufacturers. We recommend that the JouleForce partner program enable a wide range of motherboards and compute capabilities for standard JouleForce technology-based modules. Hyperscale datacenters are all about component supplier flexibility.

Operational Considerations

While the demo system uses a couple of large-ish but chassis-sized fans, better economies of scale can be reached with large form factor industrial fans. However, any fans used in a JouleForce technology system will have to withstand hot-aisle temperatures, perhaps hotter depending on application.

JouleForce Conductor requires MERV 13 air filtration, which is a common datacenter air filtration standard and is even used in residential HVAC systems. If JouleForce technology is being used in remote applications, then changing air filters will be the biggest periodic maintenance consideration.

To maintain a fully passive thermal design, systems designers and architects need to plan on using a lot of thermally conductive material. Metals are among the best conductors and the lowest cost choices. Boards designed specifically for JouleForce Conductor use should help reduce the amount and cost of materials used.

A rack full of JouleForce Conductors and requisite passive heat spreaders and other materials-based thermal conducting mass will be heavy. Planning for these racks will likely require the same heavy-duty flooring and types of racks as used in water-cooled systems.

A knock-on effect of creating negative pressure using large format fans is that a JouleForce technology server or an entire rack should be orders of magnitude quieter than their traditional server equivalents. Low complexity, no moving parts, and slower thermal changes should lower the failure rates and extend the useful life of JouleForce technology-based servers.

Life at the Edge

Rethinking air-cooling for datacenters was a big bet. We think Forced Physics’ focus on a shipping container form factor is a great idea for edge computing deployments. It will enable Forced Physics to earn vendor consideration in a growing application class, where it solves major operational pain points. And it is a good demonstration of a dense, quiet, and reliable design philosophy.

Hyperscalers are always interested in lowering energy costs and should be interested in avoiding water-cooling wherever possible. Forced Physics is manufacturing beta quantities of its JouleForce Conductor and says it is already talking with hyperscale designers.

JouleForce technology is an elegant and deceptively simple looking rethink of air cooling. It’s nice to see that basic innovation can still happen in a maturing industry.

Forced Physics might find another market for this cooler in cooling desktop video cards.

Cooling just one CPU is easy. Problems start when cooling two or more because the CPU lids are not consistently the same height and angulation. At least one of the processors will have bad thermal contact. Several other entrants to this space have discovered this issue and failed.