Sometimes a workload needs more memory, more compute, or more I/O than is available in the two-socket server that has been the standard pretty much since the dot-com boom two decades ago. While there are many frameworks that allow for horizontal scaling to boost throughput or performance (or both), sometimes the nature of the code and its sensitivity to latency mean that the only thing that will work is a big iron box that scales up, with a true single memory space, rather than out across multiple nodes.

This is why, even here in 2018, big iron NUMA machines are still a big and profitable business, even though the rising core counts of standard two-socket machines and the availability of four-socket machines have obviated the need for more scale-up capacity in a lot of cases. It is also why Big Blue still makes a lot of its hardware money from scale-up machines in its System z and Power Systems lines.

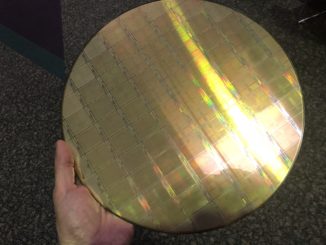

As we had been anticipating, IBM has just rolled out the final machines in its Power9 family of servers, in this case those that employ the “Cumulus” variant of the Power9 chip. Cumulus has 12 fat cores with up to eight threads of simultaneous multithreading per core, and it is distinct from the “Nimbus” Power9 chip, which has 24 cores with only four threads per core. (IBM calls these SMT-8 and SMT-4, respectively.) The Nimbus chips were limited to machines with one or two sockets of shared memory and did not use IBM’s “Centaur” memory buffer chips, which allow twice as many DIMMs to be hung off each memory controller and deliver twice the memory bandwidth per socket. The Cumulus chips do use the Centaur chips, which allows certain customers to preserve the massive investments in DDR4 main memory that they made with prior Power8-based NUMA machines. In many cases, the memory in a configured system costs more than the processing, and we are talking many millions of dollars, so this is not pocket change.
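A quick bit of arithmetic shows that the two Power9 variants expose the same number of hardware threads per chip; what differs is whether those threads are packed into 12 fat cores or spread across 24 thinner ones. The sketch below simply multiplies the core counts by the SMT modes given above; the dictionary and function names are our own, and the result says nothing about per-thread performance.

```python
"""Hardware threads per Power9 chip for the two variants described above."""

# Core counts and SMT modes as given in the text.
POWER9_VARIANTS = {
    "Cumulus": {"cores": 12, "threads_per_core": 8},  # SMT-8, scale-up machines
    "Nimbus": {"cores": 24, "threads_per_core": 4},   # SMT-4, one- and two-socket machines
}


def threads_per_chip(cores: int, threads_per_core: int) -> int:
    """Total hardware threads the chip presents to the operating system."""
    return cores * threads_per_core


if __name__ == "__main__":
    for name, spec in POWER9_VARIANTS.items():
        print(f"{name}: {spec['cores']} cores x SMT-{spec['threads_per_core']} "
              f"= {threads_per_chip(**spec)} threads")
```

Both lines of output come to 96 threads per chip.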

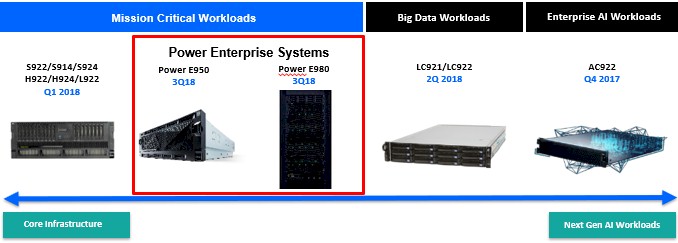

The latest announcements from Big Blue include the “Zeppelin” system, which will be sold as the Power E950 and scales up to four sockets, and the “Fleetwood” system, which will be sold as the Power E980 and scales up to sixteen sockets. These systems complement the “Newell” Power AC922 machine announced back in December 2017, which is the foundation of the hybrid CPU-GPU “Summit” supercomputer installed at Oak Ridge National Laboratory and its companion, the “Sierra” supercomputer at Lawrence Livermore National Laboratory; the “ZZ” systems for mainstream enterprise customers announced in February of this year; and the “Boston” systems for mainstream HPC and analytics that came out in May. That pretty much completes the Power9 server lineup for IBM, although Steve Sibley, vice president and offering manager for the Cognitive Systems division at the company, tells The Next Platform that IBM reserves the right to build customized, tweaked versions of Power9 machines, much as it did with a Power8+ revision that had updated NVLink interconnects as a stepping stone to Power9. If IBM does this, such a machine will no doubt be used as a prototyping system for the future CORAL-2 exascale systems that Big Blue has pitched to the US Department of Energy.

The Zeppelin Power E950 machine will be the core enterprise server for a bunch of AIX and Linux shops that run enterprise software workloads such as databases and transaction processing, where a two-socket server does not have enough compute, memory, or I/O capacity. This is not particularly interesting to us at The Next Platform, except inasmuch as it helps pay for Power9 chip and system development and except in those cases where some customers put fat memory nodes into their supercomputing or analytics clusters. But the Zeppelin machine is interesting in some other ways that could be important for hyperscaler, cloud builder, and supercomputing customers.

For one thing, the Power E950 has a new processor option with 11 cores per chip, which is weird in that it is an odd core count, unlike the options with 8, 10, or 12 cores. But IBM had a bunch of chips come back from GlobalFoundries, the fab that makes the Power9 chips using its 14 nanometer process, with 11 working cores, and there was no point in wasting them. The Cumulus chips used in the Power E950 have different base clock speeds depending on the core count, with the lower core count chips running faster, as you might expect given the thermals. The 8 core chip has a base frequency of 3.6 GHz, the 10 core chip runs at 3.4 GHz, the 11 core chip runs at 3.2 GHz, and the 12 core chip runs at 3.15 GHz. All of them can reach a maximum frequency of 3.8 GHz on their cores, but only when other elements of the chip and the system are not running hot.
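To see why the slower base clocks on the higher core count parts are a reasonable trade, the sketch below multiplies cores by base frequency for each Power E950 option. This "core-GHz" figure is our own crude proxy, used purely for illustration: it ignores SMT, caches, memory bandwidth, and turbo behavior, and it is not a benchmark.

```python
"""Crude per-chip comparison of the Power E950 processor options."""

# Base clocks in GHz for each core count, as listed above. All options can
# boost to 3.8 GHz when the chip and system have thermal headroom.
E950_BASE_GHZ = {8: 3.6, 10: 3.4, 11: 3.2, 12: 3.15}
SOCKETS = 4


def core_ghz(cores: int, base_ghz: float) -> float:
    """Cores times base clock: a rough throughput proxy, not a measured result."""
    return cores * base_ghz


if __name__ == "__main__":
    for cores, ghz in sorted(E950_BASE_GHZ.items()):
        print(f"{cores:>2} cores @ {ghz:.2f} GHz -> {core_ghz(cores, ghz):.1f} core-GHz "
              f"per chip, {cores * SOCKETS} cores in a four-socket box")
```

On this rough measure the figures run 28.8, 34.0, 35.2, and 37.8 core-GHz per chip, so the 12 core part still offers the most aggregate clock cycles per socket despite having the lowest base frequency.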

The Power E950 system is also neat in that it puts standard DDR4 memory sticks running at 1.6 GHz onto a memory riser card that has four of the Centaur buffer chips and sixteen memory slots. Up to eight of these memory risers can be put into the system, two per socket, for a total of 128 memory slots across the four sockets. That is four times the memory slots of the prior Power E850C system, but because the memory buffers add latency, the aggregate memory bandwidth of the Power E950 machine is only 20 percent higher, at 920 GB/sec. That is still pretty good, and it is 1.8X the memory bandwidth and 2.6X the memory capacity (at 16 TB) that Intel delivers with its top-end “Skylake” Xeon SP Platinum processors. Sibley says that IBM also reckons it can deliver 2X the performance per core and 2X the I/O bandwidth (630 GB/sec, thanks to the move to PCI-Express 4.0 controllers) of the Skylakes per socket, too. IBM is offering memory sticks that range from 8 GB to 128 GB each, which yields a capacity of between 1 TB and 16 TB for the system. You can dial up capacity and bandwidth by choosing how many slots to populate and with which stick capacities. (This is, of course, true of all servers, but the range is unusually large in the Power E950.)
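The memory figures above hang together arithmetically, and a short back-of-envelope script makes the relationships explicit: slot count from risers, the capacity range from the smallest and largest sticks, per-socket bandwidth from the aggregate, and the Power E850C bandwidth implied by "only 20 percent higher." The last value is derived from the ratio, not a figure IBM quoted, and we count a terabyte as 1,024 GB so the results line up with IBM's round numbers.

```python
"""Back-of-envelope memory arithmetic for a fully configured Power E950."""

SOCKETS = 4
RISERS = 8                # up to eight memory risers, two per socket
SLOTS_PER_RISER = 16      # four Centaur buffers and 16 DIMM slots per riser
DIMM_GB_RANGE = (8, 128)  # smallest and largest DDR4 sticks on offer
AGGREGATE_GBS = 920       # aggregate memory bandwidth quoted by IBM
E850C_UPLIFT = 1.20       # "only 20 percent higher" than the Power E850C


def memory_summary() -> dict:
    slots = RISERS * SLOTS_PER_RISER
    low_gb, high_gb = DIMM_GB_RANGE
    return {
        "slots": slots,
        # 1 TB counted as 1,024 GB here
        "min_capacity_tb": slots * low_gb / 1024,
        "max_capacity_tb": slots * high_gb / 1024,
        "bandwidth_per_socket_gbs": AGGREGATE_GBS / SOCKETS,
        # Implied by the 20 percent uplift, not a quoted figure
        "implied_e850c_gbs": AGGREGATE_GBS / E850C_UPLIFT,
    }


if __name__ == "__main__":
    for key, value in memory_summary().items():
        print(f"{key}: {value:,.1f}")
```

The per-socket result, 230 GB/sec, is the same per-socket bandwidth figure that comes up again with the Power E980.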

The NUMA interconnect on the Power E950 Zeppelin machine runs at 16 Gb/sec, up from 8 Gb/sec with the Power8 chips, and the X-Bus cross interconnect allows a single hop between any two sockets by using three ports per socket, up from two ports with the Power8-based Power E850C. Fewer hops and double the bandwidth should help performance for workloads whose jobs span the cores and memory of more than one socket, but Sibley says that IBM did not separate out the performance gains due to the extra oomph of the Power9 cores and caches from those due to the improved NUMA interconnect. In general, a machine with the same number of cores and memory capacity will deliver somewhere around 40 percent to 50 percent more performance as a Power E950 than as a Power E850C.
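Why three ports per socket means a single hop comes down to simple graph arithmetic: with four sockets, three ports are exactly enough to wire each socket directly to every other one. The sketch below compares that against two ports per socket, which we assume (the text does not say) were wired as a ring on the Power E850C, since that is how four sockets with two links each form one connected loop. It computes the worst-case hop count with a breadth-first search; the function names are hypothetical.

```python
"""Worst-case socket-to-socket hop counts for two four-socket wirings."""

from __future__ import annotations

from collections import deque
from itertools import combinations


def fully_connected(sockets: int) -> dict[int, set[int]]:
    """Every socket wired directly to every other (three ports each for four sockets)."""
    adj = {s: set() for s in range(sockets)}
    for a, b in combinations(range(sockets), 2):
        adj[a].add(b)
        adj[b].add(a)
    return adj


def ring(sockets: int) -> dict[int, set[int]]:
    """Each socket wired to its two neighbors (two ports each); assumed layout."""
    return {s: {(s - 1) % sockets, (s + 1) % sockets} for s in range(sockets)}


def max_hops(adj: dict[int, set[int]]) -> int:
    """Graph diameter: the largest shortest-path hop count between any two sockets."""
    worst = 0
    for start in adj:
        dist = {start: 0}
        queue = deque([start])
        while queue:  # breadth-first search outward from `start`
            node = queue.popleft()
            for nxt in adj[node]:
                if nxt not in dist:
                    dist[nxt] = dist[node] + 1
                    queue.append(nxt)
        worst = max(worst, max(dist.values()))
    return worst


if __name__ == "__main__":
    print("three ports per socket:", max_hops(fully_connected(4)), "hop(s)")
    print("two ports per socket:  ", max_hops(ring(4)), "hop(s)")
```

Under these assumptions the fully connected layout never needs more than one hop, while the ring needs two to reach the socket on the opposite side.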

The Power E950 server has ten PCI-Express 4.0 slots plus one PCI-Express 3.0 slot, and also has eight 2.5-inch drive bays using the SAS protocol and four U.2 NVM-Express flash bays; all are bootable. The whole shebang fits into a 4U form factor that can be rack mounted or tipped on its side to be a tower server. Each processor in the Power E950 has a single 25 Gb/sec port, and all of the eight PCI-Express x16 slots in the machine can support the Coherent Accelerator Processor Interconnect (CAPI) protocol that IBM initially created for the Power8 chips.

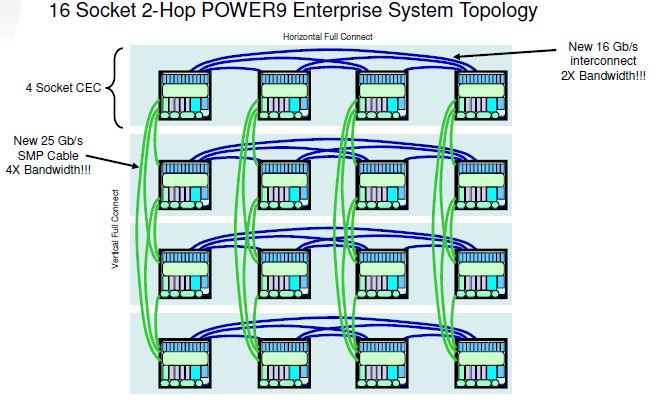

This four-socket Power E950 node is the base used to create the more capacious Fleetwood Power E980 NUMA system, which gangs up multiple four-socket nodes to scale up even further using the 25 Gb/sec “Bluelink” ports. As we explained back in October 2017, when we first caught wind of the Fleetwood systems and their “Mack” NUMA and system controller, IBM often says SMP when it means NUMA, and that can be confusing because they are different things. Anyway, in the older Power E870 and Power E880 machines (and their C-class follow-ons, which were not very different), the NUMA interconnects between nodes (not sockets) ran at 8 Gb/sec, like the NUMA interconnects between the sockets. But in the Power E980 (there is no Power E970), the hops across nodes now run atop the Bluelink ports and are therefore more than three times faster, at 25 Gb/sec. Importantly, with the extra local NUMA port coming out of each machine and the twelve NUMA interconnects between nodes, any socket can reach the memory local to any other socket in the system in no more than two hops. Like this:

We do not yet know the performance impact of this radically improved NUMA interconnect on the Power E980, but rest assured, we will be looking around and will find it. We will also be doing a deep dive into the Zeppelin and Fleetwood machines.
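The two-hop claim for the sixteen-socket Power E980 can be sanity-checked with a small graph model, though the text does not spell out the cabling, so the sketch below assumes one plausible arrangement: every socket in a node is linked to every other socket in that node, as in the Power E950, and each socket has one inter-node link to the socket in the same position in each of the other three nodes. Under that assumption each node has twelve inter-node links (four sockets times three other nodes), which lines up with one reading of the "twelve NUMA interconnects" mentioned above. IBM's actual wiring may differ, and all names here are hypothetical.

```python
"""Hop-count model of a hypothetical 16-socket, four-node Power E980 wiring."""

from __future__ import annotations

from collections import deque
from itertools import product

NODES = 4
SOCKETS_PER_NODE = 4


def build_topology() -> dict[tuple[int, int], set[tuple[int, int]]]:
    """Sockets are (node, position). Assumed links:
    - intra-node: every socket to every other socket in its node;
    - inter-node: each socket to the same-position socket in every other node.
    """
    sockets = list(product(range(NODES), range(SOCKETS_PER_NODE)))
    adj = {s: set() for s in sockets}
    for a, b in product(sockets, repeat=2):
        if a == b:
            continue
        same_node = a[0] == b[0]
        same_position = a[1] == b[1]
        if same_node or same_position:
            adj[a].add(b)
    return adj


def inter_node_ports_per_node(adj) -> int:
    """Links leaving node 0; every node has the same count by symmetry."""
    return sum(1 for s in adj if s[0] == 0 for t in adj[s] if t[0] != 0)


def max_hops(adj) -> int:
    """Largest shortest-path hop count between any two sockets (BFS from each)."""
    worst = 0
    for start in adj:
        dist = {start: 0}
        queue = deque([start])
        while queue:
            node = queue.popleft()
            for nxt in adj[node]:
                if nxt not in dist:
                    dist[nxt] = dist[node] + 1
                    queue.append(nxt)
        worst = max(worst, max(dist.values()))
    return worst


if __name__ == "__main__":
    topo = build_topology()
    print("sockets:", len(topo))
    print("inter-node links per node:", inter_node_ports_per_node(topo))
    print("worst-case hops:", max_hops(topo))
```

The worst case is two hops: first to the socket in the right position inside the local node, then across to the target node. Reaching a different position in a different node in one hop is impossible in this layout, which is why the bound is two rather than one.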

The Power E980 has slightly different processor options from the Power E950, and its nodes are 5U high instead of 4U high. The 8 core chip runs at 3.9 GHz, close to its 4 GHz peak; the 10 core chip starts at 3.7 GHz and can push up to 3.9 GHz; the 11 core chip starts at 3.58 GHz and can also rise to 3.9 GHz; and the 12 core chip starts at 3.55 GHz and likewise peaks at 3.9 GHz. With sixteen sockets, the machine scales from 128 cores to 192 cores, depending on the processor option selected. Memory can scale up to 64 TB using 512 GB CDIMMs. (The CDIMMs come in capacities from 32 GB through 512 GB, doubling with each step up from 32 GB.) The Power E980 has 32 memory slots per node, for a maximum of 128 memory slots, the same as the Power E950. But with four times the capacity on each memory stick, the Power E980 can scale four times as high on capacity while also maintaining that 230 GB/sec of memory bandwidth per socket. That works out to 3.6 TB/sec of aggregate memory bandwidth in a fully configured Power E980.
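The Power E980 scaling figures follow directly from the node, slot, CDIMM, and per-socket numbers given above, and the sketch below works them through. The structure and names are ours; the only inputs are the figures in the text, plus the 128 GB top stick size of the Power E950 for the density comparison.

```python
"""Scaling arithmetic for a fully configured, four-node Power E980."""

NODES = 4
SOCKETS_PER_NODE = 4
SLOTS_PER_NODE = 32
CORE_OPTIONS = (8, 10, 11, 12)                        # cores per chip
CDIMM_SIZES_GB = tuple(32 * 2**i for i in range(5))   # 32, 64, 128, 256, 512 GB
E950_MAX_STICK_GB = 128                               # largest Power E950 stick
BANDWIDTH_PER_SOCKET_GBS = 230


def e980_limits() -> dict:
    sockets = NODES * SOCKETS_PER_NODE
    slots = NODES * SLOTS_PER_NODE
    return {
        "sockets": sockets,
        "slots": slots,
        "cores_by_option": {c: c * sockets for c in CORE_OPTIONS},
        # 1 TB counted as 1,024 GB
        "max_memory_tb": slots * max(CDIMM_SIZES_GB) / 1024,
        "density_vs_e950": max(CDIMM_SIZES_GB) / E950_MAX_STICK_GB,
        "aggregate_bandwidth_gbs": sockets * BANDWIDTH_PER_SOCKET_GBS,
    }


if __name__ == "__main__":
    for key, value in e980_limits().items():
        print(f"{key}: {value}")
```

The aggregate bandwidth comes out to 3,680 GB/sec, which rounds to the 3.6 TB/sec figure when a terabyte is counted as 1,024 GB (3,680 / 1,024 is about 3.59).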

The Power E980 system has a base of 32 PCI-Express 4.0 slots and can fan out peripherals using up to sixteen PCI-Express expansion drawers (four per node). It has the same four U.2 NVM-Express bays per node as the Power E950.

The Power E950 will be available on August 17, and it will support IBM’s own AIX variant of Unix as well as Linux variants from Red Hat and SUSE Linux. The Power E980 machines with one or two nodes will be ready to roll on September 21, and IBM is taking a little more time to test and certify the versions with three or four nodes and won’t have them ready until November 16. That is still plenty of time to have a very good fourth quarter – one that IBM desperately needs. Upgrades from prior Power8 big iron to the Power E980 will also be available in November.
