The cost of servers keeps going up and up, thanks in large part to memory, flash, and GPU prices rising as too much demand chases too little supply, and in part to the rising cost of processors. So the server market is booming. And guess who is actually paying for it? Network vendors, who are being ground down like sacks of wheat in the massive granite grist mill of intense competition.

Demand for more network bandwidth is rising across all kinds of workloads: branch office and campus switches that still use a lot of 1 Gb/sec and 10 Gb/sec tech, HPC and AI clusters that demand 100 Gb/sec speeds, and the fabrics being built out at hyperscalers and cloud builders, which are also moving to 100 Gb/sec switching. That demand is making up for the fact that the cost per port is going down and down on all fronts. Thus, the market is growing. We just wonder who is profiting.

There are a few other things going on here, too, and we have to get a sense of how much these forces are changing the overall market for network devices, both bolstering the Ethernet switching racket and taking away from it, and, frankly, helping out the server market. As is always the case, there is a lot more churning going on than the relatively smooth revenue curves in any market reveal.

The first thing is that more and more Ethernet ASICs have router functions built into them, and in a lot of cases, companies are forgoing very expensive, usually modular, routers and moving these functions over to spine switches wherever possible. In other cases, companies are running virtual routing software in the network operating systems of the switches if these functions are not etched into the switch ASICs themselves. Either way, this saves them a bundle of dough. We think that this phenomenon is showing up in the trendlines of Ethernet switching and routing.

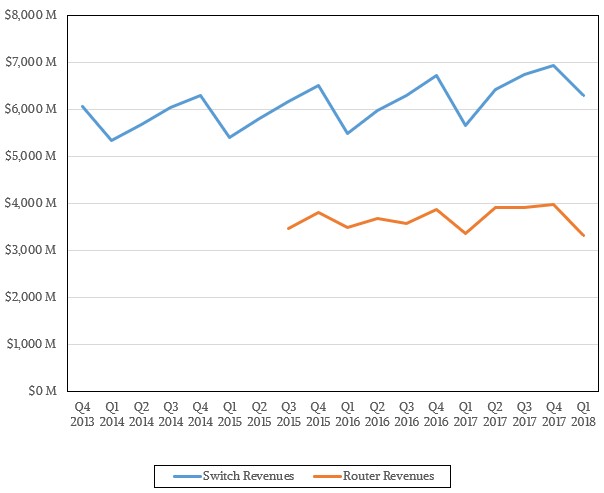

In the first quarter ended in March, Ethernet switching revenues across all products, speeds, and uses grew by 10.9 percent to $6.29 billion, according to IDC, while the router market, including enterprises and service providers of all kinds, shrank by 1.4 percent to $3.31 billion.
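
As a back-of-the-envelope check, the growth rates can be turned back into implied year-ago revenues. This is a sketch that uses only the current revenues and growth rates quoted above; the year-ago values are derived, not IDC's reported figures, and they will not match IDC's numbers exactly if IDC restated the prior quarter. The function name `prior_from_growth` is ours, for illustration.

```python
# Back out year-ago Q1 revenues from the IDC figures quoted above.
# Only the current revenues and growth rates come from the text; the
# year-ago values are derived here, not reported by IDC.

def prior_from_growth(current: float, growth_pct: float) -> float:
    """Prior-year value implied by a current value and its year-over-year growth."""
    return current / (1 + growth_pct / 100)

switching_now = 6.29  # $ billions, Ethernet switching, Q1
routing_now = 3.31    # $ billions, routers, Q1

switching_prev = prior_from_growth(switching_now, 10.9)  # grew 10.9 percent
routing_prev = prior_from_growth(routing_now, -1.4)      # shrank 1.4 percent

print(f"Switching: ${switching_prev:.2f}B -> ${switching_now:.2f}B "
      f"(+${switching_now - switching_prev:.2f}B)")
print(f"Routing:   ${routing_prev:.2f}B -> ${routing_now:.2f}B "
      f"(-${routing_prev - routing_now:.2f}B)")
```

On these numbers, switching added roughly $620 million year over year while routing gave up roughly $50 million.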

We do not have good data going back many years on the breakdown of switching and routing, since IDC only recently started giving out such details to the public, but you can see that over the past two and a half years, router sales have been basically flat while Ethernet switch sales have been sawtoothing their way up and to the right very gradually. Very gradually indeed: it has taken the market four years to add about $1 billion to quarterly sales. We think that this is being driven, at least in part, by the fact that certain network functions (firewalls, load balancers, routers, WAN accelerators, intrusion detection systems, session border controllers, and on and on) are being pulled onto the switches. The switch is, in essence, becoming the network.

Now here is the funny bit. At the very same time, the server, or more precisely the hypervisor and the network interface card, is becoming the switch. For those virtualized servers that require east-west traffic across virtual machines, which means most of them, the hypervisor is equipped with a virtual switch. Without virtualization, those servers would have been real and those switches would have been real, and everyone pushing tin would have made a lot more money. Virtualization has radically altered the datacenter landscape, obviously, and many infrastructure projects of the past decade could only get done (meaning, could only get paid for) because of the efficiencies that server and network virtualization brought to compute, which freed up money that could be plowed back into the virtualization software itself. We think the industry came out with a net improvement of probably around 2X to 3X in price/performance for combined switching and serving over a decade because of these factors alone. (But that is just a wild guess.) It is clearly how VMware and Red Hat and Microsoft got rich in datacenter infrastructure software sales, though. There is no denying that. Network interface cards, particularly at the hyperscalers and cloud builders, have switch functions in them, too, and this has also pulled physical switches out of the network, pushing down the switch box count even as larger clusters and fabrics push it back up. And, to finish off this monster thought: how many servers do you think are actually running virtualized network functions, and how much kit that would have been called networking have they yanked out of datacenters, only to have it lumped into servers? How much of the increase in server demand is actually what we would have called storage or switching? Where do we draw some of these lines?

The point is, switching is not simple. It is perhaps the most complex part of the datacenter. (We are of three minds about that, thinking that in their turn, compute and storage are more twisty and turny. It is hard to decide, so we just love all three as equally as we can.)

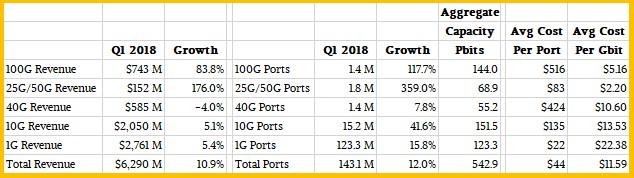

In terms of bandwidth and port count, here is what the breakdown looks like if you take all of the IDC data for Ethernet switching and play around with it a bit:

As you might expect, the markets for 25 Gb/sec and 50 Gb/sec switches are exploding now that the silicon is in the field, and the latest generations of 100 Gb/sec products are also affordable enough for companies to invest in a big way and get rid of their old 10 Gb/sec and 40 Gb/sec switches. But for every large enterprise, hyperscaler, and cloud builder that is pulling out its old 10 Gb/sec and 40 Gb/sec devices, there are orders of magnitude more companies running cruftier gear, for whom these 10 Gb/sec and 40 Gb/sec devices, which have come down radically in price over the past decade, are a big improvement over 1 Gb/sec. And oddly enough, 1 Gb/sec switching is still fine for a lot of applications, which is why it absolutely dominates the port count (86.1 percent), is still relevant in the aggregate bandwidth shipped (22.7 percent), and still accounts for a big chunk of overall Ethernet switching revenue (43.9 percent). There isn't really one Ethernet market so much as several different ones.
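
Those three shares can be combined to show how differently 1 Gb/sec gear is priced relative to the market as a whole. A minimal sketch, assuming the 43.9 percent figure is 1 Gb/sec's share of Ethernet switching revenue: if a segment takes R percent of revenue but P percent of ports, its average port costs R/P times the market-wide average port, and the same logic applies per unit of bandwidth.

```python
# Compare 1 Gb/sec switching's share of ports, bandwidth, and revenue,
# using the IDC-derived percentages quoted above.
# Assumption: the 43.9 percent figure is share of switching revenue.

shares_1g = {
    "ports": 86.1,      # percent of Ethernet switch ports shipped
    "bandwidth": 22.7,  # percent of aggregate bandwidth shipped
    "revenue": 43.9,    # percent of Ethernet switching revenue (assumed)
}

# Segment revenue / segment ports = (R/P) * (total revenue / total ports),
# so R/P is the segment's average port price relative to the market average.
price_per_port_ratio = shares_1g["revenue"] / shares_1g["ports"]

# Same reasoning per unit of bandwidth: R percent of revenue for B percent of bits.
price_per_bit_ratio = shares_1g["revenue"] / shares_1g["bandwidth"]

print(f"1 Gb/sec port vs. market-average port: {price_per_port_ratio:.2f}x")
print(f"1 Gb/sec bit vs. market-average bit:   {price_per_bit_ratio:.2f}x")
```

Under that assumption, a 1 Gb/sec port costs about half the average switch port, yet each bit of 1 Gb/sec bandwidth costs nearly twice the market average, which is one way of seeing why these behave like separate markets.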

The interesting thing for us is to look at the average cost per port and the average cost per bit of switches each quarter. A couple of years ago, 100 Gb/sec switching cost on the order of $3,000 per port; in the first quarter, the average was $516, down 13.2 percent from a year ago. That is about what a 40 Gb/sec port cost three years ago, and that port delivered only 40 percent of the bandwidth oomph. The market for 25 Gb/sec and 50 Gb/sec switches is obviously exploding, but an average port there is costing a mere $83, down 40 percent from a year ago and falling fast.
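
Turning those per-port averages into cost per Gb/sec makes the comparison across speeds more direct. This is a rough sketch: it assumes every port runs at its nominal speed, ignores optics and cabling, and treats the 25 Gb/sec and 50 Gb/sec figure as one blended bucket, so its per-Gb/sec cost is given as a range. The helper `prior_from_decline` is a hypothetical name of ours.

```python
# Convert the average per-port prices quoted above into cost per Gb/sec and
# back out the implied year-ago port prices from the stated declines.

def prior_from_decline(current: float, decline_pct: float) -> float:
    """Prior-year value implied by a current value that fell decline_pct percent."""
    return current / (1 - decline_pct / 100)

# 100 Gb/sec: $516 per port in Q1, down 13.2 percent year over year.
price_100g = 516.0
per_gb_100g = price_100g / 100
prev_100g = prior_from_decline(price_100g, 13.2)

# 25 and 50 Gb/sec: $83 per port, down 40 percent. Treated as one blended
# bucket, so per-Gb/sec cost is bounded by an all-50G and an all-25G mix.
price_25_50g = 83.0
per_gb_low, per_gb_high = price_25_50g / 50, price_25_50g / 25
prev_25_50g = prior_from_decline(price_25_50g, 40)

# Roughly $3,000 per 100 Gb/sec port a couple of years earlier.
per_gb_100g_then = 3000.0 / 100

print(f"100G now:  ${per_gb_100g:.2f}/Gb/sec (year-ago port ~${prev_100g:.0f})")
print(f"100G then: ${per_gb_100g_then:.2f}/Gb/sec")
print(f"25G/50G:   ${per_gb_low:.2f} to ${per_gb_high:.2f}/Gb/sec "
      f"(year-ago port ~${prev_25_50g:.0f})")
```

On these assumptions, 100 Gb/sec bandwidth has dropped from about $30 to about $5.16 per Gb/sec, and 25 Gb/sec and 50 Gb/sec ports deliver bandwidth at roughly a third to two-thirds of even that price.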

You begin to see now why the hyperscalers and cloud builders pushed their own 25G standard and then used the goodies developed for 25G to drive down the cost of 100 Gb/sec switching. They were right, and the industry, as represented by the key players in the IEEE, was wrong.

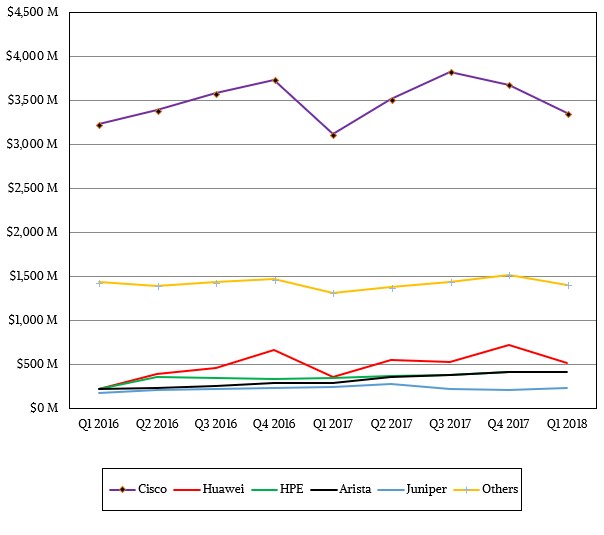

There is a whole new breed of switch ASIC vendors that are changing this market, but the key switch makers still tend to run the show even if they are under pressure from upstarts and whitebox switch makers. Take a look:

As you can see, from the vendor perspective, change comes at a tectonic pace in switching. But that doesn't mean the forces acting on these players are not great. They are practically volcanic, in fact, and just enough to keep things churning and burning, which is good for progress.
