A team at Intel, in collaboration with QuTech in the Netherlands, is researching the possibilities of quantum computing to better understand how practical quantum computers can be programmed to impact our lives. Given the research nature and current limitations of quantum computers, particularly in terms of I/O, researchers are focusing on specific types of algorithms.

As you might expect, Intel Labs is focused on applications such as material science and quantum chemistry. Other possible algorithms include parameterized simulations and various combinatorial optimization problems that have a global optimum. It is also worth noting that this research may never come to commercial fruition.

Richard Feynman’s 1982 conjecture that quantum computers can be programmed to simulate any local quantum system has been shown to be correct. This is why we hear about success stories in simulating atoms and molecules at a quantum level and the strong interest in using quantum computers for materials design. One example is the success in simulating the energy of simple H2 molecules.

“Quantum computers can compute problems we cannot do today. However, they will augment rather than replace existing computers” says Jim Held, Intel Fellow and director of emerging technologies research at Intel Labs. As to practical impact, Held notes that “quantum computing is a research area that is a decade away from running commercial applications.” He also downplays the press quantum computers have gotten about the expectation that they can be used to break existing cryptographic methods. Specifically Held points out that “cryptography requires large quantum systems that have error correction” and expects that, “post quantum cryptography will be in place before quantum systems scale to the required size, which means that there will not be as huge an impact as one might expect.”

Quantum Computer Programming 101, Or Rather, 1 And 0

It is possible to view programming a quantum computer at a very high level using the conventional compile and run methodology that is familiar to most programmers. However, there are some significant differences.

Programmers may write programs for quantum computers using quantum instructions embedded in one of a variety of high level languages. These compile down to instructions for a classical computer controlling a quantum co-processor execution of an algorithm implemented as operations on quantum bits (qubits).

The power of the quantum computing comes through the two key properties of qubits (quantum bits) that are unique to quantum computers:

- A qubit can be in superposition of 0 and 1, which if measured will be, with some probability (not necessarily equal) be one or the other.

- Two or more qubits can be entangled, meaning their states are correlated so that N qubits can simultaneously represent up to 2N

Thus entangling qubits A and B means they can represent four possible states at the same time. Similarly, each additional qubit entangled represents an exponential doubling in the number of superposed states that may be represented at the same time. A quantum algorithm operating on a set of entangled qubits transforms all of the represented states.

This ability to operate on all the states in an exponentially large state space is fundamental to how quantum computer programs solve problems that are intractable on conventional computers. Succinctly, a conventional computer must evaluate each state in a given state space – one state at a time. By definition, this means that a conventional computer will exhibit an exponential runtime growth on problems that have exponentially large state spaces.

Cryptography is one example because it uses this exponential explosion to protect data. The intractable nature of these problems remain true even when the computational problem maps perfectly to massively parallel hardware because it is expensive to exponentially scale up hardware, the granularity of a decryption algorithm, or the required compute energy. The exponential growth in number of states (and hence runtime) is guaranteed to outrun the processing ability of any non-quantum computer regardless of how big, fast, or parallel. This is why quantum computing can potentially augment CPUs to add the ability to solve exponential scaling problems – a very exciting possibility that is currently not possible with any CPU.

In a very real sense – and without going into the math – the programmer’s quantum instructions define how these entangled qubits can be transformed during runtime in an exponentially large state space without requiring exponentially large numbers of operations or exponentially long runtimes. It is then up to the programmer to implement an algorithm that will produce a solution, hopefully unique, from this exponentially large state space.

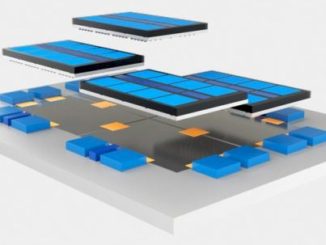

A more detailed yet still high-level description (with math) of how such quantum based computations can be performed can be found in Seth Lloyd’s seminal 1993 paper, A Potentially Realizable Quantum Computer. In particular, Lloyd describes how arrays are used to process information and how update rules in the quantum computer are able to act much like rules in a cellular automata to modify the state of many qubits based on individual state and the state of both local and distant neighbors. Thus, readers can get a more detailed view of how a one form of quantum computing works. Just keep in mind that how the quantum system works will differ according to the actual implementation of the quantum hardware. Lloyd described a polymer-based quantum computer (essentially the stuff used to make rugs). Intel Labs, in contrast, is investing in both superconducting qubits while also researching silicon-based spin qubits.

In addition to defining algorithms that transform qubits, the programmer can initialize the state of a qubit (for example, zero or one) to define input data. The quantum system also allows the specification of conventional logic operations like AND, OR, NOT, and FANOUT as well as how qubits are “wired” together. (See Lloyd for one possible way this wiring can occur.)

So from a software point of view, a compiler takes a program and turns it into the appropriate host computer logic and quantum coprocessor qubit operations to initialize, entangle, and transform the state into a desired result. Mapping the algorithm into an array of qubits and sequencing and parallelizing the application of large numbers of these single and multiple qubit gates to move and transform the qubits into the desired state is an important research topic, and a problem that needs to be addressed as we move to increasingly large numbers of qubits.

Regardless of the technology, it is important to note that I/O is a big problem for quantum computers and this affects the types of algorithms they can run. Held emphasizes this point by stating, “Quantum computing is not big data friendly.” Though a set of qubits can encode an exponentially large state space, the operations necessary to put them in a state corresponding to that much input data erode the complexity advantage. There is a similar limitation on output. Since qubits are quantum devices, measuring the state of a qubit also causes it to collapse probabilistically from its superposition of 0 and 1s. Getting all the answers represented in a set of entangled qubits requires running the algorithm repeatedly, measuring the value each time, then evaluating their distribution to determine the result.

While a quantum computer can process problems that reside in an exponentially large state space in non-exponential time, it can – and will – take exponential time to read out an exponential number of solutions.

Thus, when thinking about using a quantum computer, it is important to understand that they can only be realistically utilized to solve problems with algorithms that produce one (e.g. a unique) solution, or at least a limited number of valid solutions.

The Achilles Heel: Noise And Error Correction

The big problem with quantum systems is that they are easily perturbed because qubits are tremendously fragile.

The two qubit technologies Intel is exploring with QuTech are cooled to around 20 millikelvin (20 thousandths of a degree above absolute zero) to eliminate as much thermal noise as is practical. This is roughly 250 times colder than deep space. Otherwise, thermal noise will affect the computation of a solution. Held notes that the cooling technology is commercially available and therefore not a barrier to deployment.

Many open research topics exist, including how to manipulate the quantum computer without adding heat or other forms of noise, and connect to the qubits without introducing noise from the outside environment. Overall Seth Lloyd observed that, “The number of steps over which coherent superpositions of computational states can be maintained is limited by interactions with the environment.” For these reasons, people are actively trying to eliminate noise and develop error correction schemes to solve the challenges of reliably working at the quantum level.

Amazingly, quantum computing is becoming a practical reality. Even so, most researchers will have to content themselves for the time being with programming on various quantum simulators. Intel Labs has made available in open source the high-performance quantum simulator it uses on 01.org. There are a variety of other compilers and simulation environments from industry and academia, such as ProjectQ from ETH Zurich, an open source software framework that uses Python to implement quantum programs.

For more information on Intel’s efforts, Held’s plenary session at the 2017 Intel HPC Developer Conference, “Leading the Evolution of Compute: Neuromorphic and Quantum Computing,” is available online.

Rob Farber is a global technology consultant and author with an extensive background in HPC and advanced computational technology that he applies at national labs and commercial organizations. Farber can be reached at info@techenablement.com

Hey, what do you think about Maxthmatics?