When looking for all-flash storage arrays, there is no lack of options. Small businesses and hyperscalers alike helped fuel the initial uptake of flash storage several years ago, and since then larger businesses have taken the plunge to help drive savings in such areas as power and cooling costs, floor and rack space, and software licensing.

The increasing demand for the technology – see the rapid growth over the past nine years of Pure Storage, the original flash array upstart – has fueled not only the rise of smaller vendors but also the portfolio expansion of established vendors such as Dell EMC, NetApp, Hewlett Packard Enterprise, Hitachi, and IBM. About the only thing that might be holding flash back is the price spike for both flash and DRAM memory that began in late 2016. These higher prices might be breathing some new life into disk drives, but everyone is still buying all the flash they can afford.

The co-founders of Vexata are well aware that non-volatile storage is going to be part of the storage hierarchy from here on out. Co-founder and chief executive officer Zahid Hussain was at one point senior vice president and general manager of EMC’s Flash Products Division, which included the XtremIO all-flash array, while Surya Varanasi, co-founder and CTO, was vice president of R&D at EMC, in charge of a range of the company’s product lines, including the ViPR data platform, SRM (storage resource manager) and AppSync for snapshot management. Other executives have experience at such vendors as Hitachi Data Systems, Brocade, Toshiba and IBM.

Five years ago, Hussain and Varanasi began designing a new storage architecture that, they say, takes fuller advantage of the capabilities of flash, can scale with the demands of modern workloads such as data analytics, large simulations, predictive analytics, and artificial intelligence (AI) and machine learning, and can fit seamlessly into a customer’s existing datacenter ecosystem without changes to the operating system or application stack, which makes it easier for businesses to adopt.

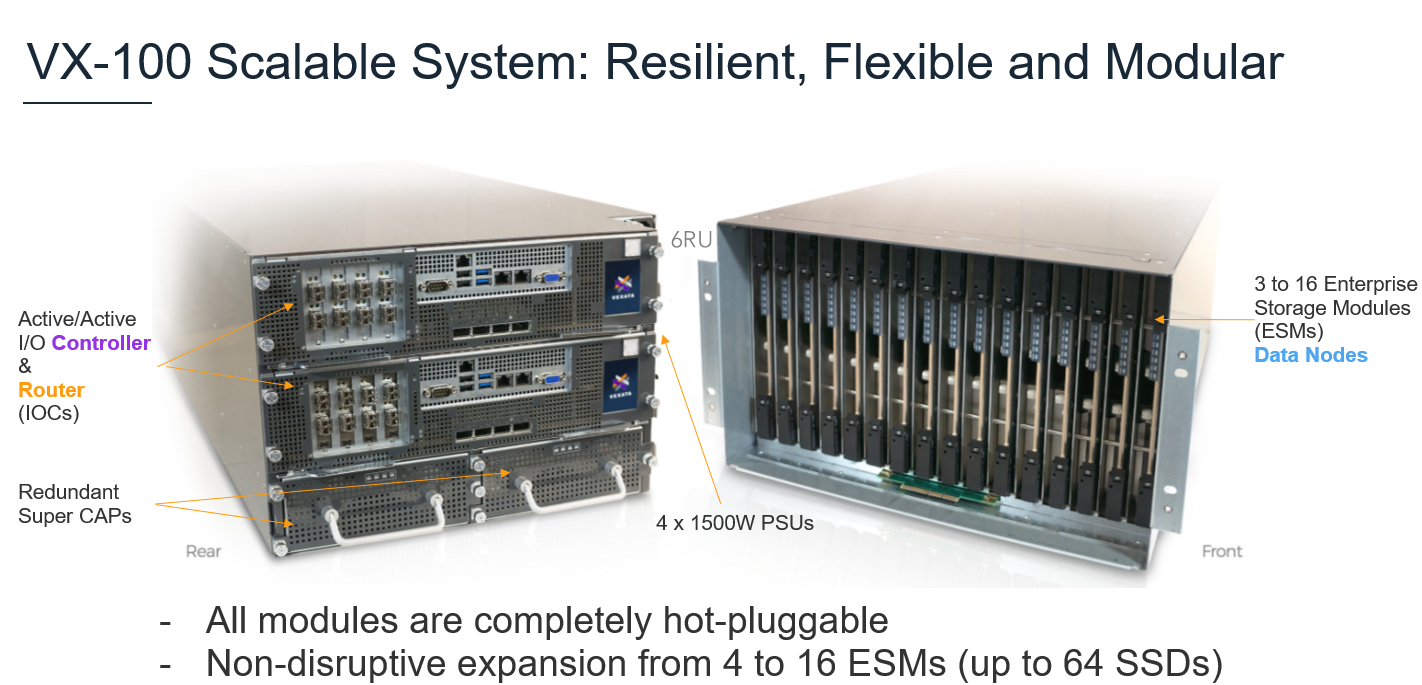

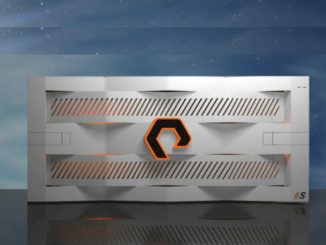

Vexata, backed by $54 million in funding from such investors as Intel Capital, Lightspeed Venture Partners, Redline Capital Management and Mayfield, came out of stealth mode last year and now offers its VX-100 Scalable Storage systems (see below) that house the company’s VX-OS embedded operating system and VX-Manager management and analytics software. The current arrays leverage Intel “Broadwell” Xeon E5 processors and FPGAs from Xilinx.

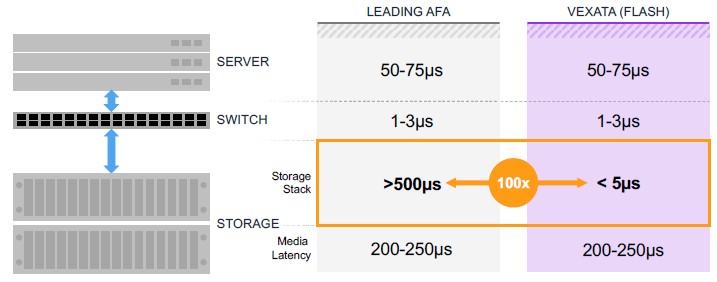

“We’re really going after primary database and analytics applications,” Rick Walsworth, vice president of product and solution marketing, tells The Next Platform. “What we’re finding in these [current storage] environments – especially now as datacenters are moving toward next-gen platforms with Intel pushing out these Xeon SPs, with GPUs becoming much more prevalent, especially in use cases around AI and machine learning – it’s exposing the bottleneck in the underlying storage architectures. Even with the first generation of all-flash arrays, if you deconstruct those arrays, effectively those arrays still use storage controllers meant for mechanical spinning media. What they’re trying to do to overcome that is just putting more expensive [and] more powerful processors in there. But at the end of the day, all the IO has to run up and down that CPU in order to deliver meaningful performance, and that CPU in that storage controller ends up being the bottleneck. We’ve essentially taken and distributed that architecture, completely separated the control path from the data path, providing the ability to really take full utilization of that underlying media. This has allowed us to use standard, off-the-shelf SSDs from a variety of vendors.”

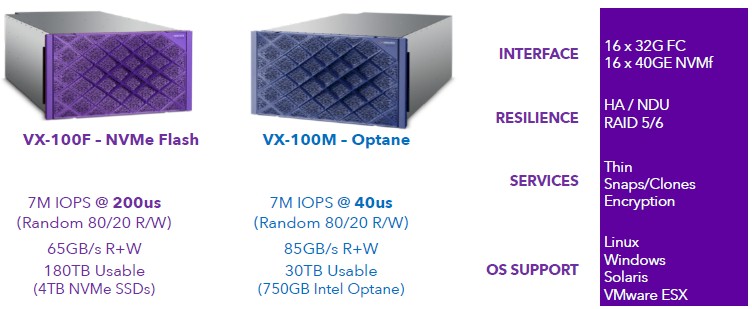

According to Walsworth, the company has qualified SSDs from Micron Technology, Toshiba and Intel, and now offers 3D XPoint Optane SSDs in its array.

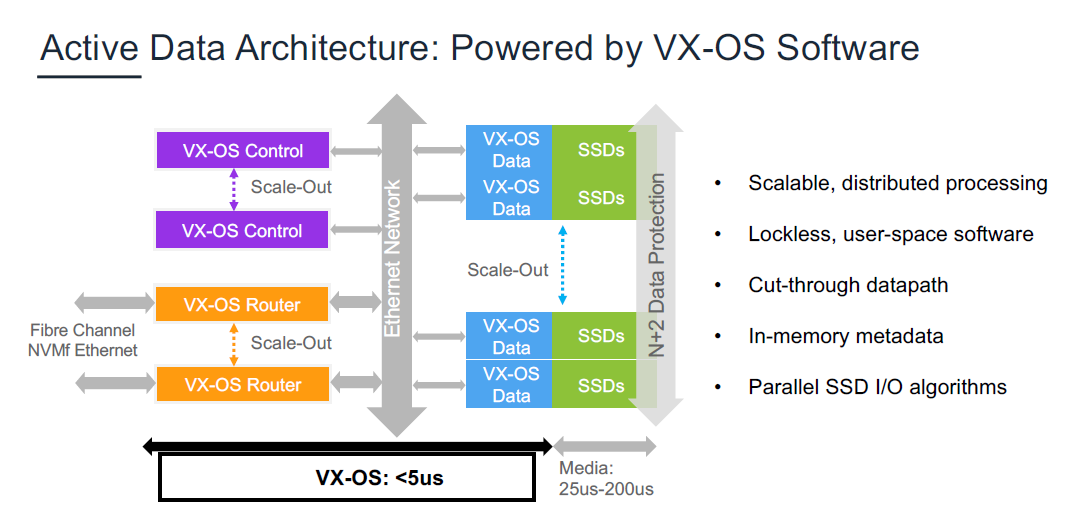

While other storage arrays also separate the data and control paths, Varanasi says that Vexata has done it in a unique way. “We rewrote the entire target storage stack for block devices to focus on ultra-low latency for the control path. We have written everything using Intel’s storage version of the SPDK and the DPDK libraries.” The goal has been to optimize the amount of time it takes to process an I/O request and reduce latency, and that is done by minimizing the data path.

“The easiest way to think about it is to compare it to the GPU,” Varanasi explains. “A GPU does all the complex calculations for rendering that the processor could do but doesn’t. What we do in the FPGA complex – we call it the VX Router complex – is handle all those really complicated calculations that make storage very slow, so you are not bottlenecked by the CPU or RAID calculations that really cripple the compute cycles on the CPU. The complex calculations like RAID, like encryption, like the compression – these are very CPU-intensive calculations, and we have offloaded them to the FPGAs. Because of our unique separation, whenever we find there is some calculation that can be offloaded from the CPU, we do it. So the CPU is really responsible for the control path – just the handling of the I/O from the external world and managing the metadata – and the FPGA complex, our router, is really responsible for data movement and the calculations for data protection.”
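To make the RAID workload Varanasi mentions a little more concrete, here is a minimal sketch of single-parity (RAID 5 style) XOR protection. The article does not say which RAID scheme Vexata uses or how its FPGAs implement it; this is a generic illustration, run in Python on a CPU, of the per-byte arithmetic that must be done on every write and every rebuild. The function names are hypothetical.

```python
from functools import reduce


def xor_blocks(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length blocks."""
    if len(a) != len(b):
        raise ValueError("blocks in a stripe must be the same length")
    return bytes(x ^ y for x, y in zip(a, b))


def compute_parity(stripe: list[bytes]) -> bytes:
    """Single parity: XOR of every data block in the stripe."""
    return reduce(xor_blocks, stripe)


def rebuild_block(surviving: list[bytes], parity: bytes) -> bytes:
    """Recover one lost data block by XOR-ing the parity with all surviving blocks."""
    return reduce(xor_blocks, surviving, parity)


stripe = [b"AAAA", b"BBBB", b"CCCC"]
parity = compute_parity(stripe)

# Pretend the drive holding stripe[1] failed, then rebuild it from the rest.
recovered = rebuild_block([stripe[0], stripe[2]], parity)
print(recovered)  # b'BBBB'
```

Every byte written touches this loop, which is why moving it off the host CPU and into dedicated hardware frees cycles for the control path.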

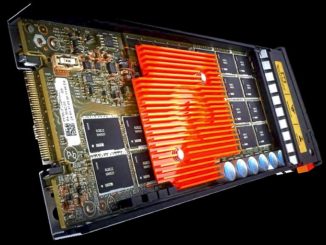

The VX-100 controllers include a single 18-core Xeon processor and an FPGA complex that features three Xilinx FPGAs, Varanasi says.

“The router complex interfaces to the outer world into this sort of SAN externally and it moves data into the midplane of our system, and the midplane is all 10 Gb/sec Ethernet in the back, and that is something that is unique,” says Varanasi. “Most storage architectures are tied to a specific protocol. Most storage architectures are either based on the SAS or NVM-Express protocols over PCI-Express. What happens to them is, once you are on SAS, switching to NVM-Express is not easy. It’s a lot of work to do protocol changes. If you are using NVM-Express and you design to that, if the storage protocols change and tomorrow some other media comes along, again it is very painful to change, so we decided early on to go with Ethernet, because not only does Ethernet speed continually increase massively – today we run at 10 Gb/sec in the midplane and it can go to 25 Gb/sec or 50 Gb/sec already, and we are also independent of the protocol. Inside we run lossless Ethernet and we go to our back end, which is what we call our enterprise storage modules, and these have embedded processors that have four SSDs on them.”

The bladed system is not dependent on a storage protocol but instead relies on Ethernet, which makes it adaptable. In the midplane, there are 128 ports of 10 Gb/sec Ethernet, giving 256 GB/sec of bi-directional throughput. There’s no switch; instead, the FPGA complex does that work, Varanasi says.
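The protocol independence described here is, at heart, a matter of the front end talking to back-end modules through a protocol-neutral interface. The sketch below illustrates that general design pattern only: the class names are hypothetical, each module's SSDs are stood in for by a dictionary, and the lossless Ethernet transport is not modeled. It is not Vexata's code.

```python
from abc import ABC, abstractmethod


class MediaBackend(ABC):
    """Hypothetical block interface each back-end module exposes to the front end."""

    protocol = "unspecified"

    @abstractmethod
    def read_block(self, lba: int) -> bytes: ...

    @abstractmethod
    def write_block(self, lba: int, data: bytes) -> None: ...


class InMemoryModule(MediaBackend):
    """Stands in for a module's SSDs with a dict; subclasses only change the label."""

    def __init__(self) -> None:
        self._blocks: dict[int, bytes] = {}

    def read_block(self, lba: int) -> bytes:
        return self._blocks[lba]

    def write_block(self, lba: int, data: bytes) -> None:
        self._blocks[lba] = data


class NvmeModule(InMemoryModule):
    protocol = "NVMe"


class SataModule(InMemoryModule):
    protocol = "SATA"


class FrontEnd:
    """Host-facing side: reaches modules only through MediaBackend."""

    def __init__(self, modules: list[MediaBackend]) -> None:
        self.modules = modules

    def _module_for(self, lba: int) -> MediaBackend:
        # Simple striping of logical blocks across modules.
        return self.modules[lba % len(self.modules)]

    def write(self, lba: int, data: bytes) -> None:
        self._module_for(lba).write_block(lba, data)

    def read(self, lba: int) -> bytes:
        return self._module_for(lba).read_block(lba)


# The same FrontEnd works unchanged whether modules speak NVMe, SATA, or a mix.
array = FrontEnd([NvmeModule(), SataModule()])
array.write(0, b"hello")
array.write(1, b"world")
print(array.read(0), array.read(1))
```

Swapping the media type means adding another `MediaBackend` subclass; nothing in `FrontEnd` has to change.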

The back end uses Cavium’s MIPS-based Octeon multicore processor. Regarding memory, “one of the things that limits everybody is if I use a dual-socket processor, I only get so many DIMM slots, so I know how much memory I’m limited with,” Varanasi says. “In our case, the memory is distributed, so the metadata for the SSDs is carried on the DRAM that is associated with the embedded processor, so we scale along with the SSDs. For us, a single controller is just fine because our memory grows along with the SSDs.”
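A back-of-the-envelope sketch of why distributing metadata helps, under loudly stated assumptions: the 4 KiB mapping granularity, the 8 bytes of metadata per mapped block and the 256 GB controller DRAM budget are all hypothetical numbers chosen for illustration, not Vexata figures. Only the four SSDs per module and the 3.84 TB drive size come from the article.

```python
# Assumed values for illustration only; not Vexata's actual metadata design.
BLOCK_SIZE = 4096              # assumed mapping granularity, bytes
ENTRY_SIZE = 8                 # assumed metadata bytes per mapped block
SSDS_PER_MODULE = 4            # from the article

SSD = 3_840_000_000_000        # 3.84 TB drive, decimal bytes (from the article)
CONTROLLER_DRAM = 256 * 10**9  # hypothetical fixed DIMM budget of one controller


def metadata_bytes(capacity_bytes: int) -> int:
    """Metadata needed to map every block of the given capacity."""
    return capacity_bytes // BLOCK_SIZE * ENTRY_SIZE


def per_module_metadata(ssd_bytes: int) -> int:
    """Distributed design: each module holds metadata only for its own SSDs,
    so this requirement stays constant however many modules are installed."""
    return metadata_bytes(SSDS_PER_MODULE * ssd_bytes)


def fits_in_controller(modules: int, ssd_bytes: int, controller_dram: int) -> bool:
    """Centralized design: all modules' metadata must fit in one controller's DRAM."""
    return modules * per_module_metadata(ssd_bytes) <= controller_dram


for modules in (4, 8, 16):
    verdict = "fits" if fits_in_controller(modules, SSD, CONTROLLER_DRAM) else "exceeds DRAM"
    print(f"{modules} modules: centralized {verdict}; "
          f"per-module need {per_module_metadata(SSD) / 10**9:.0f} GB")
```

Under these assumptions each module needs about 30 GB of its own DRAM, while a single controller holding everything would need 480 GB at sixteen modules, which is the ceiling Varanasi says a fixed set of DIMM slots imposes.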

Though the SSDs in the back end use the NVM-Express protocol, a customer who wants something else – SATA for lower cost, for example – could make the change without any tweaks to the front end or to the rest of the architecture. At the moment, each enterprise storage module has a Cavium processor with four SSDs attached, a chassis holds up to sixteen of those modules, and with drives qualified at up to 3.84 TB, that is the current scalability limit. “But you know that 8 TB and 15 TB are possible, so the system is architected for more than a petabyte of usable storage,” says Varanasi. As capacity needs grow, the system can be upgraded with larger sets of drives, too, not just fatter drives. Scalability can happen within the chassis, but real scalability comes from adding another box and interfacing both with the server, he adds. Vexata currently supports OpenStack through a Cinder driver, and in July it will add multi-chassis support so that users can manage multiple boxes together.
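The chassis arithmetic implied above can be checked quickly. This sketch uses only the article's figures (sixteen modules, four SSDs each) and computes raw capacity before RAID overhead or any data reduction; the article does not say how the petabyte-of-usable-storage figure is derived, so this is not an attempt to reproduce it.

```python
MODULES_PER_CHASSIS = 16  # from the article
SSDS_PER_MODULE = 4       # from the article


def raw_capacity_tb(drive_tb: float) -> float:
    """Raw chassis capacity in TB, before RAID overhead or data reduction."""
    return MODULES_PER_CHASSIS * SSDS_PER_MODULE * drive_tb


for drive in (3.84, 8, 15):
    print(f"{drive} TB drives -> {raw_capacity_tb(drive):.2f} TB raw")
```

With today's 3.84 TB drives, the 64 SSDs in a full chassis give 245.76 TB raw.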

Scalability is important, particularly as the use of emerging workloads in the datacenter grows. According to Vexata, its architecture offers an 11X improvement over current all-flash arrays when running SAS analytics, 30X the performance when running Oracle-based OLTP workloads, and 10X the performance when running Microsoft SQL Server data warehousing tasks.

Vexata is getting some traction: the company has moved from customer trials in 2016 to first customer shipments last year, and it is on the third major release of the VX-100 stack. There are dozens of customers in production, with another 10 to 12 proof-of-concepts underway. Customers in production include Oath, which was created from AOL and Yahoo, and Verizon, which has three systems deployed.

“What the customer sees when they deploy this technology is they get much better utilization of their compute infrastructure, and at the end of the day, that’s what ends up providing a very strong TCO for the solution because I can take my existing investments in my compute infrastructure, my database licenses, my analytics licenses, and in some cases they can [get] twice the performance only by changing that underlying storage infrastructure,” Walsworth explains. “We have seen instances where essentially they were so I/O bound and the queuing of the I/O wait times essentially started to stack up that latency, it becomes almost unusable in some of those environments. By taking away those bottlenecks, we are able to help customers get much better utilization of the existing infrastructure that they’ve made and get them more headroom to be able to scale out these solutions.”
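Walsworth's point about I/O wait times stacking up can be illustrated with the textbook M/M/1 queue, where mean time in the system is W = S / (1 - ρ) for service time S and utilization ρ. This is a simplified model that assumes Poisson arrivals and exponentially distributed service times, and the 100 microsecond service time is a hypothetical value; the numbers are not measurements from Vexata or its customers. The sketch only shows the shape of the curve: latency climbs slowly at moderate load and then explodes as the storage approaches saturation.

```python
def mm1_response_time(service_time_us: float, utilization: float) -> float:
    """Mean time in system (queueing plus service) for an M/M/1 queue."""
    if not 0 <= utilization < 1:
        raise ValueError("utilization must be in [0, 1)")
    return service_time_us / (1 - utilization)


SERVICE_TIME_US = 100  # hypothetical per-I/O service time

for rho in (0.5, 0.8, 0.9, 0.95, 0.99):
    print(f"utilization {rho:.0%}: {mm1_response_time(SERVICE_TIME_US, rho):.0f} us")
```

In this model, going from 90 percent to 99 percent busy multiplies mean latency tenfold, which is why removing a saturated component, rather than tuning around it, can free so much headroom.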
