Supercomputer makers have been on their exascale marks, and they have been getting ready, and now the US Department of Energy has just said “Go!”

The requests for proposal have been opened up for two more exascale systems, with a budget ranging from $800 million to $1.2 billion for a pair of machines to be installed at Oak Ridge National Laboratory and Lawrence Livermore National Laboratory and a possible sweetener of anywhere from $400 million to $600 million that, provided funding can be found, allows Argonne National Laboratory to also buy yet another exascale machine.

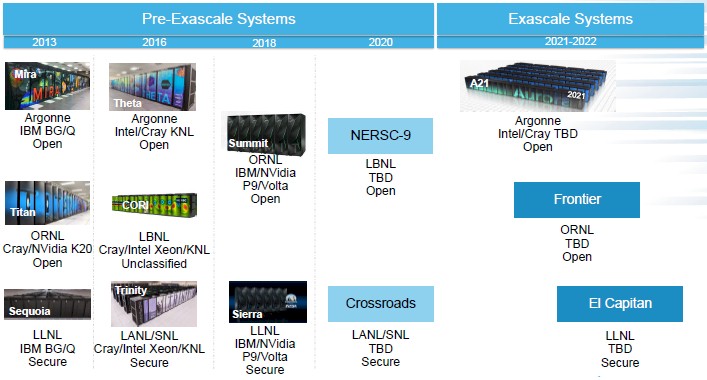

Oak Ridge, Argonne, and Livermore are, of course, the three Department of Energy HPC labs that collaborated back in 2012 with the original CORAL procurement, which requisitioned the 207 petaflops “Summit” hybrid CPU-GPU machine at Oak Ridge and the similar (but not identical) 125 petaflops “Sierra” system at Livermore, built by the OpenPower collective of IBM, Nvidia, and Mellanox Technologies, with IBM as the prime contractor. The “Aurora” machine at Argonne had Intel as its prime contractor and the “Knights Hill” many-core processor as its compute engine, with 200 Gb/sec Omni-Path interconnects lashing nodes together, but last year this contract was altered and now there is an Aurora 2021 machine that Intel and Cray are designing that will be the first exascale system deployed in the United States, sometime in 2021.

The follow-on machines that are part of this CORAL-2 procurement, as we have already reported, are called “Frontier” at Oak Ridge and “El Capitan” at Livermore; we do not know what the second exascale machine, based either on the architecture used in Frontier and El Capitan or possibly a different architecture, that will possibly be acquired by Argonne will be called. All three machines are covered by the same RFP, which simplifies things for the Department of Energy and the bidders on the CORAL-2 contract.

The US government likes to have two major supercomputer architectures from two prominent prime contractors who have deep pockets – and back in the day, it liked to have three. But with the rising prices and capital outlay by the bidders, even getting two prime contractors is a challenge. This may start to change during the CORAL-2 procurement, and we will find out when the winning bids are announced in later this year. (The original plan was to have the RFP out in February with selections made in May, but the DOE is running a bit behind.)

The Frontier system is expected to be installed in late 2021 and accepted in 2022, and it has to have a different architecture from Aurora 2021. El Capitan will be installed in 2022 and accepted in 2023, and it does not have to be different from Aurora 2021. While not covered under the CORAL procurement, the NERSC-10 machine at Lawrence Berkeley National Laboratories is yet another exascale system that will be installed in the United States, possibly with the same architecture used by Oak Ridge and Livermore, possibly with a follow-on to the Aurora A21 architecture, slated currently for 2024.

We shall see. A lot is predicated on the US Congress coming up with as much as $1.8 billion in funding for the DOE to proceed. Supercomputing is a nationalistic endeavor, a kind of practical sport where real things get done, and the competition is high across the United States, Europe, Japan, and China for the biggest, baddest systems.

The CORAL-2 Feeds And Speeds

The CORAL-2 RFP, which you can see here, provides quite a bit of insight into what the DOE is thinking as it pushes the performance envelope of its supercomputers into the exascale range above and beyond that it is willing to pay to do so. These machines are going to be 2X to 3X more expensive than the $205 million it cost to build Summit, which itself cost more than 2X the $99 million price tag of the 27 petaflops “Titan” predecessor to Summit. Machines are getting more powerful, but the price tag is rising fast, too.

Just like Summit and Sierra at Oak Ridge and Livermore were supposed to be 50X faster on real science applications running on their Titan and Sequoia predecessors, the Frontier and El Capitan systems are supposed to offer at least a 50X speedup on a diverse set of real science and data analytics workloads compared to Summit and Sierra. (Figuring this out is a bit of a challenge, with only about a quarter of the nodes installed on the Summit machine and Sierra at probably around the same scale. Everyone hopes that these machines will be fully installed by the TOP500 rankings in June at ISC 2018.) The workloads are getting broader for these capability-class machines, but the desire to tightly couple the software to the underlying hardware is not lessening, either. This is a very big engineering challenge, and it is one of the reasons why Nvidia has so many different kinds of compute crammed into the current “Volta” GPU accelerators, which provide the vast majority of the compute and memory bandwidth in the Summit and Sierra machines.

To get that 50X speedup on its core applications, Oak Ridge and Livermore say they will probably need machines with at least 1.3 exaflops of aggregate double precision peak floating, and are quite upfront that just supplying a flat 1 exaflops will not do the trick. The RFP has a requirement that the scalability of the system be able to be dialed up and down, and moreover, as was the case with the Summit and Sierra machines, there has to be a way to configure memory, storage, and networks to meet different performance and budget profiles.

The CORAL-2 procurement also requires that the system have a total of at least 8 PB of memory, and early tests on simulated exascale applications showed that somewhere between 4 PB and 6 PB of high bandwidth memory (generically, not literally the HBM made by Samsung and used in Nvidia’s GPUs and compute engines made by others) were needed. The RPF pegs 5 PB as the minimum for this high bandwidth memory sitting next to the compute engines. This memory capacity and the bandwidth associated with it is not just necessary for traditional HPC simulation and modeling workloads, but also for data analytics and machine learning workloads that are increasingly part of the science toolbox.

With the original CORAL procurements, the systems proposed had to have local persistent storage but the larger I/O subsystems, including burst buffers and parallel file systems, were optional. Now, with CORAL-2 systems, the RFP has to include the entire I/O subsystem. This is, we presume, one of the reasons why the budgets for these exascale systems are on the rise compared to pre-exascale systems. The Frontier machine at Oak Ridge has to have a POSIX-compliant file system that has a global namespace and that will be used for the entire center for various applications, not just for Frontier. The El Capitan system at Livermore needs to support two types of namespaces – one that is global and the other that is transient – so it presents two logical storage tiers to the El Capitan machine, each with its own performance and data storage and resilience requirements.

For the past several years, as organizations have discussed bringing out exascale machines perhaps as early as 2020, the talk always drifted towards lifting the power envelope on the systems and scaling out the nodes more aggressively within that power to hit the performance targets. Some suggested that power might have to go as high as 80 megawatts for an exascale systems. Oak Ridge, Livermore, and Argonne are having none of that. They prefer that the power consumption of the Frontier and El Capitan systems be somewhere between 20 megawatts and 30 megawatts – the average of the two being about what one nuclear reactor kicks out. But the CORAL-2 procurement also hedges a little and says that 40 megawatts is the limit to what the three labs can deliver to their HPC centers for a single machine and it is also about the most that they can afford to spend on juice to run them. We have little doubt that it will be very difficult to make a 25 megawatt power budget for such a machine (including its storage and peripherals) in the 2022 to 2023 timeframe, and kudos to the hardware and software engineers if they can make it happen.

One interesting and new bit that is part of the CORAL-2 RFP is that the system has to include an integrated telemetry database and analytics tools to monitor all aspects of the performance of the Frontier and El Capitan systems and to allow role-based access so different groups – system administrators, operators, researchers, and other end users – can access different parts of the data stored in this database help them figure out what is going on with their jobs as they run on the machines. You can manage what you can’t measure, and the hyperscalers are masters at such monitoring and management because this is precisely what gives them margin.

Maybe Google or Amazon Web Services or Microsoft Azure should build such a machine, then? Although it is a very remote possibility, it would no doubt be fun to see that happen. It seems likely that the IBM-Nvidia-Mellanox partnership is the frontrunner for the CORAL-2 procurement, but perhaps not by a huge margin if Intel and Cray are cooking up something interesting for the Aurora 2021 machine and have plans that could quickly expand upon that by 2022.

If Intel and Cray can deliver a 1 exaflops machine by 2021, goosing it by 30 percent to 1.3 petaflops by 2022 does not seem like a huge stretch. AMD is also a possible contender, particularly since it is working on a exascale machine based on its CPUs and possibly DSP or GPU accelerators with China. AMD would need an interconnect partner to make such a bid, and Mellanox would presumably be interested. Hewlett Packard Enterprise could also be a contender, not just for a hybrid CPU-GPU machine, but also possible as a “novel architecture” alternative with a massively scaled version of The Machine, something we discussed here last June. There is another possibility: Nvidia could pitch its own system design and be the prime contractor instead of IBM. We think this is probably not going to happen, but it could. It seems extremely unlikely that other architectures, such as the HPC and AI chips coming out of Fujitsu for the RIKEN lab, would ever be used in a DOE facility.

The average modern nuclear reactor puts out a Gigawatt.

Skynet?

Not sure how Intel is involved as a contender at all because CRAY does not work with Intel anymore:

http://investors.cray.com/phoenix.zhtml?c=98390&p=irol-newsArticle&ID=2343215

Global supercomputer leader Cray Inc. (Nasdaq:CRAY) today announced it has added AMD EPYC™ processors to its Cray® CS500™ product line. To meet the growing needs of high-performance computing (HPC), the combination of AMD EPYC™ 7000 processors with the Cray CS500 cluster systems offers Cray customers a flexible, high-density system tuned for their demanding environments. The powerful platform lets organizations tackle a broad range of HPC workloads without the need to rebuild and recompile their x86 applications.

“Cray’s decision to offer the AMD EPYC processors in the Cray CS500 product line expands its market opportunities by offering buyers an important new choice,” said Steve Conway, senior vice president of research at Hyperion Research. “The AMD EPYC processors are expressly designed to provide highly scalable, energy- and cost-efficient performance in large and midrange clusters.”

Cray added AMD, but it certainly did not drop Intel.