Ian Buck doesn’t just run the Tesla accelerated computing business at Nvidia, which is one of the company’s fastest-growing and most profitable product lines in its twenty-five-year history. The work that Buck and other researchers started at Stanford University in 2000 and then continued at Nvidia helped to transform a graphics card shader into a parallel compute engine that is helping to solve some of the world’s toughest simulation and machine learning problems.

Nvidia held its annual GPU Technology Conference last week, and we sat down with Buck to chat about a range of topics relating to GPU accelerated systems, including what is driving the adoption of GPU computing and new technologies, such as the NVSwitch, that help boost the performance of machine learning and perhaps HPC workloads.

Timothy Prickett Morgan: I will start with something that has been bugging me lately. I wrote a piece recently about how GPUs were the engine of compute for AI and HPC, and some of the feedback I got was that for a lot of HPC workloads, the average speedup for hybrid CPU-GPU clusters was on the order of 3X compared to plain vanilla CPU clusters, but the nodes cost three times as much so really it was just a wash and all you really got out of it was more programming effort in exchange for denser compute. I am not that cynical about that, and I suspect that plenty of the HPC codes are only offloading key parallel parts of their code to the GPUs, like the solvers, and that limits the speedup they get, but that is only one possible explanation. What do you think about this feedback?

Ian Buck: Your analysis is correct. For some applications which did not have a certain X factor of speedup, the price/performance doesn’t work out. It’s a wash.

We have done surveys of the HPC codes, and about 70 percent of the processing cycles of the HPC centers is dominated by 15 different applications. This small number of applications dominate compute time. We have been focused on accelerating those applications first as well as making them run well. There are hundreds of accelerated applications, but some of them are just moving to GPUs and they are going to take a while. You do have to know your workloads, and at every HPC center, all that we ask is that they look at their applications and first of all make sure they are on the most recent versions of the code, many of which have been accelerated by GPUs. On average, if you compare a server with four Volta GPUs against a best-in-class server with two “Skylake” Xeon SP processors, the average speed up across a basket of HPC applications is 20X. You have to do the division to get the price/performance because we don’t set pricing or talk about it.
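The division Buck refers to can be sketched as speedup divided by relative node cost. The snippet below is a minimal illustration in Python. The function names and the prices are hypothetical placeholders, not Nvidia or vendor figures.

```python
def price_performance_gain(speedup, accel_node_cost, cpu_node_cost):
    """Return how many times more work per dollar the accelerated node delivers.

    speedup: accelerated-node throughput divided by CPU-node throughput.
    accel_node_cost, cpu_node_cost: node prices in the same currency.
    """
    if speedup <= 0 or accel_node_cost <= 0 or cpu_node_cost <= 0:
        raise ValueError("speedup and costs must be positive")
    cost_ratio = accel_node_cost / cpu_node_cost
    return speedup / cost_ratio


def break_even_speedup(accel_node_cost, cpu_node_cost):
    """Speedup at which the accelerated node is exactly a wash on price/performance."""
    return accel_node_cost / cpu_node_cost


# The "wash" case raised in the question: 3X faster at 3X the price.
# Both prices are hypothetical.
wash = price_performance_gain(3.0, 30_000, 10_000)
needed = break_even_speedup(30_000, 10_000)
```

A result of 1.0 means no gain per dollar. Anything above the break-even speedup favors the accelerated node on price/performance, before counting porting effort or density.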

TPM: OK, I can do some quick math. It is probably on the order of $15,000 for a heavily configured Skylake Xeon machine, and maybe $60,000 for one of IBM’s AC922 nodes, and with a 20X speedup at four times the cost, that works out to a factor of 5X better price/performance.

Will we ever get to the point where every important HPC code is accelerated and sees the high performance levels that Nvidia is seeing with AI workloads? When does this become a no brainer?

Ian Buck: There will always be some applications that, for a lot of different reasons, will not be accelerated in the medium term, and that is alright. We are not naïve – we don’t have a tool that just automatically parallelizes everything. So I think that at least for the medium term there will always be some applications that will not be accelerated. I don’t think it is necessary for Nvidia to add value to an HPC center for us to have all of their applications accelerated, but they should work on the top 15 and have a significant portion if not all of their nodes accelerated with GPUs. If all of them are not equipped with GPUs, good. Buy some. That’s OK, partition the cluster and get the 5X, the 7X, and the 10X speedup for these applications.

TPM: That partitioning idea brings me to my next point, and it relates to the new NVSwitch that is at the heart of the DGX-2 system. If you look at the “Catapult” FPGA accelerated system from Microsoft, the innovation was not so much having a 40 watt FPGA mezzanine card that plugged into hyperscale servers, but rather the SAS networking fabric that the company created to link the FPGAs to each other in their own mesh network. This allowed Microsoft to pair FPGAs to CPUs in a lot of different ratios and topologies, and importantly, let the CPUs do other work and therefore run at higher utilization than they might otherwise have had if the FPGAs sitting next to them in the chassis were not actually doing work dispatched by them. I can envision an extended NVSwitch architecture, daisy chaining across multiple server nodes, meshing up not just sixteen GPUs, but maybe dozens or hundreds and allowing a similar kind of capability. That would allow CPU-only and largely serial workloads, or workloads that have not yet been ported to GPUs, to run full tilt at the same time that GPUs, under control of other CPUs in the cluster and potentially dynamically configured across that mesh memory fabric, were doing parallel work. Is this something that makes sense with NVSwitch?

Ian Buck: That is an interesting idea, but it is not the impetus behind the design for NVSwitch. NVLink fabric using NVSwitch is really meant to create a very tight consortium of GPUs to do high performance deep learning training and in some cases HPC workloads where all-to-all communication within the node really matters.

TPM: Give me an example of a kind of HPC workload that NVSwitch might be useful for.

Ian Buck: Large scale Fast Fourier transforms tend to have all-to-all communications for doing large scale seismic processing, for example. They want to do FFTs on huge volumes, and FFTs generally do shuffle operations through the network and this can really benefit from that all-to-all communication.
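The shuffle Buck describes is commonly implemented as an all-to-all exchange. In a multidimensional FFT, for example, each device transforms along the dimension it holds locally, and then the data is redistributed so the next dimension becomes local. The toy sketch below simulates that exchange in Python with lists standing in for devices. The function names are hypothetical, and this is not a description of how Nvidia's libraries implement it.

```python
def all_to_all(send):
    """Simulate an all-to-all exchange among p devices.

    send[src][dst] is the block that device src sends to device dst.
    The result recv satisfies recv[dst][src] == send[src][dst].
    """
    p = len(send)
    if any(len(row) != p for row in send):
        raise ValueError("each device must have one block per destination")
    return [[send[src][dst] for src in range(p)] for dst in range(p)]


def cross_device_messages(p):
    """Blocks that actually leave a device: every ordered pair with src != dst."""
    return p * (p - 1)


# Device d starts with row d of a 4 x 4 grid; after the exchange it holds column d.
rows = [[10 * r + c for c in range(4)] for r in range(4)]
cols = all_to_all(rows)
```

Every device talks to every other device in a single step, so the number of cross-device messages grows roughly with the square of the device count. That is why this pattern stresses the interconnect more than nearest-neighbor exchanges do.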

What you are talking about is more like remote GPU harvesting, being able to reach out to other nodes and use the GPUs in them. That is not really enabled in NVSwitch right now, and there are some challenges in doing that, particularly with regard to the programming model and the operating system. It is kind of counter to the tight interconnect we have and our programming languages, which assume a unified memory space.

TPM: And it is a memory semantic architecture anyway.

Ian Buck: And you are putting a very big latency gap in there, and NVLink doesn’t go outside of the server chassis. You really need to do that over InfiniBand. We have done some playing around in a research setting, but it is not something we support.

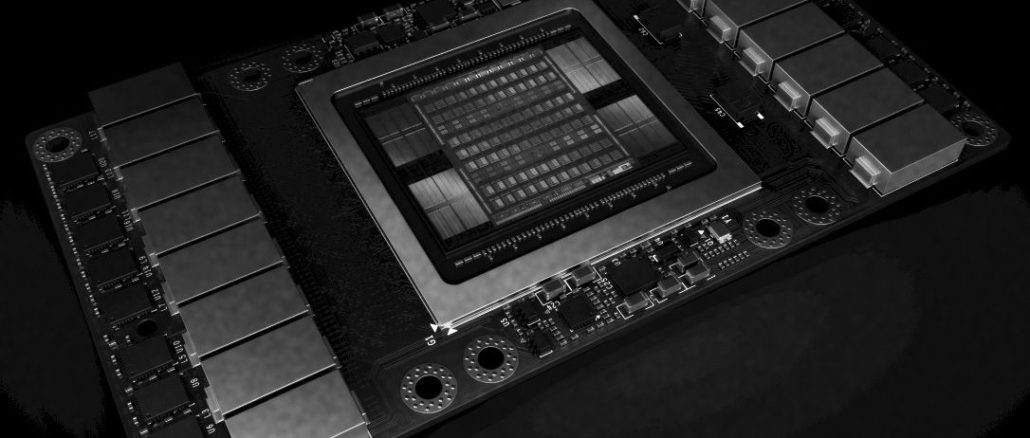

TPM: Was NVSwitch invented because you know that the Moore’s Law steps are getting harder and harder to do? Nvidia is on a very advanced, bleeding edge, custom 12 nanometer FinFET process from Taiwan Semiconductor Manufacturing Corp for the Volta GPUs, and as far as I know, you are right up against the reticle limit with this immersion lithography technique. It is hard to imagine what else you can do to scale up the GPUs, so you have to scale out.

Ian Buck: Well, we are not done on the silicon side because we are not done with computer architecture. We are revising and adapting our architectures for the future of deep learning. As we learn more things from our customers, we incorporate that up and down the stack, not just in the chips. We have always been at the reticle limit, we have always built the biggest possible chip we could.

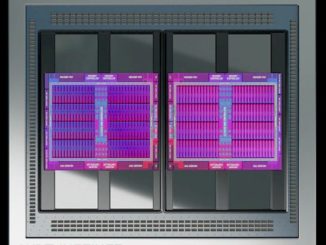

One of the things that NVSwitch does let us do for sure is scale within a node, but another thing it lets us do is model parallelism. Today, most neural networks are trained doing batch parallelism, which means I take 64 or 128 images and train 64 or 128 copies of the neural network at the same time, and then at the end I merge the results together. This is basic data parallelism. This gives more efficiency, in that I can train faster. The other way of doing it is to take the neural network and spread it across the GPUs, so the first layer is on one GPU, the second on the second GPU, and so forth. That’s the model parallelism. And then you can batch up the model parallelism and have multiple copies in flight, and have this product of model parallelism and batch parallelism. The challenge with this is that the communication requirements between the GPUs go way up. NVSwitch lets us do model and batch parallelism, and at scale.
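The two schemes described here can be contrasted with a toy sketch in Python. It assumes a one-parameter model and plain Python lists standing in for GPUs. All names are hypothetical, and this is not how any particular framework implements either scheme.

```python
def grad_mse(w, xs, ys):
    """Gradient of mean squared error for the one-parameter model y = w * x."""
    n = len(xs)
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / n


def data_parallel_grad(w, xs, ys, num_devices):
    """Batch (data) parallelism: every device has a copy of w and a shard of the batch."""
    if len(xs) % num_devices != 0:
        raise ValueError("batch must divide evenly across devices in this sketch")
    shard = len(xs) // num_devices
    local = [
        grad_mse(w, xs[d * shard:(d + 1) * shard], ys[d * shard:(d + 1) * shard])
        for d in range(num_devices)
    ]
    # Merge step (an all-reduce on real hardware): average the local gradients.
    return sum(local) / num_devices


def model_parallel_forward(layers, x):
    """Model parallelism: layer i lives on device i, activations hop between devices."""
    transfers = 0
    for device, (scale, bias) in enumerate(layers):
        if device > 0:
            transfers += 1  # the activation crosses from the previous device
        x = scale * x + bias
    return x, transfers


xs, ys = [1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0]
full = grad_mse(1.0, xs, ys)
merged = data_parallel_grad(1.0, xs, ys, num_devices=2)
out, hops = model_parallel_forward([(2.0, 1.0), (3.0, 0.0), (1.0, -1.0)], 1.0)
```

With equal shards, the merged gradient matches the full-batch gradient, so data parallelism only communicates at the merge. In the layer-per-device case, every input pays one transfer per layer boundary on the forward pass, which hints at why communication demands rise once the model itself is spread across GPUs.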

TPM: Is there a desire to have a larger NUMA instance on the CPU side – say four sockets instead of two – and then double up the GPU counts to 32 per node to get an even larger memory space for these models to play in?

Ian Buck: You could go further, but for training we felt like two high-end Xeons and 1.5 TB of memory was enough.

TPM: Some of the suppliers of machines to the hyperscalers are talking about having 64 GPUs or 128 GPUs in a cluster for running training, and they are using a mix of PCI-Express switching and sometimes NVLink within the node and then 100 Gb/sec InfiniBand or Ethernet with RoCE across nodes. I imagine they would want to have this all podded up in a much tighter shared memory cluster, and you could do it with optical interconnects with NVLink and NVSwitch. So I don’t think 16 GPUs in a node is by any means an upper limit of where the models are today, and the models keep getting bigger. I can envision a second generation of NVSwitch having 36 ports or 48 ports, and daisy chaining them to extend that memory fabric a lot further. Is there demand for that? It may be a corner case with three or four hyperscalers, I realize.

Ian Buck: Certainly NVSwitch lets us build an NVLink memory fabric. You have seen what we have done inside DGX-2, and it is an amazing engineering feat, with twelve switches providing that all-to-all communication. With that full stack optimization, we showed Fairseq training going from 15 days to 1.5 days, a 10X improvement, in only six months. It would be really cool to keep going, but from the market standpoint, we are going to absorb and learn how to take advantage of 16 GPUs in one server and one OS image before we scale up beyond that. Beyond 16 GPUs right now, we are relying on InfiniBand, with eight ports running at 100 Gb/sec, and doing distributed training across multiple operating systems. We did not design NVSwitch to be a local area network switch because Mellanox does a great job of that. That is not our goal.

TPM: Will Nvidia ever produce what I would call a DGP-2, which is a version of the Nvidia GPU server platform that has IBM’s Power9 processors instead of Intel’s Xeons? Obviously, with the Power9 chip having native NVLink ports and the ability to maintain coherency across the CPU and GPU memories, this would seem to be a pretty big advantage. IBM’s “Newell” Power AC922 is a good platform and forms the basis of the “Summit” supercomputer at Oak Ridge National Laboratory and its “Sierra” companion at Lawrence Livermore National Laboratory, but the system does not pack as many GPUs as a DGX-2, which has that nifty new NVSwitch to cram up to sixteen “Volta” Tesla V100 accelerators into a single fat node. Given all of this, I think many customers would want this, but for all I know, you and IBM have a deal to not create such systems or you have a deal to eventually do so.

Ian Buck: IBM makes great systems, and they are building lots of them right now. Our strategy is not to be an OEM, and we are not trying to be what IBM, Hewlett Packard Enterprise, Dell, and others do for the market, which is to allow enterprises to have highly configurable systems in terms of the number and performance of CPUs and GPUs for their datacenters. They are good at their job, IBM especially, and they are certainly an innovation partner in that we designed the original NVLink with IBM and they will continue to be part of that process as we build future supercomputers.

But our mission is to build one system at a time – the best possible one that we know how to make – and to build it first and foremost for ourselves for our own deep learning development for self driving cars and other use cases.

TPM: But you could choose OEM systems or do what the hyperscalers do and design a machine and have it built by an ODM without having to put a machine up for sale and arguably compete with your own channel partners. You didn’t have to be a server vendor, although Nvidia no doubt loves to get the revenues from system sales. I see how it is good for Nvidia – you get in the front of the line for the latest GPUs, you get to parcel them out to researchers and enterprises, and you get all that good public relations. The presumption is that this is a kind of reference architecture or showcase for integration of technologies, such as NVLink and PCI-Express switching in a fat node and now the NVSwitch, which is even better.

Ian Buck: The DGX machines are not just made for that reason. We make them available to the market so we can help create that market and help it take that next step by presenting a leadership class system. That gives confidence to AI researchers and developers and their enterprise counterparts that this is the kind of system that reflects where AI is going, and it creates demand for these amazingly powerful and complicated servers. And this de-risks a lot of it for our channel partners.

TPM: Where do you draw the line in terms of the volume of DGX machines you want to sell? I saw this problem with SeaMicro after AMD bought it. AMD was a CPU and chipset partner to the server makers, but then it was a competitor in microserver clusters aimed at webscale datacenters, which annoyed plenty of partners who would just as soon do that part of the engineering as their value add. In the end, SeaMicro’s interconnect was not adopted and resold by OEMs and they went off and did their own microservers, AMD’s Opteron processor business died of neglect, and it was all for naught. It is a delicate balance that you have to strike as a technology supplier that also builds systems.

Ian Buck: This is different. First of all, this is only for AI, and we are not trying to corner the market. Second, we are not afraid of that conflict. For instance, we make Titan GPU cards, but we also enable a world of AIC vendors to make graphics cards. We make Nvidia Shield, but we also help Nintendo make the Switch. We make DGX, but we help HPE, Dell, IBM, and others make their servers. The more ways we can give customers access to the technology, the better. In the end, we just want more GPU acceleration in the datacenter.
