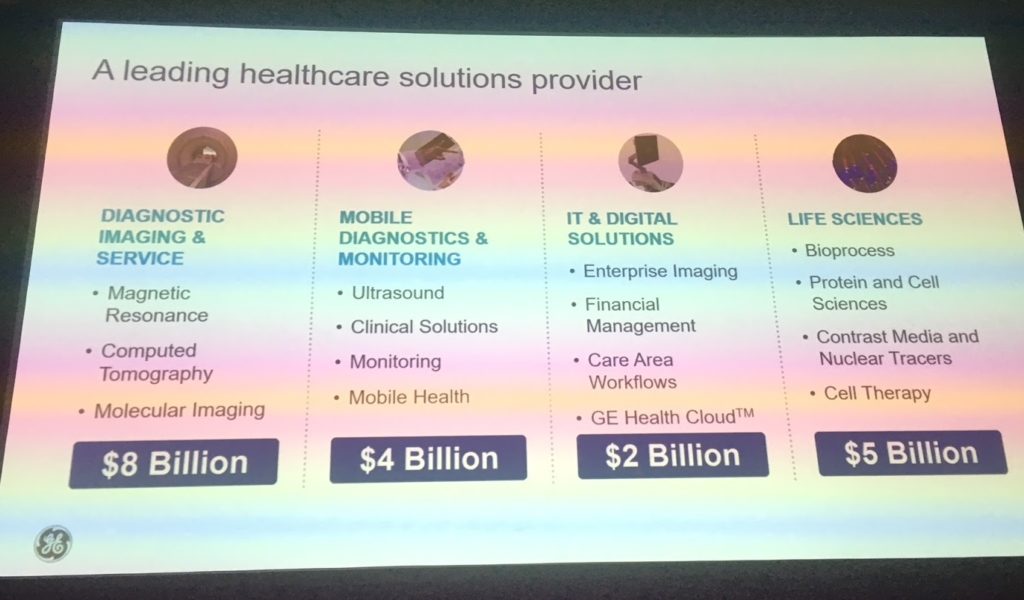

For more than a decade, GE has partnered with NVIDIA to support its healthcare devices. Growing demand for high-quality medical imaging and mobile diagnostics alone has built a $4 billion segment of the $19 billion total life sciences budget within GE Healthcare.

This year at the GPU Technology Conference (GTC18), The Next Platform sat in as Keith Bigelow, GM & SVP of Analytics, and Erik Steen, Chief Engineer at GE Healthcare, discussed the challenges of deploying AI, focusing on cardiovascular ultrasound imaging.

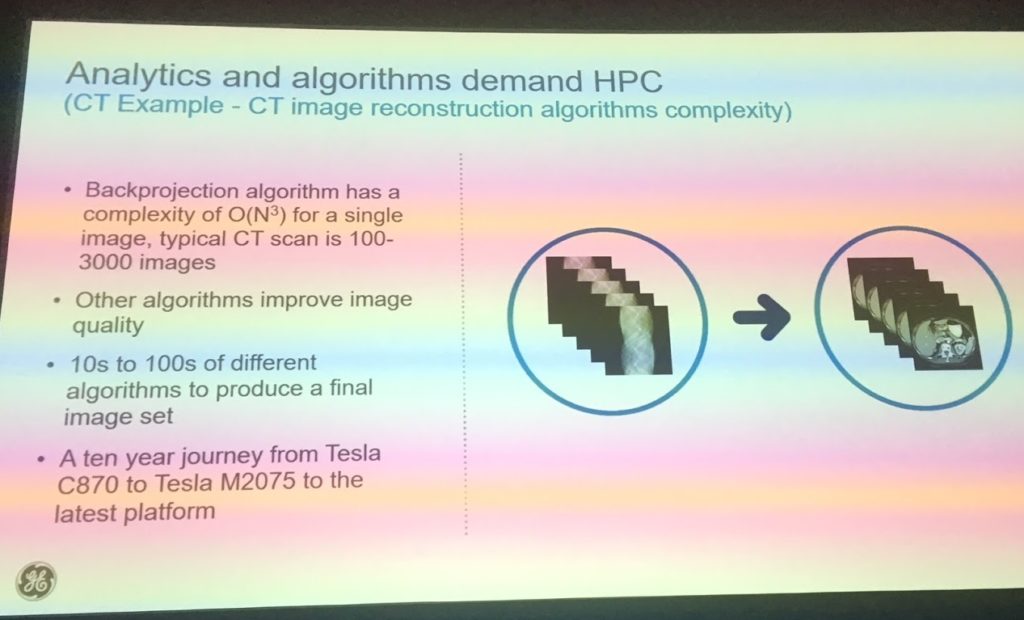

There is a wide range of GPU-accelerated medical devices, as well as those that rely on a GPU as the host processor. Both take advanced software development efforts, and most medical applications have benefitted from the steady upward trend in GPU performance across a range of devices, from Volta GPUs used to train neural networks to recognize medical image data down to much smaller, lower-power devices that sit inside more compact medical gear.

In the areas where GE Healthcare plays, abstracting away local compute is a far more common goal, and the way physical infrastructure is rooted differs from HPC or even large-scale deep learning. In other words, GPUs matter both embedded in the device and in remote systems.

GE talked about this evolution and why it started development on AWS, slowly adopting more and more of its cloud services. Of course, not all medical devices are on a network, so standalone systems are needed as well. Announcements from GTC this week, particularly around Kubernetes being deployed on NVIDIA hardware, are of particular interest to GE. The team still needs significant computing horsepower, so it continues to train in the cloud, but it then needs to define artifacts and annotate locally. It needs to deploy the exact same models on cloud and off cloud. We will look at this trend in the context of use cases in a moment.
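One simple way to check that "the exact same model" really is running in both places is to compare a cryptographic digest of the model artifact on each side. The sketch below is illustrative only and is not GE's method: the function names `artifact_digest` and `same_model` are hypothetical, and it assumes the model is available as a single serialized byte string.

```python
import hashlib


def artifact_digest(model_bytes: bytes) -> str:
    """Return the SHA-256 hex digest of a serialized model (assumed format)."""
    return hashlib.sha256(model_bytes).hexdigest()


def same_model(cloud_bytes: bytes, device_bytes: bytes) -> bool:
    """True when the cloud and on-device artifacts are byte-for-byte identical."""
    return artifact_digest(cloud_bytes) == artifact_digest(device_bytes)


if __name__ == "__main__":
    # Placeholder byte strings stand in for real serialized model files.
    print(same_model(b"weights-v1", b"weights-v1"))
    print(same_model(b"weights-v1", b"weights-v2"))
```

A digest check of this kind only confirms the bytes match; it says nothing about whether the surrounding runtime behaves identically on the device.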

GE Healthcare put the role of GPUs in medicine in context by discussing a few use cases and how they have evolved in recent years. For instance, cardiovascular ultrasound is a critical diagnostic technology used in emergency rooms around the globe. Making decisions based on ultrasound images is a key life and safety issue. The team at GE traditionally designed custom hardware and software to support its platforms, but as image quality had to improve to increase the capability of those diagnostic platforms, the team turned to GPU technology and more software-defined systems.

GE Healthcare believes that moving away from custom systems has enabled more flexibility, speed and accuracy. Decoupling the algorithms required for training from on-site, in-situ annotation in the emergency room has also finally allowed the company to provide real-time systems that can detect anomalies in images in the clinic.

The talk used Automatic Cardiac View Automation from GE Healthcare as the basis for some of the infrastructure discussion. GE needs its current and future scanners to both recognize the images and build a pipeline based around standard cardiac views. In the clinic, multiple “views” are taken: suprasternal, parasternal, subcostal and apical. Unfortunately, there is considerably more variability than one would first think in how these scans are carried out.

By building a rich dataset from many different hospitals and processes, diversity could be built right into the training set. Over 8,000 ultrasound loops with variable image quality were used for training, and 900 additional loops from a separate group were used for validation. The orientation and alignment of images can now be computed in real time, in situ, directly on the instrument. This removes variability and the potential for error and, more importantly, misdiagnosis.
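Keeping the validation loops in a group of their own can be sketched as a group-wise hold-out split. The talk did not say how GE partitioned its data, so everything here is an assumption: the "separate group" is read as distinct contributing sites, and `split_by_site`, `holdout_fraction` and the `(loop_id, site)` records are hypothetical names used for illustration.

```python
import random
from collections import defaultdict


def split_by_site(loops, holdout_fraction=0.1, seed=0):
    """Split (loop_id, site) pairs so that no site lands in both sets.

    Whole sites are held out for validation, so the validation loops
    come from a group the training set never saw (an assumed reading
    of "separate group").
    """
    by_site = defaultdict(list)
    for loop_id, site in loops:
        by_site[site].append(loop_id)

    sites = sorted(by_site)
    random.Random(seed).shuffle(sites)  # seeded for a reproducible split

    n_holdout = max(1, round(len(sites) * holdout_fraction))
    holdout_sites = set(sites[:n_holdout])

    train = [l for s in sites if s not in holdout_sites for l in by_site[s]]
    val = [l for s in sites if s in holdout_sites for l in by_site[s]]
    return train, val, holdout_sites


if __name__ == "__main__":
    # Synthetic records: 10 made-up sites with 5 loops each.
    demo = [(f"loop-{s}-{i}", f"site-{s}") for s in range(10) for i in range(5)]
    train, val, held = split_by_site(demo, holdout_fraction=0.2)
    print(len(train), len(val), sorted(held))
```

Splitting by site rather than by individual loop avoids the situation where near-identical loops from the same scanner or operator appear on both sides and inflate the validation score.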

The Next Platform spoke with Kimberly Powell, VP Healthcare & AI Business Development at NVIDIA, about the “large edge” issue of having compute-heavy devices inside the clinic. She was quick to point out potential solutions to the obvious “last mile” issue. Her suggestion was to apply “Virtual GPU” technology from NVIDIA. Grid-based AI and visualization could then remove the challenge of having a “large edge” by rendering the image data and building the annotations in either an on-premise or off-premise cloud based on “GPU as a Service”. Such services are becoming popular in the gaming community, but have yet to make significant inroads into healthcare. It is an interesting idea and could allow scale while also reducing complexity. Based on some of these concepts, NVIDIA also demonstrated its upcoming “Project Clara”. Clara shows promise for providing both remote compute and visualization across a wide assortment of healthcare imaging challenges.

During the discussion at the meeting, the question of “compute global”, “annotate local” was raised by a number of people in the audience. The Next Platform noted an immediate comparison to autonomous driving systems. Autonomous driving requires large HPC systems to first train the networks, but then smaller, lower-power, fault-tolerant systems are used at the “edge” (inside the vehicle) to provide real-time diagnostics and analytics of the environment. This is essentially a “last mile” challenge in healthcare at the edge. How do we provide clinicians with the tools they need without having to wheel around large, cumbersome computing equipment in the clinic?

Last mile challenges are notoriously difficult to solve. Ask any telecommunications provider: the last mile is the part of the network with the least control and the highest levels of variation, ambiguity and potential for error. The exact same can be said of medical devices. This is now a ubiquitous and classic edge computing conundrum.

Importantly, medical images today are analysed at the level of pixel/RGB color and intensity. Traditionally, devices provided “human readable” output for the attending clinician to physically look at with their expertly trained eyes. The raw channel data available from detectors is often not considered computationally, other than via standard data reduction methods that generate constrained DICOM standard images.

More sophisticated algorithms that consider raw and RF channel data rather than human-readable DICOM are being developed by GE in partnership with Partners Healthcare in Boston, Massachusetts. This is an active area of research in advanced artificial intelligence at the edge and within the data center.

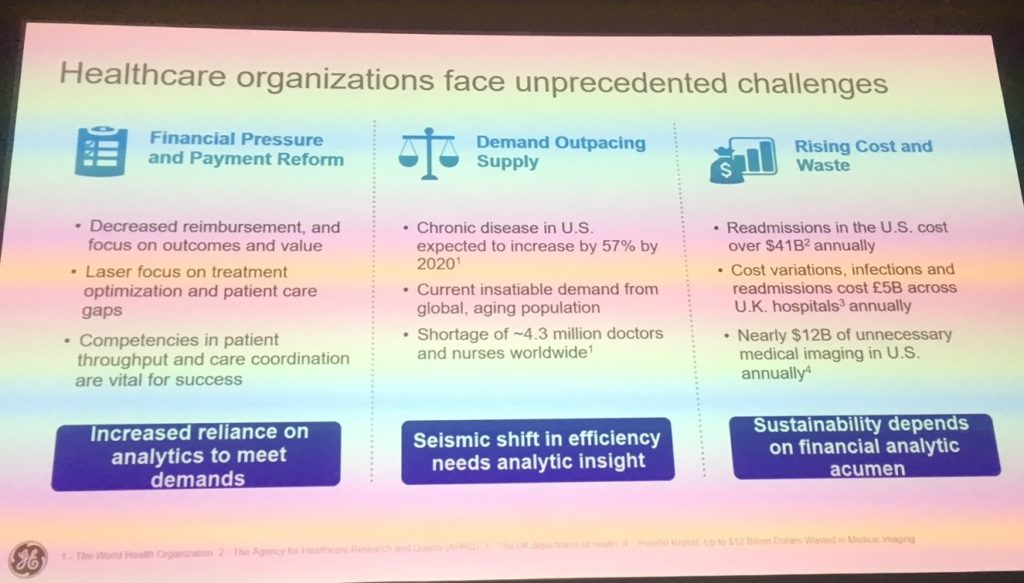

With ever more sophisticated real-time analytics, advanced computing can rapidly identify errors, often faster and more accurately than a tired and overworked human. Often these are simple artifacts of the physical imaging process. The problem is large enough that, according to Bigelow, over $12 billion worth of wasted medical images are taken annually in the United States alone. This results in significant costs for both the providers and the payers in an already cost-sensitive business. If imaging waste raises concern, then the $41 billion cost of medical readmissions, often also driven by the need for tests to be rerun due to “medical image waste”, is an even more powerful persuader to get the imaging right in situ the first time.

Better imaging and annotation right there in the clinic “at the edge” has a considerable and immediate impact on real-world health, life and safety issues, let alone the phenomenal potential for lowering the total cost of global healthcare.

Dr. James Cuff

Distinguished Technical Author, The Next Platform

James Cuff brings insight from the world of advanced computing following a twenty-year career in what he calls “practical supercomputing”. James initially supported the amazing teams who annotated multiple genomes at the Wellcome Trust Sanger Institute and the Broad Institute of Harvard and MIT.

Over the last decade, James built a research computing organization from scratch at Harvard. During his tenure, he designed and built a green datacenter, petascale parallel storage, low-latency networks and sophisticated, integrated computing platforms. More importantly, he built and worked with phenomenal teams of people who supported our world’s most complex and advanced scientific research.

James was most recently the Assistant Dean and Distinguished Engineer for Research Computing at Harvard, and holds a degree in Chemistry from Manchester University and a doctorate in Molecular Biophysics with a focus on neural networks and protein structure prediction from Oxford University.

That “Edge” branding, whatever it is fully intended to imply, is a little too marketing-oriented in the worst sort of way. Can we get on to using some more generic naming that is less marketing-oriented? And I mean that most sincerely for The Next Platform, before all reader trust is lost. That “Edge” has no place in any discussion from any sort of person who has “Dr” as a title.

And any discussion of any single maker’s product that is not compared and contrasted with any of the competition’s similar IP is always going to be interpreted by most readers as sponsored content.