The dark and mysterious art of artificial intelligence and machine learning is neither straightforward nor easy. AI systems have been termed “black boxes” for this reason for decades now. We desperately continue to present ever larger, more unwieldy datasets to increasingly sophisticated “mystery algorithms” in our attempts to rapidly infer and garner new knowledge.

How can we try to make all of this just a little easier?

Hyperscalers with multi-million dollar analytics teams have access to vast, effectively unlimited compute and storage of all shapes and sizes. Huge teams of analysts, systems managers, and resilience and reliability experts stand up equipment in units of issue literally the size of football fields. They are well fed and mostly happy; they have this down. But they are the metaphorical 1% of large-scale deep learning users.

In the real world, things are quite a bit different. There, we have the lone researcher, or the lone analyst: quite likely one who has just been handed the unenviable task of making some sort of sense of a moderately sized enterprise customer relationship database. As one might imagine, it is really not a great time for that analyst. Maybe they call IT? Maybe they have a little team who can stand up some open source software. Maybe they have heard of CUDA? Most likely, though, they are entirely on their own.

Enter “Easy AI”

A large number of AI toolkits are available to “lower the barrier” for potential consumers of exciting modern machine learning. However, these toolkits originally sprang up inside either the hyperscale or the academic world, and as a result they are quirky by nature. The software stacks are huge moving targets, and they continue to evolve rapidly. As we have discussed before, there are few standards in AI tools and methods. It is a complicated world of moving parts and pieces. The challenge now is how to tame the complexity.

This isn’t a new idea, of course, but few in this space are. In the late 1990s the author spent many hours in front of software called the Stuttgart Neural Network Simulator (SNNS). This software essentially allowed a researcher with no machine learning expertise to build, train, and interact with basic neural networks to answer their scientific questions. Platforms with names like IBM RS 6000/320, SUN SparcSt. 5, SunOS 4.1.3, SGI Indigo 2, and IRIX 4.0.5 were also popular back then. Even so, the parallels with the complexity underlying today’s AI are striking.

These kinds of “interfaces”, elegant UI/UX that lets humans interact with complex systems, have become ubiquitous in scientific research. For example, the now popular Apache Taverna grew out of a similar challenge in the life sciences, in the then early world of “grid” computing and web services. Building integrated pipelines for research was hard. Sprinkle data provenance on top of all of that, and you have real challenges to overcome. We needed computers to be easier to interact with so we could focus on asking the scientific questions and not worry so much about the plumbing. Unfortunately, this isn’t the only problem.

Pete Warden of the Google deep learning team (the team behind the popular, and complex, TensorFlow software) recently wrote about “The Machine Learning Reproducibility Crisis”. He is not wrong; it is a serious and growing issue. The challenge of data reproducibility and provenance, coupled with thousands of moving software parts and pieces, is terrifying. It should be terrifying: what exactly are these “black box” devices predicting? What are they telling us?

Machine learning unfortunately brings along an even larger challenge: we can’t actually interrogate the models we build and ask them “why” questions. Why did you predict that? Why did you classify the data that specific way? However, they often appear to work well enough, so we believe them. We believe them to the point where enterprises are now happily deploying “transfer learning”. Transfer learning takes a neural network or system pre-trained to solve one problem and turns around and applies that same network to a new, different but related problem.

As an example, think about using a classifier trained to identify trees to identify flowers or, as more often happens, reusing a demographics predictor to correlate the purchasing habits of 19 to 25 year olds versus 44 to 60 year olds. These predictions might not be particularly good, but as a brute force engine they are often “good enough”. Transfer learning also significantly cuts the required training time and compute, because you do not need to spend cycles training the network from scratch. You can, in theory, do more with less. Sounds great, doesn’t it?
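To make the idea concrete, here is a minimal, dependency-free Python sketch of the most common form of transfer learning: a feature extractor assumed to have been trained on an earlier task is frozen, and only a small new output layer (a logistic-regression “head”) is trained on the new task. The weights in `PRETRAINED_W`, the toy data, and every function name are hypothetical stand-ins rather than any real toolkit’s API; in practice the frozen part would be many layers of a network loaded from a framework such as TensorFlow or MXNet.

```python
import math

# HYPOTHETICAL "pretrained" feature extractor: fixed weights standing in for
# layers learned on an earlier task. They are frozen and never updated below.
PRETRAINED_W = [[0.9, -0.2], [0.1, 0.8], [-0.5, 0.5]]

# HYPOTHETICAL toy data for the new task: label 1 when the first input dominates.
NEW_TASK_DATA = [((1.0, 0.0), 1), ((0.8, 0.2), 1), ((0.0, 1.0), 0), ((0.2, 0.8), 0)]


def extract_features(x):
    """Frozen layer: ReLU(W x), reused unchanged on the new task."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_W]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(data, epochs=200, lr=0.5):
    """Train only a new logistic-regression head on top of the frozen features."""
    weights = [0.0] * len(PRETRAINED_W)
    bias = 0.0
    # Features are computed once, because the extractor never changes.
    feats = [(extract_features(x), y) for x, y in data]
    for _ in range(epochs):
        for f, y in feats:
            p = sigmoid(sum(w * fi for w, fi in zip(weights, f)) + bias)
            err = p - y  # gradient of the log-loss with respect to the logit
            weights = [w - lr * err * fi for w, fi in zip(weights, f)]
            bias -= lr * err
    return weights, bias


def predict(head, x):
    weights, bias = head
    f = extract_features(x)
    return 1 if sigmoid(sum(w * fi for w, fi in zip(weights, f)) + bias) >= 0.5 else 0


head = train_head(NEW_TASK_DATA)
print([predict(head, x) for x, _ in NEW_TASK_DATA])
```

Only four numbers (three head weights and a bias) are learned here, which is where the savings in training time and compute come from. Whether the reused features actually suit the new problem is exactly the “good enough” question raised above; nothing in the training loop checks it.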

The lovely thing about training neural networks is that you can sit and watch the loss curve fall as gradient descent iterates over your data, and try to convince yourself that it is working. Your network is somehow “learning” from your data. It is pure magic. More often, unfortunately, it is simply overfitting your training data and not actually learning a great deal at all.
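One common way to avoid trusting the falling training curve on its own is to hold back a validation set and watch its loss alongside the training loss. The plain Python sketch below implements simple patience-based early stopping and measures the gap between the two curves. The loss values are invented for illustration, and the function names are hypothetical rather than any framework’s API (Keras, for example, ships its own `EarlyStopping` callback).

```python
def find_best_epoch(val_losses, patience=3):
    """Early stopping: return the index of the epoch with the lowest validation
    loss, giving up once it has not improved for `patience` consecutive epochs."""
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss, waited = epoch, loss, 0
        else:
            waited += 1
            if waited >= patience:
                break  # validation loss has stalled or is rising: stop training
    return best_epoch


def generalization_gap(train_losses, val_losses, epoch):
    """Validation loss minus training loss at a given epoch. A gap that widens
    while training loss keeps falling is the classic signature of overfitting."""
    return val_losses[epoch] - train_losses[epoch]


# HYPOTHETICAL loss curves: training loss keeps falling, while validation
# loss bottoms out at epoch 3 and then climbs.
train = [0.90, 0.60, 0.45, 0.35, 0.28, 0.22, 0.17, 0.13]
val = [0.95, 0.70, 0.58, 0.55, 0.57, 0.61, 0.66, 0.72]

best = find_best_epoch(val)
print("best epoch:", best)
print("gap at best epoch:", round(generalization_gap(train, val, best), 2))
print("gap at final epoch:", round(generalization_gap(train, val, len(val) - 1), 2))
```

Watching only `train` here, every epoch looks like progress; the validation curve shows the network stopped generalizing after epoch 3 and spent the remaining epochs memorizing its training data.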

We have studied neural networks for decades, and without high quality input data, trying to resolve complex, meaningful patterns is an exercise in pure futility. Unless the signals you are searching for are obvious (many cats, and many purchasing habits, look rather similar from a distance), you are doomed. Finding a correlating signal and feature isn’t actually all that hard given a sufficiently large set of input data. However, discovering nuances in complex financial state models or subtle three dimensional protein structures, for example, is far from easy.

Interacting with complex software such as TensorFlow, Caffe, Intel Caffe, and MXNet is tricky. As humans, we clearly need help. Previously we spoke with Soren Klemm, who assembled Barista to help make interactions between humans and machine learning toolkits more meaningful. It is important to focus on human and computer interaction.

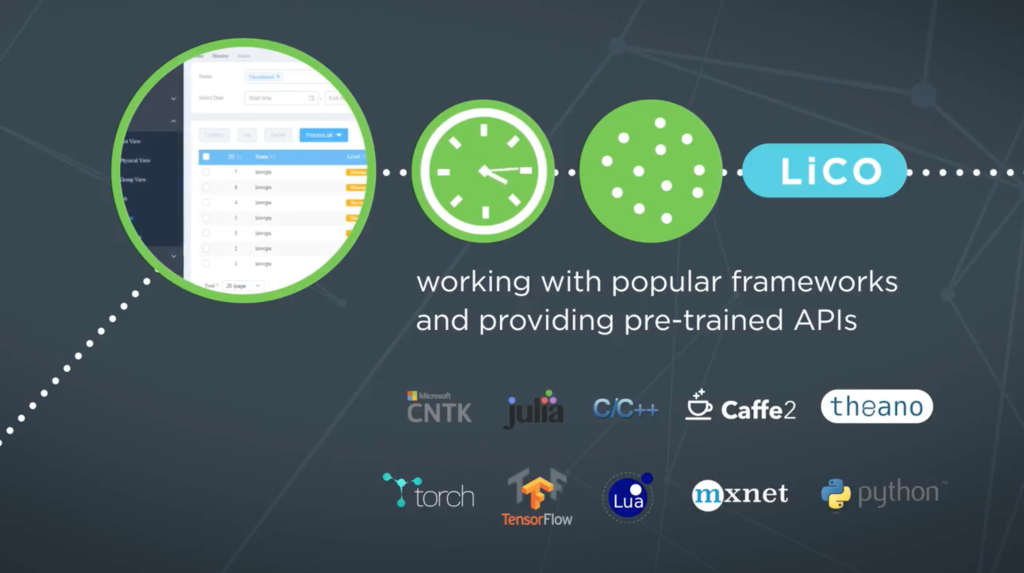

We also recently spoke with Dr. Bhushan Desam, Lenovo’s AI Global Business Lead, who has his own take on “Easy AI”. Lenovo’s technology stack, impressively called “Lenovo Intelligent Computing Orchestration”, or “LiCO”, aims to reduce the complexity of the total software stack. Through a careful selection of containerization and methods to “orchestrate” pre-made machine learning images, Lenovo says it can reduce the burden on the “lone analyst”.

LiCO fits squarely in the “post-prototype” part of the data analysis development pipeline: the point at which you outgrow a single GPU instance and start to scale to multiple machines, but still only on the order of 2 to 8 GPUs. This clearly isn’t exascale. It is for boutique, iterative development of techniques on moderately sized data that can fit down one or two Ethernet wires.

It is essentially the same idea as SNNS from the late nineties, but now Lenovo can also integrate some hardware into the mix for you. The Lenovo SR650 and SD530 systems are designed for exactly these types of small, boutique training workloads. Take a handful of those servers, add a spot of orchestration software, and the bar has been lowered sufficiently for many workgroups to start to dabble effectively in ML and deep learning.

Not too deep mind you, but maybe just deep enough.

ClusterOne also plays directly in this space, but is slightly more focused on making machine learning at scale a touch easier. “Concurrent jobs or distributed runs. One command does it all.” That is a seriously bold claim indeed. They can also run on premises, off premises, hybrid, in the cloud, or a mixture. Whatever you need, it is one-stop machine learning shopping. Fascinating.

NVIDIA has also made significant inroads into “machine learning in a box”. They understood early on that the power of their platform could only be fully unlocked through tight integration. For example, the DGX-1 platform brings some serious horsepower to the game (up to 1 petaflop of performance on deep learning codes with the V100-powered version). They couple that impressive hardware with a system image and software to quickly build out, in a single box, machine learning workloads at scales that were previously restricted to datacenter-sized operations.

If the DGX-1 is too rich for you, you can also look at the Jetson TX1 and TX2 embedded systems. These are also packaged with both custom operating systems and software toolkits to get your machine learning ideas up and running quickly. These embedded systems, the TX1 based on the NVIDIA Maxwell architecture and the TX2 on Pascal, are rapidly finding a niche in low-power autonomous, driverless, and edge applications that need real-time custom AI.

We are seeing folks such as Connect Tech building ruggedized systems with these development platforms for real-world applications and nonstandard, more hostile computing environments. The edge computing applications we have discussed previously are becoming ever more hungry for something like an 8 watt part that can deliver teraflops of potential performance with high memory bandwidth. This is serious edge capability, and there is the potential for millions of such devices, each calling home to the datacenter with large chunks of output and results.

“What we are seeing is essentially a democratization of machine learning at all levels.”

So here we are, at a crossroads. More and more players are entering the AI market to make machine learning cheaper and easier for you. No one is promising to make it more accurate, or proving to you that it will directly deliver more value to your business. However, many are now keen and able to sell you packaged, more tightly integrated solutions so you can at least start to look into your complex data and see if there is anything there. That is a good thing.

Finally, the real remaining problem with “the middle” is that it is often too small for the large players, and by definition not detailed or complex enough for them. For the small players, however simple and easy to use the toolkits try to be, they may still end up being just slightly too complex.

“Easy AI” is clearly a rapidly evolving and very interesting part of our new AI landscape. The Next Platform will be at GTC next week, where we will continue to examine in more detail the many players in this new ecosystem.

Dr. James Cuff

Distinguished Technical Author, The Next Platform

James Cuff brings insight from the world of advanced computing following a twenty-year career in what he calls “practical supercomputing”. James initially supported the amazing teams who annotated multiple genomes at the Wellcome Trust Sanger Institute and the Broad Institute of Harvard and MIT.

Over the last decade, James built a research computing organization from scratch at Harvard. During his tenure, he designed and built a green datacenter, petascale parallel storage, low-latency networks and sophisticated, integrated computing platforms. More importantly, however, he built and worked with phenomenal teams of people who supported our world’s most complex and advanced scientific research.

James was most recently the Assistant Dean and Distinguished Engineer for Research Computing at Harvard, and holds a degree in Chemistry from Manchester University and a doctorate in Molecular Biophysics with a focus on neural networks and protein structure prediction from Oxford University.
