In part one of our series on reaching computational balance, we described how computational complexity is increasing exponentially. Unfortunately, data and storage follow an identical trend.

The challenge of balancing compute and data at scale remains constant. Because neither providers nor consumers have access to “the crystal ball of demand prediction”, the computational response to vast, unpredictable volumes of highly variable, complex data ends up unplanned.

We must address computational balance in a world barraged by vast and unplanned data.

Before starting any discussion of data balance, it is important to remind ourselves of scale. Small “things”, when placed inside a high-speed computational environment, rapidly become significantly “bigger things” that immediately cease to scale.

Consider the trivial task of computationally generating all possible 10-character passwords containing only lowercase letters and numbers. One can quickly imagine the size of the resulting output. It isn’t a challenging problem. The first password hashes in UNIX used a DES-based scheme and were limited to the first 8 ASCII characters. Ten characters should be trivial.

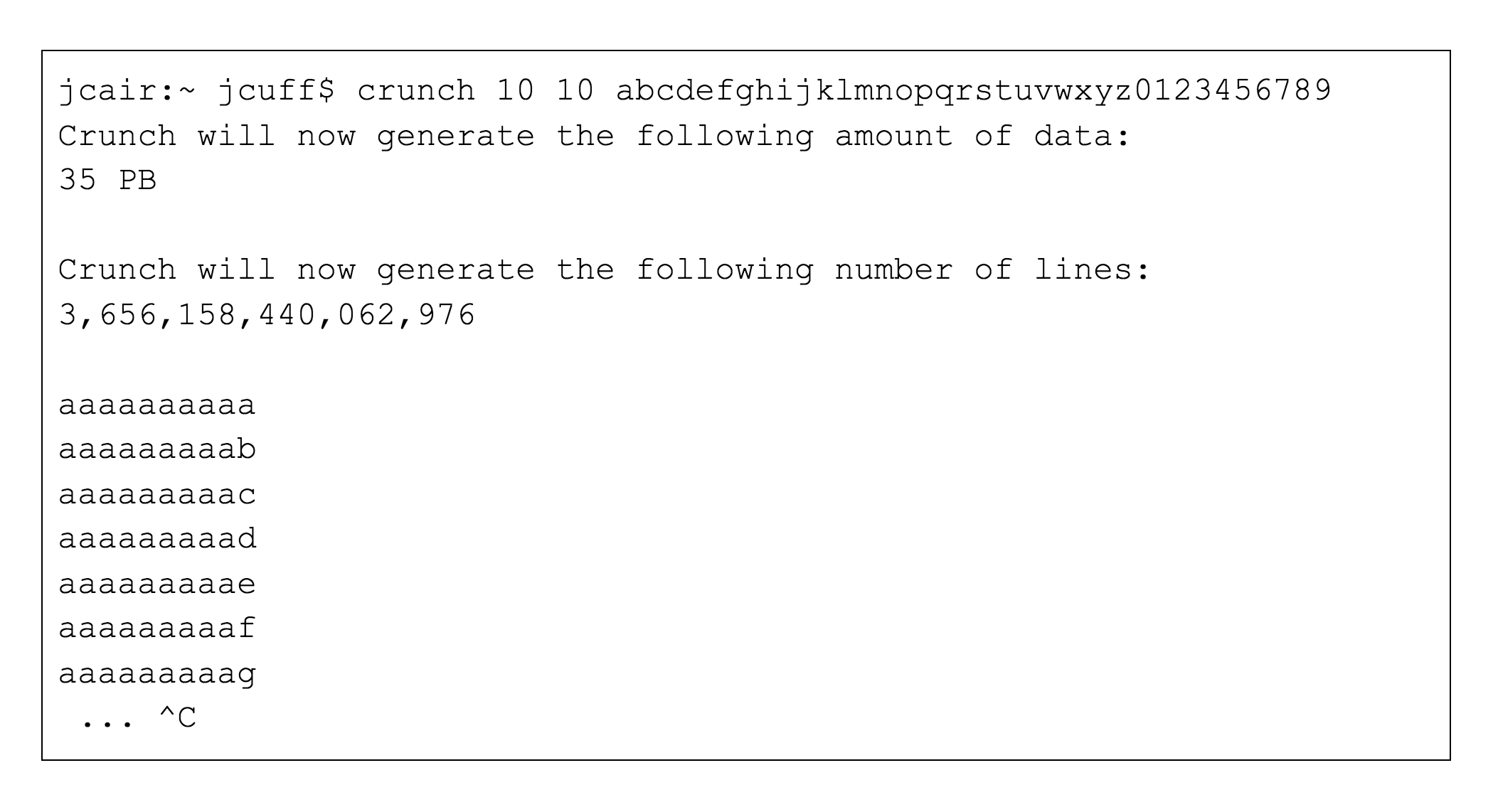

Applying the UNIX command “crunch” to generate all possible 10 character long passwords:

You would need 35 petabytes of storage to hold the 3,656,158,440,062,976 possible 10-character passwords. A total of 36,577 average 1TB laptop hard drives would be needed to store the file. At 200MB/s it would take 53,270 hours, or about 6 years, to write out the file, not including the time needed to swap out 36,577 disk drives.
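These figures can be reproduced with a few lines of arithmetic. The sketch below is ours, not crunch output, and it rests on two assumptions that the quoted numbers appear to imply: each password is written on its own line (10 characters plus a newline, so 11 bytes), and sizes are counted in binary units (1TB as 2^40 bytes, 1MB as 2^20 bytes).

```python
# Back-of-the-envelope check of the password example.
# Assumptions (ours): one password per line (10 characters + 1 newline byte)
# and binary units for PB, TB and MB.

ALPHABET_SIZE = 26 + 10                 # lowercase letters plus digits
PASSWORD_LENGTH = 10
BYTES_PER_LINE = PASSWORD_LENGTH + 1    # password plus trailing newline

count = ALPHABET_SIZE ** PASSWORD_LENGTH
total_bytes = count * BYTES_PER_LINE

petabytes = total_bytes / 2**50
drives_1tb = total_bytes // 2**40       # whole 1TB drives filled
seconds = total_bytes / (200 * 2**20)   # sustained 200MB/s write rate
hours = seconds / 3600
years = hours / (24 * 365)

print(f"passwords:   {count:,}")
print(f"storage:     {petabytes:.1f} PB")
print(f"1TB drives:  {drives_1tb:,}")
print(f"write time:  {hours:,.0f} hours (about {years:.1f} years)")
```

Changing `PASSWORD_LENGTH` to 11 or 12 shows how quickly the same "small thing" moves from petabytes into exabytes.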

Was that your first guess?

Small things quickly become bigger things.

This trivial example shows that very small things have the potential to become unexpectedly, and significantly, larger things. Don’t try this at home. However, data sets in analytics, research and science can quickly look a whole lot like this apparently trivial example.

Generating initial condition sets to understand how galaxies would collide in the early universe, for instance, turns out to be a very similar storage-bound challenge. Large sparse matrices are generated computationally in memory, in parallel, in a few seconds, resulting in huge outputs; the popular supercomputer-powered Illustris project is one example. Such workloads need carefully designed I/O paths to manage all the resulting write operations. Weather and climate modeling algorithms face a similar challenge in generating appropriate “initial conditions”, which are then computed against in parallel.

Our password example is a simple streaming application. Few if any real world workloads would ever consider a single stream as their sole data path. Seeking into datasets and taking out pieces requires reading, computing and writing at the same time. It is essentially “the walking and chewing gum” challenge of modern computation.

As computing tasks started to be distributed among multiple discrete devices to increase throughput and performance, storage systems needed to adapt quickly. Many-to-one architectures for shared storage simply do not scale. Even with our trivial password streaming example, you can imagine thousands of machines all trying to write simultaneously to a single storage instance. It would quickly become overwhelmed.

Becoming parallel

To solve the issue of storage being inundated by parallel requests, we designed our filesystems to also be parallel. Storage evolved from single network-attached shared volumes, to clustered file systems, to parallel file systems, and on to massively distributed object-based systems. Each evolution brought more complexity, and each needed to track billions of data objects and their state while simultaneously trying to achieve performance and balance.

Storage evolution didn’t happen overnight. Early systems dating back to the 1960s, such as the cutely named Incompatible Timesharing System (ITS), had a functional concept of shared storage. ITS machines were all connected to the ARPAnet, and a user on one machine could perform the same operations with files on other ITS machines as if they were local files. In 1985 we saw Sun build the now ubiquitous NFS “Network File System”. The Next Platform recently discussed the evolution of NFS, now on its fourth major iteration. The NFS we know and love is here to stay.

The critical issue with storage is that it fails. Early systems architects quickly realized that without functioning and available storage, any computing was effectively useless. Clustered storage systems were assembled so that “failover” replaced the ever more common and undesired “fall over”. It is here where things become very tricky, very quickly.

On shared clustered systems, reads and writes in flight during a failure event needed to be immediately reconciled by distributed lock management for “consistency” and “concurrency”. Systems with quorum voting schemes were designed to solve the “queenio cokio” issue: knowing which node effectively “had the ball” and was allowed to issue the update. It was a required, but highly inefficient, choke point.
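The quorum idea can be illustrated in a few lines. This is a deliberately simplified majority-vote sketch, not the protocol of any particular clustered file system; the function names `has_quorum` and `may_issue_update` are hypothetical.

```python
# Simplified majority quorum: a node may issue an update only while its side
# of the cluster can still reach a strict majority of all nodes.

def has_quorum(cluster_size: int, reachable_nodes: int) -> bool:
    """True when reachable_nodes is a strict majority of cluster_size."""
    return reachable_nodes > cluster_size // 2


def may_issue_update(cluster_size: int, partition: set, node: str) -> bool:
    """A node 'has the ball' only if its partition holds quorum."""
    return node in partition and has_quorum(cluster_size, len(partition))


# A five-node cluster split 3/2 by a network failure.
majority_side = {"a", "b", "c"}
minority_side = {"d", "e"}

print(may_issue_update(5, majority_side, "a"))  # True
print(may_issue_update(5, minority_side, "d"))  # False
```

Every update has to wait for this vote to be settled, which is where the choke point comes from.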

More time passed. Enter the distributed file system and the concept now known as “eventual consistency”. It turns out that if you don’t care about strict consistency, you can eliminate a huge component of file system complexity. Distributing the workload across multiple devices without needing any messy “quorum” rapidly improves performance, as a key bottleneck is simply removed. Yes, you lose only state and can no longer prove that a write is consistent at a given moment in time, but that doesn’t always matter.
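A toy model makes the trade concrete. The sketch below assumes a last-writer-wins rule with caller-supplied timestamps, which is only one of several ways real systems reconcile replicas; the `Replica` class and its methods are hypothetical.

```python
from typing import Optional

# Toy eventually consistent store: each replica accepts writes locally with no
# quorum, and replicas converge later by exchanging state (last-writer-wins).

class Replica:
    def __init__(self) -> None:
        self.data = {}   # key -> (timestamp, value)

    def write(self, key: str, value: str, timestamp: int) -> None:
        """Accept a write immediately; no coordination with other replicas."""
        current = self.data.get(key)
        if current is None or timestamp > current[0]:
            self.data[key] = (timestamp, value)

    def read(self, key: str) -> Optional[str]:
        entry = self.data.get(key)
        return entry[1] if entry else None

    def sync_from(self, other: "Replica") -> None:
        """Anti-entropy pass: adopt any newer entries held by another replica."""
        for key, (timestamp, value) in other.data.items():
            self.write(key, value, timestamp)


r1, r2 = Replica(), Replica()
r1.write("block-7", "old", timestamp=1)
r2.write("block-7", "new", timestamp=2)

print(r1.read("block-7"), r2.read("block-7"))  # old new  (replicas disagree)
r1.sync_from(r2)
r2.sync_from(r1)
print(r1.read("block-7"), r2.read("block-7"))  # new new  (converged)
```

Between the write and the sync, a reader of `r1` sees stale data. That window is the price paid for removing the vote.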

The hyperscalers quickly realized these benefits. They also threw away POSIX file semantics by using HTTP protocols to “POST” and “GET” data objects. Alas, decades of POSIX-aware software is still challenged by these changes, as it continues to expect “fread()” and its friends to exist.

Engineering teams were not daunted by this; they built “gateways”: effectively, high-performing data caches sitting between the end user and large web-based object stores. Unfortunately, if not executed well, this gateway approach brings us right back to square one by once again having a “gate” through which we march our complex data. What was old is now new again.
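As a rough illustration of the gateway pattern, the sketch below puts a small read-through LRU cache in front of an in-memory stand-in for an object store. Both classes are hypothetical and exist only for this example; a real gateway would speak HTTP to the store and handle writes, invalidation and concurrency.

```python
from collections import OrderedDict


class ObjectStore:
    """In-memory stand-in for a remote, HTTP-style object store."""

    def __init__(self) -> None:
        self._objects = {}
        self.get_calls = 0          # counts simulated round trips to the store

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        self.get_calls += 1
        return self._objects[key]


class Gateway:
    """Read-through LRU cache offering byte-range reads over whole objects."""

    def __init__(self, store: ObjectStore, capacity: int = 2) -> None:
        self.store = store
        self.capacity = capacity
        self._cache = OrderedDict()

    def read(self, key: str, offset: int, length: int) -> bytes:
        if key in self._cache:
            self._cache.move_to_end(key)         # mark as most recently used
            data = self._cache[key]
        else:
            data = self.store.get(key)           # cache miss: fetch whole object
            self._cache[key] = data
            if len(self._cache) > self.capacity:
                self._cache.popitem(last=False)  # evict least recently used
        return data[offset:offset + length]


store = ObjectStore()
store.put("results.dat", b"0123456789")
gateway = Gateway(store)

first = gateway.read("results.dat", 2, 4)
second = gateway.read("results.dat", 0, 3)
print(first, second, store.get_calls)  # b'2345' b'012' 1
```

Note that every request still passes through the single `Gateway` object, which is exactly the “gate” described above.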

Hope was not lost. More time passed, and now one of the most ubiquitous file systems in HPC, Lustre, enters the scene. It looks like a distributed file system, but provides us with the warm and familiar comforts of POSIX we have grown to love. The clever separation and routing of “metadata” and the distribution of physical data objects make it possible to build extremely high-performing filesystems that can handle the barrage of data from tens of thousands of nodes.

The development effort that a number of experts and practitioners have put into Lustre software over 14 years is now reaping significant benefits for the community. Couple this clever software with ever more highly performing flash storage and super low latency interconnects that increase the number of I/O operations you can do per second, and you are basically off to the races.

Deploying multiple metadata targets (MDT) on top of metadata servers (MDS) to “index” and reference the highly distributed and striped object storage targets (OST) has resulted in a file system that not only scales but can also provide huge capacities, up to a theoretical 16 exabytes, with 100 petabyte systems found in production today. Intersect360 stated that 24% of 317 HPC systems in 2017 used Lustre or a derivative, with deployments of IBM’s Spectrum Scale (GPFS, rebranded in 2015) taking up another 24%. High-performing distributed POSIX file systems are clearly deployed and enjoyed by our community.
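Striping is what lets many clients write to many targets at once. The sketch below shows a simplified round-robin layout, assuming a fixed stripe size and stripe count and a file that starts on target 0; the `locate` function is a hypothetical helper, and real Lustre layouts offer more options than this model captures.

```python
# Simplified round-robin striping: map a byte offset in a file to the storage
# target that holds it and the offset within that target's object.

MIB = 1024 * 1024


def locate(offset: int, stripe_size: int, stripe_count: int) -> tuple:
    """Return (target_index, offset_within_target_object) for a file offset."""
    stripe_number = offset // stripe_size            # which stripe of the file
    target_index = stripe_number % stripe_count      # round-robin over targets
    full_rounds = stripe_number // stripe_count      # stripes already on target
    object_offset = full_rounds * stripe_size + offset % stripe_size
    return target_index, object_offset


# A file striped across 4 targets in 1 MiB stripes.
print(locate(0, MIB, 4))             # (0, 0)
print(locate(3 * MIB + 10, MIB, 4))  # (3, 10)
print(locate(5 * MIB, MIB, 4))       # (1, 1048576)
```

Because consecutive stripes land on different targets, a large sequential write is spread across all of them, while the metadata side only has to record the layout rather than touch the data itself.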

On Costs and Religion

So we are all set, right? Not quite. It turns out that storage systems have significant “costs”, not only in terms of the cost of “I/O”, but more directly in terms of $/TB/operation. Engineering storage systems to provide “unlimited capacity” while maintaining high levels of performance is the largest set of headaches an HPC support team will have today.

The analogy to Formula 1 or NASCAR is fairly obvious here. Their goal is to design a purpose-built vehicle that travels at high speed without component or driver failure. The similarities to high performance storage should not be underestimated. Storage systems are fraught with potential risk, danger and expense.

Fortunately, thanks to a wealth of available storage solutions, you can effectively “buy yourself out of trouble” and throw money at your issue. A large number of proprietary, highly available and high-performing storage systems now exist. We are spoiled for choice but, as with anything, nothing in life comes for free.

But wait. Open source is free, right?

Commodity off-the-shelf hardware coupled with open source storage subsystems that can be continually tweaked and improved by you and the community provides what appears to be excellent value for money. Free software. Cheap hardware. It just seems somehow perfect. Until you factor in the expert humans and technical know-how needed to support complex, fragile boutique storage systems. In large shared user systems, the storage needs to be continuously available, and you simply can’t spend time fiddling with it while people are trying to run important workloads. Again, it is an issue of balance.

There will always be two camps in the storage debate, forever separated by an almost “religious” chasm. “On prem or cloud” is another heavily debated topic with mostly similar outcomes. Our intent here is not to convince you one path is better than the other, only to accurately describe the landscape and show that, as with everything in technology selection, the correct answer is: “It depends”.

Data Chaos

So what goes wrong with storage, and why should we worry about failure? Any product, be it from a multi-billion-dollar global company or a nifty new block storage system fresh out of a research lab, is “sold”. Humans can’t help but describe technology and “sell it” in glowing terms. There is distinct ownership of ideas and concepts, and we love to describe them in positive detail. Rarely, if ever, does the conversation turn to potential issues with an idea, product or service. Imagine visiting your local car dealership and being told what potential problems there are with the new vehicle you are thinking about purchasing. It will not happen.

To restore balance, especially in storage, we need to have an open and candid conversation about how systems perform in the real world so they can be best matched to workload. When Adrian Cockcroft and team architected the systems needed to manage the data deluge in the technology group at Netflix, they rapidly realized that “failure was indeed an option”.

Unplanned data balance

To understand failure, the Netflix group built “Chaos Monkey” (now on version 2). This is, quite literally, intentionally destructive software that randomly wanders through their infrastructure terminating instances, essentially creating computational and data havoc. Chaos Kong took this to a whole new level by “terminating” entire data centers. That’s bold. All of this effort was made so the team could understand the impact of failure and what would happen to their data intensive global service. This is a wonderful case study not only in how to manage unplanned data balance but, more importantly, in extreme technical transparency. We need more of this.

So imagine you’re building a high performance file system… What would be the practical questions to ask yourself, your team or your storage vendor? We provide ourselves with thousands of “benchmarks” to measure systems against hypothetical workloads. SPEC SFS and others all go a long way to describe the “speeds and feeds” associated with storage. IOPS, TB/s, resilience, endurance, security, management, $/TB, the list goes on. But a more meaningful set of questions needs to be asked. This is why here at The Next Platform we dive deeply, in unbiased long form detail, into claims and commentary to provide a meaningful and thoughtful analysis of systems and products. We do this work so you aren’t caught out, or in any way surprised, as in our earlier 35 petabyte “password” example.

The third part of “Practical Computational Balance” will focus on software challenges. Algorithms and code sit on top of all the storage systems and computing infrastructure we carefully select, design and build. Balance there can only be achieved through careful and considerate integration of software, hardware and humans.

About the Author

Dr. James Cuff

Distinguished Technical Author, The Next Platform

James Cuff brings insight from the world of advanced computing following a twenty-year career in what he calls “practical supercomputing”. James initially supported the amazing teams who annotated multiple genomes at the Wellcome Trust Sanger Institute and the Broad Institute of Harvard and MIT.

Over the last decade, James built a research computing organization from scratch at Harvard. During his tenure, he designed and built a green datacenter, petascale parallel storage, low-latency networks and sophisticated, integrated computing platforms. However, more importantly he built and worked with phenomenal teams of people who supported our world’s most complex and advanced scientific research.

James was most recently the Assistant Dean and Distinguished Engineer for Research Computing at Harvard, and holds a degree in Chemistry from Manchester University and a doctorate in Molecular Biophysics with a focus on neural networks and protein structure prediction from Oxford University.
