Two important changes to the datacenter are happening in the same year—one on the hardware side, another in software. And together, they create a force big enough to blow away the clouds, at least over the long haul.

As we covered this year from a datacenter (and even supercomputing) point of view, 2018 is the time for Arm to shine. With a bevy of inroads into commercial markets from the high end all the way down to the micro-device level, the architecture presents a genuine challenge to the processor establishment. And now it is coupled with the biggest trend since cloud or big data (both of which, ironically, could be displaced in various ways by this contender): machine learning comes steamrolling along, changing the way we think about the final product of training, which is inference.

Deep learning training has a clear winner on the hardware side. There is little doubt the GPU reigns supreme here, and it has in fact spurred the various cloud providers to rush to the latest GPU offerings to serve both high performance computing and training needs. Google’s TPU and the other architectures have a role here too, but the mainstream trainers (if there are enough of them yet to constitute a middle ground) are Nvidia GPU-centric.

The story for inference is not so clean and easy. This is for a few different reasons, including the big one: the compute needed for training is dramatically different from what inference requires. Ultra-low power and reduced precision are key characteristics, and accordingly a host of existing device types have moved in to fill demand and gotten a new lease on life: DSPs, FPGAs, and very small custom ASICs that once had only very domain-specific markets to fill. All of these (and little GPUs too) have a chance.
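To make "reduced precision" concrete, here is a minimal sketch of one common approach, symmetric 8-bit quantization of trained weights. It is an illustration only, not something described by Arm or tied to any particular chip; the function names and the sample weights are hypothetical.

```python
def quantize_int8(weights):
    """Map float weights to integers in [-127, 127] with one shared scale."""
    max_abs = max(abs(w) for w in weights)
    # Assumption: a single per-tensor scale; real toolchains often use finer granularity.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the 8-bit integers."""
    return [v * scale for v in quantized]


# Hypothetical weights standing in for a slice of a trained network.
weights = [0.5, -1.27, 0.031, 1.0]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(quantized, scale, max_error)
```

Each weight now fits in one byte instead of four, at the cost of a rounding error of at most half the scale per weight. That trade of a little accuracy for much less memory and power is what makes small edge devices viable inference targets.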

But enter Arm and its growing presence. Recall that the company just rolled out big news around architectures and a machine learning-ready software stack, and we have all the makings of a major force in inference. As it turns out, it is one that already has significant reach into the many edge devices that are now being tasked with pulling some intelligence on the fly out of pre-trained networks.

For context about the market for inference, where Project Trillium (the news noted above) fits in, and what it all means for the fate of the datacenter we have known and loved all these years, we talked with Jem Davies, VP and GM for the machine learning group at Arm. Davies summed up how inference and ever-more compute at the edge will change the datacenter quite simply:

“The general trend is toward a distribution of intelligence. The idea that everything will be in a few megalithic datacenters in the world is already starting to give way. The scalability of centralizing everything just does not work any longer.”

For example, recall Google’s assertion a few years ago that if everyone with an Android phone used the search giant’s voice recognition for three minutes each day, it would mean doubling the number of datacenters. We have no insight (only guesses) into what kind of efficiencies the TPU delivers inside Google or what ROI in dollar terms the company gets out of the smattering of ad clicks or intelligence gleaned from each snippet of voice, but we’d have to guess the economics would break down rather quickly.

Generally, if you want to perform a certain amount of computation, it is cheaper to do it on an end device than in the datacenter, Davies explains. Of course, not all workloads can be compressed to fit into these devices, although fierce work is being done to add more memory (and do more with memory) on devices, both to keep as much of a trained network as possible available for edge inference when accuracy matters and to intelligently shed bits that are not critical for ultra-small devices. He argues that as software work lets us do more with less compute and memory, economics and the laws of physics will push workloads onto devices whenever possible to avoid cost and latency.

“In terms of pure volume, the datacenter is something of a minority sport. Yes, it is where the training is being done, but in inference terms, much of it is being done on Arm-based devices at the edge on CPU and GPU and all the way down to the microcontroller type devices.”

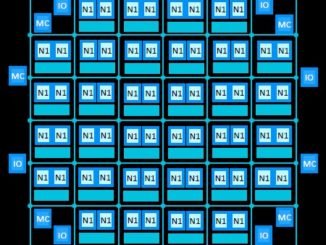

Arm will be working on scaling the devices it announced this week in both directions—all the way up to more robust inference chips for datacenters and down to ever-smaller form factors.

As for how more capabilities might be added to a datacenter-class inference chip that still maintains the performance and efficiency bar Arm-based devices set, we asked whether Arm might follow a course that puts memory at the center of compute, as deep learning chip startups that tackle inference have done. Davies says Arm is working on making intelligent use of memory capacity and bandwidth, but those approaches are a bit too bleeding edge for the market the company wants to capture: a mass market that totals in the billions of devices.

“The scale we project is that machine learning is a once-in-a-generation inflection point in computing. We will look back on this time as the pre-machine learning age because every time some researcher takes a classical computing method and applies it experimentally, they get better results—even in the most surprising areas.”

More on the Trillium hardware and software stack that fits across several deep learning frameworks here.
