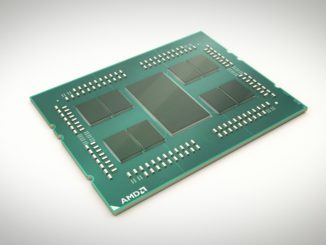

The HPC crowd got a little taste of the IBM’s “Nimbus” Power9 processors for scale out systems, juiced by Nvidia “Volta” Tesla GPU accelerators, last December with the Power AC922 system that is the basis of the “Summit” and “Sierra” pre-exascale supercomputers being built by Big Blue for the US Department of Energy.

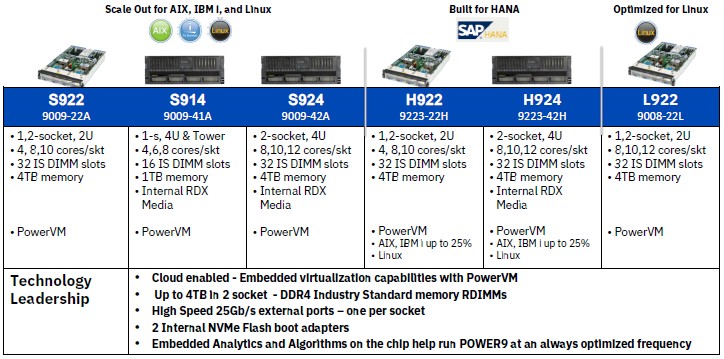

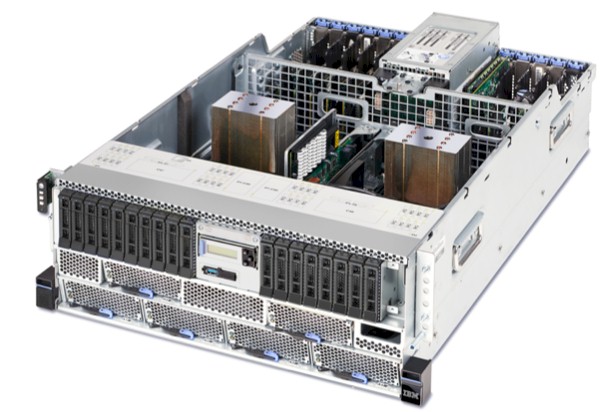

Now, IBM’s enterprise customers that use more standard iron in their clusters, and who predominantly have CPU-only setups rather than adding in GPUs or FPGAs and who need a lot more local storage, are getting more of a Power9 meal with the launch of six new machines under the Cognitive Systems banner.

IBM differentiated the hardware and the pricing of its Power8 and Power8+ with NVLink machines, depending on the workload and the competition, with its most aggressive pricing and a leaner and cheaper microcode and hypervisor stack reserved for the Linux workloads that the company is chasing. IBM very much wants to sell its Power-Linux combo against Intel’s Xeon-Linux duo, and also keep AMD’s Epyc-Linux tag team at bay. The Power8 chip had the advantage over the “Haswell” and “Broadwell” Xeon E5 processors when it came to memory capacity and memory bandwidth per socket, and could meet or beat the Xeons when it came to performance on a lot of workloads, too.

Because IBM has a captive base of customers using its IBM i and AIX platforms, it would charge a little bit more for compute, storage, and networking for them and therefore be able to charge a little less for customers buying its Linux-only variants. This way, IBM could compete to win Linux deals – particularly on data analytics and open source databases, its initial focus area – as well as on some traditional simulation and modeling workloads in the HPC and machine learning areas. While IBM has been successful on its own terms, with Linux driving about a quarter of Power Systems revenues as 2017 came to an end, a factor of 10X increase compared to a few years ago when the company started its Linux push in earnest on Power iron. But IBM wants more, and frankly, it needs more if it is going to be able to justify the considerable investment it makes in processor and system design.

We caught wind of IBM’s general plan for the Power9 rollout for enterprise customers, and told you all about it last week; the plan is pretty much as we had heard through the grapevine, with a twist that IBM is also rolling out two-socket machines specifically tuned for SAP HANA in-memory transaction processing workloads, and a slight price discount to make them competitive with Intel’s “Skylake” Xeon SP platforms. Here is the lineup of the six new machines that are being announced today and that will start shipping on March 20. IBM is still putting together the feeds and speeds and won’t have official comparative benchmarks on all of its SKUs until February 27. (This is one of the good things that IBM still does for its server customers that no one else does any more.)

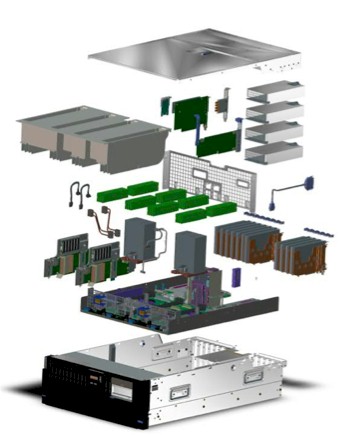

All of the machines are based on a basic system design that IBM has code-named “ZZ,” which is obviously short for the Texas rock band ZZ Top. We infer this because we know that the other Power9 machines that have yet to be announced are called “Boston,” “Zeppelin,” and “Fleetwood” internally at IBM – we told you about Fleetwood, which is a 16-socket NUMA machine based on IBM’s “Cumulus” Power9 variant and its “Mack” management console, back in October last year.

The ZZ machines, as well as the “Newell” design that is sold as the Power AC922 and the future Boston, Zeppelin, and Fleetwood machines, have some significant advantages over the current crop of X86 and ARM iron in the datacenter field. With these ZZ machines in particular, IBM is dropping memory buffering, which was used with the Power8 and Power8+ systems and which doubles up the capacity and bandwidth per socket, and that means memory cards or memory sticks do not have to be equipped with IBM’s “Centaur” memory buffer chip. Intel used memory buffering with its prior Xeon E7 lines, but dropped it for high-end Xeon SP Platinum SKUs last year, and both IBM and Intel dropped this because it adds to the cost and complexity even if it does sacrifice some memory capacity and bandwidth.

To be precise, with the prior generation of Power S822 and Power S824 scale out machines aimed at enterprise customers, which used the original Power8 chips, the machines had a peak memory bandwidth of 182 GB/sec per socket and delivered 173 GB/sec on the STREAM benchmark test. With the equivalent ZZ systems based on the Power9 Nimbus chip, the memory runs faster but is not buffered, and it works out to 153 GB/sec peak and 115 GB/sec sustained per socket on the STREAM test. If you compare that to a top-end Skylake Xeon SP-8180 with 2.67 GHz DDR4 memory, a two-socket box is delivering somewhere around 190 GB/sec with latencies on STREAM running at around 100 nanoseconds, and the knee in the curve where performance gets worse quickly tops out at around 225 GB/sec over a pair of sockets. So, conservatively, IBM can meet or beat Intel on memory bandwidth per socket. By the way, IBM is supporting standard DDR4 memory sticks running at 2.13 GHz, 2.4 GHz, and 2.67 GHz in these machines.

Because IBM it is supporting fatter memory sticks in these Power9 ZZ systems, IBM can cram up to 2 TB per socket, which is more than the 1.5 TB for the memory extended Xeon SP Platinum M chips and a lot more than the 768 GB of the standard Xeon SP parts. AMD Epycs, of course, support 2 TB per socket and have an advantage here, too, over Xeon SPs. The prior Power8 chips topped out at 1 TB per socket, even with buffered memory. To get to the 2 TB per socket maximum of the Power9 Nimbus chip, IBM has 16 memory slots per socket and requires 128 GB DDR4 memory sticks. These are very expensive indeed, even in the open market, and it seems far more likely that customers will fully populate their machines with 32 GB or maybe 64 GB sticks to hit 512 GB or 1 TB capacities per socket, and thereby maximize their memory bandwidth even if they are scrimping a bit on their memory capacity for budgetary reasons. Memory and flash prices are still high, and not coming down fast.

On the I/O front, with the machines support PCI-Express 4.0 peripherals as well as 25 Gb/sec OpenCAPI ports and (in the AC922, also NVLink ports), have an aggregate of 74 GB/sec of total bandwidth across the various fabrics and 80 GB/sec of PCI-Express (a combination of bandwidth 3.0 and 4.0); the prior Power8 machines had 38.4 GB/sec of fabric bandwidth on these other I/O ports (like CAPI) plus another 48 GB/sec across the PCI-Express 3.0 controllers.

We will be drilling down into the feeds and the speeds of these Power9 ZZ machines in a future story. But for now, we can give you some important highlights. Steve Sibley, vice president of offering management for the Cognitive Systems division at IBM, tells The Next Platform that unlike for Power8 iron, where IBM artificially lowered prices for memory, storage, networking, and other peripherals on its L and LC series of Linux-only Power Systems, with these ZZ systems, Big Blue is setting the prices for these components at the same level whether they run IBM i, AIX, or Linux on top of its PowerVM hypervisor or whether they run Linux bare metal on the OPAL microcode developed through the OpenPower consortium in conjunction with Google or its companion OpenKVM hypervisor. Moreover, IBM is no longer selling processor cards with a price and then activation fees to turn on each core; now, you buy a Power9 card and all of the cores are active and are part of the price. The PowerVM hypervisor is also bundled with the system – meaning both that it is there and its price is baked in, which is another way of saying either IBM cut the hardware price to cover the cost of the hypervisor or the hypervisor is now free. Sibley adds, however, that the Power9 ZZ machines running IBM i and AIX will have a slightly higher base server price compared to the L model that only runs Linux or the H model that mostly supports SAP HANA. How much, we have yet to calculate.

It is hard to say as yet if the Power9 chips have the advantage when it comes to compute performance, and it will take some time to figure it out.

With the Power9 chips, IBM is not doing what it and other chip makers have done to characterize their clock speeds, meaning providing a base clock speed and then a set of Turbo Boost speeds that are attainable under the right environmental conditions. Instead, IBM is offering a typical maximum CPU clock speed range based on a set of typical workloads and that is generated using the workload optimized frequency circuits and microcode that is built into the Power9 chip. We will be drilling into this feature some more, but the idea is to let the system figure out how best to optimize the clocks for the jobs and stay within a thermal envelope. IBM is shipping Power9 chips for the ZZ systems that have 190 watt, 225 watt, and 300 watt thermal design points. The Nimbus Power9 chips used in all of these machines have 4, 8, 10, or 12 cores activated, and they all have IBM’s SMT8 simultaneous multithreading active, which yields eight threads per core. The maximum clock speeds range from 3.4 GHz to 4 GHz. For the hyperscale and HPC crowd, IBM is using an SMT4 variant of the chip, which has four threads per core and which presents 24 cores per chip. We don’t think that IBM will deliver SMT4 versions of the “Cumulus” Power9 chips used in the Power9 machines with four or more processor sockets, and will instead stick to fat cores with lots of threads.

In general, running various enterprise workloads focused on online transaction processing, AIX, Linux, and IBM i customers can expect for a Power9 system with a like-for-like configuration of cores and memory to deliver somewhere between 1.3X and 1.5X more performance than the Power8 system it replaces. For Linux and AIX configurations, the ZZ machines will yield somewhere between 20 percent and 35 percent better bang for the buck than their Power8 equivalents; because IBM i includes a relational database bundled in, which adds significantly to the base system cost, the price/performance improvements here will be smaller because that database cost dominates the overall price of the system. These are not huge increases in price/performance, and certainly not over the four year span that separates the Power8 launch from the Power9 rollout. But that is the modern server business. Now, as Intel has shown with its Skylake Xeon SPs, the game has shifted to cramming as many features into the platform as can be done and charging a premium for them.

Wow! Impressive memory, bandwidth and I/O. Looking forward to see the results. It seems that IBM did a great job positioning the POWER9 as cloud, AI and IO ready! Are they available in any cloud? also, couple this with GPUs, accelerators and network cards via NVlink or OpenCAPI and the performance will be impressive compared to industry standard pci3.0 peripheral… lots of extra possibilities and value for the POWER9.

Well the Power9’s are first to use PCIe 4.0 and that should rate more attention from Folks at the Reg. But OpenCAPI means that AMD’s Vega Professional GPUs should(?) be usable owing to the fact that AMD along with IBM/Others are all founding Members of OpenCAPI, IBM get’s the most props in that call out for Being the creator of CAPI(Now OpenCAPI). So it’s by OpenCAPI or PCIe 4.0 that most Non Nvidia GPUs/Other accelerator products will be connected to Power9. And really everything IBM releted has Bluelink with the Power9 SO chip supporting 48 lanes of 25 Gb/sec BlueLink bandwidth per socket . So IBM will not be Limited to one GPU vendor and any OpenPower licensees if they can use PCIe 4.0 or OpenCAPI. And any PCIe 3.0 compliant GPU/Accelerator should be able to be slotted into a PCIe 4.0 slot if the hardware is PCIe standards compliant and that GPU card used at PCIe 3.0 bandwidth.

Looking at the current AMD Pro GPU offerings, I’d like to know more about AMD’s OpenCAPI support on any of its Radeon Pro WX/Radeon Instinct MI25 SKUs and that support for OpenCAPI and someone at the NextPatform really needs to ask AMD about any OpenCAPI suppport on their current generation Professional GPU Compute/AI Products. I guess it’s still too early on For Vega(The Vega 10 base die based Pro GPU variants at least) for that OpenCAPI support and Vega 20(New Base Die Tapeout on GF’s 7nm process node) really should support PCIe 4.0 as well as be backwards compatable with PCIe 3.0 if AMD/RTG is serious about things in addition to having OpenCAPI support ready for any Vega based GPU accelerator products.

AMD Really needs to up its whitepaper production(Authored by Actual PHDs and those with advanced degrees and not any technical marketing folks). Everything that AMD does or will be donig Coherent Connection Fabric wise on its processors(CPUs, GPUs, APUs/Others) will haved to be piped in/out/across via the Infinity Fabric so I’d imagine that any OpenCAPI support is simply a matter of attatching the supporting OpenCAPI controller functional blocks and assoicated controller firmware on the processor and tapping into the Infinity Fabric. Whitepapers help explain that and any other potential use cases and that’s where AMD is lacking relative to its competition. Nvidia sure has a nice complement of Whitepapers covering all sorts of subjects related to Nvidia’s Professional GPU compute/AI accelerator offerings.