Burst buffers are carving out a significant space for themselves in the HPC arena as a way to improve data checkpointing and application performance at a time when traditional storage technologies are struggling to keep up with increasingly large and complex workloads, including traditional simulation and modeling as well as newer ones such as data analytics.

The fear has been that storage technologies such as parallel file systems could become the bottleneck that limits performance, and burst buffers have been designed to manage peak I/O situations so that organizations aren’t forced to scale their storage environments to be able to support those peak moments – essentially creating a buffer between the compute and traditional storage layers.
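The sizing argument can be made concrete with a little arithmetic. The sketch below is only an illustration under stated assumptions: the function name and all of the figures (a 100 TB checkpoint, a 100-second burst, one checkpoint per hour) are hypothetical and do not come from any vendor or site. It compares the bandwidth the traditional storage layer must sustain when it absorbs the burst directly with what it must sustain when a buffer absorbs the burst and drains it before the next one arrives.

```python
def backend_bandwidth_gbps(burst_gb, burst_seconds, interval_seconds, use_burst_buffer):
    """Bandwidth (GB/s) the traditional storage layer must sustain.

    Without a buffer, the backend has to swallow the burst as fast as the
    compute nodes produce it. With a buffer, the backend only has to drain
    the same data before the next burst arrives.
    """
    if use_burst_buffer:
        return burst_gb / interval_seconds
    return burst_gb / burst_seconds


# Hypothetical workload: all numbers are made up for illustration.
BURST_GB = 100_000        # 100 TB written per checkpoint
BURST_SECONDS = 100       # time the application is willing to stall
INTERVAL_SECONDS = 3600   # one checkpoint per hour

without_buffer = backend_bandwidth_gbps(BURST_GB, BURST_SECONDS, INTERVAL_SECONDS, False)
with_buffer = backend_bandwidth_gbps(BURST_GB, BURST_SECONDS, INTERVAL_SECONDS, True)

print(f"backend sized for the peak:   {without_buffer:.1f} GB/s")
print(f"backend behind a buffer:      {with_buffer:.1f} GB/s")
```

Under these assumed numbers the buffer itself still has to accept the full burst rate and hold roughly one checkpoint, but the disk-based layer behind it can be sized for the average rather than the peak.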

We at The Next Platform have followed the development and use of burst buffer technologies over the past few years, including laying out the whys and hows of burst buffers and a discussion with Gary Grider at the Los Alamos National Laboratory, who invented the concept. There’s also been a push to expand the use of burst buffers beyond the niche of alleviating congestion caused by I/O issues, to roles such as file system accelerator or application optimizer.

At the recent SC17 conference, James Coomer, vice president of products with storage provider DataDirect Networks, said the perception of burst buffers has changed over the last few years, but that the need has stayed consistent.

“About five years ago, people thought, ‘Oh, these supercomputers are getting huge, are getting so many components we’re going to need to checkpoint this stuff,’” Coomer said during a presentation at the conference. “‘We can’t afford to checkpoint thing with disk, we can’t afford it for the capacity with flash, so let’s mix it up and have a thing we can write to very fast into flash burst buffer, and then we’ll also have a capacity system behind it made out of cheap hard drives.’ That was the original kind of idea behind burst buffers. We see it kind of differently now. It’s kind of evolved. I think pretty much everybody thinks about it like this now: It’s really a flash-based high-performance system, but more general than the burst buffer, which is a write thing. It’s write, it’s read, it’s random I/O – all that stuff.”

Historically, application developers have been asked to adjust their I/O patterns to match the state of file systems, which has meant block-aligned, sequential I/O with no random access and not too much metadata, he said. But workflows are getting increasingly complex.

“This increased sophistication in application behavior has consequences for the storage systems,” Coomer said. “We can no longer really expect all these application developers not only to think about their mode of physics, but their applications, about efficiencies, GPUs, CPUs, networks, and then, ‘By the way, make sure all your I/O is always block-aligned, sequential I/O.’ It’s getting a bit crazy, so we’re at DDN with our Infinite Memory Engine trying to build a storage system that really doesn’t care, so you can throw random stuff at it, you can not align things, you can do 4K I/O, you can make it random, and it won’t care. It’s a flash-based system that was designed to cope with a much broader set of workloads at top performance. And the other thing nobody will deny is that volumes are going up, so you’ve got to do this at scale. That’s the point. There’s no point in building a system this big that will serve only a few computers.”
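For readers less familiar with the terms, the "block-aligned, sequential" pattern that file systems have traditionally favored can be checked mechanically. The following is a minimal sketch, not anything taken from DDN's software: the `Request` type, the `classify` helper, and the 1 MiB block size are all assumptions chosen for illustration.

```python
from dataclasses import dataclass

BLOCK_SIZE = 1 << 20  # assumed 1 MiB file-system block; real systems vary


@dataclass
class Request:
    offset: int  # byte offset into the file
    length: int  # number of bytes transferred


def is_block_aligned(req, block_size=BLOCK_SIZE):
    """True when the request starts and ends on block boundaries."""
    return req.offset % block_size == 0 and req.length % block_size == 0


def classify(requests, block_size=BLOCK_SIZE):
    """Report whether a request stream is fully aligned and fully sequential."""
    aligned = all(is_block_aligned(r, block_size) for r in requests)
    # Sequential means each request begins exactly where the previous one ended.
    sequential = all(
        nxt.offset == cur.offset + cur.length
        for cur, nxt in zip(requests, requests[1:])
    )
    return {"aligned": aligned, "sequential": sequential}


# A stream a traditional parallel file system likes: whole blocks, in order.
friendly = [Request(i * BLOCK_SIZE, BLOCK_SIZE) for i in range(4)]

# Small 4K requests at scattered, partly unaligned offsets.
unfriendly = [Request(4096 * 7, 4096), Request(4096 * 2, 4096), Request(100, 4096)]

print(classify(friendly))
print(classify(unfriendly))
```

The first stream passes both checks; the second, made of small scattered 4K requests, fails both, which is the kind of traffic Coomer says a flash-based tier should be able to absorb without the developer having to restructure the application.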

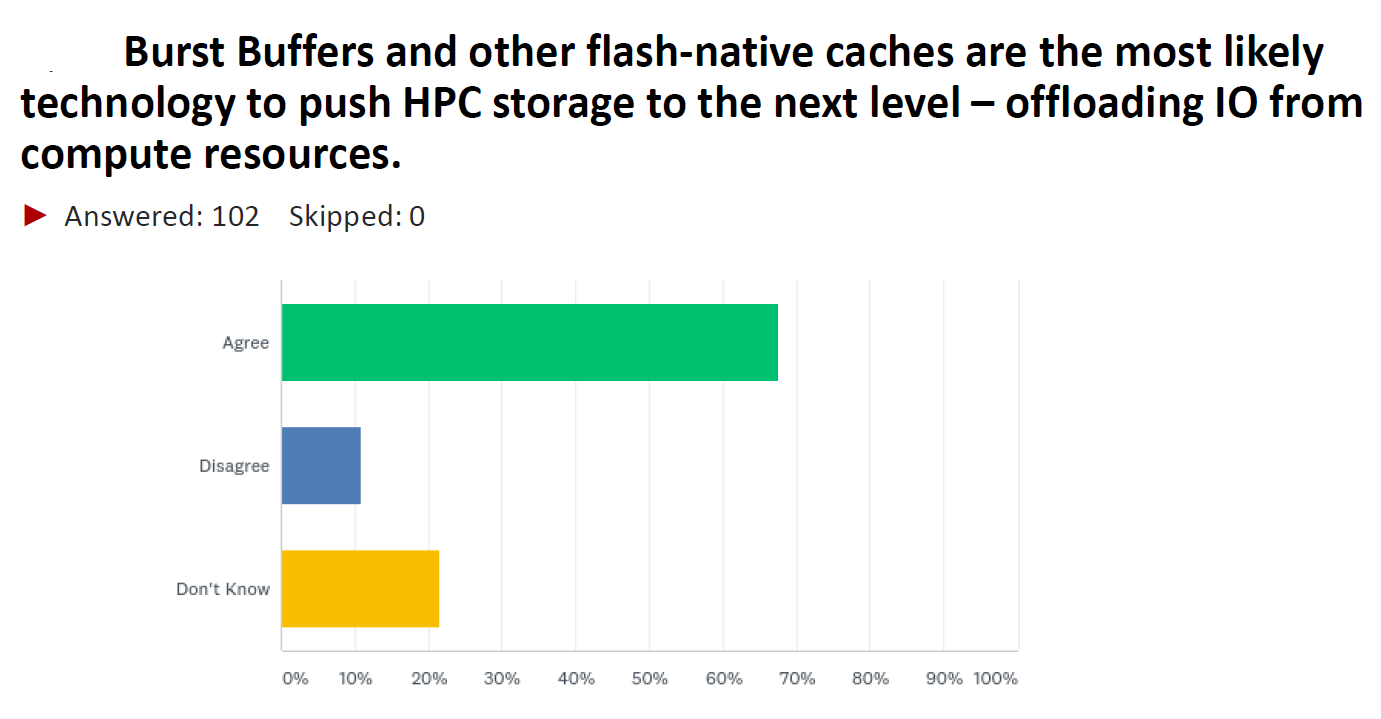

In its annual survey of the HPC space, DDN found that organizations are continuing to adopt flash-based storage – and are expanding how they use the technology – but that I/O workload performance continues to be a challenge. The company also found that burst buffers and similar flash-native caches that offload I/O from compute resources will be the key technology for improving HPC storage performance.

“I/O performance is a huge bottleneck to unlocking the power of HPC applications in use today, and customers are beginning to realize that simply adding flash to the storage infrastructure isn’t delivering the anticipated application level improvements,” said Kurt Kuckein, director of marketing for DDN. “Suppliers are starting to offer architectures that include flash tiers optimized specifically for non-volatile memory and customers are actively pursuing implementations utilizing these technologies.”

According to the survey, 56.4 percent of 101 respondents said that storage I/O is the number-one bottleneck in analytics workloads, while more than 67 percent said that burst buffers will be the technology that accelerates storage performance in HPC, with just over 10 percent disagreeing.

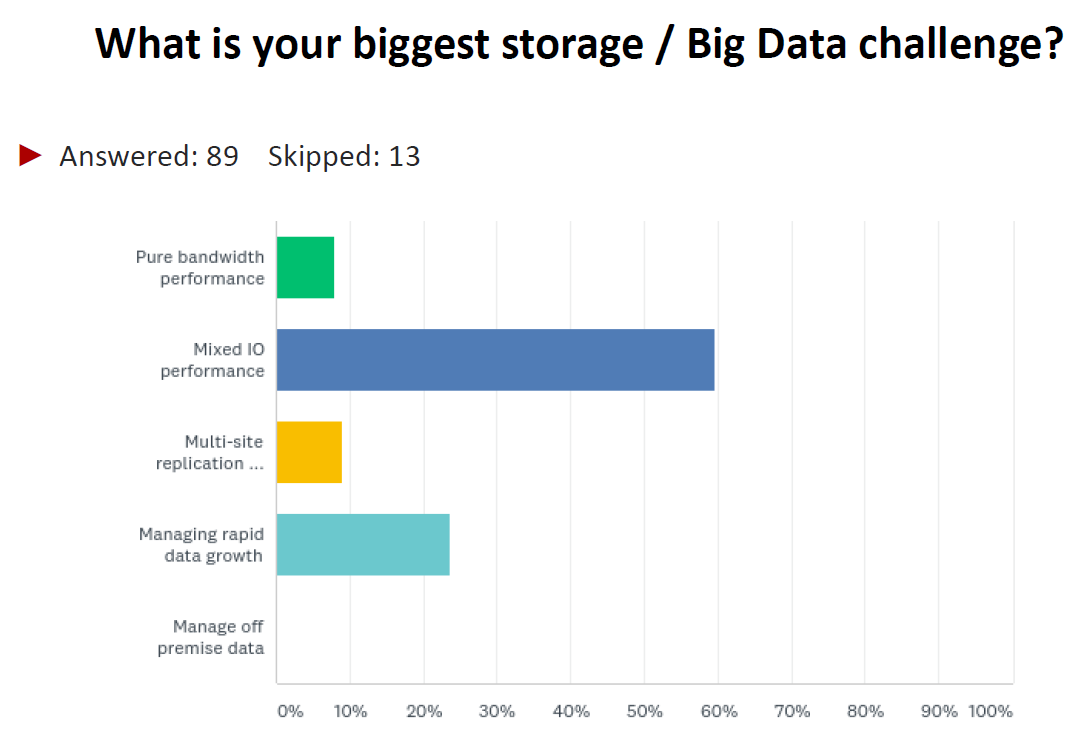

In a related question, respondents were asked about their biggest storage and big data challenge. Again, I/O was the top choice, with more than 59 percent pointing to mixed I/O. Another 23.6 percent said it was managing rapid data growth.

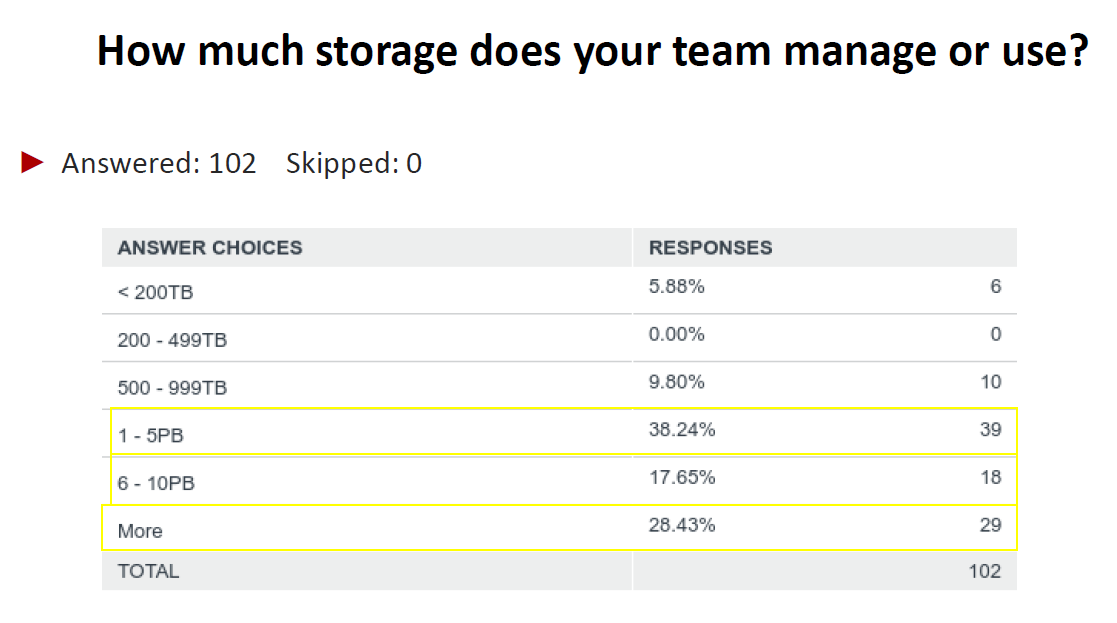

The amount of data being stored also is going up. Eighty-five percent of respondents said they manage or use more than a petabyte of storage – a 12 percent increase from the numbers in last year’s survey, with 28.4 percent managing more than 10 PB. Another 38.2 percent said they manage 1 PB to 5 PB.

Flash is increasingly the storage technology of choice. While about 90 percent of respondents said they were using flash storage to some degree in their datacenters – which is pretty much flat when compared with the survey last year – they are putting more of their data in flash. Currently more than 76 percent say that less than 20 percent of their data is stored in flash, while only 3.2 percent said that 30 to 40 percent of their data is in flash. However, by the end of next year, just under half of the respondents expect to have less than 20 percent of their data in flash, while 10.1 percent expect to have 30 to 40 percent of it in flash.
