If you want to get a microcosmic view of the epic battle between Ethernet and InfiniBand (which also includes Omni-Path no matter how much Intel protests) as they relate to high performance computing in its many modern guises, there is perhaps no better place to look than at what Mellanox Technologies is selling.

Mellanox, which has been peddling InfiniBand chips, switches, and adapters since the inception of this technology, bought its biggest rival in switch sales, Voltaire, for $218 million back in November 2010. And that was perhaps its smartest move right up to the moment when the company launched the SwitchX line of ASICs only a few months later in April 2011, marking its first foray into the high-end Ethernet switch arena.

The switch-hitting SwitchX chips took a latency hit when running the InfiniBand protocol, and actually ran a little bit slower on latency than 40 Gb/sec QDR InfiniBand with its native chips. But the SwitchX chips, which supported 40 Gb/sec data rates, and their follow-ons, the SwitchX-2 chips that debuted in October 2012 supporting 56 Gb/sec data rates, got Mellanox into Ethernet and down a track that, nearly seven years on, has the company’s Ethernet business now larger than its InfiniBand business. This has largely been the result of Mellanox courting the big hyperscalers and cloud builders of the world and the Tier 2 players that are trying to carve out their own niches to fight against them.

With the Spectrum line of chips launched in June 2015, Mellanox broke InfiniBand free of Ethernet so it could push the latencies back down on InfiniBand and better compete against Intel’s Omni-Path, an evolution of the QLogic InfiniBand business that Intel acquired in January 2012 for $125 million. InfiniBand, as you well know, is a favorite of HPC shops that run scientific simulations and models on parallel clusters as well as for commercial database and storage clusters where latency and bandwidth are both vital.

Ethernet, being a much fatter stack than InfiniBand because of the zillions of standards and protocols it has to support, cannot beat InfiniBand when it comes to latency, but it looks poised to step in front of InfiniBand in the race to 400 Gb/sec ports – unless Mellanox decides to keep them in lockstep. Mellanox, not Intel, is setting the pace for bandwidth in InfiniBand, with Intel’s own Omni-Path 1 generation hitting only 100 Gb/sec and no word on when the 200 Gb/sec Omni-Path 2 might see the light of day. While Intel bought a perfectly decent Ethernet switch chip maker called Fulcrum Microsystems back in July 2011 that made well regarded, low latency Ethernet switch chips, it has never delivered a follow-on to the FocalPoint ASICs, which were aimed at 10 Gb/sec Ethernet. (Which is perplexing, unless you think that sometimes big companies buy technologies to sit on them, thereby removing a threat.) Intel also bought the “Gemini” XT and “Aries” XC interconnects from supercomputer maker Cray for $140 million in April 2012, and bits of Aries are embedded in Omni-Path 1 and more is expected to be woven into Omni-Path 2.

The game with Ethernet is to please the hyperscalers and cloud builders who want disaggregated switches where they can install their own software and who want the highest bandwidth possible and are less concerned with chopping a few tens of nanoseconds off the latencies. They want cheap, dense, open switches and they want the cost per bit to keep dropping on a Moore’s Law curve or better. That is why Mellanox has been so aggressive about being first with 100 Gb/sec Ethernet with its Spectrum switches, and it is also why the company is pushing the bandwidth pedal to the metal with Spectrum-2, which will be ramping next year to 200 Gb/sec and 400 Gb/sec, depending on how you want to carve up the 6.4 Tb/sec of bandwidth the chip offers. On the InfiniBand side, the Quantum ASICs, which were unveiled last November and which will be shipping shortly, are rated at 200 Gb/sec HDR InfiniBand speeds. This chip has a whopping 16 Tb/sec of aggregate switching bandwidth and takes less than 90 nanoseconds for a port-to-port hop across the switch.
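To make the "carve up" arithmetic concrete, here is a minimal sketch that divides the 6.4 Tb/sec Spectrum-2 figure evenly across ports of a single speed. The function and constant names are hypothetical, and the even split is an assumption: real port configurations depend on how the ASIC's SerDes lanes are grouped, and vendors do not always count aggregate bandwidth the same way (one direction versus both), so the same division should not be applied blindly to other chips.

```python
# Illustrative only: how many ports a switch ASIC could expose if its
# aggregate bandwidth were split evenly across ports of one speed.
# The 6.4 Tb/sec figure is the one quoted for Spectrum-2 in the article;
# everything else here is an assumption made for the sake of the example.
SPECTRUM2_AGGREGATE_GBPS = 6400  # 6.4 Tb/sec expressed in Gb/sec


def ports_at_speed(aggregate_gbps: int, port_gbps: int) -> int:
    """Return the number of full-rate ports that fit in the aggregate bandwidth."""
    if port_gbps <= 0:
        raise ValueError("port speed must be positive")
    return aggregate_gbps // port_gbps


if __name__ == "__main__":
    for speed in (400, 200, 100):
        count = ports_at_speed(SPECTRUM2_AGGREGATE_GBPS, speed)
        print(f"{speed} Gb/sec ports: {count}")
```

Working in whole Gb/sec keeps the division in integers, which avoids floating-point surprises when the bandwidth is quoted as a decimal number of Tb/sec.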

The reason why we walked through all of those technology transitions in recent years and the competition that Mellanox is up against, particularly from Ethernet incumbents like Cisco Systems, Arista Networks, Juniper Networks, Hewlett Packard Enterprise, and Dell and from an Intel that is bent on getting a much larger share of the network budget, is to show that Mellanox is not just competing on technology, but getting more of the networking pie, ever so slowly, and despite some of the ups and downs that always plague networking.

The only market that might be tougher than networking is compute, which Intel more or less controls except for GPU offload (owned by Nvidia) and a smattering of RISC and mainframe iron that holds on because of legacy applications that are not easily moved off those platforms without creating tremendous risks for the companies that depend on them. Networks don’t have applications that are anything close to as sticky (or large in terms of the revenue streams) as the database applications in the corporate datacenter, so network vendors have to win each round of technology advances like they were new to the market.

That said, networking represents somewhere on the order of 20 percent to 25 percent of the budget for a modern distributed system, double what it was a decade ago, so performance, cost, thermals, and all of the normal factors that hyperscalers, cloud builders, and HPC shops look at come into play.

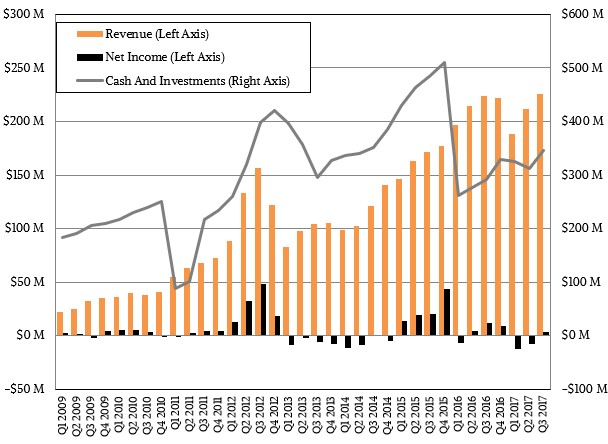

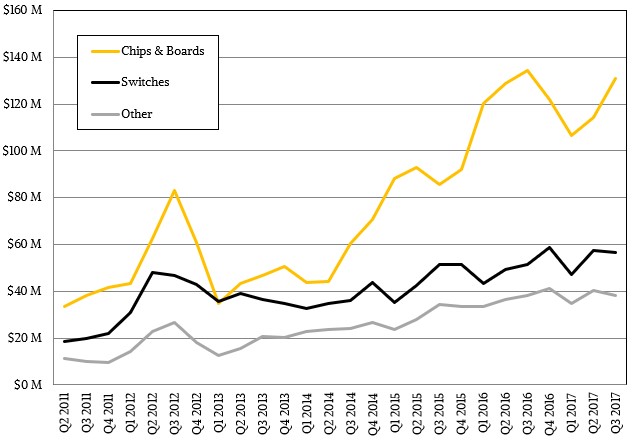

Mellanox is playing both sides of the switch game, and is selling the server endpoint (where it has had dominant market share for years because its ConnectX adapters switch hit between Ethernet and InfiniBand), the switches, and now the cables that link them all together. This strategy is paying off, and now the company is operating at a $900 million run rate, eight times larger than it was when it came out of the Great Recession and started doing acquisitions and its expansion out into Ethernet two years later.

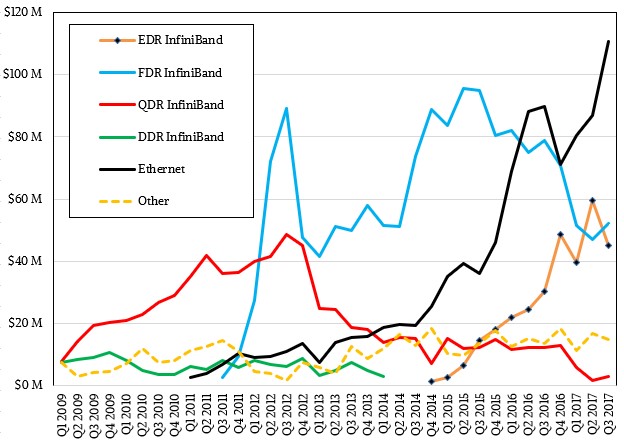

In the quarter ended in September, revenues at Mellanox were up seven-tenths of a point to $225.7 million, and after reporting slight losses in the first and second quarters, the company shifted into the black, bringing $3.4 million to the bottom line. Significantly for the quarter, Ethernet sales for Q3 were larger than InfiniBand sales – the first time that has happened in the history of the company. Look at the curves:

That does not mean that InfiniBand is not still growing. As is the case with all HPC technologies, you have to relate them to Intel (and sometimes IBM and Nvidia) compute product cycles because customers tend to upgrade clusters with new servers and new switches and adapters at the same time, and usually every three years. If processors are delayed – as was the case with Intel’s “Skylake” Xeon SP and IBM’s Power9 – then network equipment sales slosh around with them. That affects InfiniBand and Ethernet equally, if differently. The hyperscalers and cloud builders do some InfiniBand, but they are mostly Ethernet and perhaps will be increasingly so as the RDMA over Converged Ethernet (RoCE) capability of Ethernet keeps getting better and is good enough for a lot of workloads even if InfiniBand’s RDMA is leaner and meaner.

The second quarter was a good one for InfiniBand, Eyal Waldman, chief executive officer at Mellanox, explained on a conference call with Wall Street analysts, because the “Summit” and “Sierra” supercomputers being built by IBM for the US Department of Energy were set up with most of their networks at that time. Waldman elaborated and said that the CORL deal for these two machines, which are respectively at Oak Ridge National Laboratory and Lawrence Livermore National Laboratory and which are based on the current 100 Gb/sec EDR InfiniBand, accounted for tens of millions of dollars in revenues for Mellanox in the quarter ended in June, and another few million dollars in the quarter ended in September. As far as we know, IBM has shipped a rack or two of Power9 servers to these facilities and is planning to ramp up the installations during the fourth quarter. IBM won’t book revenues until the machines are shipped, and presumably not until they are qualified. Nvidia will be able to book the sales of its Volta GPU accelerators, which comprise a big part of the contract, as soon as it ships the devices to IBM, we presume, so there should be a big revenue bump for the GPU maker coming soon.

Thanks in large part to ConnectX adapters running Ethernet but also to growing switch sales, Mellanox posted $80.5 million in Ethernet sales in the first quarter, then $87 million in the second quarter, and finally $110.7 million in the third quarter. Across all speeds, InfiniBand represented $97 million in the first quarter, $108.1 million in the second quarter, and $100.2 million in the third quarter. Both InfiniBand and Ethernet products are expected to show growth in the fourth quarter and in the full 2018 year ahead, according to Waldman. Ethernet is growing faster, but the core HPC centers that opt for InfiniBand are expected to drive revenue growth; sales of embedded InfiniBand use cases behind parallel databases and storage are expected to slow, not least because these vendors want to use the same kind of network to face out to servers as they use to lash together clusters for databases and storage internally. If Ethernet didn’t provide decent latencies – usually still three or four times slower than InfiniBand – and high bandwidth, this would not be possible. But, ironically, with the Spectrum and Spectrum-2 ASICs from Mellanox, those customers that might have gone with InfiniBand can now go with Ethernet.
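For readers who want to check the crossover themselves, the quarterly figures quoted above can be run through a short sketch like the one below. The revenue numbers come straight from the article; the function names and the choice to measure quarter-over-quarter percentage change are our own illustrative assumptions, not how Mellanox reports its results.

```python
# Quarterly 2017 revenue by product line, in millions of dollars,
# as quoted in the article. Dicts preserve insertion order (Python 3.7+).
ethernet = {"Q1": 80.5, "Q2": 87.0, "Q3": 110.7}
infiniband = {"Q1": 97.0, "Q2": 108.1, "Q3": 100.2}


def sequential_growth(series):
    """Return percent change versus the prior quarter, keyed by quarter."""
    quarters = list(series)
    growth = {}
    for prev, cur in zip(quarters, quarters[1:]):
        growth[cur] = (series[cur] - series[prev]) / series[prev] * 100.0
    return growth


def first_crossover(challenger, incumbent):
    """Return the first quarter in which challenger revenue exceeds incumbent revenue."""
    for quarter in challenger:
        if challenger[quarter] > incumbent[quarter]:
            return quarter
    return None


if __name__ == "__main__":
    print("Ethernet passes InfiniBand in:", first_crossover(ethernet, infiniband))
    for name, series in (("Ethernet", ethernet), ("InfiniBand", infiniband)):
        for quarter, pct in sequential_growth(series).items():
            print(f"{name} {quarter}: {pct:+.1f}% sequential")
```

The sequential figures show why the lines cross: Ethernet accelerates in the third quarter while InfiniBand dips after a second quarter that was boosted by the Summit and Sierra shipments.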

It is always better to eat a part of your own business than lose it to a competitor, and this has been the strategic direction of Mellanox since 2009. The company saw this day coming, and prepared for it.

“We are seeing good design win momentum for our Spectrum family of Ethernet switches with key OEMs, hyperscale, and additional end customers,” Waldman explained on the call. “We anticipate that Spectrum revenue growth will accelerate in 2018 with the introduction of key customer product platforms as well as strong pipeline of future opportunity. We also expect growth of HPC and artificial intelligence in 2018. During 2018, we project InfiniBand storage-based revenues will improve as key OEM customers introduced new platforms to the market.”

InfiniBand, of course, will always find its niches, and we think it will carve out its place among GPU accelerated workloads like traditional HPC, as the Summit and Sierra machines demonstrate so well, but also for database, visualization, and machine learning workloads that also run on beefy clusters with GPU acceleration. This will very likely be more than enough to get Mellanox to break through the $1 billion barrier, a task that might take it 19 years since its founding in 1999. If Mellanox can get a fair share of the hyperscale and cloud build out, getting to that $2 billion level will not take quite as long. Arista Networks was founded in 2004 and started shipping products two years later.

It only took a little more than six years for Arista, a specialist in high speed switches, to break through $1 billion, money it took away largely from Cisco Systems and Juniper Networks. Arista was initially a user of both Fulcrum and Broadcom ASICs during the 10 Gb/sec Ethernet era, and moved on to Broadcom and Cavium/XPliant ASICs in the 100 Gb/sec generation. Why not Mellanox in the 200 Gb/sec and 400 Gb/sec eras? That would pump up the revenues for both companies. That was the deal between Mellanox and Voltaire – right up to the moment that Mellanox decided it wanted to have its chips and ship them in switches, too.

We know what you are thinking. Maybe Mellanox should buy Arista? Well, perhaps not. Arista has a market capitalization of $14.2 billion as we go to press, and Mellanox is sitting at a nice but considerably smaller $2.34 billion valuation by the investors on Wall Street. It is far more likely that a company like Juniper, which is losing share to Arista but which is still a $5 billion company (revenue) worth $9.5 billion (market cap), might think about buying Mellanox to bolster its sales, but as we have pointed out before, it is hard to make money – meaning, bring cash to the bottom line – in the HPC and hyperscale markets where Mellanox plays. That is just the way it is, and the idea is to perfect technologies there and sell them at higher margins to regular enterprises that won’t mind paying the premium.

That is the trick, and that is what Mellanox and every other company that has HPC roots and higher goals aspires to. Those Cisco margins are still pretty high, and everyone in networking is still chasing them.

And as we have pointed out before, if IBM really believed in the clustered systems business, it would buy Nvidia, Mellanox, and FPGA maker Xilinx and totally control its destiny in accelerated systems. That’s what Intel did when it bought all those networking businesses mentioned above plus FPGA maker Altera and neural net chip maker Nervana Systems.

IBM’s market capitalization is only a little larger than NVDA so that acquisition is not likely to happen!

Seems to me the headline is misleading: if the job can be done cheaper by Ethernet, use it; if it requires either the price/performance at leading edge speeds or the interface optimizations (and lower tail latency) of InfiniBand, then upsell the interconnect to InfiniBand.

A handful of other observations:

1. A potential huge advantage of Omni-Path over a standards-based InfiniBand implementation could occur if Intel embedded an Omni-Path using 25Gb or 50Gb serdes in a processor module in place of PCIe connections, while Mellanox was bottlenecked through slower PCIe serdes and had the added cost of HCAs. I think this would be seen as an anti-competitive move if Intel did so (without simply adding a typical HCA price to the CPU module price).

2. Mellanox is really, really good at optimizing and tuning the (user space) software stack and hardware interface at both ends of a connection. That’s where the CPU cycles are spent, and where the majority of latency is spent. Unless Intel magically supports the Cray PGAS or some other really low latency software interface over Omni-Path (yeah I know InfiniBand is messages, but everyone has a little shared memory window block on their InfiniBand interfaces) I think Mellanox will continue to lead here.

Nice article, but you got some numbers wrong. Juniper’s market cap is ~9.45B.

As for IBM buying Nvidia, at the current stage it seems financially not feasible: Nvidia has a market cap of ~124B, while IBM is at ~144B. Maybe 2.5 years ago, when Nvidia was 1/10 of its current value.

Adding Mellanox and Xilinx is another 20B … Not talking about premiums related to acquisitions …

I was talking revenue, but I clarified.

i think this article may also mislead your readers in one specific aspect. isn’t the ethernet you are talking about rdma based, i.e. RoCE? both infiniband and roce are rdma based. if a reader did not know that mellanox is engaged in rdma-based technology (for both ib and roce), they may read the article and get the wrong idea. just my 2 cents worth …