At the end of July, Oak Ridge National Laboratory started receiving the first racks of servers that will eventually be expanded to become the “Summit” supercomputer, the long-awaited replacement for the “Titan” hybrid CPU-GPU system that was built by Cray and installed back in the fall of 2012. So, technically speaking, IBM has begun shipping its Power9-based “Witherspoon” system, the kicker to the Power8-based “Minsky” machine that Big Blue unveiled in September 2016 as a precursor and a testbed for the Power9 iron.

Given that IBM is shipping Summit nodes to Oak Ridge and has also started shipping similar (but different) nodes to Lawrence Livermore National Laboratory for the companion “Sierra” supercomputer, we would have expected some sort of announcement of Power9 servers by now. Such an announcement hasn’t happened, but we are hearing rumblings that announcements could be imminent, perhaps in late September or sometime in October.

With Intel getting its “Skylake” Xeon SP processors out the door formally in July but shipping them since late last year, and AMD pushing both its “Naples” Epyc X86 server processors and companion Radeon Instinct GPU accelerators in a one-two punch that Intel cannot do, it would be good for IBM’s Cognitive Systems business for the Power9 systems to launch sooner rather than later. We know for sure that Power9 systems supporting IBM’s own AIX Unix and IBM i (formerly OS/400) proprietary OS/database platform will not be coming until early 2018, maybe March or April. But that does not mean IBM can’t launch Linux-only Power9 systems, most likely aimed at the lucrative HPC and AI markets, before the end of the year.

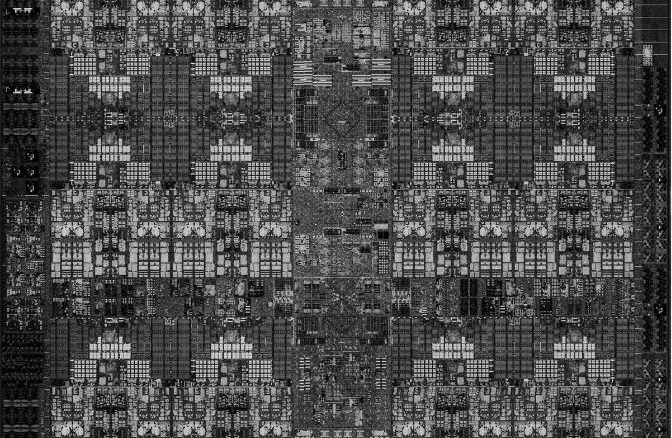

There are two flavors of Power9 chips, the “Nimbus” Power9 scale out processor for machines with one and two sockets and the “Cumulus” Power9 scale up processor for machines with four, eight, or sixteen sockets. We detailed all of the feeds and speeds, and the underlying architecture of the Power9 chips, back in August 2016, and we talked about how the hybrid approach embodied in Power9 would bring competition to datacenter compute and, more specifically, how NVLink and OpenCAPI (which are very closely related) and coherency across CPUs and GPUs inside of nodes would be transformative. The Minsky servers had native NVLink 1.0 ports, which ran at 20 Gb/sec per lane, but this coherency support was not ready for the Power8+ chips (we know IBM doesn’t call it that, but that is what it is). The NVLink 2.0 ports will run at the much faster 25 Gb/sec signaling per lane and will support hardware-assisted coherency across the memory spaces between the Power9 chip and the Volta (and maybe the Pascal) GPU accelerators. This coherency is a key part of IBM’s computing strategy, and support for it is in the early stages for Linux right now and will be more generally available early in 2018, as IBM explained to us at the ISC17 supercomputing event.

IBM has said since the middle of 2016, when it unfolded Power chip roadmaps out past 2020, that we should expect Power9 systems to ship in the second half of this year, and the presumption was not just for the Summit and Sierra machines, which are being paid for by the US Department of Energy, to get them, but for other organizations that want to deploy Power9 CPUs tightly coupled to Nvidia Tesla V100 GPU coprocessors through the NVLink interconnect, to also have them. This tight coupling is not possible with Intel Xeon or AMD Epyc processors because, thus far, neither has native NVLink ports on the CPU like the Power9 chip does.

IBM has a bunch of different machines using the Power8 and Power8+ processors, and we suspect that Big Blue and its motherboard and system partners, including a number of members of the OpenPower consortium, will have a slew of machines tricked out for the Power9 chips, too. IBM will no doubt roll out a bunch of Power9 machinery over the course of 2018, and possibly starting here in late 2017, but the exact feeds and speeds of this iron and the timing of its delivery under the Cognitive Systems brand (formerly known as Power Systems) have not yet been revealed. We are hearing things, however.

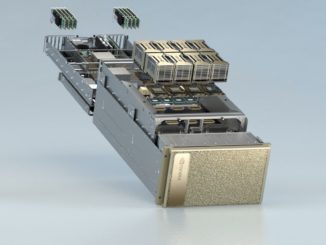

The Minsky system, which was also known under the code-name of “Garrison” when it was being developed, is sold as the Power S822LC for HPC by IBM and is actually made by Wistron, one of the big ODMs, and rebadged and resold by Big Blue. It has two Power8+ chips, with two NVLink 1.0 ports and four “Pascal” Tesla P100 accelerators in the SXM2 form factor mounted on the motherboard. Two of the Pascals link directly to each Power8+ chip through NVLink ports, and then the remaining NVLink ports are used to cross couple the GPUs in the system so they can share data at 80 GB/sec rates. This design, like a few other Power8 systems from the OpenPower camp, puts main memory on a riser card and the “Centaur” memory buffer chip and its integrated L4 cache memory onto that riser rather than on the memory DIMMs themselves, as IBM does for its own designs.
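
For readers who want to see where an 80 GB/sec figure can come from, here is a rough sketch in Python. It assumes three things the article does not state: 20 Gb/sec signaling per lane for NVLink 1.0, eight lanes per direction in each link, and two links ganged together between a pair of devices. The function name is ours, for illustration only.

```python
# Back-of-envelope NVLink bandwidth.
# ASSUMPTIONS (not from the article): 8 lanes per direction per link,
# 20 Gb/sec per lane for NVLink 1.0, and 2 ganged links between two devices.

def nvlink_gb_per_sec(gbit_per_lane, lanes_per_direction=8, links=1, bidirectional=True):
    """Aggregate bandwidth in GB/sec for a set of ganged NVLink links."""
    # Gb/sec per lane times lanes, divided by 8 bits per byte, times link count.
    per_direction = gbit_per_lane * lanes_per_direction / 8 * links
    return per_direction * (2 if bidirectional else 1)

# Two ganged NVLink 1.0 links, counting both directions.
minsky_pair = nvlink_gb_per_sec(20, links=2)

# A single NVLink 2.0 link at 25 Gb/sec per lane, counting both directions.
nvlink2_single = nvlink_gb_per_sec(25, links=1)

print(f"NVLink 1.0, two links: {minsky_pair:.0f} GB/sec")
print(f"NVLink 2.0, one link:  {nvlink2_single:.0f} GB/sec")
```

Under those assumptions the faster signaling alone lifts a single link from 40 GB/sec to 50 GB/sec in both directions combined; how many links are ganged per device is a system design choice.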

Spiritually, it looks like the Minsky/Garrison system is more linked to Sierra than to Summit. Lawrence Livermore has been less vocal about its Power9 server deliveries, but sources at the lab tell The Next Platform that it has received its first machines and that they do, as rumors have suggested, pair two Power9 processors with four Volta accelerators in each node. Lawrence Livermore has been quiet about the precise feeds and speeds of the machine, but has said that Sierra will deliver somewhere around 120 petaflops to 150 petaflops with total memory on the order of 2 PB to 2.4 PB and at around 11 megawatts of power consumption. That makes it about five times as energy efficient as the “Sequoia” BlueGene/Q system it replaces to help steward the nuclear weapons stockpile of the United States, with 5X to 7X the application performance of Sequoia. At 7.5 teraflops at double precision for each Volta coprocessor, and assuming that the Power9 chip has negligible math skills compared to the GPUs, that works out to 4,000 to 5,000 nodes for Sierra, and that also works out to a clean 512 GB of main memory per node if you do the math.
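
The node math is easy enough to check. The sketch below uses only the figures quoted above (7.5 teraflops per Volta, four GPUs per node, 120 to 150 petaflops, 2 PB to 2.4 PB of memory); the function names and the choice of decimal versus binary units are our own assumptions.

```python
# Rough estimate of Sierra's node count and memory per node.
# Inputs come from the article; helper names and unit conventions are illustrative.

VOLTA_DP_TFLOPS = 7.5   # double precision teraflops per Volta GPU
GPUS_PER_NODE = 4       # rumored Sierra node configuration

def nodes_needed(system_petaflops, gpus_per_node=GPUS_PER_NODE):
    """Nodes required to hit a peak petaflops target, ignoring CPU flops."""
    node_tflops = VOLTA_DP_TFLOPS * gpus_per_node
    return system_petaflops * 1000 / node_tflops

def memory_per_node_gb(total_pb, nodes, gb_per_pb=1_000_000):
    """Main memory per node in GB; pass gb_per_pb=1024**2 for binary units."""
    return total_pb * gb_per_pb / nodes

low_nodes = nodes_needed(120)
high_nodes = nodes_needed(150)

print(f"Nodes: {low_nodes:.0f} to {high_nodes:.0f}")
for total_pb, nodes in ((2.0, low_nodes), (2.4, high_nodes)):
    decimal = memory_per_node_gb(total_pb, nodes)
    binary = memory_per_node_gb(total_pb, nodes, gb_per_pb=1024**2)
    print(f"{total_pb} PB over {nodes:.0f} nodes: {decimal:.0f} GB (decimal), {binary:.0f} GB (binary)")
```

Depending on the unit convention, the per-node figure lands somewhere between roughly 480 GB and 525 GB, and 512 GB is the obvious DIMM-friendly configuration in that range.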

That memory capacity does not include the 16 GB of HBM2 memory on each Volta coprocessor. That HBM2 memory, which has a bandwidth of 900 GB/sec, is, as far as applications are concerned, the important thing. Programmatically speaking, the main memory on the Power9 processor is more like an L3 cache for the GPU once the coherency is turned on, and all of the other caches in the Power9 complex, including the L4 cache in the Centaur buffer chips, are really just staging for the GPUs except for the parts where they are doing serial work in C or Fortran.

As we have previously reported, the Summit supercomputer at Oak Ridge will pair two Power9 chips with six Volta GPU accelerators. Oak Ridge said that it would build Summit from around 4,600 nodes, up a bit from its previous estimate a few years back, and that each node would have 512 GB of main memory and 800 GB of flash memory. That’s 2.24 PB of main memory, 3.5 PB of flash memory, and about 431 TB of HBM2 memory across the cluster, which will be linked with 100 Gb/sec EDR InfiniBand. (The 200 Gb/sec HDR InfiniBand from Mellanox Technologies was not quite ready in time for the initial installations in July.) Those extra GPUs push the power envelope of the Summit machine up to around 13 megawatts, and they should deliver around 207 petaflops of peak theoretical performance (absent the Power9 floating point) at double precision. Oak Ridge had been planning for around 40 teraflops of performance per node, and it looks like it is getting 45 teraflops.
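
Here is a quick sanity check of those Summit aggregates, as a sketch built only from the per-node figures quoted above plus the 16 GB of HBM2 per Volta. We assume binary unit conversions (1,024 GB per TB), which is the convention that reproduces the 2.24 PB and 3.5 PB totals; the variable names are ours.

```python
# Summit aggregate capacity and peak performance from per-node figures.
# ASSUMPTION: binary conversions (1 TB = 1024 GB, 1 PB = 1024 TB).

NODES = 4600
GPUS_PER_NODE = 6
VOLTA_DP_TFLOPS = 7.5      # double precision teraflops per GPU
DRAM_GB_PER_NODE = 512
FLASH_GB_PER_NODE = 800
HBM2_GB_PER_GPU = 16

node_tflops = GPUS_PER_NODE * VOLTA_DP_TFLOPS        # per-node peak, teraflops
system_pflops = NODES * node_tflops / 1000           # system peak, petaflops

dram_pb = NODES * DRAM_GB_PER_NODE / 1024**2
flash_pb = NODES * FLASH_GB_PER_NODE / 1024**2
hbm2_tb = NODES * GPUS_PER_NODE * HBM2_GB_PER_GPU / 1024

print(f"Per node: {node_tflops:.0f} teraflops")
print(f"System:   {system_pflops:.0f} petaflops")
print(f"DRAM:     {dram_pb:.2f} PB")
print(f"Flash:    {flash_pb:.2f} PB")
print(f"HBM2:     {hbm2_tb:.2f} TB")
```

The per-node peak comes out in teraflops, not petaflops, and the HBM2 pool across the cluster is measured in hundreds of terabytes.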

What we are hearing on the street is that IBM’s Witherspoon kicker to the Minsky/Garrison system will support either four or six Volta GPU accelerators and is being used in both the Summit and Sierra boxes, which makes sense if you want to amortize the $325 million cost of the two systems across a single architecture. If this is true, that means, in theory, that Lawrence Livermore will be able to boost its per node performance by 50 percent just by adding GPU accelerators to empty slots, going from four Voltas to six.

If we had to guess, we would say that IBM will use a consistent naming convention, and replace the 8s in the Power Systems line with 9s in the Cognitive Systems line, and so this machine should be called the Cognitive Systems 922LC for HPC. And we are willing to bet that this will be the first machine IBM formally launches for other customers, and it will probably be sooner rather than later. It cannot let Intel and AMD breathe up all the processor oxygen, and it has to lay the groundwork for the Power9 rollout that will no doubt happen throughout 2018, including servers aimed at its core enterprise customers. This naming, and the very nature of the IBM product line, implies the Cognitive Systems S914, S922, and S924, with some L and LC variants, and the Cognitive Systems E850C, E870C, and E880C.

We expect that the Cognitive Systems 922LC for HPC will employ a chip with high core count and low threading, and make use of industry standard memory with no Centaur buffer chips to reduce cost. The Power9 chip is designed with 24 cores, and it will depend on yields at Globalfoundries using its 14 nanometer processes to see if the Summit and Sierra nodes, as well as the ones sold to others, get 20, 22, or 24 cores with SMT4 threading. This machine will no doubt come with a water cooled option, as did the Minsky/Garrison box, and it will be a Linux only machine with the open OPAL microcode that has been created under the auspices of the OpenPower consortium with search engine giant Google. We have a strong suspicion that the InfiniBand networking in this system will be a snap-in mezzanine card, allowing the two labs and any other customer using this machine to upgrade the network without having to change the servers; this mezzanine card approach is becoming more common in systems for this very reason.

We suspect that there will be other variants of the entry Cognitive Systems line that have one or two sockets and that may or may not include the SXM2 socket for Volta GPU coprocessors. We suspect that the other members of the future Cognitive Systems line will use the PCI-Express versions of the Volta accelerators and have expansion for other kinds of PCI-Express cards and even NVM-Express storage, which is becoming increasingly important in the datacenter. IBM is first out the door among its server peers to support the PCI-Express 4.0 protocol, which has twice the bandwidth of PCI-Express 3.0 and delivers a total bandwidth of 64 GB/sec across a sixteen lane adapter slot. We are curious, too, if IBM is going to be reselling the “Zaius” motherboard created by Google and Rackspace Hosting, and if Supermicro is going to be revamping its lineup with follow-ups to the “Briggs” and “Stratton” systems it launched with Power8 chips last year.
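
The PCI-Express numbers can be sketched the same way. The transfer rates (8 GT/sec for PCI-Express 3.0, 16 GT/sec for 4.0) and the 128b/130b line encoding are not spelled out in the article; we bring them in from the published PCI-Express specifications, and the helper name is hypothetical.

```python
# Approximate PCI-Express slot bandwidth.
# ASSUMPTIONS (from the PCI-Express specs, not the article): 8 GT/sec per lane
# for gen 3, 16 GT/sec per lane for gen 4, both with 128b/130b encoding.

def pcie_gb_per_sec(gt_per_sec, lanes=16, bidirectional=True):
    """Usable bandwidth in GB/sec for a slot, after line encoding overhead."""
    payload_gbits = gt_per_sec * 128 / 130      # per lane, per direction
    per_direction = payload_gbits / 8 * lanes   # bits to bytes, times lanes
    return per_direction * (2 if bidirectional else 1)

gen3_x16 = pcie_gb_per_sec(8)
gen4_x16 = pcie_gb_per_sec(16)

print(f"PCI-Express 3.0 x16: {gen3_x16:.1f} GB/sec both directions")
print(f"PCI-Express 4.0 x16: {gen4_x16:.1f} GB/sec both directions")
print(f"Ratio: {gen4_x16 / gen3_x16:.1f}X")
```

The 64 GB/sec figure is the raw number counting both directions; after encoding overhead a sixteen lane slot delivers a shade less, and per direction it is half of that.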

The past rollouts of the Power7, Power7+, and Power8 systems are not necessarily an indicator of how IBM will roll out the Power9 systems. But in general, IBM does not launch all of its machines at once and there is no reason to believe it will do so this time. Sometimes, as with the Power7, it starts in the middle and then works its way down the line and then up the line. Power8 started with entry machines, then big NUMA boxes, and then the midrange. A lot will depend on where IBM thinks demand is, and what yields Globalfoundries gets on the Power9 Nimbus and Cumulus chips.

The good news is that IBM can tune a chip to have 24 cores with SMT4 threading (four threads per core) or 12 cores with SMT8 threading (eight threads per core) pretty much on the fly, and so it can balance between threading and core count as needed. The SMT4 cores are aimed at Linux workloads, and the SMT8 cores are aimed mostly at AIX and IBM i. It can also dial in buffered or unbuffered memory, with different bandwidths and capacities, as needed across these two different kinds of platforms.
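
As a small illustration of that tradeoff, the sketch below counts hardware threads per socket for the two configurations named above, plus the partial-yield SMT4 core counts mentioned earlier. The function name is ours.

```python
# Hardware threads per Power9 socket for the configurations in the article.

def threads_per_socket(cores, threads_per_core):
    """Total hardware threads exposed by one socket."""
    return cores * threads_per_core

smt4_full = threads_per_socket(24, 4)   # Linux-oriented configuration
smt8_full = threads_per_socket(12, 8)   # AIX and IBM i oriented configuration

# SMT4 parts with fewer working cores, depending on yields.
smt4_by_cores = {cores: threads_per_socket(cores, 4) for cores in (20, 22, 24)}

print(f"24 cores x SMT4: {smt4_full} threads")
print(f"12 cores x SMT8: {smt8_full} threads")
print(f"SMT4 by core count: {smt4_by_cores}")
```

Either way a fully yielded socket exposes the same number of threads; what changes is how many of them share a core.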

> “specifically, how NVLink and OpenCAPI (which are very closely related) and coherency across CPUs and GPUs inside of nodes would be transformative.”

It’s funny how AMD is, along with IBM (CAPI’s creator) and others, a founding member of OpenCAPI and Nvidia is only with OpenCAPI as an associate member. But I’m more interested in some direct comparisons between AMD’s Infinity Fabric IP and Nvidia’s NVLink IP as we can see how that Infinity Fabric IP works out for AMD/Epyc. And it should be noted that AMD’s Vega GPUs support that Infinity Fabric IP as well.

So that AMD Infinity Fabric IP may just be utilized on any dual GPU (on one PCIe card) Vega variants in a similar manner to the way the Infinity Fabric is used across the Zeppelin dies/Zen CCX units, for a more effective way of combining more than one physical processor (GPU, CPU, FPGA, other) die that may appear to software as just a larger single logical GPU/other logical processor.

I’d expect that because Vega supports that Infinity Fabric IP, any multi-GPU load balancing issues will not be a problem on Vega as they were in the past, when using dual GPUs on one PCIe card was achieved using the PCIe protocol with no such good multi-GPU scaling results. Expect Navi to also make use of that Infinity Fabric IP, only Navi will make use of much smaller and more numerous GPU dies compared to Vega’s rather large dies, with better wafer/die yields expected for Navi in a similar manner to the smaller Zen/Zeppelin dies used by AMD for its entire line of consumer Ryzen, Threadripper, and professional Epyc CPU SKUs.

AMD’s Infinity Fabric represents some potential in excess of NVLink currently, but I’m sure Nvidia is thinking that modular is also a good idea, what with Nvidia also looking into multi-GPU dies in MCM module arrangements. Wafer/die yield issues can sure take a bite out of potential revenues where large monolithic processor dies are concerned.

> “The past rollouts of the Power7, Power7+, and Power8 systems are not necessarily an indicator of how IBM will roll out the Power9 systems.”

They will be rolled out at the end of the year, once IBM’s OpenSystems partners are up to speed and there are enough systems (CPU and chassis) to meet the expected demand.

Currently what is available is being eaten up by Google and Facebook, etc.

See the last paragraph of this Article: http://ibmsystemsmag.com/power/businessstrategy/competitiveadvantage/power9-plans/?page=2 .

If you’re a consumer with only US$5K to spend you can preorder from Raptor: https://www.raptorcs.com/TALOSII/ .

If someone else knows of a company accepting preorders for a complete desktop or rackmount system, please share the link.

Really curious to see how the Power9+Volta combo performs against the Intel Xeon/Phi.

Hoping it will blast the Xeon competition away; it would be a great poster child for IBM and Nvidia.