We continue with our second part of the series on the Tsubame supercomputer (first section here) with the next segment of our interview with Professor Satoshi Matsuoka, of the Tokyo Institute of Technology (Tokyo Tech).

Matsuoka researches and designs large-scale supercomputers and similar infrastructures. More recently, he has worked on the convergence of Big Data, machine/deep learning, and AI with traditional HPC, as well as investigating post-Moore technologies towards 2025. He has designed supercomputers for years and has collaborated on projects involving basic elements for current and, more importantly, future exascale systems.

TNP: Will you be running machine learning and artificial intelligence (AI) workloads on Tsubame 3? Was the machine designed for AI, or was it designed differently from Tsubame 2 to accommodate AI? And did the interconnect consideration become important with respect to AI?

Matsuoka: I’ve been involved in AI since long before AI entered its winter stages, when early explorations in artificial intelligence were based on symbolic languages, like LISP and Prolog. That was back in the 1980s and 1990s, but they went out of style, and we experienced 20 years of a so-called “AI winter”. So, when we built Tsubame 2, machine learning, deep learning, and AI were not in the mainstream as they are today. Tsubame 2 wasn’t originally built with those targets in mind. But, with the emergence and maturing of big data workloads over the last several years, we’ve been running an increasing number of big data and analytics applications on it centered around machine learning and AI. In 2012, we did upgrade Tsubame 2 with new GPUs from Nvidia, and we’re running analytics workloads on them. Tsubame 3, however, was built with big data and AI workloads very much in mind.

Relative to AI and the interconnect based on Intel Omni-Path Architecture, or any interconnect, our analysis of these machine learning workloads indicates that for small-scale learning, the network bandwidth doesn’t really matter that much. The many-core processors and traditional, monolithic processors, like Intel Xeon processors, are quite capable of handling these smaller projects within the node.

But, as we go to scalable learning, meaning that we employ a lot more many-core processors for machine learning, then network injection bandwidth in the nodes plays a significant role in sustaining scalability. So, with Intel OPA, having this significant amount of injection bandwidth will allow these machine learning workloads, especially deep learning training and inference workloads, to scale well. With Tsubame 3 and its multiple host fabric adapters per node, we expect to accelerate those types of applications, along with the traditional HPC jobs.

TNP: What kind of mix of simulation and AI jobs do you expect to run on Tsubame 3?

Matsuoka: We actually expect to do both simulation and analytics and AI on the same machine simultaneously. Scientists are doing very large simulations on today’s supercomputers. Because the data is very big, it’s difficult to do simulation on one machine and shift target data to a different machine to do analytics. There would be significant bandwidth bottlenecks, which would slow down discovery. So, Tsubame 3 was designed to run as a hybrid machine, where we co-locate traditional simulation workloads and big data analytics workloads, running hand in hand. Co-location offers tighter coupling of the applications, which leads to more innovative uses of machine learning and analytics, for example, using an approach called data assimilation. In data assimilation, we continuously use data analytics to correct the trajectory of our simulations.
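To make the idea of data assimilation concrete, here is a minimal sketch using "nudging," one of the simplest assimilation schemes. It is an illustration only, not the method used on Tsubame 3: the toy decay model, the `simulate` function, the `gain` parameter, and all numeric values are assumptions chosen for clarity.

```python
def simulate(steps, dt, decay, x0, observations=None, gain=0.0):
    """Integrate dx/dt = -decay * x with forward Euler.

    If observations are supplied, nudge the state toward each one after
    every step. This is a very simple form of data assimilation.
    """
    x = x0
    trajectory = [x]
    for k in range(steps):
        x = x + dt * (-decay * x)                 # forecast: advance the model
        if observations is not None:
            x = x + gain * (observations[k] - x)  # analysis: correct toward data
        trajectory.append(x)
    return trajectory


# "Truth" stands in for the real system observed by sensors (hypothetical).
truth = simulate(steps=50, dt=0.1, decay=0.5, x0=1.0)
observations = truth[1:]  # one noise-free observation per step, for clarity

# The model uses a wrong decay rate, so a free run drifts away from the truth.
free_run = simulate(steps=50, dt=0.1, decay=0.8, x0=1.0)
assimilated = simulate(steps=50, dt=0.1, decay=0.8, x0=1.0,
                       observations=observations, gain=0.5)

# Largest deviation from the truth over the whole run.
free_error = max(abs(a - b) for a, b in zip(free_run, truth))
assimilated_error = max(abs(a - b) for a, b in zip(assimilated, truth))
print(f"free run error:    {free_error:.3f}")
print(f"assimilated error: {assimilated_error:.3f}")
```

Even with an imperfect model, the corrected run stays much closer to the observed system than the free run. In a production setting the observations would be noisy and high-dimensional, and the correction step would be a full analytics workload in its own right, which is why co-locating it with the simulation matters.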

We have a partnership with scientists who do weather forecasting. They want to run weather simulations, but as they run them, they ingest real-time weather data from sensors, so they can continuously correct the trajectory of their simulation. These sensors are highly sophisticated, like phased array radars, which stream as much as a terabyte of data per second. To run with real-time data that fast, they need to do both simulation and analytics together. There are many use cases like this. Being able to co-locate these workloads gives the researchers significant benefits. We believe that this multi-purpose design of supercomputers will be the mainstream going forward.

TNP: What network challenges did you have to consider in designing a hybrid?

Matsuoka: There were many questions that we faced in considering the design of Tsubame 3. How does the network play into this hybrid? Can we build these hybrids effectively? Can these two workloads really co-exist and run efficiently?

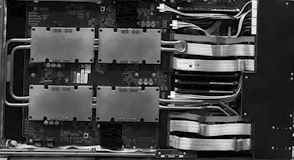

With respect to the network, of course, the interconnect plays a significant role, as with other elements of the machine. We’ve done a lot of studies on this. In many cases, the bottleneck is in the bandwidth of the machine, whether it is in memory or in the interconnect or something that connects the processors to the HCAs. One obvious reason we need a high-bandwidth network is that we are building clusters with a very large number of many-core processors, closely coupled with traditional processors in a heterogeneous configuration. There is a massive amount of processing going on, and a lot of data manipulation and communications. So, bandwidth is important because we need to manipulate the data, both within a simulation and between simulation and analytics. That’s why Tsubame 3 has vast interconnects: Intel OPA, NVLink, and switched PCIe.

As I mentioned before, when you do small-scale learning, the interconnect bandwidth does not play that important a role. But once you go to scalable learning, which requires extremely large data sets, and can involve very large simulation workloads plus these data acquisition processes to drive data simulations, then you need to scale your training. That’s when you need very high performance in your interconnect. The nature of the algorithm demands it. We have been trying to scale this effectively on the software side. We have tried to reduce the bandwidth requirement using algorithmic and software tricks. But fundamentally, we need high bandwidth in the hardware; otherwise, machine learning will not scale. So, interconnects play a very important role in modern big data analytics workloads, especially coupled with this immense scaling of the accompanying simulation workloads.
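A rough cost model shows why injection bandwidth limits scalable training. The sketch below assumes data-parallel training in which every node exchanges its full gradient once per step using a ring allreduce, with no overlap between computation and communication and with latency ignored. The interview does not say which algorithm Tsubame 3 users run; the function names, gradient size, compute time, and bandwidth values are all hypothetical.

```python
def ring_allreduce_seconds(num_nodes, message_bytes, bandwidth_bytes_per_s):
    """Bandwidth term of a ring allreduce; per-message latency is ignored.

    Each node sends (and receives) 2 * (N - 1) / N times the message size.
    """
    if num_nodes < 2:
        return 0.0
    traffic = 2 * (num_nodes - 1) / num_nodes * message_bytes
    return traffic / bandwidth_bytes_per_s


def scaling_efficiency(num_nodes, compute_s, gradient_bytes, bandwidth_bytes_per_s):
    """Fraction of each training step spent computing, assuming no overlap."""
    comm_s = ring_allreduce_seconds(num_nodes, gradient_bytes, bandwidth_bytes_per_s)
    return compute_s / (compute_s + comm_s)


GRADIENT_BYTES = 400e6  # hypothetical: 100 million float32 parameters
COMPUTE_S = 0.1         # hypothetical per-step compute time on each node
NUM_NODES = 64          # hypothetical job size

# Illustrative per-node injection bandwidths, in bytes per second.
for bandwidth in (1.25e9, 12.5e9, 50e9):
    eff = scaling_efficiency(NUM_NODES, COMPUTE_S, GRADIENT_BYTES, bandwidth)
    print(f"{bandwidth / 1e9:6.2f} GB/s per node -> efficiency {eff:.2f}")
```

Under these assumptions the per-node traffic stays close to twice the gradient size no matter how many nodes join, so the only levers are shrinking the message (the algorithmic and software tricks) or raising the bandwidth available to each node.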

TNP: Do your users really care about the fabric in terms of the performance it will provide for their workloads?

Matsuoka: For some advanced users, yes. Advanced users profile their code. So, they get a pretty good picture of where time is spent, including in the interconnect. They can get picky when it comes to interconnect selections, and they also complain when it isn’t working correctly.

But, we see performance as more than just how many gigabytes per second you get out of the fabric. It’s about the time spent, for example, in any sort of MPI collectives. It also concerns the stability of the performance when we’re running these workloads.

Most codes go through a very repetitive loop of computation and communication hundreds of thousands of times. So, people expect the interconnect to perform repetitively in a very stable manner throughout their jobs. If they get huge fluctuations in speed, they wonder what’s going on. In some cases, these fluctuations are critical, because people are starting to use asynchronous communications algorithms. With these, networks suddenly becoming unstable can result in significant differences in the latencies between different sections of the machine. And then, their apps become very unstable. So, getting both high performance and high stability of performance across the entire run of the applications is a sensitive issue with these apps and users.
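One simple way to express the difference between raw speed and stable speed is to summarize per-iteration timings by their spread as well as their mean. The sketch below is illustrative only: the `summarize_step_times` helper and both sets of timings are made up, and real profiling tools report far more detail.

```python
import statistics


def summarize_step_times(step_seconds):
    """Return the mean, worst case, and coefficient of variation of step times."""
    mean = statistics.fmean(step_seconds)
    spread = statistics.pstdev(step_seconds)
    return {"mean": mean, "max": max(step_seconds), "cv": spread / mean}


# Hypothetical timings (seconds) for the same compute/communicate loop.
stable = [0.100, 0.101, 0.099, 0.100, 0.100]
jittery = [0.080, 0.081, 0.079, 0.180, 0.080]

stable_stats = summarize_step_times(stable)
jittery_stats = summarize_step_times(jittery)

for name, stats in (("stable", stable_stats), ("jittery", jittery_stats)):
    print(f"{name:8s} mean={stats['mean']:.3f}s "
          f"max={stats['max']:.3f}s cv={stats['cv']:.2f}")
```

Both runs have the same average step time, but the second has a far larger coefficient of variation and a much worse slowest step. That kind of fluctuation is what users notice, especially when an algorithm depends on different parts of the machine progressing at similar rates.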

So far, we’ve been very happy, even under the extreme stress of our benchmarks. Intel OPA, combined with the Intel Xeon processors and GPUs, has delivered both high performance and extreme stability. Our benchmark team, for example, is extremely happy with the interconnect, because it’s rock solid. And that’s played a major role in achieving our Green 500 level of status, because our team has to run these benchmarks repeatedly under different parameter settings. One of the worst is Top500, which really stresses the network at the very end of the run. At the very terminal phase of the computation, it becomes network bound; you communicate constantly at full bandwidth throughout the machine. That’s where instability in the network surfaces. We hold our breath until the very end of the LINPACK. So far, though, Intel OPA has been fitting the bill very nicely for Tokyo Tech. And the users do care. Those people who tax the network, they care.

TNP: As cluster sizes go, Tsubame 3 is not a giant system, but it has many, many thousands of cores, in both the GPUs and the Intel Xeon processors, so it’s truly a large machine in a small space, isn’t it?

Matsuoka: We wanted it small for efficiency. Our philosophy is to make it as small as possible. We expect to become a demonstrator, showing how we have fit this tremendous number of cores, memory, and interconnect into a small package. We want something like this to eventually make it into the cloud as the norm. Because, when you get to hyperscale data centers, efficiency becomes critical to enabling them to operate at low cost with high services value. And as more advanced simulation and analytics workloads are moved to the cloud, we need to put these supercomputers there. A lot of data center professionals have come to see Tsubame 2. We believe there will be even more audiences looking at the new machine. So, we expect it will become a very important tech transfer exercise to populate cloud data centers with these high-density, high-bandwidth, high-efficiency machines.

TNP: Do you have any future plans and enhancements for Tsubame 3?

Matsuoka: We do have some minor upgrade plans, such as working with Intel to add 3D XPoint™ U.2 drives. We expect this will enhance the memory capacity, and thus the big data and simulation capabilities, to support the very large memory requirements that some of our applications have. We have some preliminary results using the U.2 devices that do look promising. We also anticipate taking the Tsubame 3 design and applying it to future generation machines with upgraded technologies and components, as well as the architecture and configurations. So, there are machines I’m planning where we are leveraging Tsubame 3’s design. We are also looking at a variety of processors and not focusing on GPUs. We are looking at Knights Mill, and possibly combining Knights Mill and GPUs, so there are various proposals on the table. For the interconnect configuration, we’re looking at how to exploit the high radix nature of the Intel OPA switches. How can we achieve much better cost-optimized networks, even compared to Tsubame 3? So, we’re looking at ways to leverage our experiences with Tsubame 3 in various ways for our next generation machines.

Ken Strandberg is a technical story teller. He writes articles, white papers, seminars, web-based training, video and animation scripts, and technical marketing and interactive collateral for emerging technology companies, Fortune 100 enterprises, and multi-national corporations. Mr. Strandberg’s technology areas include Software, HPC, Industrial Technologies, Design Automation, Networking, Medical Technologies, Semiconductor, and Telecom. He can be reached at ken@catlowcommunications.com.
