Discussions of the future of supercomputers and high-performance computing tend to focus on the ongoing, high-profile competition between two countries. One is the United States, whose place as the kingpin of the industry is slowly eroding. The other is China, whose government has invested tens of billions of dollars in recent years to rapidly expand the reach of the country’s tech community and the use of home-grown technologies in massive new systems.

Both trends were on display at the recent International Supercomputing Conference in Frankfurt, Germany. China continued to hold the top two spots on the Top500 list of the world’s fastest supercomputers with the Sunway TaihuLight and Tianhe-2 systems, and a performance boost pushed the Cray-based Piz Daint supercomputer in Switzerland into the number-three spot. That marked only the second time in 24 years, and the first time since November 1996, that a U.S.-based supercomputer has not held one of the top three spots.

That said, the United States had five of the top 10 fastest systems on the list, and still has the most systems in the top 500, at 169, with China trailing in second at 160. But as we’ve noted here at The Next Platform, the United States’ dominance of the HPC space is no longer assured. The combination of China’s aggressive efforts in the field and worries about what a Trump administration may mean for funding of U.S. initiatives has fueled speculation about what the future holds and has garnered much of the attention.

However, Europe should not be overlooked. As illustrated by the rise of the Piz Daint system, which saw its performance double with the addition of more Nvidia Tesla P100 GPU accelerators, the region is making its own case as a significant player in the HPC arena. For example, Germany houses 28 of the supercomputers in the latest Top500 list released last month, while France and the United Kingdom each have 17. In total, Europe houses 105 of the Top500 systems, good for 21 percent of the list and third behind the United States and China.

Something that didn’t generate a lot of attention at the show was the arrival of the DAVIDE (Development for an Added Value Infrastructure Designed in Europe) system on the list, coming in at number 299 with a performance of 654.2 teraflops and a peak performance of more than 991 teraflops. Built by Italian vendor E4 Computer Engineering, DAVIDE came out of a multi-year Pre-Commercial Procurement (PCP) project of the Partnership for Advanced Computing in Europe (PRACE), a nonprofit association headquartered in Brussels. PRACE in 2014 kicked off the first phase of its project to fund the development of a highly power-efficient HPC system design.

PCP is a process in Europe in which different vendors compete through multiple phases of development in a project. A procurer like PRACE gets multiple vendors involved in the initial stage of the program and then has them compete through successive phases: solution design, prototyping, and original development, validation and testing of first products. Through each evaluation phase, the number of competing vendors is reduced. The idea is to have the procurers share risks and benefits of innovation with the vendors.

Over the course of almost three years, the number of system vendors for this program was whittled down from four (E4, Bull, Megaware and Maxeler Technologies), with E4 last year being awarded the contract to build its system.

The goal was to build an HPC system that can run highly parallelized, memory-intensive workloads such as weather forecasting, machine learning, genomic sequencing and computational fluid dynamics, the type of applications that are becoming more commonplace in HPC environments. At the same time, power efficiency also was key. With a peak performance of more than 991 teraflops, the 45-node cluster consumes less than 2 kilowatts per node, according to E4.

E4 already offers systems powered by Intel’s x86 processors, ARM-based chips and GPUs, but for DAVIDE, the company opted for technology from the OpenPower Foundation, an open hardware development community that IBM officials launched with partners like Nvidia and Google in 2014. The foundation aims to extend the reach of IBM’s Power architecture beyond the core data center and into new growth areas like hyperscale environments and emerging workloads, including machine learning, artificial intelligence and virtual reality, while cutting into Intel’s dominant share of the server chip market. E4 already is a member of the foundation and builds other systems running on Power.

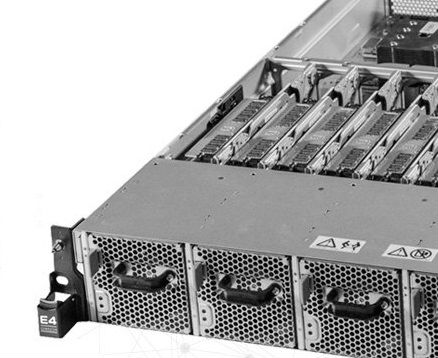

Each 2U node of the cluster is based on the OpenPower systems design codenamed “Minsky” and runs on two 3.62GHz Power8+ eight-core processors and four Nvidia Tesla P100 SXM2 GPUs, which are based on the company’s “Pascal” architecture and aimed at such modern workloads as artificial intelligence and climate prediction. The GPUs are hooked into the CPUs via Nvidia’s NVLink high-speed interconnect, and the nodes use Mellanox Technologies’ EDR 100 Gb/s InfiniBand interconnects as well as 1 Gigabit Ethernet networking. Each node offers a maximum performance of 22 TFlops. In total, the cluster has 10,800 cores, with 11,520GB of memory and SATA SSD and NVMe drives for storage. It runs the CentOS Linux operating system.
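The per-node figures can be cross-checked against the cluster totals with a little arithmetic. The sketch below is illustrative rather than anything E4 published. It uses only numbers quoted in this article, plus one outside assumption: that each Tesla P100 has 56 streaming multiprocessors and that each of those is tallied as a core. Under that assumption, the 10,800-core total corresponds to CPU cores plus GPU multiprocessors, since the Power8+ processors alone account for 720 cores.

```python
# Figures quoted in the article.
NODES = 45
CPUS_PER_NODE = 2
CORES_PER_CPU = 8
GPUS_PER_NODE = 4
PEAK_TFLOPS_PER_NODE = 22
TOTAL_MEMORY_GB = 11_520
LINPACK_TFLOPS = 654.2
QUOTED_PEAK_TFLOPS = 991
MAX_KW_PER_NODE = 2

# Assumption (not from the article): 56 streaming multiprocessors per
# Tesla P100, each counted as one "core" in the cluster total.
SMS_PER_P100 = 56


def cluster_summary():
    """Derive cluster-wide totals from the per-node figures."""
    cpu_cores = NODES * CPUS_PER_NODE * CORES_PER_CPU
    gpu_sms = NODES * GPUS_PER_NODE * SMS_PER_P100
    return {
        "cpu_cores": cpu_cores,
        "gpu_sms": gpu_sms,
        "total_cores": cpu_cores + gpu_sms,
        "peak_tflops": NODES * PEAK_TFLOPS_PER_NODE,
        "memory_gb_per_node": TOTAL_MEMORY_GB // NODES,
        # Fraction of the quoted peak achieved on the Top500 benchmark.
        "linpack_efficiency": LINPACK_TFLOPS / QUOTED_PEAK_TFLOPS,
        # Upper bound on node power, from "less than 2 kilowatts per node".
        "power_ceiling_kw": NODES * MAX_KW_PER_NODE,
    }


if __name__ == "__main__":
    for key, value in cluster_summary().items():
        print(f"{key}: {value}")
```

The 990 TFlops that falls out of 45 nodes at 22 TFlops each lines up with the quoted peak of more than 991 teraflops, allowing for rounding in the per-node figure.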

The Minsky nodes are designed to be air-cooled, but to increase the power efficiency of the system, E4 is leveraging technology that uses direct hot-water cooling, at between 35 and 40 degrees Celsius, for the CPUs and GPUs, with a cooling capacity of 40 kW. Each rack includes an independent liquid-liquid or liquid-air heat exchanger unit with redundant pumps, and the compute nodes are connected to the heat exchanger via pipes and a side bar for water distribution. E4 officials estimate that this technology can extract about 80 percent of the heat generated by the nodes.

The chassis is custom-built and based on the OpenRack form factor.

Power efficiency is further enhanced by software developed in conjunction with the University of Bologna that enables fine-grained measuring and monitoring of power consumption by the nodes and the system as a whole, using data collected from components like the CPUs, GPUs, memory components and fans. There also is the ability to cap the amount of power used, to schedule tasks based on the amount of power being consumed and to profile the power an application uses, according to the vendor. A dedicated power monitor interface, based on the BeagleBone Black board, an open-source development platform, enables frequent direct sampling from the power backplane and integrates with the system-level power management software.
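To make the idea of power capping and power-aware scheduling concrete, here is a minimal sketch of one way such a policy could work. It is not E4’s or the University of Bologna’s software, whose interfaces are not described here. The `Job` type, the `admit_jobs` function, the per-job power estimates and the greedy policy are all hypothetical, chosen only to illustrate admitting work while keeping projected draw under a cap.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    est_power_w: float  # hypothetical estimate, e.g. from earlier profiling


def mean_power(samples_w):
    """Average a window of power samples, in watts."""
    return sum(samples_w) / len(samples_w)


def admit_jobs(queue, current_draw_w, cap_w):
    """Greedily start queued jobs, in order, while the projected draw
    stays at or below the cap. Jobs that would exceed it are deferred."""
    started, deferred = [], []
    projected = current_draw_w
    for job in queue:
        if projected + job.est_power_w <= cap_w:
            started.append(job)
            projected += job.est_power_w
        else:
            deferred.append(job)
    return started, deferred, projected


if __name__ == "__main__":
    # Illustrative numbers only; the 2000 W cap echoes the article's
    # "less than 2 kilowatts per node" figure.
    draw = mean_power([1190.0, 1200.0, 1210.0])
    queue = [Job("cfd", 500.0), Job("genomics", 400.0), Job("ml", 250.0)]
    started, deferred, projected = admit_jobs(queue, draw, cap_w=2000.0)
    print([j.name for j in started], [j.name for j in deferred], projected)
```

A production scheduler would also have to handle measurement noise, power spikes and jobs whose draw changes over time. The greedy pass here only shows how a cap and per-application power profiles can feed a scheduling decision.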

DAVIDE takes advantage of other IBM technologies, including the IBM XL compilers, the ESSL math library and Spectrum MPI. APIs also enable developers to tune the cluster’s performance and power consumption.

E4 currently is making DAVIDE available to some users for such jobs as porting applications and profiling energy consumption.
