Virtual machines and virtual network functions, or VMs and VNFs for short, are the standard compute units in modern enterprise, cloud, and telecommunications datacenters. But varying VM and VNF resource needs, as well as networking and security requirements, often force IT departments to manage servers in separate silos, each with its own capabilities.

For example, some VMs or VNFs may require a moderate number of vCPU cores and lower I/O bandwidth, while VMs and VNFs associated with real-time voice and video, IoT, and telco applications require a moderate-to-high number of vCPU cores, rich networking services, and high I/O bandwidth. Supporting these varied needs forces the use of different technologies and management techniques.

Delivering optimal I/O bandwidth to VMs or VNFs of different flavors is a challenge: it requires different networking configurations, which makes server infrastructures more heterogeneous and less efficient. It is therefore worth looking at how varying VM resource requirements affect overall datacenter efficiency, and at how a new network configuration approach allows datacenter managers to eliminate server management silos and consolidate architectures for more efficient, cost-effective operations.

Resource Requirements

Applications vary, and each places different resource demands on its VMs and VNFs. That makes network resource and CPU utilization among the most important factors to examine in terms of data center costs. We can classify these application requirements broadly into three categories from a workload perspective:

- Low demand: Traditional IT workloads like web servers will typically have very low CPU, networking, and throughput requirements.

- Medium demand: Telco networking workloads such as virtual customer premises equipment (CPE) or broadband remote access server (BRAS) have high networking and security requirements, such as access control lists (ACLs), quality of service (QoS), rate limiting, firewalls, and load balancing, but have low needs from a CPU and throughput perspective.

- High demand: Telco core networking workloads like vEPC or vIMS have high networking and security requirements as described above, but also have extremely challenging needs in terms of CPU utilization and bandwidth.
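The three categories above can be sketched as a small classifier. This is an illustration only: the `Workload` fields, the `classify` function, and the two boolean inputs are hypothetical names chosen for this sketch, and a real deployment would profile workloads with measured numbers rather than flags.

```python
from dataclasses import dataclass
from enum import Enum


class Demand(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


@dataclass(frozen=True)
class Workload:
    name: str
    # True if the workload needs services such as ACLs, QoS, rate limiting,
    # firewalling, or load balancing.
    needs_rich_services: bool
    # True if the workload has demanding CPU and bandwidth needs.
    heavy_cpu_and_bandwidth: bool


def classify(w: Workload) -> Demand:
    """Map a workload onto the low / medium / high categories."""
    if w.heavy_cpu_and_bandwidth:
        # Assumption: a heavy workload is treated as HIGH even if it does not
        # need rich services; the categories above do not cover that case.
        return Demand.HIGH
    if w.needs_rich_services:
        return Demand.MEDIUM
    return Demand.LOW


EXAMPLES = [
    Workload("web server", needs_rich_services=False, heavy_cpu_and_bandwidth=False),
    Workload("virtual CPE", needs_rich_services=True, heavy_cpu_and_bandwidth=False),
    Workload("vEPC", needs_rich_services=True, heavy_cpu_and_bandwidth=True),
]

for w in EXAMPLES:
    print(f"{w.name}: {classify(w).value} demand")
```

The web server, virtual CPE, and vEPC entries mirror the examples given for each category.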

Configuration Options

Because of these varying resource needs, each type of workload requires a different server networking configuration. In traditional server-based networking infrastructure, there are three key technologies functioning as a demultiplexing layer between networks and the destination VM or VNF: Virtio, DPDK, and Single Root I/O Virtualization (SR-IOV). Let’s take a look:

- Virtio: A virtualization standard for network and device drivers in which guest VMs know that they are running in a virtual environment and cooperate with the hypervisor. In this case, VMs and VNFs are completely hardware independent, require no specific drivers, and can therefore be easily migrated across servers to boost infrastructure efficiency. When using Virtio, the rich networking services provided by the OVS and vRouter datapaths are available to the VMs. However, I/O bandwidth to and from the VMs is limited by the software dataplane and is the lowest of all three options by a factor of 3X to 20X.

- Data Plane Development Kit (DPDK): In this case, applications need to be modified to leverage the performance benefits of DPDK via a hardware-independent DPDK poll mode driver. As a result, onboarding of customer and third-party VM applications is not seamless. I/O bandwidth to and from the VMs is higher than with Virtio but significantly lower than with SR-IOV.

- SR-IOV: Of the options described, SR-IOV delivers the highest I/O bandwidth to and from VMs or VNFs. However, in this case, VMs or VNFs require a hardware-dependent driver, and thus applications need to be modified to leverage the performance benefits of SR-IOV. Onboarding of customer and third-party VMs and applications is impossible unless the vendor-specific hardware driver is available in the guest operating system. Additionally, live migration of VMs or VNFs across servers is not possible. Also, when SR-IOV is implemented with traditional NICs, the networking services provided by the OVS and vRouter datapaths in kernel or user space are not available to the VMs.

None of these three mechanisms satisfies the requirements of all the potential workload scenarios outlined above. Using SR-IOV for every case may achieve the performance requirements, but it can be considered wasteful because a virtual function (VF) has to be dedicated to each VM. Additionally, rich networking and security services like ACLs or QoS cannot be provided on the server, which adds reliance on the top-of-rack switch or physical appliances for those capabilities and makes management more difficult.

Using DPDK may allow for rich networking services but uses a considerable amount of CPU resources to deliver these networking functions. The CPU resources that are needed for the VM/VNF applications are wasted performing networking tasks, which is a particular problem for medium-demand and high-demand application scenarios. In summary, if DPDK is used for medium-demand applications, a considerable amount of networking resources is used unnecessarily, and each application also needs to be modified for DPDK support.

Finally, Virtio is the most flexible approach, but it cannot meet the throughput requirements of medium-demand or high-demand applications.
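The trade-offs described above can be laid out as a small table in code, which makes it easy to see that each option falls short on at least one property. This is a minimal sketch: the `Datapath` class, its field names, and the `shortfalls` helper are hypothetical, the bandwidth value is only an ordinal rank (not a measurement), and the SR-IOV entry assumes a traditional NIC.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Datapath:
    name: str
    bandwidth_rank: int     # ordinal only: 1 = lowest, 3 = highest
    rich_services: bool     # OVS/vRouter services available to the VM
    unmodified_guest: bool  # no application changes or vendor driver needed


OPTIONS = [
    Datapath("Virtio", bandwidth_rank=1, rich_services=True, unmodified_guest=True),
    Datapath("DPDK", bandwidth_rank=2, rich_services=True, unmodified_guest=False),
    # Assumes SR-IOV on a traditional NIC, where host datapath services
    # are bypassed.
    Datapath("SR-IOV", bandwidth_rank=3, rich_services=False, unmodified_guest=False),
]


def shortfalls(d: Datapath) -> List[str]:
    """List the properties a single do-everything datapath would need
    but that this option lacks."""
    missing = []
    if d.bandwidth_rank < 3:
        missing.append("highest I/O bandwidth")
    if not d.rich_services:
        missing.append("rich networking services")
    if not d.unmodified_guest:
        missing.append("unmodified guest applications")
    return missing


for d in OPTIONS:
    print(f"{d.name}: lacks {', '.join(shortfalls(d))}")
```

Because every option returns a non-empty list, no single one of the three can serve all workload categories on its own. The sketch leaves out host CPU cost and live migration, which the discussion above also weighs.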

Working In Silos

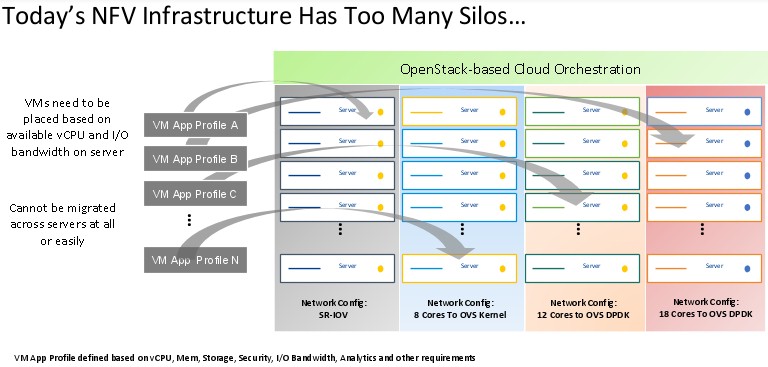

Because different networking configurations serve different needs, a data center manager ends up deploying different racks of servers to meet each group of application needs: one for Virtio-supported applications, one for DPDK-supported applications, and one for SR-IOV-supported applications. Like this:

This approach has several drawbacks:

- Capital expenditures are higher because you use many more servers than needed, provisioning each silo for the peak workload expected for that group of services.

- Server utilization suffers due to the need to overprovision to meet SLAs.

- Servers cannot be shared across different types of services to scale up or down on demand, which limits the number of users supported per device cluster.

- The additional servers require more power, cooling, and rack space than absolutely necessary.

- The "silo" configuration brings high operational complexity: staff need varied skillsets for each silo, and administrators need to track patches and upgrades for each of the technologies.
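The cost of provisioning each silo for its own peak can be illustrated with a little arithmetic. The sketch below uses made-up demand figures (servers needed per time slot for each silo) and hypothetical function names. It compares the sum of the per-silo peaks with the peak of the combined demand, which is what a shared pool would have to cover if every server could run every workload.

```python
from typing import Dict, List

# Hypothetical demand, in servers needed per time slot, for each silo.
# These numbers are invented purely for illustration.
DEMAND: Dict[str, List[int]] = {
    "virtio": [4, 6, 10, 5],
    "dpdk":   [8, 3, 4, 6],
    "sriov":  [2, 9, 3, 7],
}


def siloed_servers(demand: Dict[str, List[int]]) -> int:
    """Each silo is sized for its own peak, so the peaks add up."""
    return sum(max(slots) for slots in demand.values())


def pooled_servers(demand: Dict[str, List[int]]) -> int:
    """A shared pool is sized for the peak of the combined demand."""
    return max(sum(slot) for slot in zip(*demand.values()))


siloed = siloed_servers(DEMAND)   # 10 + 8 + 9 = 27
pooled = pooled_servers(DEMAND)   # max(14, 18, 17, 18) = 18
print(f"siloed: {siloed} servers, pooled: {pooled} servers, "
      f"difference: {siloed - pooled}")
```

The pooled figure can never exceed the siloed one, and the gap widens when the silos peak at different times. Pooling is only possible, however, when all servers share one networking configuration.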

A Different Approach

Express Virtio (XVIO), one implementation of which has been created by network adapter maker Netronome, combines the flexibility of Virtio with the performance of SR-IOV in a single package. It offers the best of both worlds: Virtio can continue to be used for all workloads while the benefits of DPDK and SR-IOV are retained. By offloading networking and security processing to a SmartNIC and running the networking datapath on the SmartNIC under XVIO, the performance benefits of SR-IOV, the management simplicity of Virtio, and the rich networking services provided by DPDK can be maintained simultaneously.

XVIO eliminates the challenges highlighted previously. It brings the level of performance of SR-IOV solutions to standard Virtio drivers (available in guest OSs), maintaining full flexibility in terms of VM mobility and the full gamut of networking and security services. This enables VMs or VNFs managed using OpenStack to experience SR-IOV-like networking performance while at the same time supporting complete hardware independence, flexibility, and seamless customer VM onboarding.

With the same configuration running on all servers, application services can flexibly be scaled up and down, overprovisioning is avoided, the need for multiple support teams is eliminated, and workloads can be migrated from server to server without worrying about protocol compatibility. In addition, space, cooling, and power requirements are reduced, thus lowering TCO. XVIO and SmartNICs enable a homogeneous data center that is more flexible, easier to manage, more agile, and more cost effective than a silo approach.

Daniel Proch is senior director of solutions architecture at Netronome. His role includes technology strategy, network architecture, and hardware and software planning. Previously he was responsible for product management of Netronome’s line of SmartNICs and software. He has over 20 years of industry experience spanning product management, CTO’s office, strategic planning, and engineering. Proch has engineering and telecommunications degrees from Carnegie Mellon University and the University of Pittsburgh.
