Astronomy is the oldest research arena, but the technologies required to process the massive amount of data created from radio telescope arrays represents some of the most bleeding-edge research in modern computer science.

With an exabyte of data expected to stream off the Square Kilometer Array (SKA), teams from both the front and back ends of the project have major challenges ahead. One “small” part of that larger picture of seeing farther into the universe than ever before is moving the data from the various distributed telescopes into a single unified platform and data format. This means transferring data from the antennae, converting those signals from analog to digital, and meshing them together for further analysis.

According to Simon Ratcliffe, technical lead for scientific computing at SKA in South Africa, one of their arrays—the 64-antennae MeerKAT installation—just using less than a quarter of the available antennae at that site has yielded the discovery of 1500 new galaxies. All of this comes at a high computational and data storage price, of course. “We’ve gone from a point when astronomy was starved for data to now, just ten years later, where we are in the exabytes range,” he explains. “We were pushing construction boundaries with SKA, now we are pushing HPC limits.”

“We need massive parallelism, to handle both task and node level parallelism, dealing with accelerators like FPGAs and GPUs and getting the memory we need on the processor. These are the things that occupy our minds. On the hardware side, we have the front end challenges where at least there is a fixed processing problem, but the throughput, bandwidth, and memory requirements are huge.”

On that front-end processing side is a system teams have worked on that is based on a combination of FPGAs, which provide the compute needed in the low-power profile needed for the location. The team has worked for a number of years on this platform with UC Berkely on the Skarab platform pictured below.

As Francois Kapp, subsystem manager for the digital backend at SKA explains, “The signals from all the antennae are combined and converted to digital. For every one of these 64 antennae there is a datastream, each of which is around 40Gb/s. We need both deeper and wider memory that can be distributed and unlike other centers, we are running 24 hours per day. We don’t need much in the way of numerical precision and are power limited so FPGAs are ideally suited for radio astronomy instruments.” However, the one piece that was missing was efficient, high performance memory.

The SKA team looked to Hybrid Memory Cube (HMC) after hitting limits with adding memory. “Getting more depth with memory was the easy part; memory chips keep getting bigger, but not wider. In FPGAs the memory hasn’t kept up with processing and the data rates we needed were not matched. The problem the teams faced with accessing HMC was far less technical and more budgetary, however.

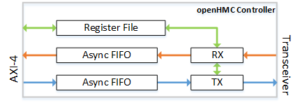

To get around the cost constraints of adopting HMC, they looked to an effort started at the University of Heidelberg called OpenHMC, a memory controller for HMC built to lower the cost of entry for researchers. Juri Schmidt, who started the project after his teams wanted to use HMC but could not afford to, says that the serial interface of HMC reduces limitations on connected devices and abstracts the memory interface to enable tech-independent development, so with each generation of DDR memory, the interface changes, which used to place the burden on the user. “The complex of the memory has shifted into the memory stack so now the manufacturer has to deal with it—we can implement processing elements in the logic base of the device and get the processing in memory needed.”

“When we started with HMC there were only a few available solutions for the controller and they were all too expensive, so we built out own. We realized many others were interested in using HMC but couldn’t afford the commercial options. We have since seen a lot of contributions to the controller and a growing number of community members,” Schmidt says. “We now support many projects including SKA.” His team is now working on a related project around network attached memory for broader processing in memory across larger systems to alleviate the expensive data movement problem in large-scale systems.

Micron’s VP of Advanced Computing. Steve Pawlowski points to a use case like SKA and its multiple data processing requirements beyond the first-stage signal processing as representative of the next generation of data-intensive HPC problems that need a new approach to memory use and management.

“When the industry moved to multicore and packed more cores into devices, they left out the fact that each device needed a certain amount of memory bandwidth to calculate the problem. Issues like SKA and HPC more generally have is that they need more than they’re getting with standard wide interfaces. We needed a way to get all the bandwidth out of memory devices and into the computing elements as fast as possible—and in a power efficient way. It takes 1000x more energy to move a data bit to the processing than to do the processing itself. This is the beginning of moving more computing capabilities into the logic layer so we can process and mate FPGA, machine learning, and other fabrics into that logic layer to get it as close to memory as possible.”

We have talked in the past about HMC and a rival in the effort for standardizing on stacked memory, High Bandwidth Memory (HBM) which is used by Nvidia, for example, are pushing to add more intelligence into the logic layer than sits between memory and processors to cut down on data movement. SKA’s implementation of this via FPGA is a good use case for how this might work on non-CPU devices. All that was missing for this to potentially take off was a less expensive on-ramp to HMC, which appears to be presented by the openHMC effort.

“The biggest impact of HMC will come when we can demonstrate not just the capability of HMC in cases like SKA and HPC, but get this moved into mainstream products. If we can get a technology like this on the chip, whether its Nvidia, Intel, IBM or others, that use at the high end starts to push into higher volume roadmaps—but this can only happen when HMC becomes a full industry standard,” Pawlowski says.

“The key to adoption of any new technology is providing an affordable way for researchers to get their hands on a technology,” Schmidt adds. More on the openHMC effort can be found here.

Be the first to comment