Whether a system is being built for capacity or capability, the conventional wisdom about memory provisioning on the world’s fastest systems is changing quickly. The rise of 3D memory has thrown a curveball into the field as HPC centers weigh the tradeoffs between traditional memory, stacked memory, and hybrid combinations of both on next-generation supercomputers. In short, allocating memory on these machines is always tricky, and with a new entrant like stacked memory joining the design process, it is useful to gauge where 3D devices might fit.

While stacked memory is getting a great deal of airplay, for some HPC application areas it might fall just short of revolutionary without traditional memory to complement it. For others, of course, it could be a game-changer. Until recently, it has been difficult to tell which is which because the metrics for evaluating how much memory per core should be allocated are out of date, at least according to a team from the Barcelona Supercomputing Center (BSC). The researchers looked at how disruptive 3D memory will be based on two prominent HPC benchmarks that assess performance from two different points of view, and further gauged how out of balance some approaches to memory provisioning are in HPC.

Leaving stacked memory aside for a moment, most HPC centers currently provision around the idea that every x86 core should be assigned 2-3 GB of memory. However, the team suggests this perception is out of sync with a changing system landscape. “This rule of thumb is based on experience with previous HPC clusters and on undocumented knowledge of the principal system integrators and it is uncertain whether it matches the memory requirements of production HPC applications.” As it turns out, memory, whether it is stacked or in DIMM form, is subject to more speculation than one might imagine, especially as HPC applications continue to evolve to meet the promises of new, higher performance architectures.

“Novel 3D memories provide significantly higher memory bandwidth and lower latency, leading to higher energy efficiency. However, the adoption of 3D memories in the HPC domain requires use cases needing much less memory capacity than currently provisioned. With good out-of-the-box performance, these use cases would be the first success stories for these memory systems, and could be an important driving force for their further adoption.”

In a first-of-its-kind study, the BSC team put this to the test against supercomputing benchmarks with 3D memory thrown into the mix. The standard metric for over two decades has been High Performance Linpack (HPL), the number that guides the TOP500 supercomputer rankings published twice per year. A relative newcomer to the HPC benchmark pool, developed by Dr. Jack Dongarra, the creator of HPL, is High Performance Conjugate Gradients (HPCG). Many argue the latter is more representative of real-world applications, which are more limited by data movement and less focused on sheer floating point operations per second. While both FLOPS and data movement are critical to supercomputer performance, memory is the next battleground for HPC centers, both in deciding where, when, and how to adopt 3D memory technologies and in recognizing that memory is the fine point upon which large-scale system balance hangs.

In the analysis of how different memory configurations match up with these benchmarks, the researchers say that current systems that perform well on Linpack require 2 GB of main memory per core, and this trend is consistent throughout the HPL-based list. However, HPCG results tell another story, one that is in line with the benchmark’s emphasis on measuring real-world application performance. For this benchmark, “to converge to the optimal performance, the benchmark requires roughly 0.5 GB of memory per core, and this will not change as the cluster size increases.”
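The gap between those two per-core figures is easier to appreciate at cluster scale. The sketch below is only a back-of-the-envelope illustration: the 2 GB and 0.5 GB values come from the quoted findings, while the function name `total_memory_tb` and the 100,000-core cluster size are assumptions made for the example and do not appear in the study.

```python
# Per-core figures quoted in the article (GB of main memory per core).
HPL_GB_PER_CORE = 2.0
HPCG_GB_PER_CORE = 0.5


def total_memory_tb(cores: int, gb_per_core: float) -> float:
    """Total main memory in TB (1 TB = 1024 GB) for a given core count."""
    return cores * gb_per_core / 1024


if __name__ == "__main__":
    cores = 100_000  # hypothetical cluster size, not taken from the study
    hpl = total_memory_tb(cores, HPL_GB_PER_CORE)
    hpcg = total_memory_tb(cores, HPCG_GB_PER_CORE)
    print(f"HPL-sized memory:  {hpl:.1f} TB")
    print(f"HPCG-sized memory: {hpcg:.1f} TB")
    print(f"HPL needs {hpl / hpcg:.0f}x the capacity HPCG does")
```

Because both figures are per core, the ratio between them stays the same at any cluster size, while the absolute capacity difference grows with the core count.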

“We detected that HPCG could be an important success story for 3D-stacked memory in HPC. With low memory footprints and performance directly proportional to the available memory bandwidth, this benchmark is a perfect fit for memory systems based on 3D chiplets. HPL, however, could be one of the show-stoppers because reaching good performance requires memory capacities that are unlikely to be provided by 3D chiplets.”

Of course, to 3D or not to 3D is not a simple proposition. One approach to provisioning memory based on application requirements is to pull the best from both sides: the performance jump of 3D memory and the high capacity of DIMMs. “Replacing conventional DIMMs with new 3D memory chiplets located on the silicon interposer could be the next breakthrough in memory system design. It would provide significantly higher memory bandwidth and lower latency, leading to higher performance and energy efficiency. On the down side, it is unlikely that (expensive) 3D memory chiplets alone would provide the same memory capacities as DIMM-based memory systems,” the team explains. The goal is to find applications that require less memory and, for others, to look at hybrid memory systems. These bring the best of both worlds: the benefits described above for 3D devices along with the capacity of a DIMM. Even so, the hybrid approach carries some tricky costs of its own, since it puts greater demands on programmers, which raises both the cost of the code and the time needed to develop it.
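The choice between 3D-only and hybrid memory can be framed as a simple capacity check per application. The following is a minimal sketch under stated assumptions: the function `choose_memory_tier`, its labels, and the per-core capacities used in the example are all hypothetical and are not taken from the BSC study.

```python
def choose_memory_tier(footprint_gb: float, stacked_gb: float, dimm_gb: float) -> str:
    """Classify a per-core memory footprint against per-core capacities.

    footprint_gb: memory the application needs per core
    stacked_gb:   3D-stacked capacity available per core (hypothetical)
    dimm_gb:      DIMM capacity available per core (hypothetical)
    """
    if footprint_gb <= stacked_gb:
        return "3d-only"       # fits entirely in high-bandwidth memory
    if footprint_gb <= stacked_gb + dimm_gb:
        return "hybrid"        # needs DIMM capacity behind the 3D memory
    return "does-not-fit"      # exceeds both tiers combined


if __name__ == "__main__":
    # Illustrative capacities only: 0.5 GB stacked and 2 GB DIMM per core.
    for footprint in (0.3, 0.5, 2.0, 4.0):
        tier = choose_memory_tier(footprint, stacked_gb=0.5, dimm_gb=2.0)
        print(f"{footprint:.1f} GB/core -> {tier}")
```

A real hybrid system would also have to decide which data lives in which tier, and that placement work is where the extra programming burden mentioned above comes in.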

In addition to establishing how the metrics stack up against trends in memory allocation, the BSC researchers went a step further and compared their findings about how much memory is required with real-world applications. The suite of codes used to benchmark systems showed that “most of the HPC applications under study have per-core memory footprints in the range of hundreds of megabytes, an order of magnitude less than the main memory available in state-of-the-art HPC systems,” although several do require gigabytes of main memory.

While the BSC researchers cannot make broad assertions about what works for all HPC systems (given the wide range of scientific and engineering codes), the point is that 3D memory is going to push HPC sites to rethink how memory is provisioned. That rethinking has always been part of the design process, but with novel 3D memory (at a higher price but with much higher performance) alongside existing DIMMs that can handle the capacity demands, the familiar capacity-versus-capability questions asked of whole machines will now apply at the memory level as well.

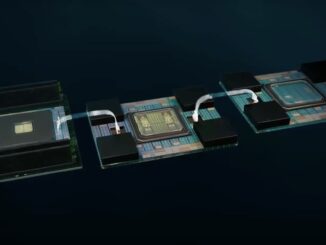

Just in time, a new research paper on an exascale APU! Note the fully 3D-stacked HBM on top of the GPU “chiplets”. I’m including the paper’s abstract; the full PDF of the paper (1) is a very interesting read:

“Abstract: The challenges to push computing to exaflop levels are difficult given desired targets for memory capacity, memory bandwidth, power efficiency, reliability, and cost. This paper presents a vision for an architecture that can be used to construct exascale systems. We describe a conceptual Exascale Node Architecture (ENA), which is the computational building block for an exascale supercomputer. The ENA consists of an Exascale Heterogeneous Processor (EHP) coupled with an advanced memory system. The EHP provides a high-performance accelerated processing unit (CPU+GPU), in-package high-bandwidth 3D memory, and aggressive use of die-stacking and chiplet technologies to meet the requirements for exascale computing in a balanced manner. We present initial experimental analysis to demonstrate the promise of our approach, and we discuss remaining open research challenges for the community.”

(1) “Design and Analysis of an APU for Exascale Computing”

http://www.computermachines.org/joe/publications/pdfs/hpca2017_exascale_apu.pdf